1. 官网Api文档只有英文版的,PDF格式的在Amazon Athena Documentation PDF版,网页版的在Amazon Athena Documentation网页版。

2. 官方提供了两个版本的SDK,下载源码后可以很方便地找到example,至少大部分是可以的。

3. 在使用Athena的java api之前,首先我们需要在Athena控制台创建Database,里面的创建有操作提示就不多说了。值得注意的是,你目前使用的region是哪个,因为后面通过Java查询数据的时候,设置的region要和这个一致。

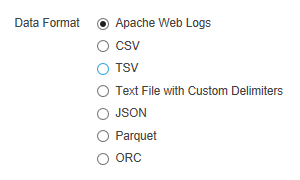

1) 简单来说,就是我们先把parquet文件或者其它格式的文件存放在S3上,然后通过Athena来查询这个文件里面的数据,数据文件格式的支持类型如图:

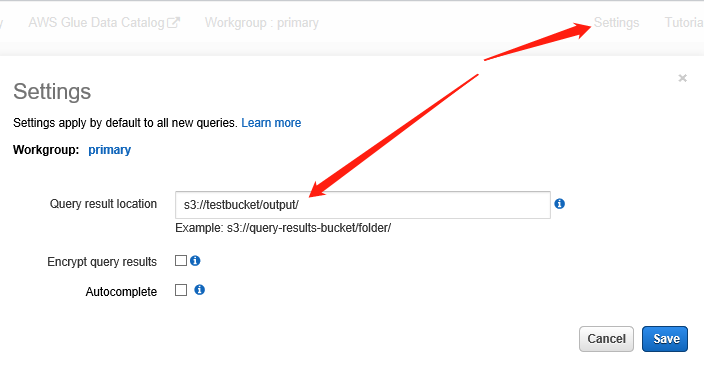

2) 如图,设置我们Athena查询的日志保存路径。

3) 假设我们这里用的是parquet格式的数据文件,存放的S3 partition路径是 s3://testbucket/parquet/date=20191227/

那么我们的执行下面这个语句创建表(默认的database是default)。

CREATE EXTERNAL TABLE `test`(

`id` bigint,

`numbers` int,

`field3` bigint)

PARTITIONED BY (

`date` int)

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'

LOCATION

's3://testbucket/parquet/date=20191227/'

TBLPROPERTIES (

'has_encrypted_data'='false',

'last_modified_by'='hadoop',

'last_modified_time'='1577246786',

'parquet.compression'='SNAPPY',

'transient_lastDdlTime'='1577246786')注: PARTITIONED BY ( `date` int) 的date对应的是LOCATION ‘s3://testbucket/parquet/date=20191227/’ 里面的date,否则数据不能加载。

4) 创建test表之后,执行语句刷新分区。

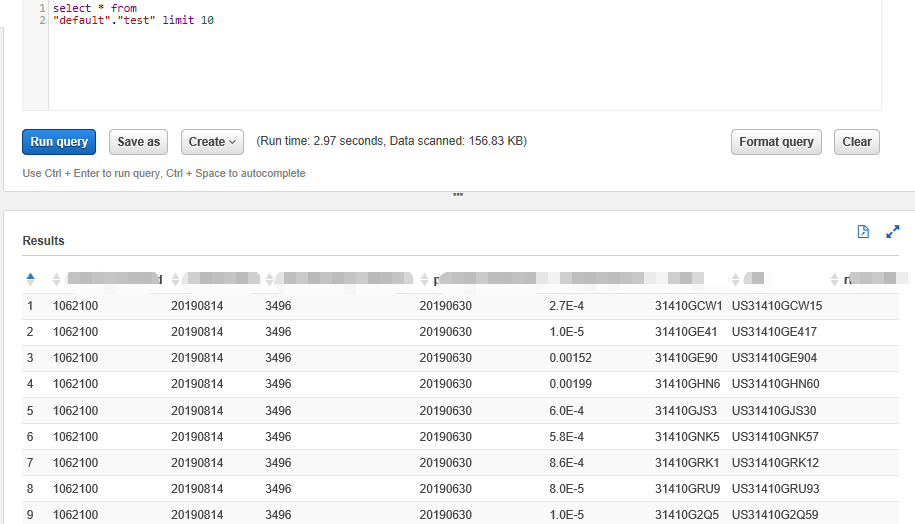

MSCK REPAIR TABLE test;5) 查询测试。

select * from "default"."test" limit 10 (事实上为了方便测试可以不使用partition,我这里为了方便直接使用的是别的表查询,看的也效果大概这样子的。)

4. 创建好表并且能正常查询数据之后,就可以使用java来查询数据了,假设Athena服务是US-EAST-2区的。

以下是总结使用javav2版本的Athena使用,关于Java如何使用Athena的教程也是英文版的,Java code Samples。

1) 下载了官网源码之后,我们使用javav2\example_code\athena\src\main\java\aws\example\athena\StartQueryExample.java来测试查询效果。首先确保能够连接上Athena并且创建实例。

package aws.example.athena;

//snippet-start:[athena.java2.AthenaClientFactory.client.import]

import software.amazon.awssdk.regions.Region;

import software.amazon.awssdk.services.athena.AthenaClient;

import software.amazon.awssdk.services.athena.AthenaClientBuilder;

//snippet-end:[athena.java2.AthenaClientFactory.client.import]

/**

* AthenaClientFactory

* -------------------------------------

* This code shows how to create and configure an Amazon Athena client.

*/

public class AthenaClientFactory {

//snippet-start:[athena.java2.AthenaClientFactory.client.main]

/**

* AthenaClientClientBuilder to build Athena with the following properties:

* - Set the region of the client

* - Use the instance profile from the EC2 instance as the credentials provider

* - Configure the client to increase the execution timeout.

*/

private final AthenaClientBuilder builder = AthenaClient.builder()

.region(Region.US_EAST_2)

/*.credentialsProvider(InstanceProfileCredentialsProvider.create())*/;

public AthenaClient createClient() {

return builder.build();

}

//snippet-end:[athena.java2.AthenaClientFactory.client.main]

}

//snippet-end:[athena.java.AthenaClientFactory.client]

//snippet-end:[athena.java2.AthenaClientFactory.client]这里面的region就是上面Athena控制台里面的region。创建实例:

AthenaClientFactory factory = new AthenaClientFactory();

AthenaClient athenaClient = factory.createClient();如果这里创建报错显示security token的问题,我们需要申请到IAM role的accessKeyId和secretAccessKey作为登入凭据。然后设置到环境变量。

System.setProperty("aws.accessKeyId", "AKIAQDBBBBbbb3VHH4V");

System.setProperty("aws.secretAccessKey", "tTcadqweL+asdasdq");2) 创建实例后,在这里ExampleConstants配置查询语句和database。

package aws.example.athena;

public class ExampleConstants {

public static final int CLIENT_EXECUTION_TIMEOUT = 100000;

public static final String ATHENA_OUTPUT_BUCKET = "s3://testbucket/output";

// This example demonstrates how to query a table created by the "Getting Started" tutorial in Athena

public static final String ATHENA_SAMPLE_QUERY = "SELECT *"

+ " FROM default.test"

+ " limit 10;";

public static final long SLEEP_AMOUNT_IN_MS = 1000;

public static final String ATHENA_DEFAULT_DATABASE = "default";

}3) 以下是执行查询的程序入口,通过submitAthenaQuery提交语句得到查询请求的ID,并且轮询获取查询的结果直到取到Athena服务的反馈。

package aws.example.athena;

//snippet-start:[athena.java2.StartQueryExample.import]

import software.amazon.awssdk.services.athena.AthenaClient;

import software.amazon.awssdk.services.athena.model.*;

import software.amazon.awssdk.services.athena.paginators.GetQueryResultsIterable;

import java.util.List;

//snippet-end:[athena.java2.StartQueryExample.import]

/**

* StartQueryExample

* -------------------------------------

* This code shows how to submit a query to Athena for execution, wait till results

* are available, and then process the results.

*/

public class StartQueryExample {

//snippet-start:[athena.java2.StartQueryExample.main]

public static void main(String[] args) throws InterruptedException {

System.setProperty("aws.accessKeyId", "AKIAQDBBBBbbb3VHH4V");

System.setProperty("aws.secretAccessKey", "tTcadqweL+asdasdq");

// Build an AthenaClient client

AthenaClientFactory factory = new AthenaClientFactory();

AthenaClient athenaClient = factory.createClient();

String queryExecutionId = submitAthenaQuery(athenaClient);

waitForQueryToComplete(athenaClient, queryExecutionId);

processResultRows(athenaClient, queryExecutionId);

}

/**

* Submits a sample query to Athena and returns the execution ID of the query.

*/

private static String submitAthenaQuery(AthenaClient athenaClient) {

// The QueryExecutionContext allows us to set the Database.

QueryExecutionContext queryExecutionContext = QueryExecutionContext.builder()

.database(ExampleConstants.ATHENA_DEFAULT_DATABASE).build();

// The result configuration specifies where the results of the query should go in S3 and encryption options

ResultConfiguration resultConfiguration = ResultConfiguration.builder()

// You can provide encryption options for the output that is written.

// .withEncryptionConfiguration(encryptionConfiguration)

.outputLocation(ExampleConstants.ATHENA_OUTPUT_BUCKET).build();

// Create the StartQueryExecutionRequest to send to Athena which will start the query.

StartQueryExecutionRequest startQueryExecutionRequest = StartQueryExecutionRequest.builder()

.queryString(ExampleConstants.ATHENA_SAMPLE_QUERY)

.queryExecutionContext(queryExecutionContext)

.resultConfiguration(resultConfiguration).build();

StartQueryExecutionResponse startQueryExecutionResponse = athenaClient.startQueryExecution(startQueryExecutionRequest);

return startQueryExecutionResponse.queryExecutionId();

}

/**

* Wait for an Athena query to complete, fail or to be cancelled.

* This is done by polling Athena over an interval of time.

* If a query fails or is cancelled, then it will throw an exception.

*/

private static void waitForQueryToComplete(AthenaClient athenaClient, String queryExecutionId) throws InterruptedException {

GetQueryExecutionRequest getQueryExecutionRequest = GetQueryExecutionRequest.builder()

.queryExecutionId(queryExecutionId).build();

GetQueryExecutionResponse getQueryExecutionResponse;

boolean isQueryStillRunning = true;

while (isQueryStillRunning) {

getQueryExecutionResponse = athenaClient.getQueryExecution(getQueryExecutionRequest);

String queryState = getQueryExecutionResponse.queryExecution().status().state().toString();

if (queryState.equals(QueryExecutionState.FAILED.toString())) {

throw new RuntimeException("Query Failed to run with Error Message: " + getQueryExecutionResponse

.queryExecution().status().stateChangeReason());

} else if (queryState.equals(QueryExecutionState.CANCELLED.toString())) {

throw new RuntimeException("Query was cancelled.");

} else if (queryState.equals(QueryExecutionState.SUCCEEDED.toString())) {

isQueryStillRunning = false;

} else {

// Sleep an amount of time before retrying again.

Thread.sleep(ExampleConstants.SLEEP_AMOUNT_IN_MS);

}

System.out.println("Current Status is: " + queryState);

}

}

/**

* This code calls Athena and retrieves the results of a query.

* The query must be in a completed state before the results can be retrieved and

* paginated.

* The first row of results are the column headers.

*/

private static void processResultRows(AthenaClient athenaClient, String queryExecutionId) {

GetQueryResultsRequest getQueryResultsRequest = GetQueryResultsRequest.builder()

// Max Results can be set but if its not set,

// it will choose the maximum page size

// As of the writing of this code, the maximum value is 1000

// .withMaxResults(1000)

.maxResults(5)

.queryExecutionId(queryExecutionId).build();

GetQueryResultsIterable getQueryResultsResults = athenaClient.getQueryResultsPaginator(getQueryResultsRequest);

for (GetQueryResultsResponse Resultresult : getQueryResultsResults) {

List<ColumnInfo> columnInfoList = Resultresult.resultSet().resultSetMetadata().columnInfo();

List<Row> results = Resultresult.resultSet().rows();

processRow(results, columnInfoList);

}

}

private static void processRow(List<Row> rows, List<ColumnInfo> columnInfoList) {

System.out.println("=====================");

for (Row row : rows) {

System.out.println(row.toString());

List<Datum> columns = row.data();

for (Datum column : columns) {

System.out.print(column.varCharValue() + "\t");

}

System.out.println();

}

}

//snippet-end:[athena.java2.StartQueryExample.main]

}3) 查询结果如图:

注:结果的ResultSet有11行,第一行为表头,所以处理的时候要注意以下。GetQueryResultsRequest.builder().maxResults(5)设置为分页5条数据。

注:

1. 遇到:GENERIC_INTERNAL_ERROR: Please reduce your request rate. (Service: Amazon S3; Status Code: 503; Error Code: SlowDown;… 或者 run with Error Message: GENERIC_INTERNAL_ERROR: Unable to create class com.facebook.presto.execution.TaskInfo from JSON response: …….com.fasterxml.jackson.databind.JsonMappingException

可能是碰到Athena服务高峰期的问题,可以尝试降低retry频率。

2. 遇到:InvalidRequestException: Queries of this type are not supported (Service: Athena, Status Code: 400。可能是Sql语句错误。

3. 遇到:InvalidRequestException: The S3 location provided to save your query results is invalid. Please check your S3 location is correct and is in the same region and try again. If you continue to see the issue, contact customer support for further assistance. (Service: Athena, Status Code: 400。这个是Region设置错误导致的。

4. 不同的region,IAM的security token不同。在us-east-1的athena的表,用的us-east-2的security token不能查询

附:

1. 关于Athena的Sql语法使用。

2. 查询具有复杂类型和嵌套结构的数组可以参考:复杂类型和嵌套结构。

今天的文章关于Athena使用的小结分享到此就结束了,感谢您的阅读。

版权声明:本文内容由互联网用户自发贡献,该文观点仅代表作者本人。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如发现本站有涉嫌侵权/违法违规的内容, 请发送邮件至 举报,一经查实,本站将立刻删除。

如需转载请保留出处:https://bianchenghao.cn/63296.html