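3. Partition Aggregation

Partition aggregation is implemented by aggregators: CombinerAggregator, ReducerAggregator, and Aggregator.

The partition aggregation operation (partitionAggregate) aggregates the tuples within each partition of a batch.

Unlike the Functions described earlier, which append data to the original tuple, a partition aggregation replaces the input tuples entirely: only the tuples emitted by the aggregation are output.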

=========================

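~Method:

partitionAggregate(Fields, Aggregator/CombinerAggregator/ReducerAggregator, Fields)

First argument: the fields passed into the aggregator

Second argument: the aggregator object

Third argument: the fields output after aggregation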
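The difference between appending fields (each with a Function) and replacing them (partitionAggregate) can be sketched in plain Java. This is only an illustration of how the output fields are derived: OutputFieldsSketch and its two methods are hypothetical names, and no Storm classes are involved.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java stand-in (no Storm dependency) for how output fields are derived.
class OutputFieldsSketch {

    // each(): the Function's output fields are appended to the stream's existing fields.
    static List<String> afterEach(List<String> streamFields, List<String> functionFields) {
        List<String> out = new ArrayList<>(streamFields);
        out.addAll(functionFields);
        return out;
    }

    // partitionAggregate(): the input fields are dropped; only the declared output fields remain.
    static List<String> afterPartitionAggregate(List<String> streamFields, List<String> outputFields) {
        return new ArrayList<>(outputFields);
    }

    public static void main(String[] args) {
        List<String> spoutFields = Arrays.asList("name", "sentence");
        List<String> withGender = afterEach(spoutFields, Arrays.asList("gender"));
        System.out.println(withGender); // [name, sentence, gender]
        System.out.println(afterPartitionAggregate(withGender, Arrays.asList("count"))); // [count]
    }
}
```

The field names mirror the ones used in the cases later in this section.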

~The three aggregators

The CombinerAggregator interface:

public interface CombinerAggregator <T> extends Serializable {

T init(TridentTuple tuple);

T combine(T val1, T val2);

T zero();

}

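A CombinerAggregator returns exactly one tuple, and that tuple contains exactly one field.

Execution process:

The following steps are performed for each partition:

Before a partition is processed, zero() is called to produce an initial value val1.

Then, for each tuple in the partition:

init is called on the current tuple to produce that tuple's value val2;

combine is called to merge the previous val1 with the current tuple's val2, and the merged value becomes the new val1.

These steps repeat for every tuple in the partition, and the final val1 is returned as the aggregated result for the whole partition. If the partition contains no tuples, the result is simply the value of zero().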
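The loop described above can be sketched without Storm. In the sketch below, Combiner is a local stand-in that mirrors the three methods of CombinerAggregator, a String plays the role of TridentTuple, and aggregatePartition is a hypothetical helper that imitates what happens per partition; none of these names come from Storm.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class CombinerSketch {

    // Local stand-in mirroring CombinerAggregator; a String replaces TridentTuple.
    interface Combiner<T> {
        T init(String tuple);
        T combine(T val1, T val2);
        T zero();
    }

    // Folds one partition: start from zero(), then combine in init(tuple) for each tuple.
    static <T> T aggregatePartition(Combiner<T> agg, List<String> partition) {
        T val1 = agg.zero();
        for (String tuple : partition) {
            T val2 = agg.init(tuple);
            val1 = agg.combine(val1, val2);
        }
        return val1;
    }

    // Counting combiner: every tuple contributes 1.
    static final Combiner<Integer> COUNT = new Combiner<Integer>() {
        @Override public Integer init(String tuple) { return 1; }
        @Override public Integer combine(Integer val1, Integer val2) { return val1 + val2; }
        @Override public Integer zero() { return 0; }
    };

    public static void main(String[] args) {
        System.out.println(aggregatePartition(COUNT, Arrays.asList("xiaoming", "xiaohua", "xiaoming"))); // 3
        System.out.println(aggregatePartition(COUNT, Collections.<String>emptyList())); // 0
    }
}
```

Because combine only ever sees two values of type T, partial results from different places can be merged with the same function, which is the main reason to prefer this interface when the aggregation allows it.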

The ReducerAggregator interface:

public interface ReducerAggregator <T> extends Serializable {

T init();

T reduce(T curr, TridentTuple tuple);

}

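A ReducerAggregator also returns exactly one tuple, and that tuple contains exactly one field.

Execution process:

The following steps are performed for each partition:

When processing of a partition begins, init is called to produce the initial value curr.

For each tuple in the partition, reduce is called with the current curr and the current tuple, and its return value becomes the new curr.

Once the whole partition has been processed, the final curr is returned as the aggregated result for the partition.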
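As a rough illustration of these steps, the sketch below runs the same loop in plain Java. Reducer is a local stand-in mirroring ReducerAggregator, a String replaces TridentTuple, and aggregatePartition, COUNT, and TOTAL_LENGTH are hypothetical names chosen for the example.

```java
import java.util.Arrays;
import java.util.List;

class ReducerSketch {

    // Local stand-in mirroring ReducerAggregator; a String replaces TridentTuple.
    interface Reducer<T> {
        T init();
        T reduce(T curr, String tuple);
    }

    // Folds one partition: start from init(), then feed every tuple through reduce().
    static <T> T aggregatePartition(Reducer<T> agg, List<String> partition) {
        T curr = agg.init();
        for (String tuple : partition) {
            curr = agg.reduce(curr, tuple);
        }
        return curr;
    }

    // Counts tuples, ignoring their content.
    static final Reducer<Integer> COUNT = new Reducer<Integer>() {
        @Override public Integer init() { return 0; }
        @Override public Integer reduce(Integer curr, String tuple) { return curr + 1; }
    };

    // Sums the character length of every tuple, showing that reduce can inspect the tuple.
    static final Reducer<Integer> TOTAL_LENGTH = new Reducer<Integer>() {
        @Override public Integer init() { return 0; }
        @Override public Integer reduce(Integer curr, String tuple) { return curr + tuple.length(); }
    };

    public static void main(String[] args) {
        List<String> partition = Arrays.asList("i am so shuai", "yes i am");
        System.out.println(aggregatePartition(COUNT, partition));        // 2
        System.out.println(aggregatePartition(TOTAL_LENGTH, partition)); // 21
    }
}
```

Compared with the combiner style, reduce receives the raw tuple directly, so there is no separate per-tuple init step.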

The Aggregator interface (in practice we rarely implement it directly and more often extend the BaseAggregator abstract class):

public interface Aggregator<T> extends Operation {

T init(Object batchId, TridentCollector collector);

void aggregate(T val, TridentTuple tuple, TridentCollector collector);

void complete(T val, TridentCollector collector);

}

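Aggregator is the most general of the three aggregators.

An Aggregator can emit any number of tuples with any number of fields, and it can emit them at any point during execution.

Execution process:

The following steps are performed for each partition:

init: called before any tuple is processed; its return value is passed as a state value to the aggregate and complete methods.

aggregate: called for each input tuple; it can update the state and, optionally, emit tuples.

complete: called after all tuples have been processed.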
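The init / aggregate / complete lifecycle can be sketched in plain Java as follows. Agg is a local stand-in mirroring the three methods, a List<Object> plays the role of TridentCollector, a String replaces TridentTuple, and CountState and runPartition are hypothetical names. The state is a small mutable holder created by init, so nothing has to be stored on the aggregator itself.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class AggregatorSketch {

    // Local stand-in for the lifecycle methods; List<Object> replaces TridentCollector.
    interface Agg<T> {
        T init(Object batchId, List<Object> collector);
        void aggregate(T state, String tuple, List<Object> collector);
        void complete(T state, List<Object> collector);
    }

    // Mutable state holder; init() creates a fresh one for every batch.
    static class CountState {
        long count = 0;
    }

    // Counts tuples and emits a single value once the partition is finished.
    static final Agg<CountState> COUNT = new Agg<CountState>() {
        @Override public CountState init(Object batchId, List<Object> collector) {
            return new CountState();
        }
        @Override public void aggregate(CountState state, String tuple, List<Object> collector) {
            state.count++;
        }
        @Override public void complete(CountState state, List<Object> collector) {
            collector.add(state.count);
        }
    };

    // Drives one partition through init, aggregate (once per tuple), and complete.
    static <T> List<Object> runPartition(Agg<T> agg, Object batchId, List<String> partition) {
        List<Object> emitted = new ArrayList<>();
        T state = agg.init(batchId, emitted);
        for (String tuple : partition) {
            agg.aggregate(state, tuple, emitted);
        }
        agg.complete(state, emitted);
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println(runPartition(COUNT, 1, Arrays.asList("a", "b", "c"))); // [3]
    }
}
```

Since every method receives the collector, an implementation could also emit from aggregate, for example one output per input tuple, which the two simpler interfaces cannot express.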

=========================

Case 4: Counting log entries with CombinerAggregator

package com.liming.count_combiner_aggerate;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.tuple.Fields;

import backtype.storm.utils.Utils;

import storm.trident.Stream;

import storm.trident.TridentTopology;

public class TridentDemo {

public static void main(String[] args) {

//-- Create the topology

TridentTopology topology = new TridentTopology();

Stream s = topology.newStream("xx", new SentenceSpout())

.each(new Fields("name"), new GenderFunc(),new Fields("gender"))

.partitionAggregate(new Fields("name"), new CountCombinerAggerate(), new Fields("count"));

s.each(s.getOutputFields(), new PrintFilter());

//-- Submit to the (local) cluster and run

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology.build());

//-- After running for 10 seconds, kill the topology and shut down the cluster

Utils.sleep(1000 * 10);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

package com.liming.count_combiner_aggerate;

import java.util.Map;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Values;

import backtype.storm.utils.Utils;

public class SentenceSpout extends BaseRichSpout{

private SpoutOutputCollector collector = null;

private Values [] values = {

new Values("xiaoming","i am so shuai"),

new Values("xiaoming","do you like me"),

new Values("xiaohua","i do not like you"),

new Values("xiaohua","you look like fengjie"),

new Values("xiaoming","are you sure you do not like me"),

new Values("xiaohua","yes i am"),

new Values("xiaoming","ok i am sure")

};

private int index = 0;

@Override

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

public void nextTuple() {

if(index<values.length){

collector.emit(values[index]);

index++;

}

Utils.sleep(100);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

Fields fields = new Fields("name","sentence");

declarer.declare(fields);

}

}

package com.liming.count_combiner_aggerate;

import backtype.storm.tuple.Values;

import storm.trident.operation.BaseFunction;

import storm.trident.operation.TridentCollector;

import storm.trident.tuple.TridentTuple;

public class GenderFunc extends BaseFunction{

@Override

public void execute(TridentTuple tuple, TridentCollector collector) {

String name = tuple.getStringByField("name");

if("xiaoming".equals(name)){

collector.emit(new Values("male"));

}else if("xiaohua".equals(name)){

collector.emit(new Values("female"));

}else{

}

}

}

package com.liming.count_combiner_aggerate;

import storm.trident.operation.CombinerAggregator;

import storm.trident.tuple.TridentTuple;

public class CountCombinerAggerate implements CombinerAggregator<Integer> {

@Override

public Integer init(TridentTuple tuple) {

return 1;

}

@Override

public Integer combine(Integer val1, Integer val2) {

return val1+val2;

}

@Override

public Integer zero() {

return 0;

}

}

package com.liming.count_combiner_aggerate;

import java.util.Iterator;

import java.util.Map;

import backtype.storm.tuple.Fields;

import storm.trident.operation.BaseFilter;

import storm.trident.operation.TridentOperationContext;

import storm.trident.tuple.TridentTuple;

public class PrintFilter extends BaseFilter{

private TridentOperationContext context = null;

@Override

public void prepare(Map conf, TridentOperationContext context) {

super.prepare(conf, context);

this.context = context;

}

@Override

public boolean isKeep(TridentTuple tuple) {

StringBuffer buf = new StringBuffer();

Fields fields = tuple.getFields();

Iterator<String> it = fields.iterator();

while(it.hasNext()){

String key = it.next();

Object value = tuple.getValueByField(key);

buf.append("---"+key+":"+value+"---");

}

System.out.println(buf.toString());

return true;

}

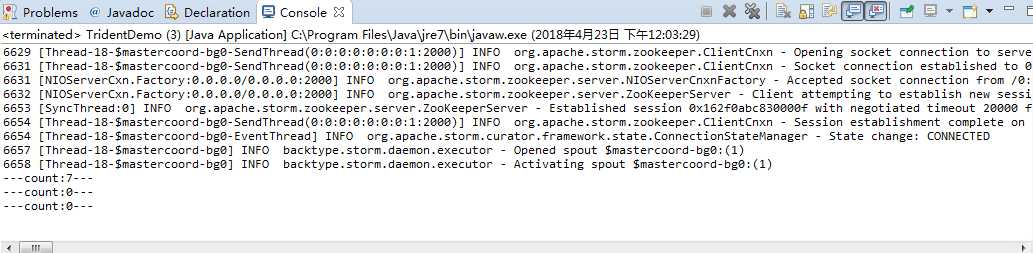

}测试结果:

案例五:测试ReducerAggregator:

package com.liming.count_ReducerAggregator;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.tuple.Fields;

import backtype.storm.utils.Utils;

import storm.trident.Stream;

import storm.trident.TridentTopology;

public class TridentDemo {

public static void main(String[] args) {

//-- Create the topology

TridentTopology topology = new TridentTopology();

Stream s = topology.newStream("xx", new SentenceSpout())

.partitionAggregate(new Fields("name"), new CountReducerAggerator(), new Fields("count"));

s.each(s.getOutputFields(), new PrintFilter());

//-- Submit to the (local) cluster and run

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology.build());

//-- After running for 10 seconds, kill the topology and shut down the cluster

Utils.sleep(1000 * 10);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

package com.liming.count_ReducerAggregator;

import java.util.Map;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Values;

import backtype.storm.utils.Utils;

public class SentenceSpout extends BaseRichSpout{

private SpoutOutputCollector collector = null;

private Values [] values = {

new Values("xiaoming","i am so shuai"),

new Values("xiaoming","do you like me"),

new Values("xiaohua","i do not like you"),

new Values("xiaohua","you look like fengjie"),

new Values("xiaoming","are you sure you do not like me"),

new Values("xiaohua","yes i am"),

new Values("xiaoming","ok i am sure")

};

private int index = 0;

@Override

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

public void nextTuple() {

if(index<values.length){

collector.emit(values[index]);

index++;

}

Utils.sleep(100);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

Fields fields = new Fields("name","sentence");

declarer.declare(fields);

}

}

package com.liming.count_ReducerAggregator;

import storm.trident.operation.ReducerAggregator;

import storm.trident.tuple.TridentTuple;

public class CountReducerAggerator implements ReducerAggregator<Integer> {

@Override

public Integer init() {

return 0;

}

@Override

public Integer reduce(Integer curr, TridentTuple tuple) {

return curr+1;

}

}

package com.liming.count_ReducerAggregator;

import java.util.Iterator;

import java.util.Map;

import backtype.storm.tuple.Fields;

import storm.trident.operation.BaseFilter;

import storm.trident.operation.TridentOperationContext;

import storm.trident.tuple.TridentTuple;

public class PrintFilter extends BaseFilter{

private TridentOperationContext context = null;

@Override

public void prepare(Map conf, TridentOperationContext context) {

super.prepare(conf, context);

this.context = context;

}

@Override

public boolean isKeep(TridentTuple tuple) {

StringBuffer buf = new StringBuffer();

Fields fields = tuple.getFields();

Iterator<String> it = fields.iterator();

while(it.hasNext()){

String key = it.next();

Object value = tuple.getValueByField(key);

buf.append("---"+key+":"+value+"---");

}

System.out.println(buf.toString());

return true;

}

}

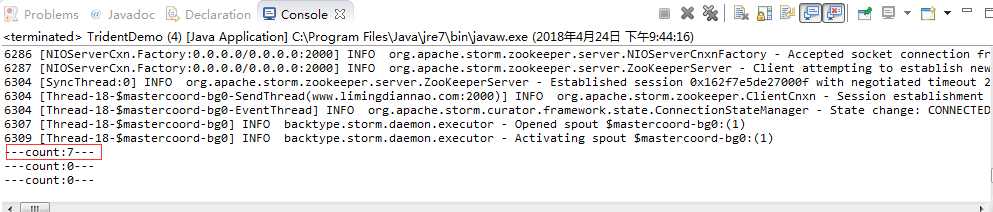

测试结果如下:

案例6 – 测试Aggregator:

package com.liming.count_Aggregator;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.tuple.Fields;

import backtype.storm.utils.Utils;

import storm.trident.Stream;

import storm.trident.TridentTopology;

public class TridentDemo {

public static void main(String[] args) {

//-- Create the topology

TridentTopology topology = new TridentTopology();

Stream s = topology.newStream("xx", new SentenceSpout())

.partitionAggregate(new Fields("name"), new CountAggerator(), new Fields("count"));

s.each(s.getOutputFields(), new PrintFilter());

//-- Submit to the (local) cluster and run

Config conf = new Config();

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("MyTopology", conf, topology.build());

//-- After running for 10 seconds, kill the topology and shut down the cluster

Utils.sleep(1000 * 10);

cluster.killTopology("MyTopology");

cluster.shutdown();

}

}

package com.liming.count_Aggregator;

import java.util.Map;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Values;

import backtype.storm.utils.Utils;

public class SentenceSpout extends BaseRichSpout{

private SpoutOutputCollector collector = null;

private Values [] values = {

new Values("xiaoming","i am so shuai"),

new Values("xiaoming","do you like me"),

new Values("xiaohua","i do not like you"),

new Values("xiaohua","you look like fengjie"),

new Values("xiaoming","are you sure you do not like me"),

new Values("xiaohua","yes i am"),

new Values("xiaoming","ok i am sure")

};

private int index = 0;

@Override

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

@Override

public void nextTuple() {

if(index<values.length){

collector.emit(values[index]);

index++;

}

Utils.sleep(100);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

Fields fields = new Fields("name","sentence");

declarer.declare(fields);

}

}

package com.liming.count_Aggregator;

import backtype.storm.tuple.Values;

import storm.trident.operation.BaseAggregator;

import storm.trident.operation.TridentCollector;

import storm.trident.tuple.TridentTuple;

public class CountAggerator extends BaseAggregator<Integer> {

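// Count for the current batch; the same aggregator instance is reused across batches.

private int count = 0;

@Override

public Integer init(Object batchId, TridentCollector collector) {

// Reset at the start of every batch so counts do not carry over from the previous one.

count = 0;

// The state value is unused here because the count lives in the field above.

return null;

}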

@Override

public void aggregate(Integer val, TridentTuple tuple, TridentCollector collector) {

count++;

}

@Override

public void complete(Integer val, TridentCollector collector) {

collector.emit(new Values(count));

}

}
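A counter kept in an instance field survives from one batch to the next unless init clears it, because init is called once per batch on the same aggregator object. The plain-Java sketch below compares the two behaviours; FieldCounter, its resetInInit flag, and run are hypothetical names for illustration, and a String stands in for TridentTuple.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class BatchResetSketch {

    // Counter stored in an instance field; resetInInit toggles whether init() clears it.
    static class FieldCounter {
        private final boolean resetInInit;
        private int count = 0;

        FieldCounter(boolean resetInInit) {
            this.resetInInit = resetInInit;
        }

        void init() {
            if (resetInInit) {
                count = 0;
            }
        }

        void aggregate(String tuple) {
            count++;
        }

        int complete() {
            return count;
        }
    }

    // Runs one aggregator instance over consecutive batches and records each batch's result.
    static List<Integer> run(FieldCounter agg, List<List<String>> batches) {
        List<Integer> results = new ArrayList<>();
        for (List<String> batch : batches) {
            agg.init();
            for (String tuple : batch) {
                agg.aggregate(tuple);
            }
            results.add(agg.complete());
        }
        return results;
    }

    public static void main(String[] args) {
        List<List<String>> batches = Arrays.asList(
                Arrays.asList("a", "b"),
                Arrays.asList("c", "d", "e"));
        System.out.println(run(new FieldCounter(false), batches)); // [2, 5]
        System.out.println(run(new FieldCounter(true), batches));  // [2, 3]
    }
}
```

Returning a fresh state object from init instead of using a field avoids the problem altogether, at the cost of a small allocation per batch.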

package com.liming.count_Aggregator;

import java.util.Iterator;

import java.util.Map;

import backtype.storm.tuple.Fields;

import storm.trident.operation.BaseFilter;

import storm.trident.operation.TridentOperationContext;

import storm.trident.tuple.TridentTuple;

public class PrintFilter extends BaseFilter{

private TridentOperationContext context = null;

@Override

public void prepare(Map conf, TridentOperationContext context) {

super.prepare(conf, context);

this.context = context;

}

@Override

public boolean isKeep(TridentTuple tuple) {

StringBuffer buf = new StringBuffer();

Fields fields = tuple.getFields();

Iterator<String> it = fields.iterator();

while(it.hasNext()){

String key = it.next();

Object value = tuple.getValueByField(key);

buf.append("---"+key+":"+value+"---");

}

System.out.println(buf.toString());

return true;

}

}


If you repost this article, please keep the source: https://bianchenghao.cn/73916.html