
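Preface

I have recently been learning how to install and use PaddlePaddle with different GPU driver versions, which also meant learning how to install and uninstall CUDA and cuDNN on Ubuntu. I am recording the process here, both to help others and to reinforce my own memory. This article uses CUDA 11.8 and cuDNN 8.9.6 as the example for both uninstallation and installation.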

Installing the GPU driver

Disabling the nouveau driver

sudo vim /etc/modprobe.d/blacklist.conf

Append the following lines to the end of the file:

blacklist nouveau

options nouveau modeset=0
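Before rebuilding the initramfs, you can sanity-check that both lines are really active in the file (not commented out or mistyped). A minimal sketch, assuming the file path used above; nouveau_blacklisted is a hypothetical helper name:

```python
from pathlib import Path

REQUIRED = {"blacklist nouveau", "options nouveau modeset=0"}


def nouveau_blacklisted(conf_text: str) -> bool:
    """True if both nouveau lines are present and not commented out."""
    active = set()
    for line in conf_text.splitlines():
        # Drop trailing comments, then collapse runs of whitespace
        line = line.split("#", 1)[0].strip()
        if line:
            active.add(" ".join(line.split()))
    return REQUIRED <= active


if __name__ == "__main__":
    conf = Path("/etc/modprobe.d/blacklist.conf")
    if conf.exists():
        print("nouveau blacklisted:", nouveau_blacklisted(conf.read_text()))
    else:
        print(f"{conf} not found")
```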

Then rebuild the initramfs so the change takes effect at boot:

sudo update-initramfs -u

After rebooting, run the following command. If it prints nothing, nouveau has been disabled successfully:

lsmod | grep nouveau
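lsmod formats the contents of /proc/modules, where the first column of each line is the module name. The same check can be scripted with an exact name match, which, unlike grep, will not also match modules whose names merely contain "nouveau". A sketch with a hypothetical helper name:

```python
from pathlib import Path


def module_loaded(name: str, proc_modules: str) -> bool:
    """True if `name` is the module name (first column) of some /proc/modules line."""
    return any(line.split()[0] == name for line in proc_modules.splitlines() if line.strip())


if __name__ == "__main__":
    modules = Path("/proc/modules")
    if modules.exists():
        print("nouveau loaded:", module_loaded("nouveau", modules.read_text()))
```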

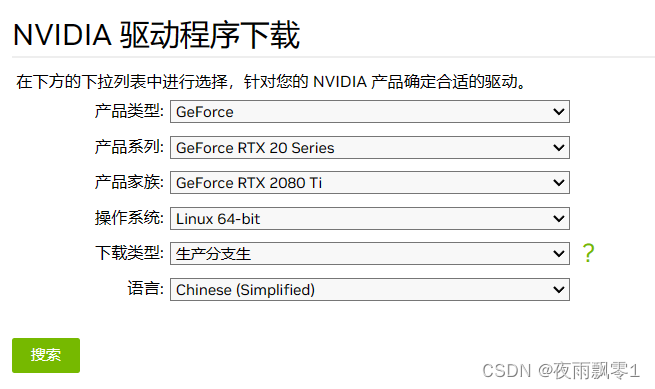

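Downloading the driver

Download the driver from the official site, https://www.nvidia.cn/Download/index.aspx?lang=cn , choosing the version that matches your GPU. For example, the author's GPU is an RTX 2080 Ti.

The download is a single installer file; its name varies with the version: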

NVIDIA-Linux-x86_64-535.113.01.run
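Because the file name encodes the architecture and driver version, a small helper can extract them, for instance to double-check that every later command refers to the same file. The naming pattern is inferred from this single example, so treat the regular expression (and the helper name parse_driver_runfile) as assumptions:

```python
import os
import re

# Assumed naming pattern: NVIDIA-Linux-<arch>-<version>.run
RUNFILE_RE = re.compile(r"^NVIDIA-Linux-(?P<arch>[A-Za-z0-9_]+)-(?P<version>\d+(?:\.\d+)+)\.run$")


def parse_driver_runfile(filename: str) -> tuple[str, str]:
    """Return (architecture, driver version) from an NVIDIA driver runfile name."""
    m = RUNFILE_RE.match(filename)
    if m is None:
        raise ValueError(f"unexpected driver file name: {filename}")
    return m.group("arch"), m.group("version")


if __name__ == "__main__":
    # List driver installers in the current directory
    for name in sorted(os.listdir(".")):
        if RUNFILE_RE.match(name):
            print(name, "->", parse_driver_runfile(name))
```

For the file above, parse_driver_runfile("NVIDIA-Linux-x86_64-535.113.01.run") returns ("x86_64", "535.113.01").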

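Uninstalling the old driver

All of the following steps are done from a text console. Press the key combination below to switch to a console and log in (note: on a local desktop the graphical screen goes black; if you are logged in remotely, skip this step):

Ctrl+Alt+F1

Stop the X Window service (the display manager) with the command below, otherwise the driver cannot be installed. If the system uses GDM rather than LightDM (GDM is the default on Ubuntu 18.04 and later), replace lightdm with gdm3 here and in the start command later: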

sudo service lightdm stop

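Run the following three commands to remove the existing driver. The first purges any NVIDIA packages installed with apt (the pattern is quoted so the shell does not expand it), the second makes the downloaded installer executable, and the third uses that installer to remove a driver previously installed from a .run file:

sudo apt-get remove --purge 'nvidia*'

sudo chmod +x NVIDIA-Linux-x86_64-535.113.01.run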

sudo ./NVIDIA-Linux-x86_64-535.113.01.run --uninstall

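Installing the new driver

Run the driver installer directly to install the new driver; the defaults are fine at every prompt:

sudo ./NVIDIA-Linux-x86_64-535.113.01.run

Start the X Window service again: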

sudo service lightdm start

Finally, reboot the system:

reboot

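Note: if after rebooting you get stuck in a login loop (the login screen keeps coming back), the cause is usually a driver version that does not match your GPU. Download the driver that matches the GPU actually installed in your machine.

Uninstalling CUDA

Uninstalling CUDA is simple: a single command runs the uninstall script that ships with CUDA. Find the script that matches your CUDA version (the path contains the version number):

sudo /usr/local/cuda-11.8/bin/cuda-uninstaller

The uninstaller leaves some directories behind. Since the previous installation was CUDA 11.8, its directory can be deleted as well: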

sudo rm -rf /usr/local/cuda-11.8/

That completes the removal of CUDA.
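To see which versioned toolkits are present, before uninstalling or afterwards to confirm none is left, you can scan /usr/local. This sketch assumes the default runfile layout (/usr/local/cuda-X.Y, with the uninstaller at bin/cuda-uninstaller as in the command above) and ignores the unversioned cuda symlink; find_cuda_installs and demo_find are hypothetical names:

```python
import os
import re
import tempfile

# Assumed layout: versioned toolkits live in <root>/cuda-X.Y
CUDA_DIR_RE = re.compile(r"^cuda-(\d+)\.(\d+)$")


def find_cuda_installs(root: str = "/usr/local") -> dict[str, str]:
    """Map each CUDA version found under root to the path of its uninstaller."""
    found = {}
    if not os.path.isdir(root):
        return found
    for name in sorted(os.listdir(root)):
        m = CUDA_DIR_RE.match(name)
        path = os.path.join(root, name)
        if m and os.path.isdir(path) and not os.path.islink(path):
            found[f"{m.group(1)}.{m.group(2)}"] = os.path.join(path, "bin", "cuda-uninstaller")
    return found


def demo_find() -> list[str]:
    """Build a throwaway fake /usr/local with two toolkits and an unrelated directory."""
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("cuda-11.8", "cuda-12.2", "lib"):
            os.makedirs(os.path.join(tmp, name, "bin"))
        return list(find_cuda_installs(tmp))


if __name__ == "__main__":
    for version, uninstaller in find_cuda_installs().items():
        status = "found" if os.path.exists(uninstaller) else "missing"
        print(f"CUDA {version}: {uninstaller} ({status})")
```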

Installing CUDA

Versions of CUDA and cuDNN to install:

- CUDA 11.8

- CUDNN 8.9.6

All of the following installation steps are performed as the root user.

Downloading and installing CUDA

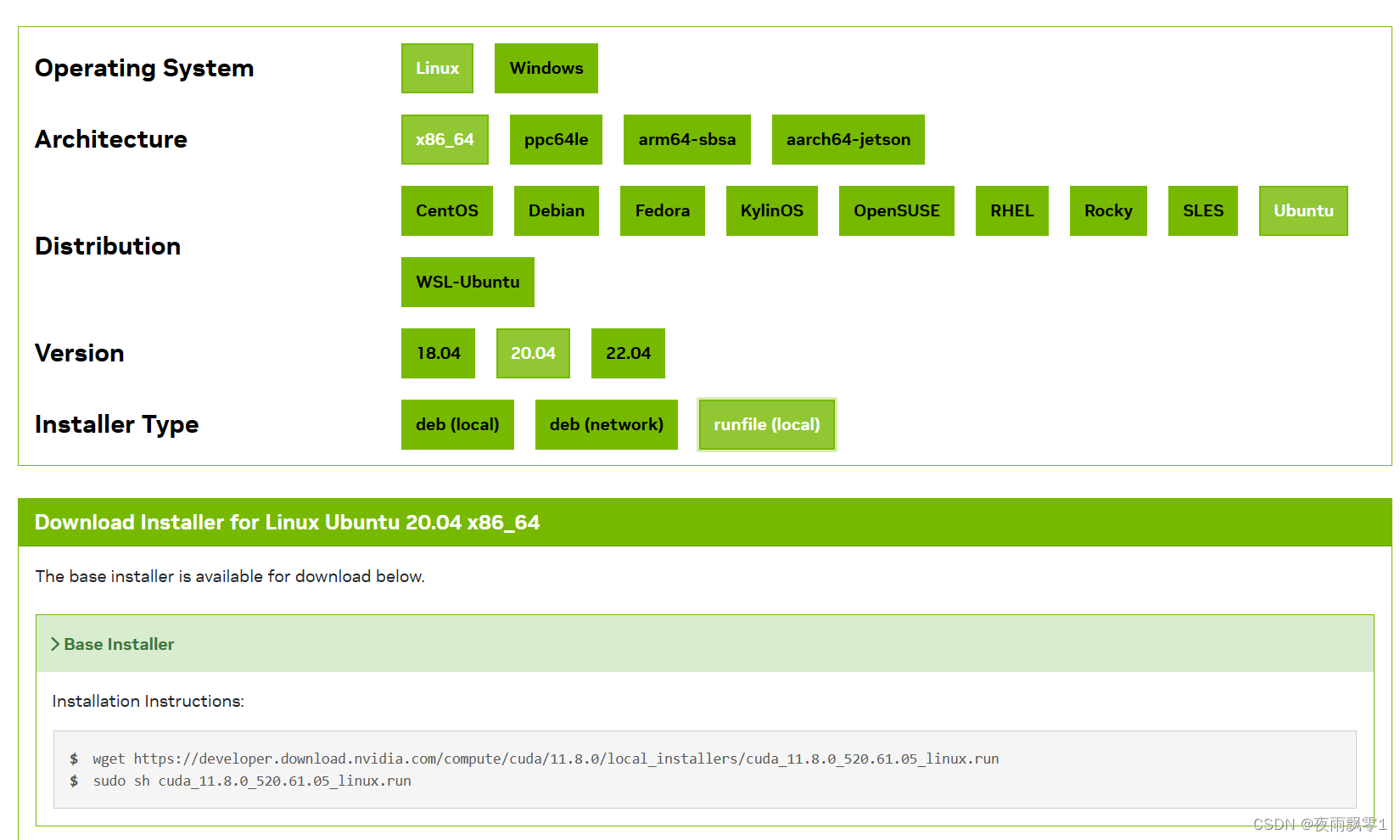

我们可以在官网:CUDA下载页面,

下载符合自己系统版本的CUDA。页面如下:

下载完成之后,给文件赋予执行权限:

chmod +x cuda_11.8.0_520.61.05_linux.run

Run the installer to start the installation:

./cuda_11.8.0_520.61.05_linux.run

Once the installer starts, it displays the license agreement; type accept to agree:

```
┌──────────────────────────────────────────────────────────────────────────────┐
│ End User License Agreement                                                   │
│ --------------------------                                                   │
│                                                                              │
│ NVIDIA Software License Agreement and CUDA Supplement to                     │
│ Software License Agreement. Last updated: October 8, 2021                    │
│                                                                              │
│ The CUDA Toolkit End User License Agreement applies to the                   │
│ NVIDIA CUDA Toolkit, the NVIDIA CUDA Samples, the NVIDIA                     │
│ Display Driver, NVIDIA Nsight tools (Visual Studio Edition),                 │
│ and the associated documentation on CUDA APIs, programming                   │
│ model and development tools. If you do not agree with the                    │
│ terms and conditions of the license agreement, then do not                   │
│ download or use the software.                                                │
│                                                                              │
│ Last updated: October 8, 2021.                                               │
│                                                                              │
│ Preface                                                                      │
│ -------                                                                      │
│                                                                              │
│──────────────────────────────────────────────────────────────────────────────│
│ Do you accept the above EULA? (accept/decline/quit):                         │
│ accept                                                                       │
└──────────────────────────────────────────────────────────────────────────────┘
```

After accepting, the component menu appears. Use the Up/Down keys to move and Enter to select or deselect an item. Make sure Driver is unchecked, since the driver is already installed, then move to Install and press Enter to start the installation.

```
┌──────────────────────────────────────────────────────────────────────────────┐
│ CUDA Installer                                                               │
│ - [ ] Driver                                                                 │
│      [ ] 520.61.05                                                           │
│ + [X] CUDA Toolkit 11.8                                                      │
│   [X] CUDA Demo Suite 11.8                                                   │
│   [X] CUDA Documentation 11.8                                                │
│ - [ ] Kernel Objects                                                         │
│      [ ] nvidia-fs                                                           │
│   Options                                                                    │
│   Install                                                                    │
│                                                                              │
│ Up/Down: Move | Left/Right: Expand | 'Enter': Select | 'A': Advanced options │
└──────────────────────────────────────────────────────────────────────────────┘
```
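Skipping the bundled driver assumes the driver installed earlier is recent enough for CUDA 11.8. As a rough check, the sketch below treats the driver version embedded in the installer's file name (520.61.05 in cuda_11.8.0_520.61.05_linux.run) as the threshold. That threshold is an assumption; NVIDIA's CUDA release notes give the authoritative minimum. The installed version is read with nvidia-smi --query-gpu=driver_version --format=csv,noheader, and the helper names are hypothetical:

```python
import re
import shutil
import subprocess


def version_tuple(version: str) -> tuple[int, ...]:
    """'520.61.05' -> (520, 61, 5) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def bundled_driver(cuda_runfile: str) -> str:
    """Driver version in a CUDA runfile name (assumed pattern cuda_<toolkit>_<driver>_linux.run)."""
    m = re.match(r"^cuda_\d+(?:\.\d+)+_(\d+(?:\.\d+)+)_linux\.run$", cuda_runfile)
    if m is None:
        raise ValueError(f"unexpected CUDA installer name: {cuda_runfile}")
    return m.group(1)


def driver_new_enough(installed: str, cuda_runfile: str) -> bool:
    """Rough check: installed driver >= the driver shipped with the CUDA installer."""
    return version_tuple(installed) >= version_tuple(bundled_driver(cuda_runfile))


if __name__ == "__main__":
    runfile = "cuda_11.8.0_520.61.05_linux.run"
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True, text=True,
        )
        lines = result.stdout.split()  # one version per GPU
        if result.returncode == 0 and lines:
            print(lines[0], "new enough:", driver_new_enough(lines[0], runfile))
```

With the driver from this article, driver_new_enough("535.113.01", "cuda_11.8.0_520.61.05_linux.run") is True, while an old 410.93 driver would fail the check.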

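After the installation finishes, configure the environment variables by appending the following lines to the end of ~/.bashrc (for example with vim ~/.bashrc). CUDA_HOME is defined first so the other two lines can use it; the library directories (including CUPTI) belong in LD_LIBRARY_PATH only, not in PATH, and the existing values are kept rather than overwritten:

export CUDA_HOME=/usr/local/cuda-11.8

export PATH=$CUDA_HOME/bin:$PATH

export LD_LIBRARY_PATH=$CUDA_HOME/lib64:$CUDA_HOME/extras/CUPTI/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}

Finally, run source ~/.bashrc to apply the changes.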
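The exports above put the CUDA directories first and keep whatever was already in each variable; earlier entries win because lookups stop at the first match. The same ordering in Python, with duplicate and empty entries removed, is sketched below as an illustration only (prepend_path is a hypothetical name, not something the setup requires):

```python
import os


def prepend_path(current: str, *entries: str) -> str:
    """Prepend entries to a colon-separated variable, dropping empties and duplicates."""
    parts = [p for p in current.split(":") if p]
    seen, result = set(), []
    # New entries come first, so they take precedence over existing ones
    for p in [e for e in entries if e] + parts:
        if p not in seen:
            seen.add(p)
            result.append(p)
    return ":".join(result)


if __name__ == "__main__":
    cuda_home = "/usr/local/cuda-11.8"
    print(prepend_path(os.environ.get("PATH", ""), f"{cuda_home}/bin"))
    print(prepend_path(os.environ.get("LD_LIBRARY_PATH", ""),
                       f"{cuda_home}/lib64", f"{cuda_home}/extras/CUPTI/lib64"))
```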

Check the installed version with nvcc -V:

```
test@test:~$ nvcc -V
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2022 NVIDIA Corporation
Built on Wed_Sep_21_10:33:58_PDT_2022
Cuda compilation tools, release 11.8, V11.8.89
Build cuda_11.8.r11.8/compiler.31833905_0
```
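To check the toolkit version from a script, the release number can be pulled out of the nvcc -V output. A minimal sketch; nvcc_release is a hypothetical helper, and the regular expression assumes the "release X.Y, VX.Y.Z" wording seen in the output above:

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def nvcc_release(output: str) -> Optional[Tuple[str, str]]:
    """Return (release, full version) from `nvcc -V` text, e.g. ('11.8', '11.8.89')."""
    m = re.search(r"release (\d+\.\d+), V(\d+(?:\.\d+)+)", output)
    return (m.group(1), m.group(2)) if m else None


if __name__ == "__main__":
    if shutil.which("nvcc"):
        out = subprocess.run(["nvcc", "-V"], capture_output=True, text=True).stdout
        print(nvcc_release(out))
    else:
        print("nvcc not on PATH; check the exports in ~/.bashrc")
```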

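Verifying the installation

Run the following commands:

cd /usr/local/cuda-11.8/extras/demo_suite/

./deviceQuery

Normal output looks like this (the author's machine has two RTX 2080 Ti cards; the details of the second device are omitted):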

```
./deviceQuery Starting...

 CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 2 CUDA Capable device(s)

Device 0: "NVIDIA GeForce RTX 2080 Ti"
  CUDA Driver Version / Runtime Version          12.2 / 11.8
  CUDA Capability Major/Minor version number:    7.5
  Total amount of global memory:                 22189 MBytes (23267246080 bytes)
  (68) Multiprocessors, ( 64) CUDA Cores/MP:     4352 CUDA Cores
  GPU Max Clock rate:                            1545 MHz (1.54 GHz)
  Memory Clock rate:                             7000 Mhz
  Memory Bus Width:                              352-bit
  L2 Cache Size:                                 5767168 bytes
  Maximum Texture Dimension Size (x,y,z)         1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)
  Maximum Layered 1D Texture Size, (num) layers  1D=(32768), 2048 layers
  Maximum Layered 2D Texture Size, (num) layers  2D=(32768, 32768), 2048 layers
  Total amount of constant memory:               65536 bytes
  Total amount of shared memory per block:       49152 bytes
  Total number of registers available per block: 65536
  Warp size:                                     32
  Maximum number of threads per multiprocessor:  1024
  Maximum number of threads per block:           1024
  Max dimension size of a thread block (x,y,z): (1024, 1024, 64)
  Max dimension size of a grid size    (x,y,z): (2147483647, 65535, 65535)
  Maximum memory pitch:                          2147483647 bytes
  Texture alignment:                             512 bytes
  Concurrent copy and kernel execution:          Yes with 3 copy engine(s)
  Run time limit on kernels:                     No
  Integrated GPU sharing Host Memory:            No
  Support host page-locked memory mapping:       Yes
  Alignment requirement for Surfaces:            Yes
  Device has ECC support:                        Disabled
  Device supports Unified Addressing (UVA):      Yes
  Device supports Compute Preemption:            Yes
  Supports Cooperative Kernel Launch:            Yes
  Supports MultiDevice Co-op Kernel Launch:      Yes
  Device PCI Domain ID / Bus ID / location ID:   0 / 1 / 0
  Compute Mode:
     < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >
...
deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 12.2, CUDA Runtime Version = 11.8, NumDevs = 2, Device0 = NVIDIA GeForce RTX 2080 Ti, Device1 = NVIDIA GeForce RTX 2080 Ti
Result = PASS
```
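The last two lines of the deviceQuery output are the easiest to check in a script. The sketch below (hypothetical helper names) extracts the driver and runtime CUDA versions, the device count, and the PASS/FAIL result. It applies the simple rule of thumb that the driver's CUDA version (12.2 here) should be at least the runtime version (11.8), ignoring CUDA's finer-grained compatibility modes:

```python
import os
import re
import subprocess


def parse_device_query(output: str) -> dict:
    """Pull the summary fields out of deviceQuery output."""
    driver = re.search(r"CUDA Driver Version = (\d+\.\d+)", output)
    runtime = re.search(r"CUDA Runtime Version = (\d+\.\d+)", output)
    ndev = re.search(r"NumDevs = (\d+)", output)
    result = re.search(r"Result = (\w+)", output)
    return {
        "driver": driver.group(1) if driver else None,
        "runtime": runtime.group(1) if runtime else None,
        "num_devices": int(ndev.group(1)) if ndev else 0,
        "passed": bool(result) and result.group(1) == "PASS",
    }


def _as_tuple(version: str) -> tuple[int, ...]:
    return tuple(int(x) for x in version.split("."))


def driver_supports_runtime(info: dict) -> bool:
    """Rule of thumb: the driver's CUDA version must be >= the runtime version."""
    if not info["driver"] or not info["runtime"]:
        return False
    return _as_tuple(info["driver"]) >= _as_tuple(info["runtime"])


if __name__ == "__main__":
    exe = "/usr/local/cuda-11.8/extras/demo_suite/deviceQuery"
    if os.path.exists(exe):
        out = subprocess.run([exe], capture_output=True, text=True).stdout
        info = parse_device_query(out)
        print(info, "driver OK:", driver_supports_runtime(info))
```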

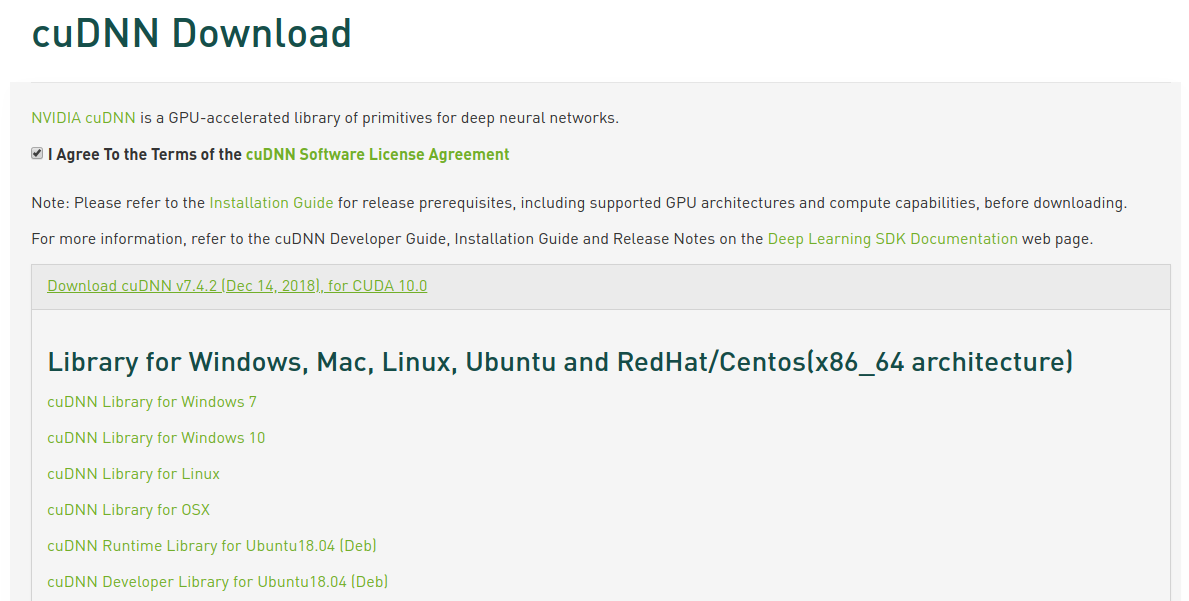

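Downloading and installing cuDNN

Go to the cuDNN download page, https://developer.nvidia.com/rdp/cudnn-download , and click Download to choose a version (you need to log in before downloading). On the version selection page, choose cuDNN Library for Linux.

The download is a compressed archive:

cudnn-linux-x86_64-8.9.6.50_cuda11-archive.tar.xz

Extract it with: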

tar -xf cudnn-linux-x86_64-8.9.6.50_cuda11-archive.tar.xz

Extraction produces two directories and a license file:

cudnn-linux-x86_64-8.9.6.50_cuda11-archive/include/

cudnn-linux-x86_64-8.9.6.50_cuda11-archive/lib/

cudnn-linux-x86_64-8.9.6.50_cuda11-archive/LICENSE

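Copy the headers and libraries into the CUDA directory with the following two commands. The -P option keeps the symbolic links in lib/ as links instead of turning each one into a full copy of the library:

cp -P cudnn-linux-x86_64-8.9.6.50_cuda11-archive/lib/* /usr/local/cuda-11.8/lib64/

cp cudnn-linux-x86_64-8.9.6.50_cuda11-archive/include/* /usr/local/cuda-11.8/include/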
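Why -P matters: the archive's lib/ directory contains the real library files plus symbolic links pointing to them (for example libcudnn.so.8 pointing to a fully versioned file). Without -P, cp follows each link and writes a full copy of the library under the link's name. The sketch below mimics cp -P in Python by recreating links with os.symlink; copy_keep_symlinks and demo_copy are hypothetical names, and the demo uses a throwaway directory rather than touching /usr/local:

```python
import os
import shutil
import tempfile


def copy_keep_symlinks(src_dir: str, dst_dir: str) -> list[str]:
    """Copy top-level files of src_dir into dst_dir, keeping symlinks as symlinks (like cp -P)."""
    os.makedirs(dst_dir, exist_ok=True)
    copied = []
    for name in sorted(os.listdir(src_dir)):
        src, dst = os.path.join(src_dir, name), os.path.join(dst_dir, name)
        if os.path.isdir(src) and not os.path.islink(src):
            continue  # this sketch only handles files and links, like lib/* in the archive
        if os.path.lexists(dst):
            os.remove(dst)  # replace an old file or link instead of writing through it
        if os.path.islink(src):
            os.symlink(os.readlink(src), dst)  # recreate the link with the same target
        else:
            shutil.copy2(src, dst)  # regular file: copy data and metadata
        copied.append(name)
    return copied


def demo_copy() -> tuple[bool, str]:
    """Copy a throwaway lib/ holding one file and one link, then inspect the copied link."""
    with tempfile.TemporaryDirectory() as tmp:
        src, dst = os.path.join(tmp, "lib"), os.path.join(tmp, "lib64")
        os.makedirs(src)
        with open(os.path.join(src, "libdemo.so.8.9.6"), "w") as f:
            f.write("stub")
        os.symlink("libdemo.so.8.9.6", os.path.join(src, "libdemo.so.8"))
        copy_keep_symlinks(src, dst)
        link = os.path.join(dst, "libdemo.so.8")
        return os.path.islink(link), os.readlink(link)


if __name__ == "__main__":
    print(demo_copy())
```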

After copying, check the cuDNN version with:

cat /usr/local/cuda-11.8/include/cudnn_version.h | grep CUDNN_MAJOR -A 2
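The grep prints the CUDNN_MAJOR line plus the two lines after it, which hold CUDNN_MINOR and CUDNN_PATCHLEVEL. The same three #define values can be combined into a version string in a script; a minimal sketch with a hypothetical helper name, assuming the header path used above:

```python
import re
from pathlib import Path

KEYS = ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL")


def cudnn_version(header_text: str) -> str:
    """Build 'MAJOR.MINOR.PATCH' from the #define lines in cudnn_version.h."""
    parts = []
    for key in KEYS:
        m = re.search(rf"#define\s+{key}\s+(\d+)", header_text)
        if m is None:
            raise ValueError(f"{key} not found in header")
        parts.append(m.group(1))
    return ".".join(parts)


if __name__ == "__main__":
    header = Path("/usr/local/cuda-11.8/include/cudnn_version.h")
    if header.exists():
        print("cuDNN", cudnn_version(header.read_text()))
```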

Testing the installation

This completes the installation of CUDA 11.8 and cuDNN 8.9.6. To check that everything works, install the matching GPU build of PyTorch:

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118

Then run the following program to test it:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torch.backends.cudnn as cudnn
from torchvision import datasets, transforms


class Net(nn.Module):
    """Small LeNet-style CNN for 28x28 MNIST digits."""

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)  # 20 channels * 4 * 4 after two conv+pool stages
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


def train(model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        # Move each batch to the GPU; a broken CUDA setup usually fails here
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % 10 == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))


def main():
    if not torch.cuda.is_available():
        raise SystemExit("PyTorch cannot see a CUDA device; check the driver installation")
    cudnn.benchmark = True  # let cuDNN pick the fastest convolution algorithms
    torch.manual_seed(1)
    device = torch.device("cuda")
    kwargs = {'num_workers': 1, 'pin_memory': True}
    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=64, shuffle=True, **kwargs)
    model = Net().to(device)
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
    for epoch in range(1, 11):
        train(model, device, train_loader, optimizer, epoch)


if __name__ == '__main__':
    main()
```

If it prints output similar to the following (the loss values may differ slightly), the installation works:

```
Train Epoch: 1 [0/60000 (0%)] Loss: 2.365850
Train Epoch: 1 [640/60000 (1%)] Loss: 2.305295
Train Epoch: 1 [1280/60000 (2%)] Loss: 2.301407
Train Epoch: 1 [1920/60000 (3%)] Loss: 2.316538
Train Epoch: 1 [2560/60000 (4%)] Loss: 2.255809
Train Epoch: 1 [3200/60000 (5%)] Loss: 2.224511
Train Epoch: 1 [3840/60000 (6%)] Loss: 2.216569
Train Epoch: 1 [4480/60000 (7%)] Loss: 2.181396
```
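Besides the training run, a shorter check is to print what PyTorch itself reports. Keep in mind that PyTorch's pip wheels ship their own CUDA runtime and cuDNN libraries, so the versions reported here may belong to those bundled copies rather than to the system-wide cuDNN 8.9.6 installed above. The sketch assumes the cuDNN 8 version encoding (major*1000 + minor*100 + patch); decode_cudnn_version and report are hypothetical names:

```python
import importlib.util


def decode_cudnn_version(v: int) -> str:
    """Decode cuDNN 8.x's MAJOR*1000 + MINOR*100 + PATCH, e.g. 8906 -> '8.9.6' (assumed cuDNN 8 only)."""
    major, rest = divmod(v, 1000)
    minor, patch = divmod(rest, 100)
    return f"{major}.{minor}.{patch}"


def report() -> dict:
    """Collect what the installed PyTorch sees; returns {'torch': None} if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch": None}
    import torch

    info = {
        "torch": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        "cuda_runtime": torch.version.cuda,  # CUDA version this PyTorch build targets
    }
    cudnn = torch.backends.cudnn.version()  # None if cuDNN is unavailable
    info["cudnn"] = decode_cudnn_version(cudnn) if cudnn else None
    if info["cuda_available"]:
        info["devices"] = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
    return info


if __name__ == "__main__":
    print(report())
```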


If you repost this article, please keep the source link: http://bianchenghao.cn/77978.html