1. A brief introduction to crawling with jsoup

jsoup is an HTML parser for Java. It extracts and manipulates HTML document data through DOM traversal, CSS selectors, and jQuery-like methods.

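The crawlers in this article involve the following points:

1. Fetching page content with HttpClient

2. Parsing page content with jsoup

3. Using an Ehcache cache to detect URLs that were already crawled, so that crawling is incremental

4. Saving the crawled data to a database

5. Saving the other site's static resources locally (only images are handled here) to work around hotlink protection on some sites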
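Point 3 above, incremental crawling, comes down to remembering which URLs have already been stored. The following is a minimal sketch of that idea only: it uses an in-memory `HashSet` as a stand-in for the Ehcache cache used later in this article, and the class name `SeenUrls` and the method `markIfNew` are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical helper: remembers URLs that were already crawled. */
public class SeenUrls {

    // In-memory stand-in for the Ehcache cache; contents are lost when the JVM exits.
    private final Set<String> seen = new HashSet<>();

    /** Returns true the first time a URL is offered and false on every repeat. */
    public boolean markIfNew(String url) {
        return seen.add(url);
    }

    public static void main(String[] args) {
        SeenUrls seenUrls = new SeenUrls();
        String[] links = {
            "https://www.cnblogs.com/a",
            "https://www.cnblogs.com/b",
            "https://www.cnblogs.com/a" // duplicate of the first link
        };
        for (String link : links) {
            if (seenUrls.markIfNew(link)) {
                System.out.println("crawl " + link);
            } else {
                System.out.println("skip  " + link);
            }
        }
    }
}
```

Unlike this set, the Ehcache cache in the crawlers is flushed to disk at the end of a run, which is what allows the duplicate check to survive a restart.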

2. Code

2.1 Importing the pom dependencies

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.zrh</groupId>

<artifactId>T226_jsoup</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>T226_jsoup</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<!-- JDBC driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.44</version>

</dependency>

<!-- HttpClient support -->

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.2</version>

</dependency>

<!-- jsoup support -->

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.10.1</version>

</dependency>

<!-- Logging support -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.16</version>

</dependency>

<!-- Ehcache support -->

<dependency>

<groupId>net.sf.ehcache</groupId>

<artifactId>ehcache</artifactId>

<version>2.10.3</version>

</dependency>

<!-- Commons IO support -->

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>2.5</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.47</version>

</dependency>

</dependencies>

</project>

2.2 Crawling images

Change the URL below to the address of the image you want to crawl:

private static String URL = "http://www.yidianzhidao.com/UploadFiles/img_1_446119934_1806045383_26.jpg";
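Once the image bytes have been fetched, they need a unique local path. The sketch below shows one way to do that with the standard library only. The class name `ImageStore`, the `yyyy/MM/dd` directory layout, and the `save` method are assumptions made for illustration, not code from this project.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.UUID;

/** Hypothetical helper: stores a downloaded image under baseDir/yyyy/MM/dd/<uuid>.<extension>. */
public class ImageStore {

    // Assumed directory layout: one folder per day.
    private static final DateTimeFormatter DATE_DIR = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    /** Copies the stream into a new, uniquely named file and returns its path. */
    public static Path save(InputStream in, Path baseDir, String extension) {
        try {
            Path dir = baseDir.resolve(LocalDate.now().format(DATE_DIR));
            Files.createDirectories(dir);
            Path target = dir.resolve(UUID.randomUUID() + "." + extension);
            Files.copy(in, target); // throws if the target already exists
            return target;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path baseDir = Paths.get(System.getProperty("java.io.tmpdir"), "blogImages");
        byte[] fakeImage = {1, 2, 3}; // stands in for the bytes of a real HTTP response
        Path saved = save(new ByteArrayInputStream(fakeImage), baseDir, "jpg");
        System.out.println("saved to " + saved);
    }
}
```

The random UUID avoids name collisions between images that share a file name on different sites.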

2.3 Localizing images

crawler.properties

dbUrl=jdbc:mysql://localhost:3306/zrh?autoReconnect=true

dbUserName=root

dbPassword=123

jdbcName=com.mysql.jdbc.Driver

ehcacheXmlPath=C://blogCrawler/ehcache.xml

blogImages=D://blogCrawler/blogImages/

log4j.properties

log4j.rootLogger=INFO, stdout,D

#Console

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target = System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=[%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

#D

log4j.appender.D = org.apache.log4j.RollingFileAppender

log4j.appender.D.File = C://blogCrawler/bloglogs/log.log

log4j.appender.D.MaxFileSize=100KB

log4j.appender.D.MaxBackupIndex=100

log4j.appender.D.Append = true

log4j.appender.D.layout = org.apache.log4j.PatternLayout

log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

DbUtil.java

package com.zrh.util;

import java.sql.Connection;

import java.sql.DriverManager;

/**
 * Database utility class.
 * @author user
 */

public class DbUtil {

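/**
 * Opens a database connection using the settings in crawler.properties.
 * @return the open connection
 * @throws Exception if the driver cannot be loaded or the connection fails
 */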

public Connection getCon()throws Exception{

Class.forName(PropertiesUtil.getValue("jdbcName"));

Connection con=DriverManager.getConnection(PropertiesUtil.getValue("dbUrl"), PropertiesUtil.getValue("dbUserName"), PropertiesUtil.getValue("dbPassword"));

return con;

}

/**
 * Closes the connection if it is not null.
 * @param con the connection to close
 * @throws Exception if closing fails
 */

public void closeCon(Connection con)throws Exception{

if(con!=null){

con.close();

}

}

public static void main(String[] args) {

DbUtil dbUtil=new DbUtil();

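try {

Connection con = dbUtil.getCon();

System.out.println("Database connection succeeded");

// Close the test connection so that it is not leaked.

dbUtil.closeCon(con);

} catch (Exception e) {

e.printStackTrace();

System.out.println("Database connection failed");

}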

}

}

PropertiesUtil.java

package com.zrh.util;

import java.io.IOException;

import java.io.InputStream;

import java.util.Properties;

/**
 * Properties utility class.
 * @author user
 */

public class PropertiesUtil {

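/**
 * Looks up the value for a key in crawler.properties.
 * @param key the property name
 * @return the property value, or null if the key is absent
 */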

public static String getValue(String key){

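Properties prop=new Properties();

// try-with-resources (Java 7+) closes the stream; getResourceAsStream returns null if the file is missing.

try (InputStream in=PropertiesUtil.class.getResourceAsStream("/crawler.properties")) {

if (in == null) {

throw new IllegalStateException("crawler.properties not found on the classpath");

}

prop.load(in);

} catch (IOException e) {

e.printStackTrace();

}

return prop.getProperty(key);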

}

}
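`getValue` re-reads `crawler.properties` on every call. A possible alternative, sketched here under assumptions, is to parse the properties once and keep them in memory. The class name `CrawlerConfig` and its `require` method are hypothetical, and the text is parsed from a string so that the example runs without a classpath resource.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical alternative to PropertiesUtil: parse once, then look up from memory. */
public class CrawlerConfig {

    private final Properties props = new Properties();

    /** Parses key=value text in the java.util.Properties format. */
    public CrawlerConfig(String propertiesText) {
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Returns the value for the key, failing loudly instead of returning null. */
    public String require(String key) {
        String value = props.getProperty(key);
        if (value == null) {
            throw new IllegalArgumentException("Missing configuration key: " + key);
        }
        return value;
    }

    public static void main(String[] args) {
        CrawlerConfig config = new CrawlerConfig(
                "dbUserName=root\n"
                + "blogImages=D://blogCrawler/blogImages/\n");
        System.out.println(config.require("dbUserName"));
        System.out.println(config.require("blogImages"));
    }
}
```

Failing on a missing key surfaces a misspelled property name at startup rather than as a `NullPointerException` deep inside the crawl.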

Now for the most important code:

BlogCrawlerStarter.java (core code)

package com.zrh.crawler;

import java.io.File;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.UUID;

import org.apache.commons.io.FileUtils;

import org.apache.http.HttpEntity;

import org.apache.http.client.ClientProtocolException;

import org.apache.http.client.config.RequestConfig;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.util.EntityUtils;

import org.apache.log4j.Logger;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import com.zrh.util.DateUtil;

import com.zrh.util.DbUtil;

import com.zrh.util.PropertiesUtil;

import net.sf.ehcache.Cache;

import net.sf.ehcache.CacheManager;

import net.sf.ehcache.Status;

/** * @author Administrator * */

public class BlogCrawlerStarter {

private static Logger logger = Logger.getLogger(BlogCrawlerStarter.class);

// https://www.csdn.net/nav/newarticles

private static String HOMEURL = "https://www.cnblogs.com/";

private static CloseableHttpClient httpClient;

private static Connection con;

private static CacheManager cacheManager;

private static Cache cache;

/** Fetches the home page with HttpClient and passes its content on for parsing. */

public static void parseHomePage() {

logger.info("开始爬取首页:" + HOMEURL);

cacheManager = CacheManager.create(PropertiesUtil.getValue("ehcacheXmlPath"));

cache = cacheManager.getCache("cnblog");

httpClient = HttpClients.createDefault();

HttpGet httpGet = new HttpGet(HOMEURL);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info(HOMEURL + ":爬取无响应");

return;

}

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String homePageContent = EntityUtils.toString(entity, "utf-8");

// System.out.println(homePageContent);

parseHomePageContent(homePageContent);

}

} catch (ClientProtocolException e) {

logger.error(HOMEURL + "-ClientProtocolException", e);

} catch (IOException e) {

logger.error(HOMEURL + "-IOException", e);

} finally {

try {

if (response != null) {

response.close();

}

if (httpClient != null) {

httpClient.close();

}

} catch (IOException e) {

logger.error(HOMEURL + "-IOException", e);

}

}

if(cache.getStatus() == Status.STATUS_ALIVE) {

cache.flush();

}

cacheManager.shutdown();

logger.info("结束爬取首页:" + HOMEURL);

}

/**
 * Parses the home page content with jsoup and extracts the wanted data (the blog links).
 *
 * @param homePageContent HTML of the home page
 */

private static void parseHomePageContent(String homePageContent) {

Document doc = Jsoup.parse(homePageContent);

//#feedlist_id .list_con .title h2 a

Elements aEles = doc.select("#post_list .post_item .post_item_body h3 a");

for (Element aEle : aEles) {

// URL of a single post in the home page's blog list

String blogUrl = aEle.attr("href");

if (null == blogUrl || "".equals(blogUrl)) {

logger.info("Blog link is empty; skipping.");

continue;

}

if(cache.get(blogUrl) != null) {

logger.info("This post is already in the database; skipping.");

continue;

}

// System.out.println("************************"+blogUrl+"****************************");

parseBlogUrl(blogUrl);

}

}

/**
 * Fetches a blog post by its URL so that its title and content can be extracted.
 *
 * @param blogUrl URL of the blog post
 */

private static void parseBlogUrl(String blogUrl) {

logger.info("Start crawling blog page: " + blogUrl);

// Reuse the shared httpClient created in parseHomePage; creating a new one per post would leak clients.

HttpGet httpGet = new HttpGet(blogUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info(blogUrl + ":爬取无响应");

return;

}

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String blogContent = EntityUtils.toString(entity, "utf-8");

parseBlogContent(blogContent, blogUrl);

}

} catch (ClientProtocolException e) {

logger.error(blogUrl + "-ClientProtocolException", e);

} catch (IOException e) {

logger.error(blogUrl + "-IOException", e);

} finally {

try {

if (response != null) {

response.close();

}

} catch (IOException e) {

logger.error(blogUrl + "-IOException", e);

}

}

logger.info("Finished crawling blog page: " + blogUrl);

}

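/**
 * Parses a blog page, extracts its title and body, and inserts them into the database.
 *
 * @param blogContent HTML of the blog page
 * @param link URL of the blog page
 */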

private static void parseBlogContent(String blogContent, String link) {

Document doc = Jsoup.parse(blogContent);


Elements titleEles = doc

//#mainBox main .blog-content-box .article-header-box .article-header .article-title-box h1

.select("#topics .post h1 a");

System.out.println(titleEles.toString());

if (titleEles.size() == 0) {

logger.info("博客标题为空,不插入数据库!");

return;

}

String title = titleEles.get(0).html();

Elements blogContentEles = doc.select("#cnblogs_post_body ");

if (blogContentEles.size() == 0) {

logger.info("博客内容为空,不插入数据库!");

return;

}

String blogContentBody = blogContentEles.get(0).html();

// Elements imgEles = doc.select("img");

// List<String> imgUrlList = new LinkedList<String>();

// if(imgEles.size() > 0) {

// for (Element imgEle : imgEles) {

// imgUrlList.add(imgEle.attr("src"));

// }

// }

//

// if(imgUrlList.size() > 0) {

// Map<String, String> replaceUrlMap = downloadImgList(imgUrlList);

// blogContent = replaceContent(blogContent,replaceUrlMap);

// }

String sql = "insert into `t_jsoup_article` values(null,?,?,null,now(),0,0,null,?,0,null)";

// try-with-resources (Java 7+) closes the statement after the insert.

try (PreparedStatement pst = con.prepareStatement(sql)) {

pst.setObject(1, title);

pst.setObject(2, blogContentBody);

pst.setObject(3, link);

if(pst.executeUpdate() == 0) {

logger.info("爬取博客信息插入数据库失败");

}else {

cache.put(new net.sf.ehcache.Element(link, link));

logger.info("爬取博客信息插入数据库成功");

}

} catch (SQLException e) {

logger.error("数据异常-SQLException:",e);

}

}

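/**
 * Rewrites the blog content so that the original image URLs point to the local copies.
 * @param blogContent the blog HTML
 * @param replaceUrlMap map from original image URL to local path
 * @return the rewritten content
 */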

private static String replaceContent(String blogContent, Map<String, String> replaceUrlMap) {

for(Map.Entry<String, String> entry: replaceUrlMap.entrySet()) {

blogContent = blogContent.replace(entry.getKey(), entry.getValue());

}

return blogContent;

}

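/**
 * Downloads images from the remote server to local storage.
 * @param imgUrlList image URLs to download
 * @return map from original image URL to local file path
 */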

private static Map<String, String> downloadImgList(List<String> imgUrlList) {

Map<String, String> replaceMap = new HashMap<String, String>();

for (String imgUrl : imgUrlList) {

// Reuse the shared static httpClient rather than creating, and never closing, one client per image.

HttpGet httpGet = new HttpGet(imgUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info(imgUrl + ": no response");

}else {

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String blogImagesPath = PropertiesUtil.getValue("blogImages");

String dateDir = DateUtil.getCurrentDatePath();

String uuid = UUID.randomUUID().toString();

String subfix = entity.getContentType().getValue().split("/")[1];

String fileName = blogImagesPath + dateDir + "/" + uuid + "." + subfix;

FileUtils.copyInputStreamToFile(entity.getContent(), new File(fileName));

replaceMap.put(imgUrl, fileName);

}

}

} catch (ClientProtocolException e) {

logger.error(imgUrl + "-ClientProtocolException", e);

} catch (IOException e) {

logger.error(imgUrl + "-IOException", e);

} catch (Exception e) {

logger.error(imgUrl + "-Exception", e);

} finally {

try {

if (response != null) {

response.close();

}

} catch (IOException e) {

logger.error(imgUrl + "-IOException", e);

}

}

}

return replaceMap;

}

public static void start() {

while(true) {

DbUtil dbUtil = new DbUtil();

try {

con = dbUtil.getCon();

parseHomePage();

} catch (Exception e) {

logger.error("Database connection or crawl failed", e);

} finally {

try {

if (con != null) {

con.close();

}

} catch (SQLException e) {

logger.error("数据关闭异常-SQLException:",e);

}

}

try {

Thread.sleep(1000*60);

} catch (InterruptedException e) {

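logger.error("Main thread interrupted while sleeping", e);

// Restore the interrupt flag and stop the crawl loop instead of spinning.

Thread.currentThread().interrupt();

return;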

}

}

}

public static void main(String[] args) {

start();

}

}
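The image-localization call is commented out in `parseBlogContent`, but `downloadImgList` and `replaceContent` show the intent: download each image, then swap the remote URL for the local path. One fragile spot is deriving the file suffix with `split("/")[1]`, because a Content-Type value may carry parameters such as `; charset=...`. The sketch below is a standard-library-only illustration of both steps. `ImageLocalizer`, `extensionFor`, and `rewrite` are hypothetical names, and the `img.example.com` URL is made up.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper for the image-localization step. */
public class ImageLocalizer {

    /** Derives a file extension from a Content-Type such as "image/jpeg; charset=binary". */
    public static String extensionFor(String contentType) {
        String mediaType = contentType.split(";")[0].trim(); // drop any parameters
        int slash = mediaType.indexOf('/');
        String subtype = slash >= 0 ? mediaType.substring(slash + 1) : mediaType;
        int plus = subtype.indexOf('+'); // e.g. "svg+xml" becomes "svg"
        return plus >= 0 ? subtype.substring(0, plus) : subtype;
    }

    /** Replaces every remote image URL in the HTML with its local counterpart. */
    public static String rewrite(String html, Map<String, String> remoteToLocal) {
        String result = html;
        for (Map.Entry<String, String> entry : remoteToLocal.entrySet()) {
            result = result.replace(entry.getKey(), entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> mapping = new LinkedHashMap<>();
        mapping.put("http://img.example.com/a.png", "D://blogCrawler/blogImages/a.png");
        String html = "<p><img src=\"http://img.example.com/a.png\"></p>";
        System.out.println(extensionFor("image/jpeg; charset=binary"));
        System.out.println(rewrite(html, mapping));
    }
}
```

Plain string replacement also rewrites a URL that appears in text rather than in a `src` attribute; editing the `img` elements of the parsed jsoup document would be more precise.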

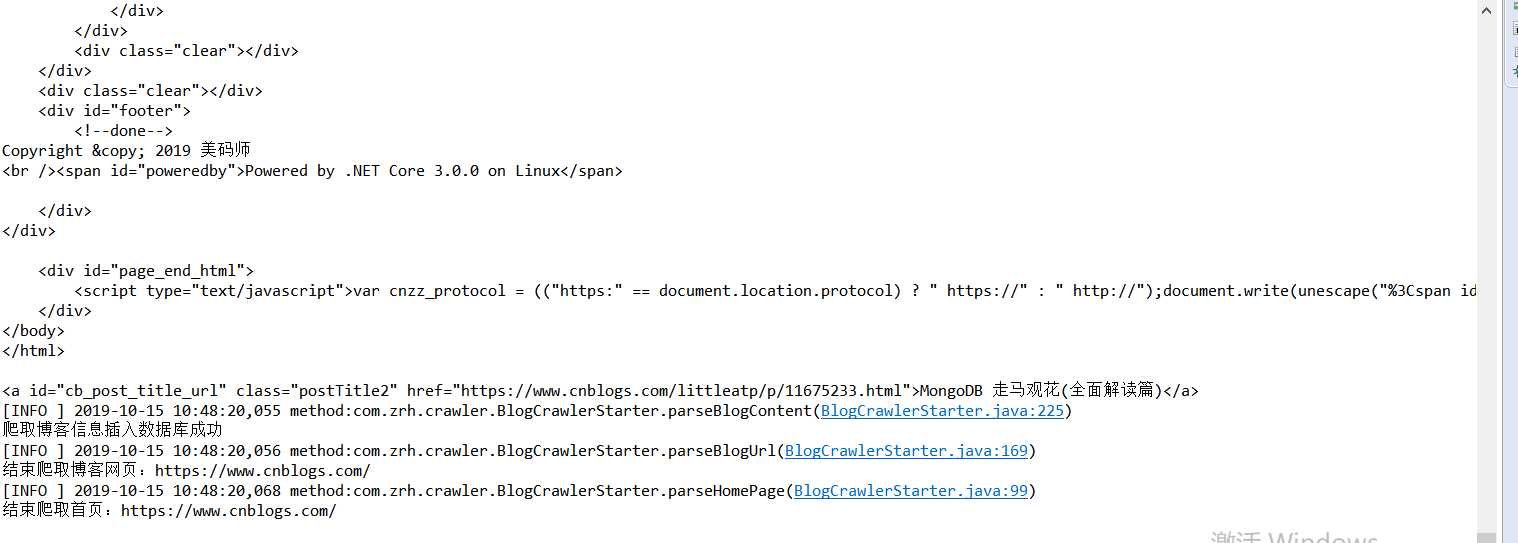

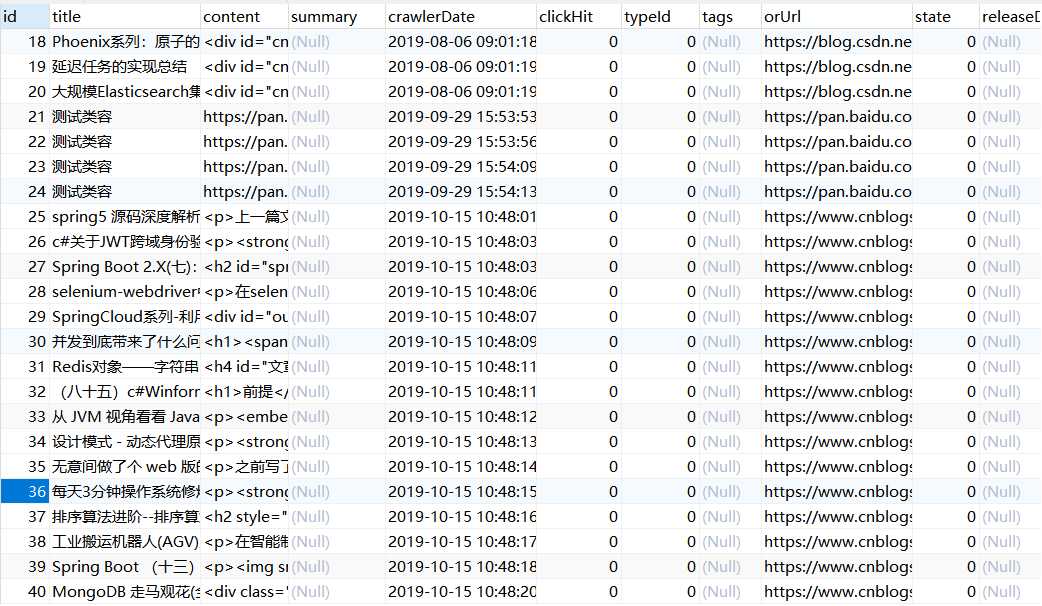

Looking at the database, the data has been inserted:

3. Baidu Cloud link crawler

PanZhaoZhaoCrawler3.java

package com.zrh.crawler;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.LinkedList;

import java.util.List;

import java.util.UUID;

import org.apache.http.HttpEntity;

import org.apache.http.client.ClientProtocolException;

import org.apache.http.client.config.RequestConfig;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.util.EntityUtils;

import org.apache.log4j.Logger;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import com.zrh.util.DbUtil;

import com.zrh.util.PropertiesUtil;

import net.sf.ehcache.Cache;

import net.sf.ehcache.CacheManager;

import net.sf.ehcache.Status;

public class PanZhaoZhaoCrawler3 {

private static Logger logger = Logger.getLogger(PanZhaoZhaoCrawler3.class);

private static String URL = "http://www.13910.com/daren/";

private static String PROJECT_URL = "http://www.13910.com";

private static Connection con;

private static CacheManager manager;

private static Cache cache;

private static CloseableHttpClient httpClient;

private static long total = 0;

/** Fetches the home page content with HttpClient. */

public static void parseHomePage() {

logger.info("开始爬取:" + URL);

manager = CacheManager.create(PropertiesUtil.getValue("ehcacheXmlPath"));

cache = manager.getCache("cnblog");

httpClient = HttpClients.createDefault();

HttpGet httpGet = new HttpGet(URL);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info("链接超时!");

} else {

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String pageContent = EntityUtils.toString(entity, "utf-8");

parsePageContent(pageContent);

}

}

} catch (ClientProtocolException e) {

logger.error(URL + "-解析异常-ClientProtocolException", e);

} catch (IOException e) {

logger.error(URL + "-解析异常-IOException", e);

} finally {

try {

if (response != null) {

response.close();

}

if (httpClient != null) {

httpClient.close();

}

} catch (IOException e) {

logger.error(URL + "-解析异常-IOException", e);

}

}

// Finally, flush the cache to disk

if (cache.getStatus() == Status.STATUS_ALIVE) {

cache.flush();

}

manager.shutdown();

logger.info("结束爬取:" + URL);

}

/**
 * Parses the home page content with jsoup.
 * @param pageContent HTML of the home page
 */

private static void parsePageContent(String pageContent) {

Document doc = Jsoup.parse(pageContent);

Elements aEles = doc.select(".showtop .key-right .darenlist .list-info .darentitle a");

for (Element aEle : aEles) {

String aHref = aEle.attr("href");

logger.info("提取个人代理分享主页:"+aHref);

String panZhaoZhaoUserShareUrl = PROJECT_URL + aHref;

List<String> panZhaoZhaoUserShareUrls = getPanZhaoZhaoUserShareUrls(panZhaoZhaoUserShareUrl);

for (String singlePanZhaoZhaoUserShareUrl : panZhaoZhaoUserShareUrls) {

// System.out.println("**********************************************************"+singlePanZhaoZhaoUserShareUrl+"**********************************************************");

// continue;

parsePanZhaoZhaoUserShareUrl(singlePanZhaoZhaoUserShareUrl);

}

}

}

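/**
 * Collects the URLs of the first 15 pages of a user's share home page.
 * @param panZhaoZhaoUserShareUrl URL of the user's share home page
 * @return the URLs of pages 1 to 15
 */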

private static List<String> getPanZhaoZhaoUserShareUrls(String panZhaoZhaoUserShareUrl){

List<String> list = new LinkedList<String>();

list.add(panZhaoZhaoUserShareUrl);

for (int i = 2; i < 16; i++) {

list.add(panZhaoZhaoUserShareUrl+"page-"+i+".html");

}

return list;

}

/**
 * Fetches a user URL as rewritten by PanZhaoZhao.
 * Original: http://yun.baidu.com/share/home?uk=1949795117
 * Rewritten: http://www.13910.com/u/1949795117/share/
 * @param panZhaoZhaoUserShareUrl the rewritten URL
 */

private static void parsePanZhaoZhaoUserShareUrl(String panZhaoZhaoUserShareUrl) {

logger.info("开始爬取个人代理分享主页::"+panZhaoZhaoUserShareUrl);

HttpGet httpGet = new HttpGet(panZhaoZhaoUserShareUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config );

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if(response == null) {

logger.info("链接超时!");

}else {

if(response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String pageContent = EntityUtils.toString(entity, "utf-8");

parsePanZhaoZhaoUserSharePageContent(pageContent,panZhaoZhaoUserShareUrl);

}

}

} catch (ClientProtocolException e) {

logger.error(panZhaoZhaoUserShareUrl+"-解析异常-ClientProtocolException",e);

} catch (IOException e) {

logger.error(panZhaoZhaoUserShareUrl+"-解析异常-IOException",e);

}finally {

try {

if(response != null) {

response.close();

}

} catch (IOException e) {

logger.error(panZhaoZhaoUserShareUrl+"-解析异常-IOException",e);

}

}

logger.info("Finished crawling user share page: "+panZhaoZhaoUserShareUrl);

}

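/**
 * Parses the content of a user's share page and follows every rewritten link found on it.
 * @param pageContent HTML of the share page
 * @param panZhaoZhaoUserShareUrl the rewritten URL of the user's share home page
 */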

private static void parsePanZhaoZhaoUserSharePageContent(String pageContent, String panZhaoZhaoUserShareUrl) {

Document doc = Jsoup.parse(pageContent);

Elements aEles = doc.select("#flist li a");

if(aEles.size() == 0) {

logger.info("没有爬取到百度云地址");

return;

}

for (Element aEle : aEles) {

String ahref = aEle.attr("href");

parseUserHandledTargetUrl(PROJECT_URL + ahref);

}

// System.out.println("***********************************"+aEles.size()+"***********************"+ahref+"**********************************************************");

}

/**
 * Fetches a detail page.
 * @param handledTargetUrl URL of a page that contains the rewritten Baidu Cloud link
 */

private static void parseUserHandledTargetUrl(String handledTargetUrl) {

logger.info("开始爬取blog::"+handledTargetUrl);

if(cache.get(handledTargetUrl) != null) {

logger.info("数据库已存在该记录");

return;

}

HttpGet httpGet = new HttpGet(handledTargetUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config );

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if(response == null) {

logger.info("链接超时!");

}else {

if(response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String pageContent = EntityUtils.toString(entity, "utf-8");

// System.out.println("**********************************************************"+pageContent+"**********************************************************");

parseHandledTargetUrlPageContent(pageContent,handledTargetUrl);

}

}

} catch (ClientProtocolException e) {

logger.error(handledTargetUrl+"-解析异常-ClientProtocolException",e);

} catch (IOException e) {

logger.error(handledTargetUrl+"-解析异常-IOException",e);

}finally {

try {

if(response != null) {

response.close();

}

} catch (IOException e) {

logger.error(handledTargetUrl+"-解析异常-IOException",e);

}

}

logger.info("Finished crawling detail page: "+handledTargetUrl);

}

/**
 * Parses the detail page and extracts the rewritten Baidu Cloud link.
 * @param pageContent HTML of the detail page
 * @param handledTargetUrl URL of the detail page
 */

private static void parseHandledTargetUrlPageContent(String pageContent, String handledTargetUrl) {

Document doc = Jsoup.parse(pageContent);

Elements aEles = doc.select(".fileinfo .panurl a");

if(aEles.size() == 0) {

logger.info("没有爬取到百度云地址");

return;

}

String ahref = aEles.get(0).attr("href");

// System.out.println("**********************************************************"+ahref+"**********************************************************");

getUserBaiduYunUrl(PROJECT_URL+ahref);

}

/**
 * Fetches the page behind a rewritten Baidu Cloud link.
 * @param handledBaiduYunUrl the rewritten Baidu Cloud link
 */

private static void getUserBaiduYunUrl(String handledBaiduYunUrl) {

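logger.info("Start crawling rewritten Baidu Cloud link: "+handledBaiduYunUrl);

// Successful inserts are cached under this URL, so check it here to skip links that were already stored.

if(cache.get(handledBaiduYunUrl) != null) {

logger.info("This link is already in the database; skipping.");

return;

}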

HttpGet httpGet = new HttpGet(handledBaiduYunUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config );

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if(response == null) {

logger.info("链接超时!");

}else {

if(response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String pageContent = EntityUtils.toString(entity, "utf-8");

// System.out.println("**********************************************************"+pageContent+"**********************************************************");

parseHandledBaiduYunUrlPageContent(pageContent,handledBaiduYunUrl);

}

}

} catch (ClientProtocolException e) {

logger.error(handledBaiduYunUrl+"-解析异常-ClientProtocolException",e);

} catch (IOException e) {

logger.error(handledBaiduYunUrl+"-解析异常-IOException",e);

}finally {

try {

if(response != null) {

response.close();

}

} catch (IOException e) {

logger.error(handledBaiduYunUrl+"-解析异常-IOException",e);

}

}

logger.info("Finished crawling rewritten Baidu Cloud link: "+handledBaiduYunUrl);

}

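/**
 * Extracts the real Baidu Cloud link and stores it in the database.
 * @param pageContent HTML of the page
 * @param handledBaiduYunUrl the rewritten Baidu Cloud link
 */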

private static void parseHandledBaiduYunUrlPageContent(String pageContent, String handledBaiduYunUrl) {

Document doc = Jsoup.parse(pageContent);

Elements aEles = doc.select("#check-result-no a");

if(aEles.size() == 0) {

logger.info("没有爬取到百度云地址");

return;

}

String ahref = aEles.get(0).attr("href");

if((!ahref.contains("yun.baidu.com")) && (!ahref.contains("pan.baidu.com"))) return;

logger.info("**********************************************************"+"爬取到第"+(++total)+"个目标对象:"+ahref+"**********************************************************");

// System.out.println("爬取到第"+(++total)+"个目标对象:"+ahref);

String sql = "insert into `t_jsoup_article` values(null,?,?,null,now(),0,0,null,?,0,null)";

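// try-with-resources (Java 7+) closes the statement after the insert.

try (PreparedStatement pst = con.prepareStatement(sql)) {

// pst.setObject(1, UUID.randomUUID().toString());

// Placeholder title

pst.setObject(1, "test content");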

pst.setObject(2, ahref);

pst.setObject(3, ahref);

if(pst.executeUpdate() == 0) {

logger.info("爬取链接插入数据库失败!!!");

}else {

cache.put(new net.sf.ehcache.Element(handledBaiduYunUrl, handledBaiduYunUrl));

logger.info("爬取链接插入数据库成功!!!");

}

} catch (SQLException e) {

logger.error(ahref+"-解析异常-SQLException",e);

}

}

public static void start() {

DbUtil dbUtil = new DbUtil();

try {

con = dbUtil.getCon();

parseHomePage();

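} catch (Exception e) {

logger.error("Database connection or crawl failed",e);

} finally {

// Close the connection once the crawl has finished.

try {

if (con != null) {

con.close();

}

} catch (SQLException e) {

logger.error("Failed to close the database connection",e);

}

}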

}

public static void main(String[] args) {

start();

}

}
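The crawler builds absolute links by concatenating `PROJECT_URL` with each `href`, which only works when every `href` is root-relative. A more tolerant approach, sketched here with `java.net.URI` from the standard library, resolves each link against the URL of the page it came from. The class name `LinkResolver` and the `pan.baidu.com/s/abc` link are hypothetical.

```java
import java.net.URI;

/** Hypothetical helper: turns an href found on a page into an absolute URL. */
public class LinkResolver {

    /** Resolves href against the URL of the page on which it was found. */
    public static String resolve(String pageUrl, String href) {
        return URI.create(pageUrl).resolve(href.trim()).toString();
    }

    public static void main(String[] args) {
        String page = "http://www.13910.com/daren/";
        // Root-relative link: replaces the whole path of the page URL.
        System.out.println(resolve(page, "/u/1949795117/share/"));
        // Relative link: appended to the page's directory.
        System.out.println(resolve(page, "page-2.html"));
        // Absolute link: returned unchanged.
        System.out.println(resolve(page, "http://pan.baidu.com/s/abc"));
    }
}
```

`URI.create` throws `IllegalArgumentException` for an `href` that contains illegal characters such as spaces, so real pages may need that case handled.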

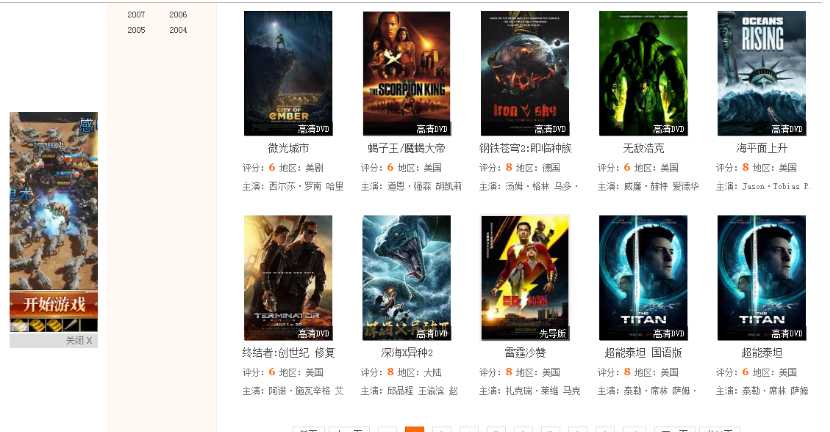

The links on this page are what the crawler collects.

Crawling the movies you want:

MovieCrawlerStarter.java

package com.zrh.crawler;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.LinkedList;

import java.util.List;

import org.apache.http.HttpEntity;

import org.apache.http.client.ClientProtocolException;

import org.apache.http.client.config.RequestConfig;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.util.EntityUtils;

import org.apache.log4j.Logger;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import com.zrh.util.DbUtil;

import com.zrh.util.PropertiesUtil;

import net.sf.ehcache.Cache;

import net.sf.ehcache.CacheManager;

import net.sf.ehcache.Status;

public class MovieCrawlerStarter {

private static Logger logger = Logger.getLogger(MovieCrawlerStarter.class);

private static String URL = "http://www.8gw.com/";

private static String PROJECT_URL = "http://www.8gw.com";

private static Connection con;

private static CacheManager manager;

private static Cache cache;

private static CloseableHttpClient httpClient;

private static long total = 0;

/**
 * Builds the 52 list-page URLs to crawl.
 *
 * @return the list-page URLs
 */

private static List<String> getUrls() {

List<String> list = new LinkedList<String>();

list.add("http://www.8gw.com/8gli/index8.html");

for (int i = 2; i < 53; i++) {

list.add("http://www.8gw.com/8gli/index8_" + i + ".html");

}

return list;

}

/**
 * Fetches the content of a list page.
 *
 * @param url URL of the list page
 */

private static void parseUrl(String url) {

logger.info("开始爬取系列列表::" + url);

HttpGet httpGet = new HttpGet(url);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info("链接超时!");

} else {

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String pageContent = EntityUtils.toString(entity, "GBK");

parsePageContent(pageContent, url);

}

}

} catch (ClientProtocolException e) {

logger.error(url + "-解析异常-ClientProtocolException", e);

} catch (IOException e) {

logger.error(url + "-解析异常-IOException", e);

} finally {

try {

if (response != null) {

response.close();

}

} catch (IOException e) {

logger.error(url + "-解析异常-IOException", e);

}

}

logger.info("结束爬取系列列表::" + url);

}

/**
 * Extracts the links to individual movies from the current list page.
 * @param pageContent HTML of the list page
 * @param url URL of the list page
 */

private static void parsePageContent(String pageContent, String url) {

// System.out.println("****************" + url + "***********************");

Document doc = Jsoup.parse(pageContent);

Elements liEles = doc.select(".span_2_800 #list_con li");

for (Element liEle : liEles) {

String movieUrl = liEle.select(".info a").attr("href");

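if (null == movieUrl || "".equals(movieUrl)) {

logger.info("Movie link is empty; skipping.");

continue;

}

// Use the absolute URL as the cache key, because that is the key stored after a successful insert.

String fullMovieUrl = PROJECT_URL + movieUrl;

if(cache.get(fullMovieUrl) != null) {

logger.info("This movie is already in the database; skipping.");

continue;

}

parseSingleMovieUrl(fullMovieUrl);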

}

}

/**
 * Fetches a single movie page.
 * @param movieUrl absolute URL of the movie page
 */

private static void parseSingleMovieUrl(String movieUrl) {

logger.info("Start crawling movie page: " + movieUrl);

// Reuse the shared httpClient created in main; creating a new one per movie would leak clients.

HttpGet httpGet = new HttpGet(movieUrl);

RequestConfig config = RequestConfig.custom().setConnectTimeout(5000).setSocketTimeout(8000).build();

httpGet.setConfig(config);

CloseableHttpResponse response = null;

try {

response = httpClient.execute(httpGet);

if (response == null) {

logger.info(movieUrl + ":爬取无响应");

return;

}

if (response.getStatusLine().getStatusCode() == 200) {

HttpEntity entity = response.getEntity();

String blogContent = EntityUtils.toString(entity, "GBK");

parseSingleMovieContent(blogContent, movieUrl);

}

} catch (ClientProtocolException e) {

logger.error(movieUrl + "-ClientProtocolException", e);

} catch (IOException e) {

logger.error(movieUrl + "-IOException", e);

} finally {

try {

if (response != null) {

response.close();

}

} catch (IOException e) {

logger.error(movieUrl + "-IOException", e);

}

}

logger.info("结束爬取影片网页:" + movieUrl);

}

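/**
 * Parses the main content of a movie page (movie name, description, and image address) and inserts it into the database.
 * @param pageContent HTML of the movie page
 * @param movieUrl URL of the movie page
 */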

private static void parseSingleMovieContent(String pageContent, String movieUrl) {

// System.out.println("****************" + movieUrl + "***********************");

Document doc = Jsoup.parse(pageContent);

Elements divEles = doc.select(".wrapper .main .moviedteail");

// .wrapper .main .moviedteail .moviedteail_tt h1

// .wrapper .main .moviedteail .moviedteail_list .moviedteail_list_short a

// .wrapper .main .moviedteail .moviedteail_img img

Elements h1Eles = divEles.select(".moviedteail_tt h1");

if (h1Eles.size() == 0) {

logger.info("影片名字为空,不插入数据库!");

return;

}

String mname = h1Eles.get(0).html();

Elements aEles = divEles.select(".moviedteail_list .moviedteail_list_short a");

if (aEles.size() == 0) {

logger.info("影片描述为空,不插入数据库!");

return;

}

String mdesc = aEles.get(0).html();

Elements imgEles = divEles.select(".moviedteail_img img");

if (imgEles.isEmpty()) {

logger.info("Movie image is missing; not inserting into the database.");

return;

}

String mimg = imgEles.attr("src");

String sql = "insert into movie(mname,mdesc,mimg,mlink) values(?,?,?,99)";

System.out.println("****************" + mname + "***********************");

System.out.println("****************" + mdesc + "***********************");

System.out.println("****************" + mimg + "***********************");

// try-with-resources (Java 7+) closes the statement after the insert.

try (PreparedStatement pst = con.prepareStatement(sql)) {

pst.setObject(1, mname);

pst.setObject(2, mdesc);

pst.setObject(3, mimg);

if(pst.executeUpdate() == 0) {

logger.info("爬取影片信息插入数据库失败");

}else {

cache.put(new net.sf.ehcache.Element(movieUrl, movieUrl));

logger.info("爬取影片信息插入数据库成功");

}

} catch (SQLException e) {

logger.error("数据异常-SQLException:",e);

}

}

public static void main(String[] args) {

manager = CacheManager.create(PropertiesUtil.getValue("ehcacheXmlPath"));

cache = manager.getCache("8gli_movies");

httpClient = HttpClients.createDefault();

DbUtil dbUtil = new DbUtil();

try {

con = dbUtil.getCon();

List<String> urls = getUrls();

for (String url : urls) {

try {

parseUrl(url);

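} catch (Exception e) {

// Log the failure and continue with the next list page instead of silently swallowing the error.

logger.error("Failed to crawl list page: " + url, e);

}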

}

} catch (Exception e1) {

logger.error("Database connection failed", e1);

} finally {

try {

if (httpClient != null) {

httpClient.close();

}

if (con != null) {

con.close();

}

} catch (IOException e) {

logger.error("网络连接关闭异常-IOException:",e);

} catch (SQLException e) {

logger.error("数据关闭异常-SQLException:",e);

}

}

// Finally, flush the cache to disk

if (cache.getStatus() == Status.STATUS_ALIVE) {

cache.flush();

}

manager.shutdown();

}

}
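This crawler decodes pages as GBK while the earlier ones use UTF-8, so the charset is hard-coded per site. When a server declares its charset in the `Content-Type` header, that value could be used instead. The following sketch uses only the standard library to parse such a header value and fall back to a default. `CharsetSniffer` and `fromContentType` are hypothetical names, and the sketch does not inspect `<meta>` tags in the page itself.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

/** Hypothetical helper: picks a charset from a Content-Type header value. */
public class CharsetSniffer {

    /** Returns the declared charset if it is present and supported, otherwise the fallback. */
    public static Charset fromContentType(String contentType, Charset fallback) {
        if (contentType == null) {
            return fallback;
        }
        for (String part : contentType.split(";")) {
            String param = part.trim();
            if (param.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                String name = param.substring("charset=".length()).trim().replace("\"", "");
                try {
                    if (Charset.isSupported(name)) {
                        return Charset.forName(name);
                    }
                } catch (IllegalArgumentException e) {
                    // Syntactically illegal charset name: use the fallback.
                    return fallback;
                }
            }
        }
        return fallback;
    }

    public static void main(String[] args) {
        System.out.println(fromContentType("text/html; charset=utf-8", StandardCharsets.ISO_8859_1));
        System.out.println(fromContentType("text/html", StandardCharsets.ISO_8859_1));
    }
}
```

The fallback still matters for sites that omit the charset parameter; for those the per-site default used in this crawler remains necessary.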
