好像还挺好玩的GAN2——Keras搭建DCGAN利用深度卷积神经网络实现图片生成

注意事项

该博客已经有重置版啦,重制版代码更清晰,效果更好一些:

好像还挺好玩的GAN2——Keras搭建DCGAN利用深度卷积神经网络实现图片生成

学习前言

我又死了我又死了我又死了!

什么是DCGAN

DCGAN的全称是Deep Convolutional Generative Adversarial Networks ,

意即深度卷积对抗生成网络。

它是由Alec Radford在论文Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks中提出的。

实际上它是在GAN的基础上增加深度卷积网络结构。

神经网络构建

1、Generator

生成网络的目标是输入一行正态分布随机数,生成mnist手写体图片,因此它的输入是一个长度为N的一维的向量,输出一个28,28,1维的图片。

与普通GAN不同的是,生成网络是卷积神经网络。

def build_generator(self):

model = Sequential()

# 先全连接到32*7*7的维度上

model.add(Dense(32 * 7 * 7, activation="relu", input_dim=self.latent_dim))

# reshape成特征层的样式

model.add(Reshape((7, 7, 32)))

# 7, 7, 64

model.add(Conv2D(64, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 上采样

# 7, 7, 64 -> 14, 14, 64

model.add(UpSampling2D())

model.add(Conv2D(128, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 上采样

# 14, 14, 128 -> 28, 28, 64

model.add(UpSampling2D())

model.add(Conv2D(64, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 28, 28, 64 -> 28, 28, 1

model.add(Conv2D(self.channels, kernel_size=3, padding="same"))

model.add(Activation("tanh"))

model.summary()

noise = Input(shape=(self.latent_dim,))

img = model(noise)

return Model(noise, img)

2、Discriminator

判别模型的目的是根据输入的图片判断出真伪。因此它的输入一个28,28,1维的图片,输出是0到1之间的数,1代表判断这个图片是真的,0代表判断这个图片是假的。

与普通GAN不同的是,它使用的是卷积神经网络。

def build_discriminator(self):

model = Sequential()

# 28, 28, 1 -> 14, 14, 32

model.add(Conv2D(32, kernel_size=3, strides=2, input_shape=self.img_shape, padding="same"))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

# 14, 14, 32 -> 7, 7, 64

model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

# 7, 7, 64 -> 4, 4, 128

model.add(ZeroPadding2D(((0,1),(0,1))))

model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(GlobalAveragePooling2D())

# 全连接

model.add(Dense(1, activation='sigmoid'))

model.summary()

img = Input(shape=self.img_shape)

validity = model(img)

return Model(img, validity)

训练思路

DCGAN的训练和GAN一样,分为如下几个步骤:

1、随机选取batch_size个真实的图片。

2、随机生成batch_size个N维向量,传入到Generator中生成batch_size个虚假图片。

3、真实图片的label为1,虚假图片的label为0,将真实图片和虚假图片当作训练集传入到Discriminator中进行训练。

4、将虚假图片的Discriminator预测结果与1的对比作为loss对Generator进行训练(与1对比的意思是,如果Discriminator将虚假图片判断为1,说明这个生成的图片很“真实”)。

实现全部代码

from __future__ import print_function, division

from keras.datasets import mnist

from keras.layers import Input, Dense, Reshape, Flatten, Dropout

from keras.layers import BatchNormalization, Activation, ZeroPadding2D, GlobalAveragePooling2D

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.convolutional import UpSampling2D, Conv2D

from keras.models import Sequential, Model

from keras.optimizers import Adam

import matplotlib.pyplot as plt

import sys

import os

import numpy as np

class DCGAN():

def __init__(self):

# 输入shape

self.img_rows = 28

self.img_cols = 28

self.channels = 1

self.img_shape = (self.img_rows, self.img_cols, self.channels)

# 分十类

self.num_classes = 10

self.latent_dim = 100

# adam优化器

optimizer = Adam(0.0002, 0.5)

# 判别模型

self.discriminator = self.build_discriminator()

self.discriminator.compile(loss=['binary_crossentropy'],

optimizer=optimizer,

metrics=['accuracy'])

# 生成模型

self.generator = self.build_generator()

# conbine是生成模型和判别模型的结合

# 判别模型的trainable为False

# 用于训练生成模型

z = Input(shape=(self.latent_dim,))

img = self.generator(z)

self.discriminator.trainable = False

valid = self.discriminator(img)

self.combined = Model(z, valid)

self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

def build_generator(self):

model = Sequential()

# 先全连接到64*7*7的维度上

model.add(Dense(32 * 7 * 7, activation="relu", input_dim=self.latent_dim))

# reshape成特征层的样式

model.add(Reshape((7, 7, 32)))

# 7, 7, 64

model.add(Conv2D(64, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 上采样

# 7, 7, 64 -> 14, 14, 64

model.add(UpSampling2D())

model.add(Conv2D(128, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 上采样

# 14, 14, 128 -> 28, 28, 64

model.add(UpSampling2D())

model.add(Conv2D(64, kernel_size=3, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(Activation("relu"))

# 上采样

# 28, 28, 64 -> 28, 28, 1

model.add(Conv2D(self.channels, kernel_size=3, padding="same"))

model.add(Activation("tanh"))

model.summary()

noise = Input(shape=(self.latent_dim,))

img = model(noise)

return Model(noise, img)

def build_discriminator(self):

model = Sequential()

# 28, 28, 1 -> 14, 14, 32

model.add(Conv2D(32, kernel_size=3, strides=2, input_shape=self.img_shape, padding="same"))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

# 14, 14, 32 -> 7, 7, 64

model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

# 7, 7, 64 -> 4, 4, 128

model.add(ZeroPadding2D(((0,1),(0,1))))

model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(GlobalAveragePooling2D())

# 全连接

model.add(Dense(1, activation='sigmoid'))

model.summary()

img = Input(shape=self.img_shape)

validity = model(img)

return Model(img, validity)

def train(self, epochs, batch_size=128, save_interval=50):

# 载入数据

(X_train, _), (_, _) = mnist.load_data()

# 归一化

X_train = X_train / 127.5 - 1.

X_train = np.expand_dims(X_train, axis=3)

# Adversarial ground truths

valid = np.ones((batch_size, 1))

fake = np.zeros((batch_size, 1))

for epoch in range(epochs):

# --------------------- #

# 训练判别模型

# --------------------- #

idx = np.random.randint(0, X_train.shape[0], batch_size)

imgs = X_train[idx]

noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

gen_imgs = self.generator.predict(noise)

# 训练并计算loss

d_loss_real = self.discriminator.train_on_batch(imgs, valid)

d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

# ---------------------

# 训练生成模型

# ---------------------

g_loss = self.combined.train_on_batch(noise, valid)

print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

if epoch % save_interval == 0:

self.save_imgs(epoch)

def save_imgs(self, epoch):

r, c = 5, 5

noise = np.random.normal(0, 1, (r * c, self.latent_dim))

gen_imgs = self.generator.predict(noise)

gen_imgs = 0.5 * gen_imgs + 0.5

fig, axs = plt.subplots(r, c)

cnt = 0

for i in range(r):

for j in range(c):

axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

axs[i,j].axis('off')

cnt += 1

fig.savefig("images/mnist_%d.png" % epoch)

plt.close()

if __name__ == '__main__':

if not os.path.exists("./images"):

os.makedirs("./images")

dcgan = DCGAN()

dcgan.train(epochs=20000, batch_size=256, save_interval=50)

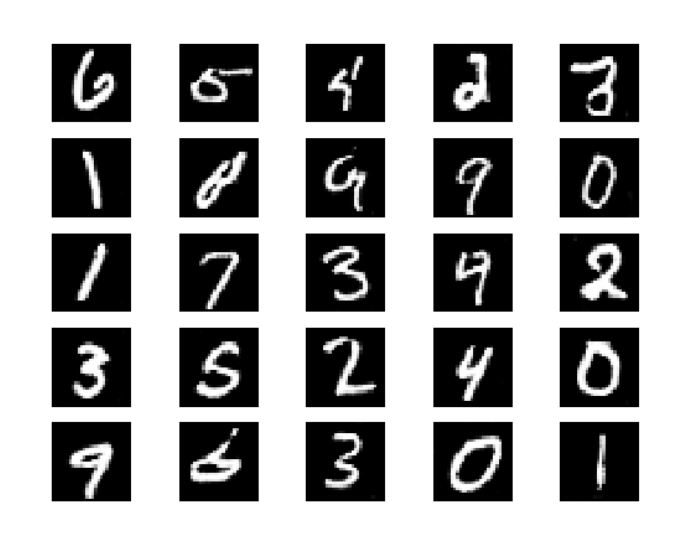

实现效果为:

今天的文章好像还挺好玩的GAN2——Keras搭建DCGAN利用深度卷积神经网络实现图片生成分享到此就结束了,感谢您的阅读。

版权声明:本文内容由互联网用户自发贡献,该文观点仅代表作者本人。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如发现本站有涉嫌侵权/违法违规的内容, 请发送邮件至 举报,一经查实,本站将立刻删除。

如需转载请保留出处:https://bianchenghao.cn/61789.html