一、tracers简介

在linux的kernel/trace目录下,有一些用于调试内核的工具,也就是tracers,包括:

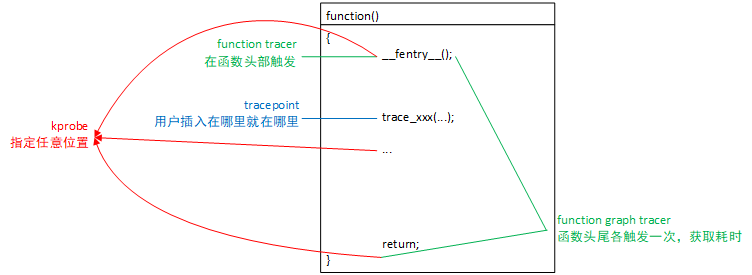

- tracepoint,或者也叫做event trace。tracepoint需要手动在代码中增加,默认情况下功能类似于printk,就是用于打印信息。但在trace框架中,你可以通过操作/sys/kernel/debug/tracing下的对应文件来控制打印的开关、过滤(例如只打印特点值时的信息),也可以配perf、ebpf进行使用。

- ftrace/function tracer。function tracer不需要手动添加,内核中的大部分函数都可以使用function trace,指定特定的function进行trace的方法是echo 函数名 > /sys/kernel/debug/tracing/set_ftrace_filter。可以配置current_tracer,不同的tracer会打印不同的信息,可以打印的信息有:进程、cpu号、时间戳、函数名、函数调用栈等。

- function graph tracer。和function trace一样,但是可以打印出函数的耗时,甚至是函数函数调用的其他函数的耗时。

- kprobe。可以类似设置gdb的断点一样去设置kprobe,可以设置在任意位置。

tracer在函数中的位置:

二、tracers的使用

tracepoint的使用例子

# 关闭trace功能

echo 0 > /sys/kernel/debug/tracing/tracing_on

# 清空trace的buffer内容

echo > /sys/kernel/debug/tracing/trace

# 开启block_rq_issue的trace

echo 1 > /sys/kernel/debug/tracing/events/block/block_rq_issue/enable

# 设置block_rq_issue的filter,dev==0x8000f0,也就是major=8,minor=240

echo dev==0x8000f0 > /sys/kernel/debug/tracing/events/block/block_rq_issue/filter

# 开启block_rq_complete的trace

echo 1 > /sys/kernel/debug/tracing/events/block/block_rq_complete/enable

# 设置block_rq_issue的filter,dev==0x8000f0,也就是major=8,minor=240

echo dev==0x8000f0 > /sys/kernel/debug/tracing/events/block/block_rq_complete/filter

# 开启trace功能

echo 1 > /sys/kernel/debug/tracing/tracing_on

执行如上命令,开启了block_rq_issue与block_rq_complete这两个event的trace,然后执行dd命令对硬盘sdp(8,240)进行数据写入,就会执行到这两个event:

dd if=/dev/zero of=/dev/sdp bs=1048576 count=10

然后我们cat这个文件:/sys/kernel/debug/tracing/trace

root:/# cat /sys/kernel/debug/tracing/trace

# tracer: nop

#

# entries-in-buffer/entries-written: 32/32 #P:4

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

dd-8289 [001] .... 77475.979607: block_rq_issue: 8,240 WS 688128 () 0 + 1344 [dd]

<idle>-0 [003] ..s. 77475.981651: block_rq_complete: 8,240 WS () 0 + 1344 [0]

kworker/3:1H-171 [003] .... 77475.981715: block_rq_issue: 8,240 WS 688128 () 1344 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.001032: block_rq_complete: 8,240 WS () 1344 + 1344 [0]

dd-8289 [001] .... 77476.001158: block_rq_issue: 8,240 WS 688128 () 2688 + 1344 [dd]

<idle>-0 [003] ..s. 77476.004895: block_rq_complete: 8,240 WS () 2688 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.004924: block_rq_issue: 8,240 WS 688128 () 4032 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.008818: block_rq_complete: 8,240 WS () 4032 + 1344 [0]

dd-8289 [001] .... 77476.008939: block_rq_issue: 8,240 WS 688128 () 5376 + 1344 [dd]

<idle>-0 [003] ..s. 77476.013850: block_rq_complete: 8,240 WS () 5376 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.013879: block_rq_issue: 8,240 WS 688128 () 6720 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.017696: block_rq_complete: 8,240 WS () 6720 + 1344 [0]

dd-8289 [001] .... 77476.017816: block_rq_issue: 8,240 WS 688128 () 8064 + 1344 [dd]

<idle>-0 [003] ..s. 77476.022776: block_rq_complete: 8,240 WS () 8064 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.022804: block_rq_issue: 8,240 WS 688128 () 9408 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.026638: block_rq_complete: 8,240 WS () 9408 + 1344 [0]

dd-8289 [001] .... 77476.026755: block_rq_issue: 8,240 WS 688128 () 10752 + 1344 [dd]

<idle>-0 [003] ..s. 77476.030496: block_rq_complete: 8,240 WS () 10752 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.030540: block_rq_issue: 8,240 WS 688128 () 12096 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.035533: block_rq_complete: 8,240 WS () 12096 + 1344 [0]

dd-8289 [001] .... 77476.035647: block_rq_issue: 8,240 WS 688128 () 13440 + 1344 [dd]

<idle>-0 [003] ..s. 77476.039417: block_rq_complete: 8,240 WS () 13440 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.039445: block_rq_issue: 8,240 WS 688128 () 14784 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.043302: block_rq_complete: 8,240 WS () 14784 + 1344 [0]

dd-8289 [001] .... 77476.043425: block_rq_issue: 8,240 WS 688128 () 16128 + 1344 [dd]

<idle>-0 [003] ..s. 77476.048337: block_rq_complete: 8,240 WS () 16128 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.048365: block_rq_issue: 8,240 WS 688128 () 17472 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.052200: block_rq_complete: 8,240 WS () 17472 + 1344 [0]

dd-8289 [001] .... 77476.052317: block_rq_issue: 8,240 WS 688128 () 18816 + 1344 [dd]

<idle>-0 [003] ..s. 77476.056061: block_rq_complete: 8,240 WS () 18816 + 1344 [0]

kworker/3:1H-171 [003] .... 77476.056143: block_rq_issue: 8,240 WS 163840 () 20160 + 320 [kworker/3:1H]

<idle>-0 [003] ..s. 77476.062364: block_rq_complete: 8,240 WS () 20160 + 320 [0]

我们跟踪的这两个event,可以在内核中去搜索trace_block_rq_issue和trace_block_rq_complete去看event是加在哪个位置的。block_rq_issue是在下发block io的请求时执行到,block_rq_complete是在io请求完成后执行到,从打印的内容中我们可以获取一些信息,例如我们可以通过计算两者的时间差得到这次block io的耗时。

function tracer的使用例子

我们还是以block io请求的下发与结束为例。

首先在内核代码中找到trace_block_rq_issue的调用位置,是blk_mq_start_request函数:

void blk_mq_start_request(struct request *rq)

{

struct request_queue *q = rq->q;

trace_block_rq_issue(q, rq);

if (test_bit(QUEUE_FLAG_STATS, &q->queue_flags)) {

rq->io_start_time_ns = ktime_get_ns();

rq->stats_sectors = blk_rq_sectors(rq);

rq->rq_flags |= RQF_STATS;

rq_qos_issue(q, rq);

}

WARN_ON_ONCE(blk_mq_rq_state(rq) != MQ_RQ_IDLE);

blk_add_timer(rq);

WRITE_ONCE(rq->state, MQ_RQ_IN_FLIGHT);

#ifdef CONFIG_BLK_DEV_INTEGRITY

if (blk_integrity_rq(rq) && req_op(rq) == REQ_OP_WRITE)

q->integrity.profile->prepare_fn(rq);

#endif

}

同样的,我们找到block_rq_complete这个event所属的函数是blk_update_request,那么我们就使用function trace去跟踪这两个函数:blk_mq_start_request和blk_update_request,我们看看怎么操作。

我们先确认一下这两个函数能否跟踪,在/sys/kernel/debug/tracing/available_filter_functions中查看是否包含这两个函数:

root:/# cat available_filter_functions | grep blk_mq_start_request

blk_mq_start_request

root:/# cat available_filter_functions | grep blk_update_request

blk_update_request

然后执行如下命令开启function trace:

# 关闭trace功能

echo 0 > /sys/kernel/debug/tracing/tracing_on

# 清空trace的buffer内容

echo > /sys/kernel/debug/tracing/trace

# 设置filter,只跟踪blk_update_request blk_mq_start_request

echo "blk_update_request blk_mq_start_request" > /sys/kernel/debug/tracing/set_ftrace_filter

# 设置使用的tracer

echo function > current_tracer

# 开启trace功能

echo 1 > /sys/kernel/debug/tracing/tracing_on

# tracer: function

#

# entries-in-buffer/entries-written: 98/98 #P:4

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

......

sh-10582 [003] .... 79174.296310: blk_mq_start_request <-scsi_queue_rq

<idle>-0 [001] ..s. 79174.297990: blk_update_request <-scsi_end_request

kworker/1:1H-166 [001] .... 79174.297996: blk_mq_start_request <-scsi_queue_rq

<idle>-0 [001] ..s. 79174.298878: blk_update_request <-scsi_end_request

dd-10585 [001] .... 79174.711883: blk_mq_start_request <-scsi_queue_rq

ksoftirqd/1-20 [001] ..s. 79174.711891: blk_update_request <-scsi_end_request

kworker/u8:1-10333 [002] .... 79174.715406: blk_mq_start_request <-scsi_queue_rq

ksoftirqd/2-26 [002] ..s. 79174.715414: blk_update_request <-scsi_end_request

dd-10585 [001] .... 79174.715473: blk_mq_start_request <-scsi_queue_rq

dd-10585 [001] .... 79174.715473: block_rq_issue: 8,240 WS 688128 () 0 + 1344 [dd]

<idle>-0 [003] ..s. 79174.717521: blk_update_request <-scsi_end_request

<idle>-0 [003] ..s. 79174.717522: block_rq_complete: 8,240 WS () 0 + 1344 [0]

kworker/3:1H-171 [003] .... 79174.717561: blk_mq_start_request <-scsi_queue_rq

kworker/3:1H-171 [003] .... 79174.717561: block_rq_issue: 8,240 WS 688128 () 1344 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 79174.719552: blk_update_request <-scsi_end_request

<idle>-0 [003] ..s. 79174.719552: block_rq_complete: 8,240 WS () 1344 + 1344 [0]

......

dd-10585 [001] .... 79174.781004: blk_mq_start_request <-scsi_queue_rq

dd-10585 [001] .... 79174.781004: block_rq_issue: 8,240 WS 688128 () 16128 + 1344 [dd]

<idle>-0 [003] ..s. 79174.784723: blk_update_request <-scsi_end_request

<idle>-0 [003] ..s. 79174.784724: block_rq_complete: 8,240 WS () 16128 + 1344 [0]

kworker/3:1H-171 [003] .... 79174.784753: blk_mq_start_request <-scsi_queue_rq

kworker/3:1H-171 [003] .... 79174.784753: block_rq_issue: 8,240 WS 688128 () 17472 + 1344 [kworker/3:1H]

<idle>-0 [003] ..s. 79174.788583: blk_update_request <-scsi_end_request

<idle>-0 [003] ..s. 79174.788583: block_rq_complete: 8,240 WS () 17472 + 1344 [0]

......

几点说明:

- blk_mq_start_request与blk_update_request并没有设置像tracepoint的filter,所以会有一些其他设备的打印。

- 可以看到blk_mq_start_request打印后,紧跟着block_rq_issue;blk_update_request后紧跟着block_rq_complete。

我们还可以使用stack trace:

echo 1 > /sys/kernel/debug/tracing/options/func_stack_trace

同样是从/sys/kernel/debug/tracing/trace文件中,我们可以得到以下输出,打印了跟踪函数的调用栈:

sh-11830 [001] .... 80094.295001: blk_mq_start_request <-scsi_queue_rq

sh-11830 [001] .... 80094.295021: <stack trace>

=> ftrace_call

=> blk_mq_start_request

=> scsi_queue_rq

=> blk_mq_dispatch_rq_list

=> __blk_mq_do_dispatch_sched

=> __blk_mq_sched_dispatch_requests

=> blk_mq_sched_dispatch_requests

=> __blk_mq_run_hw_queue

=> __blk_mq_delay_run_hw_queue

=> blk_mq_run_hw_queue

=> blk_mq_sched_insert_requests

=> blk_mq_flush_plug_list

=> blk_flush_plug_list

=> blk_mq_submit_bio

=> submit_bio_noacct

=> submit_bio

=> mpage_readahead

=> blkdev_readahead

=> read_pages

=> page_cache_ra_unbounded

=> do_page_cache_ra

=> ondemand_readahead

=> page_cache_sync_ra

=> generic_file_buffered_read

=> generic_file_read_iter

=> blkdev_read_iter

=> new_sync_read

=> vfs_read

=> ksys_read

=> __x64_sys_read

=> do_syscall_64

=> entry_SYSCALL_64_after_hwframe

function graph tracer的使用例子

我们以blk_mq_start_request为例来看一下function graph tracer的使用。

我们先把前面测试中开启的trace关闭掉,并清空buffer数据:

echo 0 > /sys/kernel/debug/tracing/events/enable

echo > /sys/kernel/debug/tracing/set_ftrace_filter

echo 0 > /sys/kernel/debug/tracing/tracing_on

echo > /sys/kernel/debug/tracing/trace

然后开启对函数blk_mq_start_request的function graph trace:

# 使用function_graph作为tracer

echo function_graph > /sys/kernel/debug/tracing/current_tracer

# 设置跟踪的函数为blk_mq_start_request

echo blk_mq_start_request > /sys/kernel/debug/tracing/set_graph_function

# 设置max_graph_depth为0,不限制跟踪的深度

echo 0 > /sys/kernel/debug/tracing/max_graph_depth

# 重新开启trace

echo 1 > /sys/kernel/debug/tracing/tracing_on

再看输出:

# tracer: function_graph

#

# CPU DURATION FUNCTION CALLS

# | | | | | | |

3) 1.075 us | finish_task_switch();

1) | blk_mq_start_request() {

1) 0.207 us | ktime_get();

1) | __rq_qos_issue() {

1) 0.163 us | wbt_issue();

1) 0.458 us | }

1) | blk_add_timer() {

1) | mod_timer() {

1) | lock_timer_base() {

1) 0.095 us | _raw_spin_lock_irqsave();

1) 0.267 us | }

1) 0.081 us | detach_if_pending();

1) 0.082 us | get_nohz_timer_target();

1) 0.082 us | _raw_spin_lock();

1) | internal_add_timer() {

1) 0.091 us | calc_wheel_index();

1) 0.195 us | enqueue_timer();

1) 0.548 us | }

1) 0.085 us | _raw_spin_unlock_irqrestore();

1) 1.809 us | }

1) 2.160 us | }

1) 4.340 us | }

1) | blk_mq_start_request() {

1) 0.138 us | ktime_get();

1) | __rq_qos_issue() {

1) 0.131 us | wbt_issue();

1) 0.347 us | }

1) 0.245 us | blk_add_timer();

1) 1.978 us | }

------------------------------------------

1) sh-426 => kworker-166

------------------------------------------

1) 0.193 us | finish_task_switch();

1) | blk_mq_start_request() {

1) 0.125 us | ktime_get();

1) | __rq_qos_issue() {

1) 0.088 us | wbt_issue();

1) 0.279 us | }

1) 0.084 us | blk_add_timer();

1) 0.859 us | }

------------------------------------------

1) kworker-166 => sh-434

------------------------------------------

可以看到,blk_mq_start_request这个函数的总耗时在函数的结束位置’}’处打印出来了,其调用的各个函数的耗时也都被打印出来了,非常详细。

我们再看看设置max_graph_depth后有什么区别:

echo 1 > /sys/kernel/debug/tracing/max_graph_depth

输出变成:

1) 0.392 us | blk_mq_start_request();

------------------------------------------

3) sh-636 => sh-691

------------------------------------------

3) 2.409 us | blk_mq_start_request();

3) 1.258 us | blk_mq_start_request();

1) 0.982 us | blk_mq_start_request();

只打印了blk_mq_start_request的耗时。

kprobe的使用例子

kprobe有两种:

- 第一种是传统的使用int3断点指令来实现的,可以加在代码的任意位置。使用这种方式一般需要编写内核module,进行需要的probe的注册,将module加载之后才能插入kprobe。

- 第二种是基于ftrace做的,不需要编写module,与其他tracer一样直接操作/sys/kernel/debug/tracing/下的文件就可以控制kprobe的开关,但是应该无法指定在任意位置插入kprobe,只能在函数开头/结尾处。

由于第一种方法使用比较麻烦,本文就只介绍第二种的用法了。

还是以blk_mq_start_request函数为例:

# 插入对blk_mq_start_request函数的kprobe

echo 'p:my_test blk_mq_start_request' > /sys/kernel/debug/tracing/kprobe_events

# 插入kprobe后,会作为一个event在 /sys/kernel/debug/tracing/events/kprobe/下创建my_test目录。

# my_test这个名字是在echo到/sys/kernel/debug/tracing/kprobe_events文件中时指定的。

echo 1 > /sys/kernel/debug/tracing/events/kprobes/my_test/enable

会输出以下打印:

# tracer: nop

#

# entries-in-buffer/entries-written: 13/13 #P:4

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

sh-6034 [000] .... 3294.372995: my_test: (blk_mq_start_request+0x0/0x100)

sh-6034 [000] .... 3294.373357: my_test: (blk_mq_start_request+0x0/0x100)

kworker/1:1H-171 [001] .... 3294.375052: my_test: (blk_mq_start_request+0x0/0x100)

sh-6040 [002] .... 3299.374970: my_test: (blk_mq_start_request+0x0/0x100)

sh-6040 [002] .... 3299.375407: my_test: (blk_mq_start_request+0x0/0x100)

kworker/1:1H-171 [001] .... 3299.377144: my_test: (blk_mq_start_request+0x0/0x100)

dd-6047 [001] .... 3303.510966: my_test: (blk_mq_start_request+0x0/0x100)

kworker/u8:7-147 [002] .... 3303.511809: my_test: (blk_mq_start_request+0x0/0x100)

dd-6047 [001] .... 3303.511844: my_test: (blk_mq_start_request+0x0/0x100)

kworker/3:1H-416 [003] .... 3303.514001: my_test: (blk_mq_start_request+0x0/0x100)

我们还可以修改echo到kprobe_events的内容,来打印更多的信息,例如:

# 要修改kprobe_events,要先关闭已开启的kprobe_events

echo 0 > /sys/kernel/debug/tracing/events/kprobes/my_test/enable

# 清空之前的缓存数据

echo > /sys/kernel/debug/tracing/trace

# 打印了以下内容:

# 1.[request=$arg1]

# blk_mq_start_request函数的第一个参数,也就是struct request *rq

# 2.[+40($arg1):u32]

# 第一个参数,也就是struct request *rq,再+40后作为u32*,再取值,也就是rq->__data_len

# 3.[+48($arg1):u64]

# rq->__sector

echo 'p:my_test blk_mq_start_request request=$arg1 +40($arg1):u32 +48($arg1):u64' > /sys/kernel/debug/tracing/kprobe_events

echo 1 > /sys/kernel/debug/tracing/events/kprobes/my_test/enable

我们同时开启了block_rq_issue这个event的输出,进行对比验证:

# tracer: nop

#

# entries-in-buffer/entries-written: 20/20 #P:4

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

sh-11183 [000] .... 7129.373391: my_test: (blk_mq_start_request+0x0/0x100) request=0xffff8fd742883c00 arg2=16384 arg3=2048

sh-11183 [000] .... 7129.373392: block_rq_issue: 70,64 RA 16384 () 2048 + 32 [sh]

sh-11183 [000] .... 7129.373848: my_test: (blk_mq_start_request+0x0/0x100) request=0xffff8fd742883f00 arg2=819200 arg3=77824

sh-11183 [000] .... 7129.373850: block_rq_issue: 70,64 RA 819200 () 77824 + 1600 [sh]

kworker/1:1H-171 [001] .... 7129.375853: my_test: (blk_mq_start_request+0x0/0x100) request=0xffff8fd742884200 arg2=229376 arg3=79424

kworker/1:1H-171 [001] .... 7129.375855: block_rq_issue: 70,64 RA 229376 () 79424 + 448 [kworker/1:1H]

dd-11189 [001] .... 7132.463146: my_test: (blk_mq_start_request+0x0/0x100) request=0xffff8fd742a39300 arg2=0 arg3=18446744073709551615

dd-11189 [001] .... 7132.463148: block_rq_issue: 0,0 N 0 () 0 + 0 [dd]

......

我们找一个对比验证一下,my_test的输出:

request=0xffff8fd742883c00 arg2=16384 arg3=2048

block_rq_issue的输出:

70,64 RA 16384 () 2048 + 32 [sh]

长度16384是相等的,sector是2048页式一样的。

echo到kprobe_events的格式在trace_kprobe_create函数中的注释中有说明:

static int trace_kprobe_create(int argc, const char *argv[])

{

/*

* Argument syntax:

* - Add kprobe:

* p[:[GRP/]EVENT] [MOD:]KSYM[+OFFS]|KADDR [FETCHARGS]

* - Add kretprobe:

* r[MAXACTIVE][:[GRP/]EVENT] [MOD:]KSYM[+0] [FETCHARGS]

* Or

* p:[GRP/]EVENT] [MOD:]KSYM[+0]%return [FETCHARGS]

*

* Fetch args:

* $retval : fetch return value

* $stack : fetch stack address

* $stackN : fetch Nth of stack (N:0-)

* $comm : fetch current task comm

* @ADDR : fetch memory at ADDR (ADDR should be in kernel)

* @SYM[+|-offs] : fetch memory at SYM +|- offs (SYM is a data symbol)

* %REG : fetch register REG

* Dereferencing memory fetch:

* +|-offs(ARG) : fetch memory at ARG +|- offs address.

* Alias name of args:

* NAME=FETCHARG : set NAME as alias of FETCHARG.

* Type of args:

* FETCHARG:TYPE : use TYPE instead of unsigned long.

*/

另外,我们设置要插入kprobe的位置,是由参数[MOD:]KSYM[+OFFS]指定的,我们上面的例子就是blk_mq_start_request,也就是只有KSYM。我们传入的参数最前面是p,也可以是r,如果是p就是函数开头,r就是函数的结束位置,这两个位置是明确的,比较常用。如果有需要指定其他位置的需求,也可以加上[+OFFS],但要能够对这个通过OFFS指定的代码位置比较了解。

三、tracer的外围工具

上面的使用例子,我们只是用了/sys/kernel/debug/tracing,也就是tracefs下的文件,进行简单的echo、cat进行分析,还有一些其他工具可以利用tracer提供的信息做更高级的分析。

trace-cmd

trace-cmd支持的命令:

trace-cmd version 2.6.1

usage:

trace-cmd [COMMAND] ...

commands:

record - record a trace into a trace.dat file

start - start tracing without recording into a file

extract - extract a trace from the kernel

stop - stop the kernel from recording trace data

restart - restart the kernel trace data recording

show - show the contents of the kernel tracing buffer

reset - disable all kernel tracing and clear the trace buffers

report - read out the trace stored in a trace.dat file

stream - Start tracing and read the output directly

profile - Start profiling and read the output directly

hist - show a historgram of the trace.dat information

stat - show the status of the running tracing (ftrace) system

split - parse a trace.dat file into smaller file(s)

options - list the plugin options available for trace-cmd report

listen - listen on a network socket for trace clients

list - list the available events, plugins or options

restore - restore a crashed record

snapshot - take snapshot of running trace

stack - output, enable or disable kernel stack tracing

check-events - parse trace event formats

以下是一些使用过程:

# 使用record命令可以记录数据,通过trace-cmd record -h可以查看可用的参数。

root:/# trace-cmd record -e block_rq_issue

Hit Ctrl^C to stop recording

^CCPU0 data recorded at offset=0x67f000 #^C结束record

4096 bytes in size

CPU1 data recorded at offset=0x680000

4096 bytes in size

CPU2 data recorded at offset=0x681000

4096 bytes in size

CPU3 data recorded at offset=0x682000

4096 bytes in size

# 生成一个trace.dat文件,应该保存的是二进制数据

root:/#ls -al

-rw-r--r-- 1 root root 6828032 Mar 25 17:04 trace.dat

# 打印trace.dat中的内容

root:/# trace-cmd report trace.dat

cpus=4

jbd2/sdcw7-8-223 [003] 9726.635682: block_rq_issue: 70,64 WS 24576 () 59795464 + 48 [jbd2/sdcw7-8]

kworker/3:1H-416 [003] 9726.635775: block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/3:1H]

kworker/1:1H-171 [001] 9726.636249: block_rq_issue: 70,64 WS 4096 () 59795512 + 8 [kworker/1:1H]

kworker/1:1H-171 [001] 9726.636280: block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/1:1H]

。。。。。。

# 对trace.dat文件进行统计分析

root:/# trace-cmd hist

CPU 0 is empty

CPU 2 is empty

%42.86 (171) kworker/1:1H block_rq_issue #3

|

--- *block_rq_issue*

%28.57 (15050) sh block_rq_issue #2

|

--- *block_rq_issue*

%14.29 (416) kworker/3:1H block_rq_issue #1

|

--- *block_rq_issue*

%14.29 (223) jbd2/sdcw7-8 block_rq_issue #1

|

--- *block_rq_issue*

perf

perf有很多功能,对tracer的信息进行记录、分析是其中一个功能。

使用例子:

# 对event block:block_rq_issue的数据进行记录,保存到perf.data

root:/# perf record -e block:block_rq_issue

^C[ perf record: Woken up 0 times to write data ] #ctrl+c停止

[ perf record: Captured and wrote 7.741 MB perf.data (62359 samples) ]

# 生成perf.data文件,是二进制数据

root:/# ls -al

-rw------- 1 root root 8128875 Mar 25 17:18 perf.data

# 使用文本形式输出

root:/# perf script > ./perf.data.script

root:/# head ./perf.data.script

perf 15915 [003] 10610.606557: block:block_rq_issue: 70,64 WS 8192 () 3892640 + 16 [perf]

jbd2/sdcw7-8 223 [001] 10610.606611: block:block_rq_issue: 70,64 WS 16384 () 59921240 + 32 [jbd2/sdcw7-8]

kworker/1:1H-kb 171 [001] 10610.606678: block:block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/1:1H]

kworker/1:1H-kb 171 [001] 10610.607076: block:block_rq_issue: 70,64 WS 4096 () 59921272 + 8 [kworker/1:1H]

kworker/1:1H-kb 171 [001] 10610.607112: block:block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/1:1H]

perf 15915 [003] 10610.607488: block:block_rq_issue: 70,64 WS 4096 () 3892648 + 8 [perf]

jbd2/sdcw7-8 223 [001] 10610.607560: block:block_rq_issue: 70,64 WS 8192 () 59921280 + 16 [jbd2/sdcw7-8]

kworker/1:1H-kb 171 [001] 10610.607605: block:block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/1:1H]

kworker/1:1H-kb 171 [001] 10610.607917: block:block_rq_issue: 70,64 WS 4096 () 59921296 + 8 [kworker/1:1H]

kworker/1:1H-kb 171 [001] 10610.607955: block:block_rq_issue: 70,64 FF 0 () 0 + 0 [kworker/1:1H]

# 进行统计分析

root:/# perf report > ./perf.data.report

root:/# cat ./perf.data.report

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 62K of event 'block:block_rq_issue'

# Event count (approx.): 62359

#

# Overhead Command Shared Object Symbol

# ........ ............... ................. ........................

#

51.22% kworker/1:1H-kb [kernel.kallsyms] [k] blk_mq_start_request

19.99% jbd2/sdcw7-8 [kernel.kallsyms] [k] blk_mq_start_request

19.99% perf [kernel.kallsyms] [k] blk_mq_start_request

3.69% kworker/2:1H-kb [kernel.kallsyms] [k] blk_mq_start_request

3.27% kworker/3:1H-kb [kernel.kallsyms] [k] blk_mq_start_request

1.83% kworker/0:1H-kb [kernel.kallsyms] [k] blk_mq_start_request

# Samples: 0 of event 'dummy:HG'

# Event count (approx.): 0

#

# Overhead Command Shared Object Symbol

# ........ ....... ............. ......

#

#

# (Cannot load tips.txt file, please install perf!)

#

我们再看一个增加了栈分析的例子,过程类似,就是record时加了-g参数:

root:/# perf record -e block:block_rq_issue -e block:block_rq_issue -g

^C[ perf record: Woken up 0 times to write data ]

[ perf record: Captured and wrote 5.660 MB perf.data (19394 samples) ]

# 文本查看,有栈信息了

root:/# perf script > ./perf.data.script

root:/# head ./perf.data.script

perf 16560 [003] 11077.469077: block:block_rq_issue: 70,64 WS 4096 () 3922080 + 8 [perf]

ffffffffb1372e8c blk_mq_start_request+0x9c ([kernel.kallsyms])

ffffffffb1372e8c blk_mq_start_request+0x9c ([kernel.kallsyms])

ffffffffb167886c scsi_queue_rq+0x2dc ([kernel.kallsyms])

ffffffffb1375687 blk_mq_dispatch_rq_list+0x2d7 ([kernel.kallsyms])

ffffffffb137a9e3 __blk_mq_do_dispatch_sched+0x233 ([kernel.kallsyms])

ffffffffb137ad8a __blk_mq_sched_dispatch_requests+0x15a ([kernel.kallsyms])

ffffffffb137af54 blk_mq_sched_dispatch_requests+0x34 ([kernel.kallsyms])

ffffffffb1373007 __blk_mq_run_hw_queue+0x57 ([kernel.kallsyms])

ffffffffb1373197 __blk_mq_delay_run_hw_queue+0x107 ([kernel.kallsyms])

# 输出结果中,有比较详细的各个函数占据的比例

root:/# perf report > ./perf.data.report

root:/# cat ./perf.data.report

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 19K of event 'block:block_rq_issue'

# Event count (approx.): 19394

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... .................. ....................................

#

45.07% 45.07% kworker/1:1H-kb [kernel.kallsyms] [k] blk_mq_start_request

|

---ret_from_fork

kthread

worker_thread

process_one_work

|

--45.03%--blk_mq_requeue_work

blk_mq_run_hw_queues

blk_mq_run_hw_queue

__blk_mq_delay_run_hw_queue

__blk_mq_run_hw_queue

blk_mq_sched_dispatch_requests

__blk_mq_sched_dispatch_requests

blk_mq_dispatch_rq_list

scsi_queue_rq

blk_mq_start_request

blk_mq_start_request

45.07% 0.00% kworker/1:1H-kb [kernel.kallsyms] [k] ret_from_fork

|

---ret_from_fork

kthread

worker_thread

process_one_work

|

--45.03%--blk_mq_requeue_work

blk_mq_run_hw_queues

blk_mq_run_hw_queue

__blk_mq_delay_run_hw_queue

__blk_mq_run_hw_queue

blk_mq_sched_dispatch_requests

__blk_mq_sched_dispatch_requests

blk_mq_dispatch_rq_list

scsi_queue_rq

blk_mq_start_request

blk_mq_start_request

KernelShark

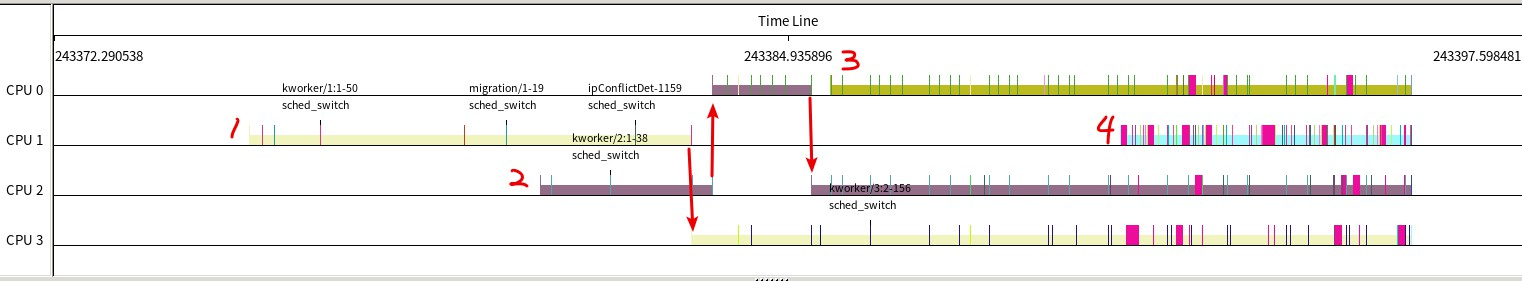

KernelShark是基于trace-cmd的时序分析工具,使用例子如下。

先使用trace-cmd命令来记录数据,起了4个dd进程来作为分析的对象:

root:/# trace-cmd record -e sched_switch & sleep 5; \

> dd if=/dev/random of=/dev/random & sleep 5; \

> dd if=/dev/random of=/dev/random & sleep 5; \

> dd if=/dev/random of=/dev/random & sleep 5; \

> dd if=/dev/random of=/dev/random & sleep 5; \

> killall dd; sleep 1; echo done; fg %1

Hit Ctrl^C to stop recording

log_data_trans:370.no possible file

done

trace-cmd record -e sched_switch -m 65536

^CCPU0 data recorded at offset=0x67f000

53248 bytes in size

CPU1 data recorded at offset=0x68c000

118784 bytes in size

CPU2 data recorded at offset=0x6a9000

36864 bytes in size

CPU3 data recorded at offset=0x6b2000

94208 bytes in size

[6]+ Terminated dd if=/dev/random of=/dev/random

[5]+ Terminated dd if=/dev/random of=/dev/random

[4]+ Terminated dd if=/dev/random of=/dev/random

[3]+ Terminated dd if=/dev/random of=/dev/random

得到trace.dat文件后,使用KernelShark来分析:

root:/# kernelshark trace.dat

会出现一个图形界面:

每个cpu有一条横轴,轴上就是不同时间点上该cpu上运行的进程,以及触发的事件。判断当前运行的是哪个进程是通过sched_switch这个event来进行的,切换进程时都会执行到这个event。

上图可能不太清楚,我们再通过filter过滤一下,只保留4个dd进程:

今天的文章linux tracers使用介绍分享到此就结束了,感谢您的阅读。

版权声明:本文内容由互联网用户自发贡献,该文观点仅代表作者本人。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如发现本站有涉嫌侵权/违法违规的内容, 请发送邮件至 举报,一经查实,本站将立刻删除。

如需转载请保留出处:https://bianchenghao.cn/62239.html