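1. Introduction

The allocation service is responsible for shard allocation: it decides which shards are placed on which nodes, and which copy is the primary and which are replicas. The allocation process differs between newly created indices and existing indices.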

2. Basics

The main building blocks are ShardsAllocator, ExistingShardsAllocator, and AllocationDecider.

2.1 allocators

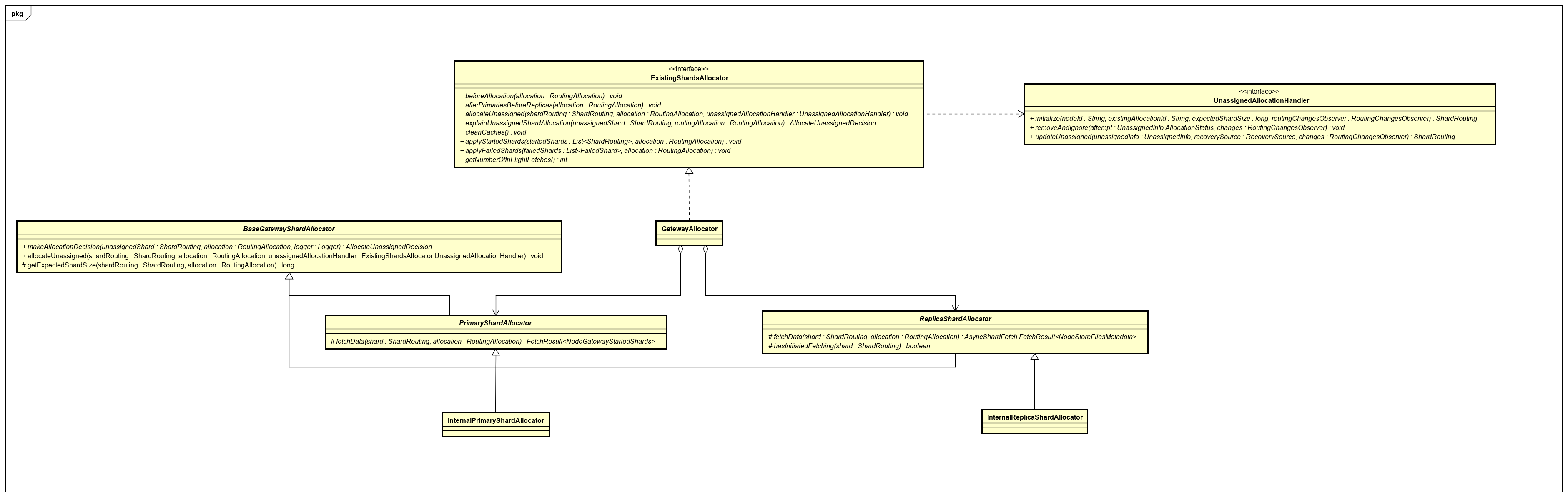

2.1.1 ExistingShardsAllocator

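The interface defines the following methods:

| Method | Description |
| --- | --- |
| beforeAllocation | Hook that runs before allocation starts |
| afterPrimariesBeforeReplicas | Hook that runs after primaries are allocated and before replicas are allocated |
| allocateUnassigned | Allocates an unassigned shard |
| explainUnassignedShardAllocation | Explains the allocation decision for an unassigned shard |
| cleanCaches | Called when this node becomes the elected master or when the master changes to another node |
| applyStartedShards | Applies shards that have started |
| applyFailedShards | Applies shards that have failed |
| getNumberOfInFlightFetches | Returns the number of in-flight fetches |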

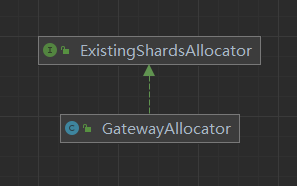

Its implementing classes are:

2.1.2 ShardsAllocator

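The shard allocator interface has the following methods:

| Method | Description |
| --- | --- |
| allocate | Allocates shards to nodes in the cluster |
| decideShardAllocation | Returns the decision on where a shard would be allocated in the cluster |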

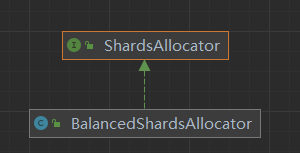

Its implementing classes are:

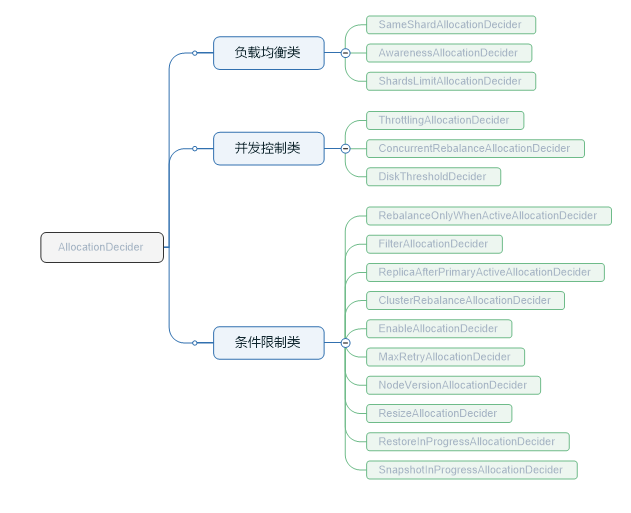

2.1.3 AllocationDecider

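An allocation decider votes on shard placement. Its methods are:

| Method | Description |
| --- | --- |
| canRebalance | Whether the shard routing can be rebalanced |
| canAllocate | Whether the shard routing can be allocated to the given node |
| canRemain | Whether the shard routing can remain on the given node |
| shouldAutoExpandToNode | Whether the index's shards should auto-expand to the node |
| canForceAllocatePrimary | Whether the given primary shard can be force-allocated to the given node |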
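To illustrate how several deciders can be combined into a single answer, here is a minimal sketch. It assumes that one NO acts as a veto and that THROTTLE outranks YES. `Verdict`, `SimpleDecider`, and `DeciderChain` are hypothetical stand-ins invented for this example, not the Elasticsearch classes.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical three-valued verdict (YES / THROTTLE / NO). */
enum Verdict { YES, THROTTLE, NO }

/** Hypothetical, simplified decider: it only looks at a shard name and a node name. */
interface SimpleDecider {
    Verdict canAllocate(String shard, String node);
}

/** Hypothetical chain that folds the verdicts of all deciders into one. */
final class DeciderChain {
    private final List<SimpleDecider> deciders;

    DeciderChain(SimpleDecider... deciders) {
        this.deciders = Arrays.asList(deciders);
    }

    /** Assumption of this sketch: any NO wins, otherwise THROTTLE wins over YES. */
    Verdict canAllocate(String shard, String node) {
        Verdict result = Verdict.YES;
        for (SimpleDecider decider : deciders) {
            Verdict verdict = decider.canAllocate(shard, node);
            if (verdict == Verdict.NO) {
                return Verdict.NO; // a single veto is final, no need to ask the rest
            }
            if (verdict == Verdict.THROTTLE) {
                result = Verdict.THROTTLE; // remember the throttle, keep looking for a NO
            }
        }
        return result;
    }
}
```

With this shape, adding a new placement rule means adding one more decider to the chain rather than changing the allocator itself.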

The deciders fall into the following categories:

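2.2 When allocation is triggered

The following events trigger AllocationService#reroute to reallocate shards:

- A node is added or removed
- Recovery after a restart
- A snapshot is restored
- Index metadata is created, deleted, closed, opened, or has its settings updated
- Cluster settings are updated
- The reroute command is executed
- Gateway recovery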

The corresponding call sites are:

| Class | Method |
| --- | --- |
| TransportClusterUpdateSettingsAction | masterOperation |
| MetadataCreateIndexService | applyCreateIndexWithTemporaryService |
| MetadataDeleteIndexService | deleteIndices |
| MetadataIndexStateService | closeIndices, onlyOpenIndex |
| MetadataUpdateSettingsService | updateSettings |
| DelayedAllocationService.DelayedRerouteTask | execute |
| AllocationService | disassociateDeadNodes |
| GatewayService.RecoverStateUpdateTask | execute |
| LocalAllocateDangledIndices.AllocateDangledRequestHandler | messageReceived |
| Node | Creates the RerouteService in its constructor |
| RestoreService | restoreSnapshot |

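3. reroute

3.1 Basic analysis

reroute performs two kinds of allocation:

- gatewayAllocator: allocates shard copies that already exist, locating them on disk.
- shardsAllocator: balances the distribution of shards across nodes.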

```java
private void reroute(RoutingAllocation allocation) {
    removeDelayMarkers(allocation);

    allocateExistingUnassignedShards(allocation); // try to allocate existing shard copies first
    shardsAllocator.allocate(allocation);
}
```

reroute runs mainly on the MasterService.UpdateTask thread.

3.2 Triggered by the gateway at cluster startup

When GatewayService is created, it sets up a recovery task if discovery is a Coordinator.

```java
if (discovery instanceof Coordinator) {
    recoveryRunnable = () ->
        clusterService.submitStateUpdateTask("local-gateway-elected-state", new RecoverStateUpdateTask());
}
```

During the commit phase of the two-phase commit, ClusterStateListener#clusterChanged is executed and calls performStateRecovery.

```java
private void performStateRecovery(final boolean enforceRecoverAfterTime, final String reason) {
    if (enforceRecoverAfterTime && recoverAfterTime != null) {
        if (scheduledRecovery.compareAndSet(false, true)) {
            logger.info("delaying initial state recovery for [{}]. {}", recoverAfterTime, reason);
            threadPool.schedule(new AbstractRunnable() {
                @Override
                public void onFailure(Exception e) {
                    logger.warn("delayed state recovery failed", e);
                    resetRecoveredFlags();
                }

                @Override
                protected void doRun() {
                    if (recoveryInProgress.compareAndSet(false, true)) {
                        logger.info("recover_after_time [{}] elapsed. performing state recovery...", recoverAfterTime);
                        recoveryRunnable.run();
                    }
                }
            }, recoverAfterTime, ThreadPool.Names.GENERIC);
        }
    } else {
        if (recoveryInProgress.compareAndSet(false, true)) {
            threadPool.generic().execute(new AbstractRunnable() {
                @Override
                public void onFailure(final Exception e) {
                    logger.warn("state recovery failed", e);
                    resetRecoveredFlags();
                }

                @Override
                protected void doRun() {
                    logger.debug("performing state recovery...");
                    recoveryRunnable.run();
                }
            });
        }
    }
}
```

3.3 gatewayAllocator

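gatewayAllocator comprises a primary shard allocator and a replica shard allocator, PrimaryShardAllocator and ReplicaShardAllocator, both of which extend BaseGatewayShardAllocator.

3.3.1 allocateExistingUnassignedShards

- Iterate over the existing-shards allocators and call beforeAllocation on each
- Iterate over the unassigned primary shards and call allocateUnassigned for each
- Iterate over the existing-shards allocators and call afterPrimariesBeforeReplicas on each
- Iterate over the unassigned replica shards and call allocateUnassigned for each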
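The four steps above can be sketched as follows. This is a simplified illustration that assumes a single allocator, whereas the steps above iterate over several. `ToyShard`, `RecordingAllocator`, and `ExistingShardsPass` are hypothetical names made up for this example; only the hook names come from the interface described earlier.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical minimal shard descriptor: a name plus a primary flag. */
final class ToyShard {
    final String name;
    final boolean primary;

    ToyShard(String name, boolean primary) {
        this.name = name;
        this.primary = primary;
    }
}

/** Hypothetical allocator that only records the order in which its hooks are called. */
final class RecordingAllocator {
    final List<String> calls = new ArrayList<>();

    void beforeAllocation() {
        calls.add("beforeAllocation");
    }

    void afterPrimariesBeforeReplicas() {
        calls.add("afterPrimariesBeforeReplicas");
    }

    void allocateUnassigned(ToyShard shard) {
        calls.add("allocateUnassigned:" + shard.name);
    }
}

final class ExistingShardsPass {
    /** Runs the hooks in the order listed above: primaries first, replicas second. */
    static void run(RecordingAllocator allocator, List<ToyShard> unassigned) {
        allocator.beforeAllocation();
        for (ToyShard shard : unassigned) {
            if (shard.primary) {
                allocator.allocateUnassigned(shard);
            }
        }
        allocator.afterPrimariesBeforeReplicas();
        for (ToyShard shard : unassigned) {
            if (!shard.primary) {
                allocator.allocateUnassigned(shard);
            }
        }
    }
}
```

Handling all primaries before any replica matters because a replica can only be recovered once its primary has a home.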

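3.3.2 PrimaryShardAllocator

allocateUnassigned is inherited from the parent class BaseGatewayShardAllocator; the allocation decision itself is made by makeAllocationDecision in PrimaryShardAllocator.

If the unassigned shard's recovery source type is SNAPSHOT and the shard size has not yet been set in the allocation's snapshotShardSizeInfo, the deciders determine whether the shard is allocated. Otherwise, a fetchData request is issued to all data nodes to obtain the metadata of the shard.

AsyncShardFetch has the following methods:

| Method | Description |
| --- | --- |
| fetchData | Fetches the metadata of a shard from all nodes |
| asyncFetch | Asynchronously fetches the metadata of the given shard in the cluster |
| reroute | Abstract method; implement it to schedule another round that results in another call to fetch data |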
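The interplay between fetchData and reroute can be sketched with a toy model: the first fetchData call finds nothing cached, marks a fetch as in flight, and returns empty; when a response arrives it is cached and another reroute round is requested, so the next fetchData call returns data. `ToyShardFetch` and all of its members are hypothetical and heavily simplified (one shard, no fetching rounds, no failures).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical toy model of a fetch-then-reroute cycle for a single shard. */
final class ToyShardFetch {
    private final Map<String, String> cache = new HashMap<>(); // nodeId -> shard metadata
    private boolean fetching = false;
    private int rerouteCount = 0;

    /** Returns a copy of the cached data if any; otherwise starts a fetch and returns empty. */
    synchronized Optional<Map<String, String>> fetchData() {
        if (!cache.isEmpty()) {
            return Optional.of(new HashMap<>(cache));
        }
        fetching = true; // a real implementation would send a request to every data node here
        return Optional.empty();
    }

    /** Simulates a node response: cache it, then ask for another reroute round. */
    synchronized void onResponse(String nodeId, String metadata) {
        if (!fetching) {
            return; // nobody asked for this response, ignore it
        }
        cache.put(nodeId, metadata);
        fetching = false;
        rerouteCount++; // stands in for the reroute call made after responses are processed
    }

    synchronized boolean isFetching() {
        return fetching;
    }

    synchronized int rerouteCount() {
        return rerouteCount;
    }
}
```

The point of the pattern is that the allocation pass never blocks on the network: it simply gives up on the shard for this round and is re-run once the data is available.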

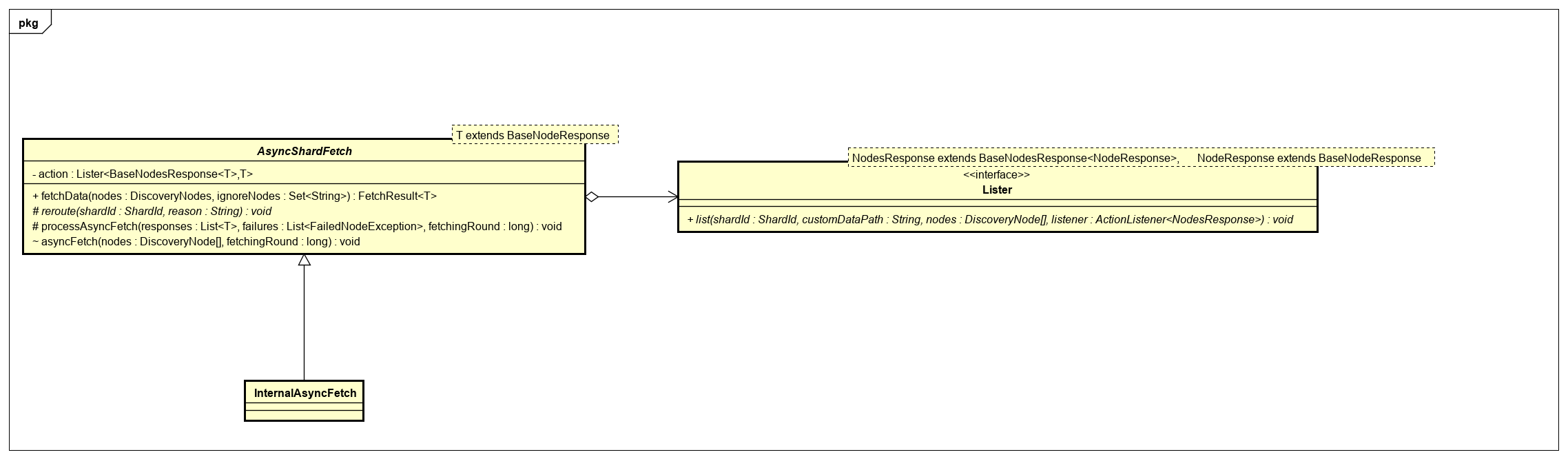

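The class hierarchy is as follows:

InternalAsyncFetch extends AsyncShardFetch and implements the reroute method by calling BatchedRerouteService#reroute.

AsyncShardFetch holds a Lister interface as a member. For InternalPrimaryShardAllocator, the lister uses the listStartedShards method to send a TransportNodesListGatewayStartedShards.TYPE request; the action that requests shard metadata from the nodes is internal:gateway/local/started_shards. The sending logic is mainly implemented in AsyncAction#start.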

```java
void start() {
    final DiscoveryNode[] nodes = request.concreteNodes();
    if (nodes.length == 0) {
        // nothing to notify, so respond immediately, but always fork even if finalExecutor == SAME
        final String executor = finalExecutor.equals(ThreadPool.Names.SAME) ? ThreadPool.Names.GENERIC : finalExecutor;
        threadPool.executor(executor).execute(() -> newResponse(task, request, responses, listener));
        return;
    }
    final TransportRequestOptions transportRequestOptions = TransportRequestOptions.timeout(request.timeout());
    for (int i = 0; i < nodes.length; i++) {
        final int idx = i;
        final DiscoveryNode node = nodes[i];
        final String nodeId = node.getId();
        try {
            TransportRequest nodeRequest = newNodeRequest(request);
            if (task != null) {
                nodeRequest.setParentTask(clusterService.localNode().getId(), task.getId());
            }

            transportService.sendRequest(node, getTransportNodeAction(node), nodeRequest, transportRequestOptions,
                new TransportResponseHandler<NodeResponse>() {
                    @Override
                    public NodeResponse read(StreamInput in) throws IOException {
                        return newNodeResponse(in);
                    }

                    @Override
                    public void handleResponse(NodeResponse response) {
                        onOperation(idx, response);
                    }

                    @Override
                    public void handleException(TransportException exp) {
                        onFailure(idx, node.getId(), exp);
                    }
                });
        } catch (Exception e) {
            onFailure(idx, nodeId, e);
        }
    }
}
```

On the receiving side, the request is handled by NodeTransportHandler, which calls nodeOperation.

```java
class NodeTransportHandler implements TransportRequestHandler<NodeRequest> {
    @Override
    public void messageReceived(NodeRequest request, TransportChannel channel, Task task) throws Exception {
        channel.sendResponse(nodeOperation(request, task));
    }
}
```

When the requesting side receives the responses, it handles them in processAsyncFetch: the shard-level metadata returned by each node is stored in a cache, and reroute is then executed again so that the next round reads the data from the cache.

```java
protected synchronized void processAsyncFetch(List<T> responses, List<FailedNodeException> failures, long fetchingRound) {
    if (closed) {
        // we are closed, no need to process this async fetch at all
        logger.trace("{} ignoring fetched [{}] results, already closed", shardId, type);
        return;
    }
    logger.trace("{} processing fetched [{}] results", shardId, type);

    if (responses != null) {
        for (T response : responses) {
            NodeEntry<T> nodeEntry = cache.get(response.getNode().getId());
            if (nodeEntry != null) {
                if (nodeEntry.getFetchingRound() != fetchingRound) {
                    assert nodeEntry.getFetchingRound() > fetchingRound : "node entries only replaced by newer rounds";
                    logger.trace("{} received response for [{}] from node {} for an older fetching round (expected: {} but was: {})",
                        shardId, nodeEntry.getNodeId(), type, nodeEntry.getFetchingRound(), fetchingRound);
                } else if (nodeEntry.isFailed()) {
                    logger.trace("{} node {} has failed for [{}] (failure [{}])", shardId, nodeEntry.getNodeId(), type,
                        nodeEntry.getFailure());
                } else {
                    // if the entry is there, for the right fetching round and not marked as failed already, process it
                    logger.trace("{} marking {} as done for [{}], result is [{}]", shardId, nodeEntry.getNodeId(), type, response);
                    nodeEntry.doneFetching(response);
                }
            }
        }
    }
    if (failures != null) {
        for (FailedNodeException failure : failures) {
            logger.trace("{} processing failure {} for [{}]", shardId, failure, type);
            NodeEntry<T> nodeEntry = cache.get(failure.nodeId());
            if (nodeEntry != null) {
                if (nodeEntry.getFetchingRound() != fetchingRound) {
                    assert nodeEntry.getFetchingRound() > fetchingRound : "node entries only replaced by newer rounds";
                    logger.trace("{} received failure for [{}] from node {} for an older fetching round (expected: {} but was: {})",
                        shardId, nodeEntry.getNodeId(), type, nodeEntry.getFetchingRound(), fetchingRound);
                } else if (nodeEntry.isFailed() == false) {
                    // if the entry is there, for the right fetching round and not marked as failed already, process it
                    Throwable unwrappedCause = ExceptionsHelper.unwrapCause(failure.getCause());
                    // if the request got rejected or timed out, we need to try it again next time...
                    if (unwrappedCause instanceof EsRejectedExecutionException ||
                        unwrappedCause instanceof ReceiveTimeoutTransportException ||
                        unwrappedCause instanceof ElasticsearchTimeoutException) {
                        nodeEntry.restartFetching();
                    } else {
                        logger.warn(() -> new ParameterizedMessage("{}: failed to list shard for {} on node [{}]",
                            shardId, type, failure.nodeId()), failure);
                        nodeEntry.doneFetching(failure.getCause());
                    }
                }
            }
        }
    }
    reroute(shardId, "post_response");
}
```

Once the shard data has been fetched, the inSyncAllocationIds are read from the index metadata, and buildNodeShardsResult builds the per-node shard result.

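- When the shard's recovery source is SNAPSHOT, candidates are ordered by: present in inSyncAllocationIds, then no store exception, then primary.
- When the recovery source is not SNAPSHOT, candidates are ordered by: no store exception, then primary.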
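The two orderings listed above can be reproduced with plain `java.util.Comparator` chains. The sketch below is a simplified illustration: `ShardCopy`, `CopyOrdering`, and the field names are hypothetical stand-ins for the node-level shard state, and only the ordering logic is modeled.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Set;

/** Hypothetical stand-in for the shard copy a node reports. */
final class ShardCopy {
    final String node;
    final String allocationId;
    final boolean storeException;
    final boolean primary;

    ShardCopy(String node, String allocationId, boolean storeException, boolean primary) {
        this.node = node;
        this.allocationId = allocationId;
        this.storeException = storeException;
        this.primary = primary;
    }
}

final class CopyOrdering {
    // Boolean.FALSE sorts before Boolean.TRUE, so copies without a store exception come first.
    static final Comparator<ShardCopy> NO_STORE_EXCEPTION_FIRST =
        Comparator.comparing((ShardCopy c) -> c.storeException);

    // Reversed so that primary == true comes first.
    static final Comparator<ShardCopy> PRIMARY_FIRST =
        Comparator.comparing((ShardCopy c) -> c.primary).reversed();

    /** SNAPSHOT case: in-sync copies first, then no store exception, then primaries. */
    static Comparator<ShardCopy> forSnapshot(Set<String> inSyncIds) {
        Comparator<ShardCopy> inSyncFirst =
            Comparator.comparing((ShardCopy c) -> inSyncIds.contains(c.allocationId)).reversed();
        return inSyncFirst.thenComparing(NO_STORE_EXCEPTION_FIRST).thenComparing(PRIMARY_FIRST);
    }

    /** Non-SNAPSHOT case: no store exception first, then primaries. */
    static Comparator<ShardCopy> forNonSnapshot() {
        return NO_STORE_EXCEPTION_FIRST.thenComparing(PRIMARY_FIRST);
    }

    /** Returns the node names in preference order without modifying the input list. */
    static List<String> sortedNodes(List<ShardCopy> copies, Comparator<ShardCopy> order) {
        List<ShardCopy> sorted = new ArrayList<>(copies);
        sorted.sort(order);
        List<String> names = new ArrayList<>();
        for (ShardCopy copy : sorted) {
            names.add(copy.node);
        }
        return names;
    }
}
```

Because `List.sort` is stable, copies that tie on every criterion keep the order in which the nodes reported them.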

```java
protected static NodeShardsResult buildNodeShardsResult(ShardRouting shard, boolean matchAnyShard,
                                                        Set<String> ignoreNodes, Set<String> inSyncAllocationIds,
                                                        FetchResult<NodeGatewayStartedShards> shardState,
                                                        Logger logger) {
    List<NodeGatewayStartedShards> nodeShardStates = new ArrayList<>();
    int numberOfAllocationsFound = 0;
    for (NodeGatewayStartedShards nodeShardState : shardState.getData().values()) {
        DiscoveryNode node = nodeShardState.getNode();
        String allocationId = nodeShardState.allocationId();

        if (ignoreNodes.contains(node.getId())) {
            continue;
        }

        if (nodeShardState.storeException() == null) {
            if (allocationId == null) {
                logger.trace("[{}] on node [{}] has no shard state information", shard, nodeShardState.getNode());
            } else {
                logger.trace("[{}] on node [{}] has allocation id [{}]", shard, nodeShardState.getNode(), allocationId);
            }
        } else {
            final String finalAllocationId = allocationId;
            if (nodeShardState.storeException() instanceof ShardLockObtainFailedException) {
                logger.trace(() -> new ParameterizedMessage("[{}] on node [{}] has allocation id [{}] but the store can not be " +
                    "opened as it's locked, treating as valid shard", shard, nodeShardState.getNode(), finalAllocationId),
                    nodeShardState.storeException());
            } else {
                logger.trace(() -> new ParameterizedMessage("[{}] on node [{}] has allocation id [{}] but the store can not be " +
                    "opened, treating as no allocation id", shard, nodeShardState.getNode(), finalAllocationId),
                    nodeShardState.storeException());
                allocationId = null;
            }
        }

        if (allocationId != null) {
            assert nodeShardState.storeException() == null ||
                nodeShardState.storeException() instanceof ShardLockObtainFailedException :
                "only allow store that can be opened or that throws a ShardLockObtainFailedException while being opened but got a " +
                    "store throwing " + nodeShardState.storeException();
            numberOfAllocationsFound++;
            if (matchAnyShard || inSyncAllocationIds.contains(nodeShardState.allocationId())) {
                nodeShardStates.add(nodeShardState);
            }
        }
    }

    final Comparator<NodeGatewayStartedShards> comparator; // allocation preference
    if (matchAnyShard) {
        // prefer shards with matching allocation ids
        Comparator<NodeGatewayStartedShards> matchingAllocationsFirst = Comparator.comparing(
            (NodeGatewayStartedShards state) -> inSyncAllocationIds.contains(state.allocationId())).reversed();
        comparator = matchingAllocationsFirst.thenComparing(NO_STORE_EXCEPTION_FIRST_COMPARATOR)
            .thenComparing(PRIMARY_FIRST_COMPARATOR);
    } else {
        comparator = NO_STORE_EXCEPTION_FIRST_COMPARATOR.thenComparing(PRIMARY_FIRST_COMPARATOR);
    }

    nodeShardStates.sort(comparator);

    if (logger.isTraceEnabled()) {
        logger.trace("{} candidates for allocation: {}", shard, nodeShardStates.stream().map(s -> s.getNode().getName())
            .collect(Collectors.joining(", ")));
    }
    return new NodeShardsResult(nodeShardStates, numberOfAllocationsFound);
}
```

Allocation then follows the deciders' verdicts, which split the candidate nodes into three groups: yes, no, and throttle.

```java
private static NodesToAllocate buildNodesToAllocate(RoutingAllocation allocation,
                                                    List<NodeGatewayStartedShards> nodeShardStates,
                                                    ShardRouting shardRouting,
                                                    boolean forceAllocate) {
    List<DecidedNode> yesNodeShards = new ArrayList<>();
    List<DecidedNode> throttledNodeShards = new ArrayList<>();
    List<DecidedNode> noNodeShards = new ArrayList<>();
    for (NodeGatewayStartedShards nodeShardState : nodeShardStates) {
        RoutingNode node = allocation.routingNodes().node(nodeShardState.getNode().getId());
        if (node == null) {
            continue;
        }

        Decision decision = forceAllocate ? allocation.deciders().canForceAllocatePrimary(shardRouting, node, allocation) :
            allocation.deciders().canAllocate(shardRouting, node, allocation);
        DecidedNode decidedNode = new DecidedNode(nodeShardState, decision);
        if (decision.type() == Type.THROTTLE) {
            throttledNodeShards.add(decidedNode);
        } else if (decision.type() == Type.NO) {
            noNodeShards.add(decidedNode);
        } else {
            yesNodeShards.add(decidedNode);
        }
    }
    return new NodesToAllocate(Collections.unmodifiableList(yesNodeShards), Collections.unmodifiableList(throttledNodeShards),
        Collections.unmodifiableList(noNodeShards));
}
```

3.3.3 ReplicaShardAllocator

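Its allocation decision differs from that of PrimaryShardAllocator in some respects: before calling fetchData, it uses canBeAllocatedToAtLeastOneNode to check whether the shard can be allocated to at least one node.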

```java
public static Tuple<Decision, Map<String, NodeAllocationResult>> canBeAllocatedToAtLeastOneNode(ShardRouting shard,
                                                                                                RoutingAllocation allocation) {
    Decision madeDecision = Decision.NO;
    final boolean explain = allocation.debugDecision();
    Map<String, NodeAllocationResult> nodeDecisions = explain ? new HashMap<>() : null;
    for (ObjectCursor<DiscoveryNode> cursor : allocation.nodes().getDataNodes().values()) {
        RoutingNode node = allocation.routingNodes().node(cursor.value.getId());
        if (node == null) {
            continue;
        }
        // if we can't allocate it on a node, ignore it, for example, this handles
        // cases for only allocating a replica after a primary
        Decision decision = allocation.deciders().canAllocate(shard, node, allocation);
        if (decision.type() == Decision.Type.YES && madeDecision.type() != Decision.Type.YES) {
            if (explain) {
                madeDecision = decision;
            } else {
                return Tuple.tuple(decision, null);
            }
        } else if (madeDecision.type() == Decision.Type.NO && decision.type() == Decision.Type.THROTTLE) {
            madeDecision = decision;
        }
        if (explain) {
            nodeDecisions.put(node.nodeId(), new NodeAllocationResult(node.node(), null, decision));
        }
    }
    return Tuple.tuple(madeDecision, nodeDecisions);
}
```
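Stripped of the explain bookkeeping, canBeAllocatedToAtLeastOneNode reduces to a "best verdict across nodes" fold: one YES is enough, otherwise a THROTTLE beats NO. The sketch below illustrates that fold on plain values; `NodeVerdict` and `AtLeastOneNode` are hypothetical names, and the per-node verdicts are passed in directly instead of being computed by deciders.

```java
import java.util.List;

/** Hypothetical three-valued verdict for a single node. */
enum NodeVerdict { YES, THROTTLE, NO }

final class AtLeastOneNode {
    /** Returns YES if any node says YES, else THROTTLE if any node throttles, else NO. */
    static NodeVerdict best(List<NodeVerdict> perNode) {
        NodeVerdict made = NodeVerdict.NO;
        for (NodeVerdict verdict : perNode) {
            if (verdict == NodeVerdict.YES) {
                return NodeVerdict.YES; // one willing node is enough, stop early
            }
            if (made == NodeVerdict.NO && verdict == NodeVerdict.THROTTLE) {
                made = verdict; // upgrade NO to THROTTLE, keep looking for a YES
            }
        }
        return made;
    }
}
```

Note the contrast with combining deciders for a single node, where the most restrictive answer wins; across nodes, the most permissive answer wins.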
