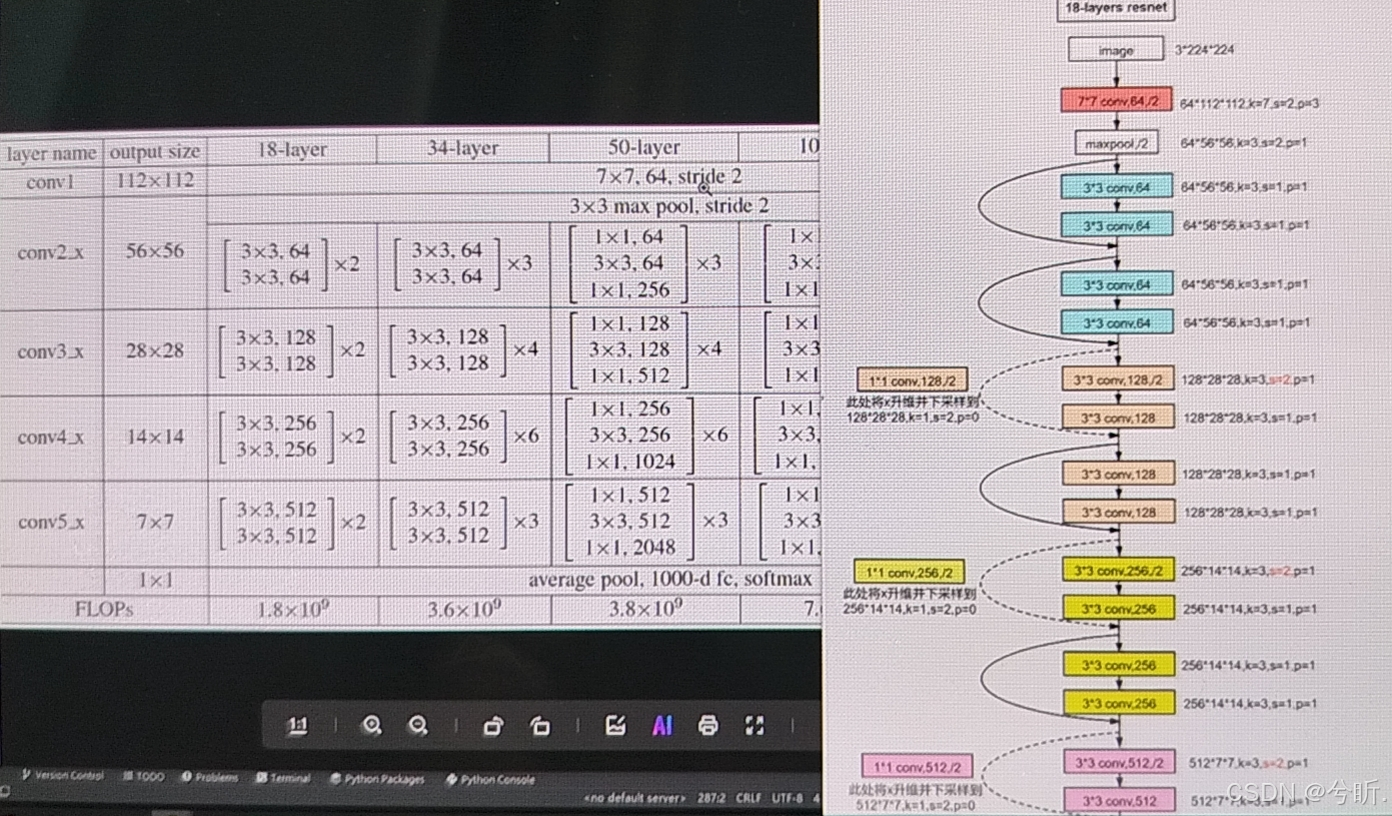

The 2015 peak: Kaiming He (何凯明)

from streamlit.testing.v1.element_tree import SpecialBlock

from torch import nn

from torch.testing._internal.common_nn import output_size

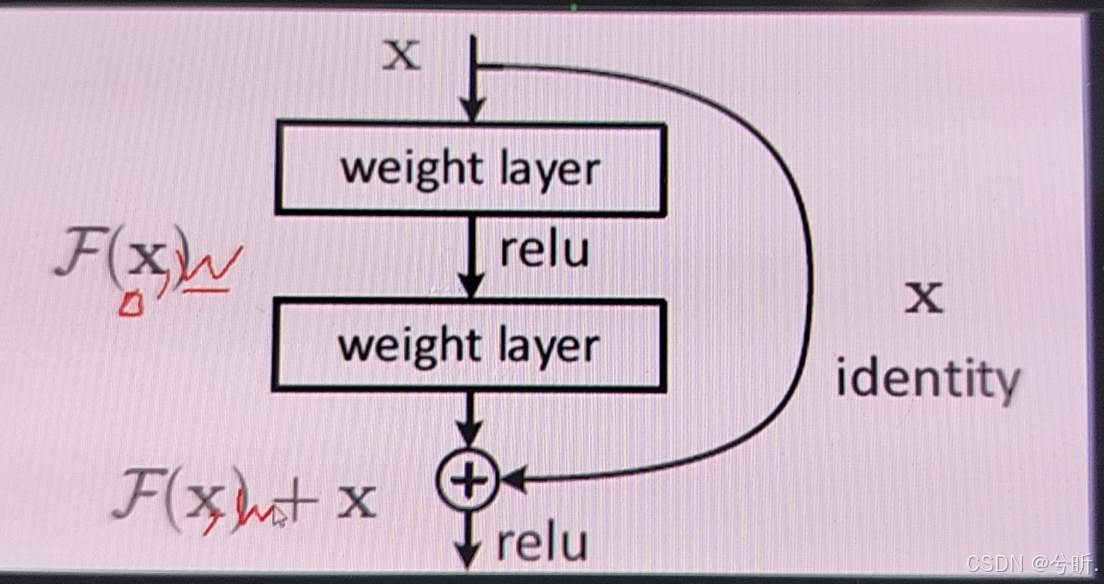

def CommonBlock(param, param1, param2):

pass

def SpecialBlockBlock(param, param1, param2):

pass

class ResNet18(nn.Module):

def __init__(self,classes_num):

super(ResNet18,self).__init__()

self.prepare = nn.Sequential(

nn.Conv2d(3,64,7,2,3),

nn.BatchNorm2d(64),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True),

nn.MaxPool2d(3,2,1)

)

self.layer1 = nn.Sequential(

CommonBlock(64,64,1),

CommonBlock(64,64,1)

)

self.layer2 = nn.Sequential(

SpecialBlockBlock(64,128,[2,1]),

CommonBlock(64,128,1)

)

self.layer3 = nn.Sequential(

SpecialBlock(128,256,[2,1]),

CommonBlock(256,256,1)

)

self.layer4 = nn.Sequential(

SpecialBlock(256,512,[2,1]),

CommonBlock(512,512,1)

)

self.pool = nn.AdaptiveAvgPool2d(output_size)

self.fc = nn.Sequential(

# nn.Dropout(p=0.5),

# nn.Linear(512,256),

# nn.ReLU(inplace=True),

# nn.Dropout(p=0.5),

nn.Linear(512,classes_num)

)

def forward(self,x):

x = self.prepare(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.pool(x)

x = x.reshape(x.shape[0],-1)

x = self.fc(x)

return x
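
The two block helpers that the ResNet18 class above relies on are left without bodies. The following is a minimal sketch of what they might look like, assuming the basic two-convolution residual block commonly used for ResNet-18. The class bodies, the attribute name `shortcut`, and the reading of the stride list `[2, 1]` (first value for the first convolution and the shortcut, second value for the second convolution) are assumptions for illustration, not the original author's code.

```python
import torch
from torch import nn
import torch.nn.functional as F


class CommonBlock(nn.Module):
    """Assumed basic residual block: shape is unchanged, shortcut is the identity."""

    def __init__(self, in_channel, out_channel, stride):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channel, out_channel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)

    def forward(self, x):
        identity = x
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Add the unchanged input back before the final activation
        return F.relu(out + identity)


class SpecialBlock(nn.Module):
    """Assumed downsampling block: a 1x1 convolution reshapes the shortcut."""

    def __init__(self, in_channel, out_channel, stride):
        super().__init__()
        # stride is a two-element list, e.g. [2, 1] (assumed meaning, see lead-in)
        self.shortcut = nn.Sequential(
            nn.Conv2d(in_channel, out_channel, kernel_size=1,
                      stride=stride[0], padding=0, bias=False),
            nn.BatchNorm2d(out_channel),
        )
        self.conv1 = nn.Conv2d(in_channel, out_channel, kernel_size=3,
                               stride=stride[0], padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3,
                               stride=stride[1], padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)

    def forward(self, x):
        # Project the input so it matches the main path in channels and size
        identity = self.shortcut(x)
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return F.relu(out + identity)


# Quick shape check on a random feature map
x = torch.randn(2, 64, 56, 56)
same = CommonBlock(64, 64, 1)(x)
down = SpecialBlock(64, 128, [2, 1])(x)
print(same.shape)
print(down.shape)
```

With blocks like these defined under the same names, the call sites in ResNet18 would work unchanged: CommonBlock keeps the channel count and resolution, while SpecialBlock doubles the channels and halves the height and width.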


If you repost this article, please keep the source: https://bianchenghao.cn/bian-cheng-ri-ji/55021.html