1 原理

1.1 B树

(1)m路查找树

一棵m路查找树,它或者是一棵空树,或者是满足如下性质的树:

- 根最多有m棵子树,并具有以下结构:

- 在子树

中所有的关键码都大于

,小于

。

- 在子树

中所有的关键码都大于

- 在子树

中所有的关键码都小于

- 子树

也是m路查找树

(2)B树

m阶B树时一棵m路查找树,它或是空树,或者满足以下性质:

- 树中每个节点至多有m棵子树

- 根节点至少有两棵子树

- 除根节点以外的所有非终端节点至少有

棵子树

- 所有的叶子节点都位于同一层

1.2 步骤

具体模拟过程参考:https://www.cnblogs.com/pinard/p/6179132.html

参考资料:

BIRCH能够识别出数据集中数据分布的不均衡性,将分布在稠密区域中的点聚类并移除将分布在稀疏区域中的异常点。此外,BIRCH是一种增量聚类方法,针对每一个点的聚类决策都是基于当前已经处理过的数据点,而不是全局的数据点。

① 建立一个聚类特征树

首先是遍历所有数据,使用给定的内存数量和磁盘上的回收空间构建一个初始的内存CF树,来反映数据集上的聚类信息。对于稠密数据,分组成更精细的簇,稀疏的数据点则被作为异常点移除。

② 缩小范围,精简聚类特征树

该过程是可选择的,这个部分是连接步骤①和步骤③的桥梁,相似于步骤①,他开始遍历初始化的聚类特征树叶子节点,移除更多的异常点和缩小范围进行分组。

③ 全局聚类

使用全局聚类或者半全局聚类来操作所有的叶子节点,有数据点的聚类算法很容易适应一组子簇,每个子簇由其聚类特征向量表示。计算子簇的质心,然后每个子簇用质心表示,这部分可以捕捉到数据的主要分布规律。

④ 簇类细化

因为步骤③只是对数据进行粗略总结,原数据只是被扫描了一次,需要继续完善簇类。使用上阶段产生的簇的中心作为种子,并将数据点重新分配到最近的种子,以获得一组新的簇。这不仅允许属于该子簇的点迁移,而且可以确保给定数据点的所有副本都迁移到同一个子簇中。还为提供了丢弃异常值的选项。也就是说,如果距离最近的点太远,种子可以作为离群值处理,而不包含在结果中。

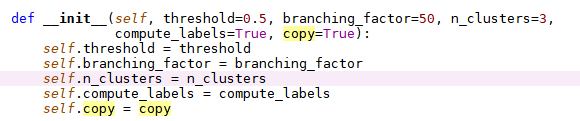

2.参数说明

函数:sklearn.cluster.Birch

参数:

- threshold:(float,default=0.5)新的子聚类和最近的子聚类合并的子聚类的半径小于阈值,否则将进行分裂。

- branching_factor:(int,default=50)每个结点中CF子聚类的最大数量。

- n_cluster:(int,default=3)最终聚类步骤的聚类数量,if None,不执行最终的聚类步骤,子聚类原样返回;if sklearn.cluster.Estimator,则该模型将子聚类作为新样本执行。

- compute_labels:(bool,default=True)每次拟合的时候是否标签值计算。

- copy:(bool,default=True)是否复制获得的数据,如果设置为false,初始化数据将被重写。

属性:

- root_:CF tree的root

- dummy_leaf_:所有叶子节点的指针

- subcluster_centers_:所有叶子里子聚类的质心

- subcluster_labels_:全聚类之后子聚类质心的labels

- labels_:所有输入数据的labels

3 具体实现

可参考scikit-learn的实例:https://scikit-learn.org/stable/auto_examples/cluster/plot_birch_vs_minibatchkmeans.html#sphx-glr-auto-examples-cluster-plot-birch-vs-minibatchkmeans-py

4 源码解析

源码在:Anaconda3/Lib/site-packages/sklearn/cluster/birch.py中

(1)前缀知识

-

hasattr()函数用来判断某个类实例对象是否包含指定名称的属性或方法,返回True和False

hasattr(obj, name),其中obj 指的是某个类的实例对象,name 表示指定的属性名或方法名。

- getattr()函数获取某个类实例对象中指定属性的值

getattr(obj, name[, default]),其中obj 表示指定的类实例对象,name 表示指定的属性名,而 default 是可选参数,用于设定该函数的默认返回值,即当函数查找失败时,如果不指定 default 参数,则程序将直接报 AttributeError 错误,反之该函数将返回 default 指定的值。

-

setattr()函数的功能相对比较复杂,它最基础的功能是修改类实例对象中的属性值。其次,它还可以实现为实例对象动态添加属性或者方法。

(2)Birch函数

- Birch(BaseEstimator, TransformerMixin, ClusterMixin)在sklearn的base文件里

- 其他参数

- fit函数(主要核心计算在_fit函数中)

def fit(self, X, y=None):

"""

Build a CF Tree for the input data.

Parameters

----------

X : {array-like, sparse matrix} of shape (n_samples, n_features)

Input data.

y : Ignored

Not used, present here for API consistency by convention.

Returns

-------

self

Fitted estimator.

"""

self.fit_, self.partial_fit_ = True, False

return self._fit(X)

def _fit(self, X):

X = self._validate_data(X, accept_sparse='csr', copy=self.copy)

threshold = self.threshold

branching_factor = self.branching_factor

if branching_factor <= 1:

raise ValueError("Branching_factor should be greater than one.")

n_samples, n_features = X.shape

# If partial_fit is called for the first time or fit is called, we

# start a new tree.

partial_fit = getattr(self, 'partial_fit_')

has_root = getattr(self, 'root_', None)

if getattr(self, 'fit_') or (partial_fit and not has_root):

# The first root is the leaf. Manipulate this object throughout.

self.root_ = _CFNode(threshold=threshold,

branching_factor=branching_factor,

is_leaf=True,

n_features=n_features)

# To enable getting back subclusters.

self.dummy_leaf_ = _CFNode(threshold=threshold,

branching_factor=branching_factor,

is_leaf=True, n_features=n_features)

self.dummy_leaf_.next_leaf_ = self.root_

self.root_.prev_leaf_ = self.dummy_leaf_

# Cannot vectorize. Enough to convince to use cython.

if not sparse.issparse(X):

iter_func = iter

else:

iter_func = _iterate_sparse_X

#遍历数据,构建子聚类

for sample in iter_func(X):

subcluster = _CFSubcluster(linear_sum=sample)

split = self.root_.insert_cf_subcluster(subcluster)

#如果确定CF要分裂,则使用分裂算法,返回两个子聚类,并将子聚类增加到root上

if split:

new_subcluster1, new_subcluster2 = _split_node(

self.root_, threshold, branching_factor)

del self.root_

self.root_ = _CFNode(threshold=threshold,

branching_factor=branching_factor,

is_leaf=False,

n_features=n_features)

self.root_.append_subcluster(new_subcluster1)

self.root_.append_subcluster(new_subcluster2)

#获取叶子节点的质心

centroids = np.concatenate([

leaf.centroids_ for leaf in self._get_leaves()])

self.subcluster_centers_ = centroids

self._global_clustering(X)

return self其他函数:

构建稀疏矩阵

def _iterate_sparse_X(X):

"""This little hack returns a densified row when iterating over a sparse

matrix, instead of constructing a sparse matrix for every row that is

expensive.

"""

n_samples = X.shape[0]

X_indices = X.indices

X_data = X.data

X_indptr = X.indptr

for i in range(n_samples):

row = np.zeros(X.shape[1])

startptr, endptr = X_indptr[i], X_indptr[i + 1]

nonzero_indices = X_indices[startptr:endptr]

row[nonzero_indices] = X_data[startptr:endptr]

yield row

分裂叶子结点的函数:定义两个子聚类,两个CF节点,并将CF节点加入到CF子聚类中,如果传入的子聚类是叶子节点,就进行一系列的指针变换,计算子聚类的质心和平方和之间的距离,选择距离最大的矩阵,然后选择较小的值为一个子聚类,其他的归为另一个子聚类。

def _split_node(node, threshold, branching_factor):

"""The node has to be split if there is no place for a new subcluster

in the node.

1. Two empty nodes and two empty subclusters are initialized.

2. The pair of distant subclusters are found.

3. The properties of the empty subclusters and nodes are updated

according to the nearest distance between the subclusters to the

pair of distant subclusters.

4. The two nodes are set as children to the two subclusters.

"""

new_subcluster1 = _CFSubcluster()

new_subcluster2 = _CFSubcluster()

new_node1 = _CFNode(

threshold=threshold, branching_factor=branching_factor,

is_leaf=node.is_leaf,

n_features=node.n_features)

new_node2 = _CFNode(

threshold=threshold, branching_factor=branching_factor,

is_leaf=node.is_leaf,

n_features=node.n_features)

new_subcluster1.child_ = new_node1

new_subcluster2.child_ = new_node2

if node.is_leaf:

if node.prev_leaf_ is not None:

node.prev_leaf_.next_leaf_ = new_node1

new_node1.prev_leaf_ = node.prev_leaf_

new_node1.next_leaf_ = new_node2

new_node2.prev_leaf_ = new_node1

new_node2.next_leaf_ = node.next_leaf_

if node.next_leaf_ is not None:

node.next_leaf_.prev_leaf_ = new_node2

dist = euclidean_distances(

node.centroids_, Y_norm_squared=node.squared_norm_, squared=True)

n_clusters = dist.shape[0]

farthest_idx = np.unravel_index(

dist.argmax(), (n_clusters, n_clusters))

node1_dist, node2_dist = dist[(farthest_idx,)]

node1_closer = node1_dist < node2_dist

for idx, subcluster in enumerate(node.subclusters_):

if node1_closer[idx]:

new_node1.append_subcluster(subcluster)

new_subcluster1.update(subcluster)

else:

new_node2.append_subcluster(subcluster)

new_subcluster2.update(subcluster)

return new_subcluster1, new_subcluster2获取叶子结点:

def _get_leaves(self):

"""

Retrieve the leaves of the CF Node.

Returns

-------

leaves : list of shape (n_leaves,)

List of the leaf nodes.

"""

leaf_ptr = self.dummy_leaf_.next_leaf_

leaves = []

while leaf_ptr is not None:

leaves.append(leaf_ptr)

leaf_ptr = leaf_ptr.next_leaf_

return leaves

进行全局聚类:增加了AgglomerativeClustering算法(另写)。

def _global_clustering(self, X=None):

"""

Global clustering for the subclusters obtained after fitting

"""

clusterer = self.n_clusters

centroids = self.subcluster_centers_

compute_labels = (X is not None) and self.compute_labels

# Preprocessing for the global clustering.

not_enough_centroids = False

if isinstance(clusterer, numbers.Integral):

clusterer = AgglomerativeClustering(

n_clusters=self.n_clusters)

# There is no need to perform the global clustering step.

if len(centroids) < self.n_clusters:

not_enough_centroids = True

elif (clusterer is not None and not

hasattr(clusterer, 'fit_predict')):

raise ValueError("n_clusters should be an instance of "

"ClusterMixin or an int")

# To use in predict to avoid recalculation.

self._subcluster_norms = row_norms(

self.subcluster_centers_, squared=True)

if clusterer is None or not_enough_centroids:

self.subcluster_labels_ = np.arange(len(centroids))

if not_enough_centroids:

warnings.warn(

"Number of subclusters found (%d) by Birch is less "

"than (%d). Decrease the threshold."

% (len(centroids), self.n_clusters), ConvergenceWarning)

else:

# The global clustering step that clusters the subclusters of

# the leaves. It assumes the centroids of the subclusters as

# samples and finds the final centroids.

self.subcluster_labels_ = clusterer.fit_predict(

self.subcluster_centers_)

if compute_labels:

self.labels_ = self.predict(X)

(3)CFNode

| 参数 | 属性 | ||

| threshold:float | 确定子聚类的阈值 | subclusters_ : list | 指定结点的子聚类 |

| branching_factor: int | 分支因子 | prev_leaf_ : _CFNode | 前叶子结点 |

| is_leaf : bool | 是否是叶子节点 | next_leaf_ : _CFNode | 后叶子结点 |

| n_features : int | 特征数量 | init_centroids_ | 初始化质心,shape=(branching_factor + 1, n_features) |

| init_sq_norm_ | 初始化平方和,shape=(branching_factor + 1, n_features) | ||

| centroids_ | 质心 | ||

| squared_norm_ | 平方和 | ||

CFNode有三个函数构成:

第一个函数:append_subcluster(self, subcluster)更新CF的特征值

def append_subcluster(self, subcluster):

#获取CF的子聚类长度

n_samples = len(self.subclusters_)

#将新的子聚类加入到CF中

self.subclusters_.append(subcluster)

#初始化新子聚类的质心和平方和(将质心和平和方加入到列表中)

self.init_centroids_[n_samples] = subcluster.centroid_

self.init_sq_norm_[n_samples] = subcluster.sq_norm_

# Keep centroids and squared norm as views. In this way

# if we change init_centroids and init_sq_norm_, it is

# sufficient,

#更新最终的子聚类的质心和平方和(将质心和平和方加入到列表中)

self.centroids_ = self.init_centroids_[:n_samples + 1, :]

self.squared_norm_ = self.init_sq_norm_[:n_samples + 1]第二个函数:update_split_subclusters(self, subcluster,new_subcluster1, new_subcluster2):更新分裂节点

def update_split_subclusters(self, subcluster,

new_subcluster1, new_subcluster2):

"""Remove a subcluster from a node and update it with the

split subclusters.

"""

ind = self.subclusters_.index(subcluster)

self.subclusters_[ind] = new_subcluster1

self.init_centroids_[ind] = new_subcluster1.centroid_

self.init_sq_norm_[ind] = new_subcluster1.sq_norm_

self.append_subcluster(new_subcluster2)第三个函数:insert_cf_subcluster(self, subcluster):子聚类中插入CF特征

def insert_cf_subcluster(self, subcluster):

"""Insert a new subcluster into the node."""

# self.subclusters_不存在,则将新的子聚类加入到子聚类列表中

if not self.subclusters_:

self.append_subcluster(subcluster)

return False

threshold = self.threshold

branching_factor = self.branching_factor

# We need to find the closest subcluster among all the

# subclusters so that we can insert our new subcluster.

#计算距离矩阵

dist_matrix = np.dot(self.centroids_, subcluster.centroid_)

dist_matrix *= -2.

dist_matrix += self.squared_norm_

closest_index = np.argmin(dist_matrix)

closest_subcluster = self.subclusters_[closest_index]

# If the subcluster has a child, we need a recursive strategy.

#如果子聚类存在字迹,需要采用递归策略,更新CF参数

if closest_subcluster.child_ is not None:

split_child = closest_subcluster.child_.insert_cf_subcluster(

subcluster)

if not split_child:

# If it is determined that the child need not be split, we

# can just update the closest_subcluster

closest_subcluster.update(subcluster)

self.init_centroids_[closest_index] = \

self.subclusters_[closest_index].centroid_

self.init_sq_norm_[closest_index] = \

self.subclusters_[closest_index].sq_norm_

return False

# things not too good. we need to redistribute the subclusters in

# our child node, and add a new subcluster in the parent

# subcluster to accommodate the new child.

else:

new_subcluster1, new_subcluster2 = _split_node(

closest_subcluster.child_, threshold, branching_factor)

self.update_split_subclusters(

closest_subcluster, new_subcluster1, new_subcluster2)

if len(self.subclusters_) > self.branching_factor:

return True

return False

# good to go!

else:

#当子聚类的残差半径小于阈值时,更新CF参数

merged = closest_subcluster.merge_subcluster(

subcluster, self.threshold)

#如果merged存在,将新的子聚类加入到子聚类中,并更新子聚类的参数

if merged:

self.init_centroids_[closest_index] = \

closest_subcluster.centroid_

self.init_sq_norm_[closest_index] = \

closest_subcluster.sq_norm_

return False

# not close to any other subclusters, and we still

# have space, so add.

#如果子聚类的CF树超过分支因子数,分裂成新的子聚类加入到Node中

elif len(self.subclusters_) < self.branching_factor:

self.append_subcluster(subcluster)

return False

# We do not have enough space nor is it closer to an

# other subcluster. We need to split.

else:

self.append_subcluster(subcluster)

return True(4)CFSubcluster

| 参数 | 属性 | ||

| linear_sum:narray | 样本 | n_samples_ :int | 每个子聚类的样本数 |

| linear_sum_ : narray | 子聚类所有样本的线性和 | ||

| squared_sum_ : float | Sum of the squared l2 norms | ||

| centroids_ | 质心 | ||

| child_ | 孩子结点 | ||

| sq_norm_ | 子聚类的平方和 | ||

CFSubcluster有三个函数构成:

第一个函数:update(self, subcluster)更新数值(线性和、质心、平方和等数值)

def update(self, subcluster):

self.n_samples_ += subcluster.n_samples_

self.linear_sum_ += subcluster.linear_sum_

self.squared_sum_ += subcluster.squared_sum_

self.centroid_ = self.linear_sum_ / self.n_samples_

self.sq_norm_ = np.dot(self.centroid_, self.centroid_)第二个函数:merge_subcluster(self, nominee_cluster, threshold)连接subclustert

def merge_subcluster(self, nominee_cluster, threshold):

"""Check if a cluster is worthy enough to be merged. If

yes then merge.

"""

new_ss = self.squared_sum_ + nominee_cluster.squared_sum_

new_ls = self.linear_sum_ + nominee_cluster.linear_sum_

new_n = self.n_samples_ + nominee_cluster.n_samples_

new_centroid = (1 / new_n) * new_ls

new_norm = np.dot(new_centroid, new_centroid)

dot_product = (-2 * new_n) * new_norm

sq_radius = (new_ss + dot_product) / new_n + new_norm

if sq_radius <= threshold ** 2:

(self.n_samples_, self.linear_sum_, self.squared_sum_,

self.centroid_, self.sq_norm_) = \

new_n, new_ls, new_ss, new_centroid, new_norm

return True

return False第三个函数:radius(self):计算残差

def radius(self):

"""Return radius of the subcluster"""

dot_product = -2 * np.dot(self.linear_sum_, self.centroid_)

return sqrt(

((self.squared_sum_ + dot_product) / self.n_samples_) +

self.sq_norm_)

今天的文章聚类算法——Birch详解分享到此就结束了,感谢您的阅读。

版权声明:本文内容由互联网用户自发贡献,该文观点仅代表作者本人。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如发现本站有涉嫌侵权/违法违规的内容, 请发送邮件至 举报,一经查实,本站将立刻删除。

如需转载请保留出处:https://bianchenghao.cn/11971.html