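Video4Linux2 (Video for Linux Two, V4L2 for short) is the Linux driver framework for video devices. It gives user-space code a uniform interface to the underlying video hardware. V4L2 mainly supports three classes of devices: video input/output devices, VBI devices, and radio devices. They appear under /dev as videoX, vbiX, and radioX nodes respectively, where X is a number such as 0, 1, or 2. A USB camera is a common example of a video input device. Both FFmpeg and OpenCV support V4L2.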

When capturing video from a USB camera, the main header an application includes to call the V4L2 API is videodev2.h, located in /usr/include/linux.

The flow for capturing a USB video stream with V4L2 is described below. Reference code: https://linuxtv.org/downloads/v4l-dvb-apis-new/uapi/v4l/capture.c.html

1. Open the device by calling open_device:

(1). Call stat to look up the device by name; the result is stored in struct stat, which is defined as follows:

struct stat {

dev_t st_dev; /* ID of device containing file */

ino_t st_ino; /* inode number */

mode_t st_mode; /* protection */

nlink_t st_nlink; /* number of hard links */

uid_t st_uid; /* user ID of owner */

gid_t st_gid; /* group ID of owner */

dev_t st_rdev; /* device ID (if special file) */

off_t st_size; /* total size, in bytes */

blksize_t st_blksize; /* blocksize for file system I/O */

blkcnt_t st_blocks; /* number of 512B blocks allocated */

time_t st_atime; /* time of last access */

time_t st_mtime; /* time of last modification */

time_t st_ctime; /* time of last status change */

};

(2). Use the S_ISCHR macro to check whether the node is a character device;

(3). Call open to open the device read-write (O_RDWR) and non-blocking (O_NONBLOCK); open returns a file (device) descriptor.
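The stat and S_ISCHR check from step 1 can be tried on any path without a camera attached. The following is a minimal sketch; the helper name is_char_device is hypothetical and does not appear in the reference code.

```cpp
#include <cassert>
#include <sys/stat.h>
#include <sys/types.h>

// Returns true when `path` exists and names a character device.
// S_ISCHR is a macro that tests the file-type bits of st_mode.
bool is_char_device(const char* path)
{
    assert(path != nullptr);
    struct stat st;
    if (stat(path, &st) == -1)
        return false;              // missing node or no permission to stat it
    return S_ISCHR(st.st_mode);
}
```

On a typical Linux system is_char_device("/dev/null") is true while is_char_device("/") is false, so the same call can be used to reject a mistyped device path before open is attempted.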

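2. Initialize the device by calling init_device:

(1). Call ioctl, which manages a device's I/O channel, with VIDIOC_QUERYCAP to query the device capabilities; the result is stored in struct v4l2_capability, defined as follows: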

struct v4l2_capability {

__u8 driver[16]; // name of the driver module (e.g. "bttv")

__u8 card[32]; // name of the card (e.g. "Hauppauge WinTV")

__u8 bus_info[32]; // name of the bus (e.g. "PCI:" + pci_name(pci_dev) )

__u32 version; // KERNEL_VERSION

__u32 capabilities; // capabilities of the physical device as a whole

__u32 device_caps; // capabilities accessed via this particular device (node)

__u32 reserved[3]; // reserved fields for future extensions

};

(2). Check whether it is a video capture device: V4L2_CAP_VIDEO_CAPTURE;

(3). Check whether it supports streaming I/O: V4L2_CAP_STREAMING;
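The listing later in this article tests cap.capabilities only. As a hedged sketch, the check below prefers device_caps when the driver sets V4L2_CAP_DEVICE_CAPS, because capabilities describes the physical device as a whole while device_caps describes the opened node. The helper names node_caps and supports_streaming_capture are made up for illustration.

```cpp
#include <cassert>
#include <linux/videodev2.h>

// Picks the capability word that describes this device node.
// device_caps is only valid when V4L2_CAP_DEVICE_CAPS is set in capabilities.
__u32 node_caps(const v4l2_capability& cap)
{
    return (cap.capabilities & V4L2_CAP_DEVICE_CAPS) ? cap.device_caps
                                                     : cap.capabilities;
}

// True when the node can both capture video and use streaming I/O.
bool supports_streaming_capture(const v4l2_capability& cap)
{
    const __u32 need = V4L2_CAP_VIDEO_CAPTURE | V4L2_CAP_STREAMING;
    return (node_caps(cap) & need) == need;
}
```

The struct passed in would normally be the one filled by VIDIOC_QUERYCAP; the sketch only interprets the bits and performs no ioctl itself.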

(4). Call ioctl with VIDIOC_CROPCAP to query the device's cropping and scaling capabilities; the result is stored in struct v4l2_cropcap, defined as follows:

enum v4l2_buf_type {

V4L2_BUF_TYPE_VIDEO_CAPTURE = 1,

V4L2_BUF_TYPE_VIDEO_OUTPUT = 2,

V4L2_BUF_TYPE_VIDEO_OVERLAY = 3,

V4L2_BUF_TYPE_VBI_CAPTURE = 4,

V4L2_BUF_TYPE_VBI_OUTPUT = 5,

V4L2_BUF_TYPE_SLICED_VBI_CAPTURE = 6,

V4L2_BUF_TYPE_SLICED_VBI_OUTPUT = 7,

#if 1

/* Experimental */

V4L2_BUF_TYPE_VIDEO_OUTPUT_OVERLAY = 8,

#endif

V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE = 9,

V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE = 10,

/* Deprecated, do not use */

V4L2_BUF_TYPE_PRIVATE = 0x80,

};

struct v4l2_cropcap {

__u32 type; /* enum v4l2_buf_type */

struct v4l2_rect bounds;

struct v4l2_rect defrect;

struct v4l2_fract pixelaspect;

};

(5). Call ioctl with VIDIOC_S_CROP to set the current cropping rectangle; the rectangle is passed in struct v4l2_crop, defined as follows:

struct v4l2_crop {

__u32 type; /* enum v4l2_buf_type */

struct v4l2_rect c;

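};

(6). Call ioctl with VIDIOC_S_FMT to set the stream data format, including width, height, and pixel format. The request is passed in struct v4l2_format, and the driver may adjust the width and height it writes back. The structure is defined as follows: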

struct v4l2_pix_format {

__u32 width;

__u32 height;

__u32 pixelformat;

__u32 field; /* enum v4l2_field */

__u32 bytesperline; /* for padding, zero if unused */

__u32 sizeimage;

__u32 colorspace; /* enum v4l2_colorspace */

__u32 priv; /* private data, depends on pixelformat */

};

struct v4l2_format { // stream data format

__u32 type; // enum v4l2_buf_type; type of the data stream

union {

struct v4l2_pix_format pix; // V4L2_BUF_TYPE_VIDEO_CAPTURE, definition of an image format

struct v4l2_pix_format_mplane pix_mp; // V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE, definition of a multiplanar image format

struct v4l2_window win; // V4L2_BUF_TYPE_VIDEO_OVERLAY, definition of an overlaid image

struct v4l2_vbi_format vbi; // V4L2_BUF_TYPE_VBI_CAPTURE, raw VBI capture or output parameters

struct v4l2_sliced_vbi_format sliced; // V4L2_BUF_TYPE_SLICED_VBI_CAPTURE, sliced VBI capture or output parameters

__u8 raw_data[200]; // user-defined, placeholder for future extensions and custom formats

} fmt;

};

3. Because the I/O method is MMAP (memory mapping), init_mmap is called:

(1). Call ioctl with VIDIOC_REQBUFS to request memory-mapped I/O buffers; the request is passed in struct v4l2_requestbuffers, defined as follows:

enum v4l2_memory {

V4L2_MEMORY_MMAP = 1,

V4L2_MEMORY_USERPTR = 2,

V4L2_MEMORY_OVERLAY = 3,

V4L2_MEMORY_DMABUF = 4,

};

struct v4l2_requestbuffers {

__u32 count;

__u32 type; /* enum v4l2_buf_type */

__u32 memory; /* enum v4l2_memory */

__u32 reserved[2];

};

(2). Call ioctl with VIDIOC_QUERYBUF to query the state of each buffer; the result is stored in struct v4l2_buffer, defined as follows:

/**

* struct v4l2_buffer - video buffer info

* @index: id number of the buffer

* @type: enum v4l2_buf_type; buffer type (type == *_MPLANE for

* multiplanar buffers);

* @bytesused: number of bytes occupied by data in the buffer (payload);

* unused (set to 0) for multiplanar buffers

* @flags: buffer informational flags

* @field: enum v4l2_field; field order of the image in the buffer

* @timestamp: frame timestamp

* @timecode: frame timecode

* @sequence: sequence count of this frame

* @memory: enum v4l2_memory; the method, in which the actual video data is

* passed

* @offset: for non-multiplanar buffers with memory == V4L2_MEMORY_MMAP;

* offset from the start of the device memory for this plane,

* (or a "cookie" that should be passed to mmap() as offset)

* @userptr: for non-multiplanar buffers with memory == V4L2_MEMORY_USERPTR;

* a userspace pointer pointing to this buffer

* @fd: for non-multiplanar buffers with memory == V4L2_MEMORY_DMABUF;

* a userspace file descriptor associated with this buffer

* @planes: for multiplanar buffers; userspace pointer to the array of plane

* info structs for this buffer

* @length: size in bytes of the buffer (NOT its payload) for single-plane

* buffers (when type != *_MPLANE); number of elements in the

* planes array for multi-plane buffers

* @input: input number from which the video data has been captured

*

* Contains data exchanged by application and driver using one of the Streaming

* I/O methods.

*/

struct v4l2_buffer {

__u32 index;

__u32 type;

__u32 bytesused;

__u32 flags;

__u32 field;

struct timeval timestamp;

struct v4l2_timecode timecode;

__u32 sequence;

/* memory location */

__u32 memory;

union {

__u32 offset;

unsigned long userptr;

struct v4l2_plane *planes;

__s32 fd;

} m;

__u32 length;

__u32 reserved2;

__u32 reserved;

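};

(3). Call mmap to map the frame buffers into the application's address space. Memory mapping is typically used when a file is read and written frequently: plain memory accesses replace read/write I/O calls, which gives better performance.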
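To see the mmap call shape from step 3 without a camera, the sketch below maps a temporary file instead of a video node. That substitution is an assumption made purely for illustration, and the names map_region and mmap_roundtrip are hypothetical.

```cpp
#include <cassert>
#include <cstdio>
#include <cstring>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

// Maps `length` bytes of `fd` starting at `offset`, the same call shape that
// init_mmap uses with buf.length and buf.m.offset. Returns nullptr on failure.
void* map_region(int fd, size_t length, off_t offset)
{
    assert(length > 0);                       // mmap rejects a zero length
    void* p = mmap(nullptr, length, PROT_READ | PROT_WRITE, MAP_SHARED, fd, offset);
    return p == MAP_FAILED ? nullptr : p;
}

// Writes a marker through a shared mapping of a temporary file, then reads it
// back with pread() to show that both views refer to the same bytes.
bool mmap_roundtrip()
{
    FILE* tmp = tmpfile();                    // stand-in for the device (assumption)
    if (!tmp)
        return false;
    int fd = fileno(tmp);
    const size_t len = 4096;
    if (ftruncate(fd, static_cast<off_t>(len)) != 0) {
        fclose(tmp);
        return false;
    }
    char* p = static_cast<char*>(map_region(fd, len, 0));
    if (!p) {
        fclose(tmp);
        return false;
    }
    memcpy(p, "frame", 5);                    // plain memory write, no write() call
    char back[5] = {0};
    bool ok = pread(fd, back, 5, 0) == 5 && memcmp(back, "frame", 5) == 0;
    munmap(p, len);
    fclose(tmp);
    return ok;
}
```

With a real V4L2 node the direction is reversed: the driver fills the mapped buffer and the application reads the frame directly from memory.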

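4. Start capturing by calling start_capturing:

(1). Call ioctl with VIDIOC_QBUF to enqueue each buffer, described by struct v4l2_buffer;

(2). Call ioctl with VIDIOC_STREAMON to start streaming I/O; the stream type is passed as an enum v4l2_buf_type.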
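The queue and dequeue calls are easier to follow with a picture of who owns each buffer. The class below is a toy model only: it never talks to a driver, the name BufferQueueModel is invented here, and the first-in, first-out order is a simplifying assumption about how filled buffers come back.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Toy model of buffer ownership between an application and a driver.
class BufferQueueModel {
public:
    explicit BufferQueueModel(std::size_t n) : queued_(n, false) {}

    // Mirrors VIDIOC_QBUF: hand buffer `index` to the "driver".
    // Fails for an unknown index or a buffer that is already queued.
    bool qbuf(std::size_t index)
    {
        if (index >= queued_.size() || queued_[index])
            return false;
        queued_[index] = true;
        fifo_.push_back(index);
        return true;
    }

    // Mirrors VIDIOC_DQBUF: take back the oldest queued buffer.
    // Returns -1 when nothing is queued; the real call on a non-blocking
    // descriptor would report EAGAIN in that situation.
    int dqbuf()
    {
        if (fifo_.empty())
            return -1;
        std::size_t index = fifo_.front();
        fifo_.pop_front();
        assert(queued_[index]);               // invariant: it was owned by the driver
        queued_[index] = false;
        return static_cast<int>(index);
    }

private:
    std::vector<bool> queued_;                // true while the "driver" owns the buffer
    std::deque<std::size_t> fifo_;            // order in which buffers were queued
};
```

Queuing all four buffers up front, as start_capturing does, corresponds to four qbuf calls before the first dqbuf; each later dqbuf is followed by a qbuf of the same index so the pool never runs dry.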

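5. Run the main loop by calling mainloop:

(1). Use FD_ZERO to clear the descriptor set, then FD_SET to add the device descriptor to it;

(2). Call select to wait until the descriptor becomes readable or the timeout expires;

(3). Call ioctl with VIDIOC_DQBUF to dequeue a filled buffer; its description is returned in struct v4l2_buffer;

(4). Write the frame data to the output file;

(5). Call ioctl with VIDIOC_QBUF to hand the buffer back to the driver.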
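The FD_ZERO, FD_SET, and select sequence from step 5 can be isolated into a small helper. This is a sketch under assumptions: wait_readable and demo_wait_on_pipe are hypothetical names, and a pipe stands in for the video node so the code runs without hardware.

```cpp
#include <cassert>
#include <cerrno>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

// Waits until fd is readable or the timeout expires.
// Returns 1 when readable, 0 on timeout, -1 on any error other than EINTR.
int wait_readable(int fd, long sec, long usec)
{
    assert(fd >= 0 && fd < FD_SETSIZE);       // FD_SET is undefined outside this range
    for (;;) {
        fd_set fds;
        FD_ZERO(&fds);
        FD_SET(fd, &fds);

        struct timeval tv;                    // rebuilt each pass: select may modify it
        tv.tv_sec = sec;
        tv.tv_usec = usec;

        int r = select(fd + 1, &fds, nullptr, nullptr, &tv);
        if (r == -1 && errno == EINTR)
            continue;                         // interrupted by a signal, retry
        return r > 0 ? 1 : r;
    }
}

// Exercises wait_readable on a pipe, which stands in for the video node.
bool demo_wait_on_pipe()
{
    int p[2];
    if (pipe(p) != 0)
        return false;
    bool timed_out = wait_readable(p[0], 0, 0) == 0;   // nothing written yet
    bool wrote = write(p[1], "x", 1) == 1;
    bool ready = wait_readable(p[0], 1, 0) == 1;        // one byte is pending
    close(p[0]);
    close(p[1]);
    return timed_out && wrote && ready;
}
```

In the capture loop a return value of 1 would be followed by the VIDIOC_DQBUF call, and a return value of 0 corresponds to the "select timeout" branch of the listing.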

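6. Stop capturing by calling stop_capturing:

(1). Call ioctl with VIDIOC_STREAMOFF to stop streaming I/O; the stream type is passed as an enum v4l2_buf_type.

7. Call uninit_device:

(1). Call munmap to unmap the device memory;

(2). Call free to release the memory allocated with calloc.

8. Close the device by calling close_device:

(1). Call close to close the device.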

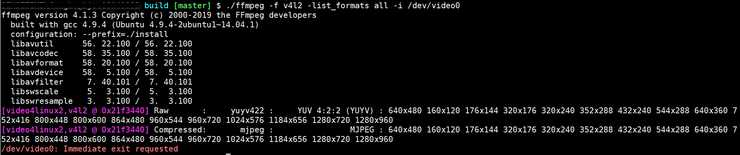

这里通过ffmpeg查看usb摄像头支持的编码类型,执行结果如下图所示,编码类型支持Raw和Mjpeg两种,对应的像素格式为V4L2_PIX_FMT_YUYV和V4L2_PIX_FMT_MJPEG:
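A V4L2 pixel format is a 32-bit code built from four ASCII characters, with the first character in the lowest byte. The sketch below unpacks such a code back into text, which is handy when logging the format a driver actually selected. The function name fourcc_to_string is hypothetical.

```cpp
#include <cassert>
#include <string>
#include <linux/videodev2.h>

// Unpacks a V4L2 pixel-format code into its four ASCII characters.
// v4l2_fourcc() stores the first character in the lowest byte.
std::string fourcc_to_string(__u32 code)
{
    std::string s(4, ' ');
    for (int i = 0; i < 4; ++i)
        s[i] = static_cast<char>((code >> (8 * i)) & 0xFF);
    return s;
}
```

For the two formats named above, fourcc_to_string(V4L2_PIX_FMT_YUYV) yields "YUYV" and fourcc_to_string(V4L2_PIX_FMT_MJPEG) yields "MJPG".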

The test code is as follows:

#include "funset.hpp"

#include <string.h>

#include <assert.h>

#include <stdio.h>

#include <stdlib.h>

#include <iostream>

#ifndef _MSC_VER

#include <fcntl.h>

#include <unistd.h>

#include <errno.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <sys/time.h>

#include <sys/mman.h>

#include <sys/ioctl.h>

#include <linux/videodev2.h>

namespace {

#define CLEAR(x) memset(&(x), 0, sizeof(x))

enum io_method {

IO_METHOD_READ,

IO_METHOD_MMAP,

IO_METHOD_USERPTR,

};

struct buffer {

void *start;

size_t length;

};

const char* dev_name; // points at a string literal, so it must be const in C++

enum io_method io = IO_METHOD_MMAP;

int fd = -1;

struct buffer* buffers;

unsigned int n_buffers;

int out_buf;

int force_format = 1;

int frame_count = 10;

int width = 640;

int height = 480;

FILE* f;

void errno_exit(const char *s)

{

fprintf(stderr, "%s error %d, %s\n", s, errno, strerror(errno));

exit(EXIT_FAILURE);

}

int xioctl(int fh, unsigned long request, void *arg)

{

int r;

do {

r = ioctl(fh, request, arg);

} while (-1 == r && EINTR == errno);

return r;

}

void process_image(const void *p, int size)

{

/*if (out_buf)

fwrite(p, size, 1, stdout);

fflush(stderr);

fprintf(stderr, ".");

fflush(stdout);*/

fwrite(p, size, 1, f);

}

int read_frame(void)

{

struct v4l2_buffer buf;

unsigned int i;

switch (io) {

case IO_METHOD_READ:

if (-1 == read(fd, buffers[0].start, buffers[0].length)) {

switch (errno) {

case EAGAIN:

return 0;

case EIO:

// Could ignore EIO, see spec. fall through

default:

errno_exit("read");

}

}

process_image(buffers[0].start, buffers[0].length);

break;

case IO_METHOD_MMAP:

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf)) {

switch (errno) {

case EAGAIN:

return 0;

case EIO:

// Could ignore EIO, see spec. fall through

default:

errno_exit("VIDIOC_DQBUF");

}

}

assert(buf.index < n_buffers);

process_image(buffers[buf.index].start, buf.bytesused);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

errno_exit("VIDIOC_QBUF");

break;

case IO_METHOD_USERPTR:

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_USERPTR;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf)) {

switch (errno) {

case EAGAIN:

return 0;

case EIO:

// Could ignore EIO, see spec. fall through

default:

errno_exit("VIDIOC_DQBUF");

}

}

for (i = 0; i < n_buffers; ++i)

if (buf.m.userptr == (unsigned long)buffers[i].start && buf.length == buffers[i].length)

break;

assert(i < n_buffers);

process_image((void *)buf.m.userptr, buf.bytesused);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

errno_exit("VIDIOC_QBUF");

break;

}

return 1;

}

void mainloop(void)

{

unsigned int count = frame_count;

while (count-- > 0) {

for (;;) {

fd_set fds;

struct timeval tv;

int r;

FD_ZERO(&fds);

FD_SET(fd, &fds);

// Timeout

tv.tv_sec = 2;

tv.tv_usec = 0;

r = select(fd + 1, &fds, NULL, NULL, &tv);

if (-1 == r) {

if (EINTR == errno)

continue;

errno_exit("select");

}

if (0 == r) {

fprintf(stderr, "select timeout\n");

exit(EXIT_FAILURE);

}

if (read_frame())

break;

// EAGAIN - continue select loop

}

}

}

void stop_capturing(void)

{

enum v4l2_buf_type type;

switch (io) {

case IO_METHOD_READ:

// Nothing to do

break;

case IO_METHOD_MMAP:

case IO_METHOD_USERPTR:

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMOFF, &type))

errno_exit("VIDIOC_STREAMOFF");

break;

}

}

void start_capturing(void)

{

unsigned int i;

enum v4l2_buf_type type;

switch (io) {

case IO_METHOD_READ:

// Nothing to do

break;

case IO_METHOD_MMAP:

for (i = 0; i < n_buffers; ++i) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

errno_exit("VIDIOC_QBUF");

}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMON, &type))

errno_exit("VIDIOC_STREAMON");

break;

case IO_METHOD_USERPTR:

for (i = 0; i < n_buffers; ++i) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_USERPTR;

buf.index = i;

buf.m.userptr = (unsigned long)buffers[i].start;

buf.length = buffers[i].length;

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf))

errno_exit("VIDIOC_QBUF");

}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMON, &type))

errno_exit("VIDIOC_STREAMON");

break;

}

f = fopen("usb.yuv", "w");

if (!f) {

errno_exit("fail to open file");

}

}

void uninit_device(void)

{

unsigned int i;

switch (io) {

case IO_METHOD_READ:

free(buffers[0].start);

break;

case IO_METHOD_MMAP:

for (i = 0; i < n_buffers; ++i)

if (-1 == munmap(buffers[i].start, buffers[i].length))

errno_exit("munmap");

break;

case IO_METHOD_USERPTR:

for (i = 0; i < n_buffers; ++i)

free(buffers[i].start);

break;

}

free(buffers);

fclose(f);

}

void init_read(unsigned int buffer_size)

{

buffers = (struct buffer*)calloc(1, sizeof(*buffers));

if (!buffers) {

fprintf(stderr, "Out of memory\n");

exit(EXIT_FAILURE);

}

buffers[0].length = buffer_size;

buffers[0].start = malloc(buffer_size);

if (!buffers[0].start) {

fprintf(stderr, "Out of memory\n");

exit(EXIT_FAILURE);

}

}

void init_mmap(void)

{

struct v4l2_requestbuffers req;

CLEAR(req);

req.count = 4;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_REQBUFS, &req)) {

if (EINVAL == errno) {

fprintf(stderr, "%s does not support memory mapping\n", dev_name);

exit(EXIT_FAILURE);

} else {

errno_exit("VIDIOC_REQBUFS");

}

}

if (req.count < 2) {

fprintf(stderr, "Insufficient buffer memory on %s\n", dev_name);

exit(EXIT_FAILURE);

}

buffers = (struct buffer*)calloc(req.count, sizeof(*buffers));

if (!buffers) {

fprintf(stderr, "Out of memory\n");

exit(EXIT_FAILURE);

}

for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = n_buffers;

if (-1 == xioctl(fd, VIDIOC_QUERYBUF, &buf))

errno_exit("VIDIOC_QUERYBUF");

buffers[n_buffers].length = buf.length;

buffers[n_buffers].start =

mmap(NULL, // start anywhere

buf.length,

PROT_READ | PROT_WRITE, // required

MAP_SHARED, // recommended

fd, buf.m.offset);

if (MAP_FAILED == buffers[n_buffers].start)

errno_exit("mmap");

}

}

void init_userp(unsigned int buffer_size)

{

struct v4l2_requestbuffers req;

CLEAR(req);

req.count = 4;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_USERPTR;

if (-1 == xioctl(fd, VIDIOC_REQBUFS, &req)) {

if (EINVAL == errno) {

fprintf(stderr, "%s does not support user pointer i/o\n", dev_name);

exit(EXIT_FAILURE);

} else {

errno_exit("VIDIOC_REQBUFS");

}

}

buffers = (struct buffer*)calloc(4, sizeof(*buffers));

if (!buffers) {

fprintf(stderr, "Out of memory\n");

exit(EXIT_FAILURE);

}

for (n_buffers = 0; n_buffers < 4; ++n_buffers) {

buffers[n_buffers].length = buffer_size;

buffers[n_buffers].start = malloc(buffer_size);

if (!buffers[n_buffers].start) {

fprintf(stderr, "Out of memory\n");

exit(EXIT_FAILURE);

}

}

}

void init_device(void)

{

struct v4l2_capability cap;

struct v4l2_cropcap cropcap;

struct v4l2_crop crop;

struct v4l2_format fmt;

unsigned int min;

if (-1 == xioctl(fd, VIDIOC_QUERYCAP, &cap)) {

if (EINVAL == errno) {

fprintf(stderr, "%s is no V4L2 device\n", dev_name);

exit(EXIT_FAILURE);

} else {

errno_exit("VIDIOC_QUERYCAP");

}

}

if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {

fprintf(stderr, "%s is no video capture device\n", dev_name);

exit(EXIT_FAILURE);

}

switch (io) {

case IO_METHOD_READ:

if (!(cap.capabilities & V4L2_CAP_READWRITE)) {

fprintf(stderr, "%s does not support read i/o\n", dev_name);

exit(EXIT_FAILURE);

}

break;

case IO_METHOD_MMAP:

case IO_METHOD_USERPTR:

if (!(cap.capabilities & V4L2_CAP_STREAMING)) {

fprintf(stderr, "%s does not support streaming i/o\n", dev_name);

exit(EXIT_FAILURE);

}

break;

}

// Select video input, video standard and tune here

CLEAR(cropcap);

cropcap.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (0 == xioctl(fd, VIDIOC_CROPCAP, &cropcap)) {

crop.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

crop.c = cropcap.defrect; // reset to default

if (-1 == xioctl(fd, VIDIOC_S_CROP, &crop)) {

switch (errno) {

case EINVAL:

// Cropping not supported

break;

default:

// Errors ignored

break;

}

}

} else {

// Errors ignored

}

CLEAR(fmt);

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (force_format) {

fmt.fmt.pix.width = width;

fmt.fmt.pix.height = height;

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

// Note VIDIOC_S_FMT may change width and height

if (-1 == xioctl(fd, VIDIOC_S_FMT, &fmt))

errno_exit("VIDIOC_S_FMT");

} else {

// Preserve original settings as set by v4l2-ctl for example

if (-1 == xioctl(fd, VIDIOC_G_FMT, &fmt))

errno_exit("VIDIOC_G_FMT");

}

// Buggy driver paranoia

min = fmt.fmt.pix.width * 2;

if (fmt.fmt.pix.bytesperline < min)

fmt.fmt.pix.bytesperline = min;

min = fmt.fmt.pix.bytesperline * fmt.fmt.pix.height;

if (fmt.fmt.pix.sizeimage < min)

fmt.fmt.pix.sizeimage = min;

switch (io) {

case IO_METHOD_READ:

init_read(fmt.fmt.pix.sizeimage);

break;

case IO_METHOD_MMAP:

init_mmap();

break;

case IO_METHOD_USERPTR:

init_userp(fmt.fmt.pix.sizeimage);

break;

}

}

void close_device(void)

{

if (-1 == close(fd))

errno_exit("close");

fd = -1;

}

void open_device(void)

{

struct stat st;

if (-1 == stat(dev_name, &st)) {

fprintf(stderr, "Cannot identify '%s': %d, %s\n", dev_name, errno, strerror(errno));

exit(EXIT_FAILURE);

}

if (!S_ISCHR(st.st_mode)) {

fprintf(stderr, "%s is no device\n", dev_name);

exit(EXIT_FAILURE);

}

fd = open(dev_name, O_RDWR | O_NONBLOCK, 0); // O_RDWR: required

if (-1 == fd) {

fprintf(stderr, "Cannot open '%s': %d, %s\n", dev_name, errno, strerror(errno));

exit(EXIT_FAILURE);

}

}

} // namespace

int test_v4l2_usb_stream()

{

// reference: https://linuxtv.org/downloads/v4l-dvb-apis-new/uapi/v4l/capture.c.html

dev_name = "/dev/video0";

open_device();

init_device();

start_capturing();

mainloop();

stop_capturing();

uninit_device();

close_device();

fprintf(stdout, "test finish\n");

return 0;

}

#else

int test_v4l2_usb_stream()

{

fprintf(stderr, "Error: this test code only supports the Linux platform\n");

return -1;

}

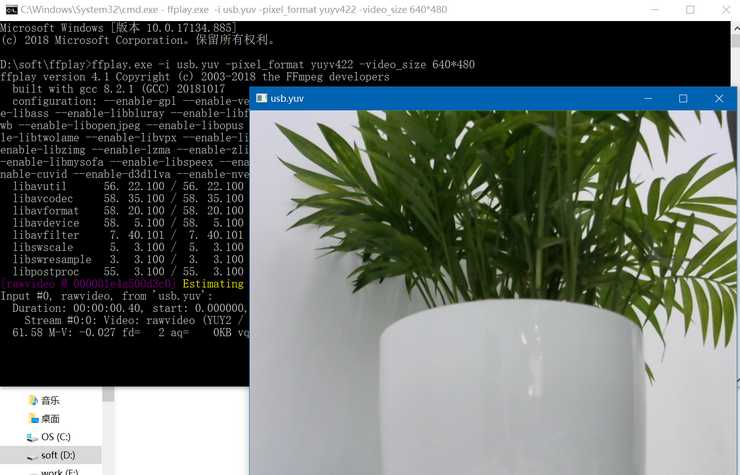

#endif通过ffplay播放生成的usb.yuv文件,执行结果如下:
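A raw YUYV dump carries no header, so the player has to be told the frame geometry, and the expected file size is a quick sanity check on the capture. The sketch below computes that size under the assumption that the driver kept the requested 640x480 YUYV format and added no per-line padding. The function names are hypothetical, and the article does not show the exact player command, so an invocation such as `ffplay -f rawvideo -pixel_format yuyv422 -video_size 640x480 usb.yuv` is a plausible choice rather than the author's.

```cpp
#include <cassert>
#include <cstddef>

// Bytes in one packed YUYV (YUV 4:2:2) frame: two pixels share four bytes,
// so each pixel costs two bytes. Assumes no per-line padding.
std::size_t yuyv_frame_bytes(std::size_t width, std::size_t height)
{
    assert(width % 2 == 0);                   // YUYV packs pixels in horizontal pairs
    return width * 2 * height;
}

// Expected size of a dump that holds `frames` unpadded frames.
std::size_t yuyv_dump_bytes(std::size_t width, std::size_t height, std::size_t frames)
{
    return yuyv_frame_bytes(width, height) * frames;
}
```

With the listing's defaults (width 640, height 480, frame_count 10) this gives 614400 bytes per frame and 6144000 bytes for the file; a different size on disk suggests the driver changed the format in VIDIOC_S_FMT.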

GitHub: https://github.com//fengbingchun/OpenCV_Test
