
Installing mistletoe

pip3 install mistletoe

Using mistletoe

import mistletoe

with open('D:/GZUniversity/Project/prettifyMarkdown/example.md', 'r', encoding='utf-8') as fin:
    rendered = mistletoe.markdown(fin)

mistletoe entry point

# iterable: the file handle returned by open() (a str also works, see Document.__init__)
# renderer: the concrete renderer class
def markdown(iterable, renderer=HTMLRenderer):
    """
    Output HTML with default settings.
    Enables inline and block-level HTML tags.
    """
    with renderer() as renderer:
        return renderer.render(Document(iterable))

mistletoe source code walkthrough

document

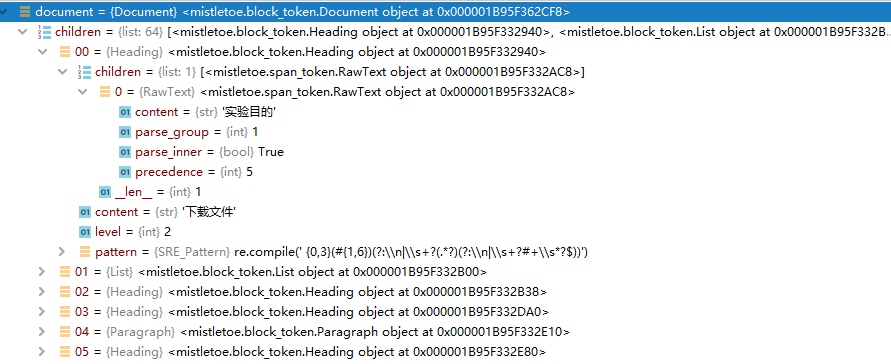

Document 是包 mistletoe.block_token 中的工具类,其作用是将 .md 文件生成抽象语法树,语法树如下图所示:

from mistletoe.block_token import Document
# targetRender is a project-local renderer module (not shipped with mistletoe)
from targetRender import targetRender

with open('D:/GZUniversity/Project/prettifyMarkdown/example.md', 'r', encoding='utf-8') as fin:
    # Document(fin) builds the abstract syntax tree of the source file
    document = Document(fin)
    targetRender().render(document)

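Each entry in children represents one basic building block of the Markdown file, such as a heading, a paragraph, or a code block. (An image is a span-level token, so at the top level it appears inside a Paragraph.)

Once the file has been parsed into an AST, the tree is handed to a renderer for processing.

The classes below correspond to the individual Markdown elements; for example, class Heading(BlockToken) corresponds to a Markdown heading.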

Document

The document itself, which contains every other element. It can be thought of as the root node.

class Document(BlockToken):
    """
    Document token.
    """
    def __init__(self, lines):
        if isinstance(lines, str):
            lines = lines.splitlines(keepends=True)
        lines = [line if line.endswith('\n') else '{}\n'.format(line) for line in lines]
        self.footnotes = {}
        global _root_node
        _root_node = self
        span_token._root_node = self
        self.children = tokenize(lines)
        span_token._root_node = None
        _root_node = None
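
To make the first two statements of Document.__init__ concrete, here is a minimal standard-library sketch of the line normalization step on its own. The helper name normalize_lines is hypothetical and is not part of mistletoe.

```python
def normalize_lines(lines):
    """Return a list of lines that each end with a newline.

    Mirrors the start of Document.__init__: a str is split into lines
    (keeping line endings), and any line without a trailing newline
    gets one appended. normalize_lines is a hypothetical name.
    """
    if isinstance(lines, str):
        lines = lines.splitlines(keepends=True)
    return [line if line.endswith('\n') else '{}\n'.format(line) for line in lines]


# A str and an iterable of lines (such as a file handle) both work.
from_string = normalize_lines('# title\nlast line')
from_lines = normalize_lines(['a\n', 'b'])
```

This is why both a file handle and a plain string can be passed to Document: after this step the tokenizer always sees newline-terminated lines.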

Heading

class Heading(BlockToken):
    """
    Heading token. (["### some heading ###\\n"])
    Boundary between span-level and block-level tokens.

    Attributes:
        level (int): heading level.
        children (list): inner tokens.
    """
    pattern = re.compile(r' {0,3}(#{1,6})(?:\n|\s+?(.*?)(?:\n|\s+?#+\s*?$))')
    level = 0
    content = ''

    def __init__(self, match):
        self.level, content = match
        super().__init__(content, span_token.tokenize_inner)

    @classmethod
    def start(cls, line):
        match_obj = cls.pattern.match(line)
        if match_obj is None:
            return False
        cls.level = len(match_obj.group(1))
        cls.content = (match_obj.group(2) or '').strip()
        if set(cls.content) == {'#'}:
            cls.content = ''
        return True

    @classmethod
    def read(cls, lines):
        next(lines)
        return cls.level, cls.content
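
As a rough illustration of how Heading.start recognizes an ATX heading, the sketch below reuses the regular expression from the class above in a standalone function. parse_heading is a hypothetical helper (not a mistletoe API) that returns the result instead of storing it on the class.

```python
import re

# Pattern copied from the Heading class shown above.
HEADING_PATTERN = re.compile(r' {0,3}(#{1,6})(?:\n|\s+?(.*?)(?:\n|\s+?#+\s*?$))')


def parse_heading(line):
    """Return (level, content) for an ATX heading line, or None.

    Hypothetical helper: same logic as Heading.start, but written as a
    pure function rather than one that sets class attributes.
    """
    match_obj = HEADING_PATTERN.match(line)
    if match_obj is None:
        return None
    level = len(match_obj.group(1))           # number of leading '#'
    content = (match_obj.group(2) or '').strip()
    if set(content) == {'#'}:                 # e.g. "### ###" has no content
        content = ''
    return level, content
```

In the original class, start() caches level and content as class attributes and read() simply consumes the line and returns the cached pair, so the two calls must happen back to back.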

SetextHeading

class SetextHeading(BlockToken):
    """
    Setext headings.

    Not included in the parsing process, but called by Paragraph.__new__.
    """
    def __init__(self, lines):
        self.level = 1 if lines.pop().lstrip().startswith('=') else 2
        content = '\n'.join([line.strip() for line in lines])
        super().__init__(content, span_token.tokenize_inner)

    @classmethod
    def start(cls, line):
        raise NotImplementedError()

    @classmethod
    def read(cls, lines):
        raise NotImplementedError()

Quote

class Quote(BlockToken):
    """
    Quote token. (["> # heading\\n", "> paragraph\\n"])
    """
    def __init__(self, parse_buffer):
        # span-level tokenizing happens here.
        self.children = tokenizer.make_tokens(parse_buffer)

    @staticmethod
    def start(line):
        stripped = line.lstrip(' ')
        if len(line) - len(stripped) > 3:
            return False
        return stripped.startswith('>')

    @classmethod
    def read(cls, lines):
        # first line
        line = cls.convert_leading_tabs(next(lines).lstrip()).split('>', 1)[1]
        if len(line) > 0 and line[0] == ' ':
            line = line[1:]
        line_buffer = [line]

        # set booleans
        in_code_fence = CodeFence.start(line)
        in_block_code = BlockCode.start(line)
        blank_line = line.strip() == ''

        # loop
        next_line = lines.peek()
        while (next_line is not None
                and next_line.strip() != ''
                and not Heading.start(next_line)
                and not CodeFence.start(next_line)
                and not ThematicBreak.start(next_line)
                and not List.start(next_line)):
            stripped = cls.convert_leading_tabs(next_line.lstrip())
            prepend = 0
            if stripped[0] == '>':
                # has leader, not lazy continuation
                prepend += 1
                if stripped[1] == ' ':
                    prepend += 1
                stripped = stripped[prepend:]
                in_code_fence = CodeFence.start(stripped)
                in_block_code = BlockCode.start(stripped)
                blank_line = stripped.strip() == ''
                line_buffer.append(stripped)
            elif in_code_fence or in_block_code or blank_line:
                # not paragraph continuation text
                break
            else:
                # lazy continuation, preserve whitespace
                line_buffer.append(next_line)
            next(lines)
            next_line = lines.peek()

        # block level tokens are parsed here, so that footnotes
        # in quotes can be recognized before span-level tokenizing.
        Paragraph.parse_setext = False
        parse_buffer = tokenizer.tokenize_block(line_buffer, _token_types)
        Paragraph.parse_setext = True
        return parse_buffer

    @staticmethod
    def convert_leading_tabs(string):
        string = string.replace('>\t', '   ', 1)
        count = 0
        for i, c in enumerate(string):
            if c == '\t':
                count += 4
            elif c == ' ':
                count += 1
            else:
                break
        if i == 0:
            return string
        return '>' + ' ' * count + string[i:]
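
The two simplest pieces of Quote can be tried in isolation. The sketch below restates Quote.start and the first-line marker stripping from Quote.read as plain functions; starts_quote and strip_marker are hypothetical names, and the tab handling of convert_leading_tabs is deliberately left out.

```python
def starts_quote(line):
    """True if the line opens a block quote (at most 3 leading spaces, then '>')."""
    stripped = line.lstrip(' ')
    if len(line) - len(stripped) > 3:
        return False  # 4+ spaces means indented code, not a quote
    return stripped.startswith('>')


def strip_marker(line):
    """Drop the leading '>' and one optional following space.

    Simplified from the first lines of Quote.read; assumes the line
    contains no tabs and has already passed starts_quote.
    """
    rest = line.lstrip().split('>', 1)[1]
    if len(rest) > 0 and rest[0] == ' ':
        rest = rest[1:]
    return rest
```

The stripped lines are what Quote.read collects in line_buffer before handing them back to the block tokenizer, which is how a quote can contain headings, lists, and other block tokens.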

Paragraph

class Paragraph(BlockToken):
    """
    Paragraph token. (["some\\n", "continuous\\n", "lines\\n"])
    Boundary between span-level and block-level tokens.
    """
    setext_pattern = re.compile(r' {0,3}(=|-)+ *$')
    parse_setext = True  # can be disabled by Quote

    def __new__(cls, lines):
        if not isinstance(lines, list):
            # setext heading token, return directly
            return lines
        return super().__new__(cls)

    def __init__(self, lines):
        content = ''.join([line.lstrip() for line in lines]).strip()
        super().__init__(content, span_token.tokenize_inner)

    @staticmethod
    def start(line):
        return line.strip() != ''

    @classmethod
    def read(cls, lines):
        line_buffer = [next(lines)]
        next_line = lines.peek()
        while (next_line is not None
                and next_line.strip() != ''
                and not Heading.start(next_line)
                and not CodeFence.start(next_line)
                and not Quote.start(next_line)):

            # check if next_line starts List
            list_pair = ListItem.parse_marker(next_line)
            if (len(next_line) - len(next_line.lstrip()) < 4
                    and list_pair is not None):
                prepend, leader = list_pair
                # non-empty list item
                if next_line[:prepend].endswith(' '):
                    # unordered list, or ordered list starting from 1
                    if not leader[:-1].isdigit() or leader[:-1] == '1':
                        break

            # check if next_line starts HTMLBlock other than type 7
            html_block = HTMLBlock.start(next_line)
            if html_block and html_block != 7:
                break

            # check if we see a setext underline
            if cls.parse_setext and cls.is_setext_heading(next_line):
                line_buffer.append(next(lines))
                return SetextHeading(line_buffer)

            # check if we have a ThematicBreak (has to be after setext)
            if ThematicBreak.start(next_line):
                break

            # no other tokens, we're good
            line_buffer.append(next(lines))
            next_line = lines.peek()
        return line_buffer

    @classmethod
    def is_setext_heading(cls, line):
        return cls.setext_pattern.match(line)
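
Paragraph and SetextHeading cooperate: Paragraph.read spots the underline, and SetextHeading.__init__ derives the level from it. A minimal sketch of that pair of checks, using the pattern from the class above; setext_level is a hypothetical helper name.

```python
import re

# Pattern copied from Paragraph.setext_pattern shown above.
SETEXT_PATTERN = re.compile(r' {0,3}(=|-)+ *$')


def setext_level(line):
    """Return 1 for an '=' underline, 2 for a '-' underline, else None.

    Hypothetical helper combining Paragraph.is_setext_heading with the
    level rule from SetextHeading.__init__.
    """
    if SETEXT_PATTERN.match(line) is None:
        return None
    return 1 if line.lstrip().startswith('=') else 2
```

Because Paragraph.read returns a SetextHeading instance in this case, Paragraph.__new__ receives a non-list argument and returns it unchanged, which is how the paragraph silently becomes a heading.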

BlockCode

class BlockCode(BlockToken):
    """
    Indented code.

    Attributes:
        children (list): contains a single span_token.RawText token.
        language (str): always the empty string.
    """
    def __init__(self, lines):
        self.language = ''
        self.children = (span_token.RawText(''.join(lines).strip('\n')+'\n'),)

    @staticmethod
    def start(line):
        return line.replace('\t', '    ', 1).startswith('    ')

    @classmethod
    def read(cls, lines):
        line_buffer = []
        for line in lines:
            if line.strip() == '':
                line_buffer.append(line.lstrip(' ') if len(line) < 5 else line[4:])
                continue
            if not line.replace('\t', '    ', 1).startswith('    '):
                lines.backstep()
                break
            line_buffer.append(cls.strip(line))
        return line_buffer

    @staticmethod
    def strip(string):
        count = 0
        for i, c in enumerate(string):
            if c == '\t':
                return string[i+1:]
            elif c == ' ':
                count += 1
            else:
                break
            if count == 4:
                return string[i+1:]
        return string

CodeFence

class CodeFence(BlockToken):
    """
    Code fence. (["```sh\\n", "rm -rf /", ..., "```"])
    Boundary between span-level and block-level tokens.

    Attributes:
        children (list): contains a single span_token.RawText token.
        language (str): language of code block (default to empty).
    """
    pattern = re.compile(r'( {0,3})((?:`|~){3,}) *(\S*)')
    _open_info = None

    def __init__(self, match):
        lines, open_info = match
        self.language = span_token.EscapeSequence.strip(open_info[2])
        self.children = (span_token.RawText(''.join(lines)),)

    @classmethod
    def start(cls, line):
        match_obj = cls.pattern.match(line)
        if not match_obj:
            return False
        prepend, leader, lang = match_obj.groups()
        if leader[0] in lang or leader[0] in line[match_obj.end():]:
            return False
        cls._open_info = len(prepend), leader, lang
        return True

    @classmethod
    def read(cls, lines):
        next(lines)
        line_buffer = []
        for line in lines:
            stripped_line = line.lstrip(' ')
            diff = len(line) - len(stripped_line)
            if (stripped_line.startswith(cls._open_info[1])
                    and len(stripped_line.split(maxsplit=1)) == 1
                    and diff < 4):
                break
            if diff > cls._open_info[0]:
                stripped_line = ' ' * (diff - cls._open_info[0]) + stripped_line
            line_buffer.append(stripped_line)
        return line_buffer, cls._open_info

List

class List(BlockToken):
    """
    List token.

    Attributes:
        children (list): a list of ListItem tokens.
        loose (bool): whether the list is loose.
        start (NoneType or int): None if unordered, starting number if ordered.
    """
    pattern = re.compile(r' {0,3}(?:\d{0,9}[.)]|[+\-*])(?:[ \t]*$|[ \t]+)')

    def __init__(self, matches):
        self.children = [ListItem(*match) for match in matches]
        self.loose = any(item.loose for item in self.children)
        leader = self.children[0].leader
        self.start = None
        if len(leader) != 1:
            self.start = int(leader[:-1])

    @classmethod
    def start(cls, line):
        return cls.pattern.match(line)

    @classmethod
    def read(cls, lines):
        leader = None
        next_marker = None
        matches = []
        while True:
            output, next_marker = ListItem.read(lines, next_marker)
            item_leader = output[2]
            if leader is None:
                leader = item_leader
            elif not cls.same_marker_type(leader, item_leader):
                lines.reset()
                break
            matches.append(output)
            if next_marker is None:
                break

        if matches:
            # Only consider the last list item loose if there's more than one element
            last_parse_buffer = matches[-1][0]
            last_parse_buffer.loose = len(last_parse_buffer) > 1 and last_parse_buffer.loose

        return matches

    @staticmethod
    def same_marker_type(leader, other):
        if len(leader) == 1:
            return leader == other
        return leader[:-1].isdigit() and other[:-1].isdigit() and leader[-1] == other[-1]
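
The leader string (for example "-", "*", "1." or "2)") drives two decisions in List: whether the next item still belongs to the same list, and what the list's start number is. The sketch below pulls both rules out as plain functions so they can be tried directly; list_start is a hypothetical name for the logic at the end of List.__init__.

```python
def same_marker_type(leader, other):
    """True if two list markers belong to the same list (copied from List)."""
    if len(leader) == 1:
        # Bullet markers must be the identical character.
        return leader == other
    # Ordered markers may differ in number but must share the delimiter.
    return leader[:-1].isdigit() and other[:-1].isdigit() and leader[-1] == other[-1]


def list_start(leader):
    """Return None for a bullet list, or the starting number of an ordered list.

    Hypothetical helper mirroring how List.__init__ sets self.start.
    """
    if len(leader) != 1:
        return int(leader[:-1])
    return None
```

When same_marker_type fails inside List.read, the line iterator is reset so that the differing item starts a new list instead of being swallowed by the current one.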

ListItem

class ListItem(BlockToken):
    """
    List items. Not included in the parsing process, but called by List.
    """
    pattern = re.compile(r'\s*(\d{0,9}[.)]|[+\-*])(\s*$|\s+)')

    def __init__(self, parse_buffer, prepend, leader):
        self.leader = leader
        self.prepend = prepend
        self.children = tokenizer.make_tokens(parse_buffer)
        self.loose = parse_buffer.loose

    @staticmethod
    def in_continuation(line, prepend):
        return line.strip() == '' or len(line) - len(line.lstrip()) >= prepend

    @staticmethod
    def other_token(line):
        return (Heading.start(line)
                or Quote.start(line)
                or CodeFence.start(line)
                or ThematicBreak.start(line))

    @classmethod
    def parse_marker(cls, line):
        """
        Returns a pair (prepend, leader) iff the line has a valid leader.
        """
        match_obj = cls.pattern.match(line)
        if match_obj is None:
            return None  # no valid leader
        leader = match_obj.group(1)
        content = match_obj.group(0).replace(leader+'\t', leader+'   ', 1)
        # reassign prepend and leader
        prepend = len(content)
        if prepend == len(line.rstrip('\n')):
            prepend = match_obj.end(1) + 1
        else:
            spaces = match_obj.group(2)
            if spaces.startswith('\t'):
                spaces = spaces.replace('\t', '   ', 1)
            spaces = spaces.replace('\t', '    ')
            n_spaces = len(spaces)
            if n_spaces > 4:
                prepend = match_obj.end(1) + 1
        return prepend, leader

    @classmethod
    def read(cls, lines, prev_marker=None):
        next_marker = None
        lines.anchor()
        prepend = -1
        leader = None
        line_buffer = []

        # first line
        line = next(lines)
        prepend, leader = prev_marker if prev_marker else cls.parse_marker(line)
        line = line.replace(leader+'\t', leader+'   ', 1).replace('\t', '    ')
        empty_first_line = line[prepend:].strip() == ''
        if not empty_first_line:
            line_buffer.append(line[prepend:])
        next_line = lines.peek()
        if empty_first_line and next_line is not None and next_line.strip() == '':
            parse_buffer = tokenizer.tokenize_block([next(lines)], _token_types)
            next_line = lines.peek()
            if next_line is not None:
                marker_info = cls.parse_marker(next_line)
                if marker_info is not None:
                    next_marker = marker_info
            return (parse_buffer, prepend, leader), next_marker

        # loop
        newline = 0
        while True:
            # no more lines
            if next_line is None:
                # strip off newlines
                if newline:
                    lines.backstep()
                    del line_buffer[-newline:]
                break
            next_line = next_line.replace('\t', '    ')
            # not in continuation
            if not cls.in_continuation(next_line, prepend):
                # directly followed by another token
                if cls.other_token(next_line):
                    if newline:
                        lines.backstep()
                        del line_buffer[-newline:]
                    break
                # next_line is a new list item
                marker_info = cls.parse_marker(next_line)
                if marker_info is not None:
                    next_marker = marker_info
                    break
                # not another item, has newlines -> not continuation
                if newline:
                    lines.backstep()
                    del line_buffer[-newline:]
                    break
            next(lines)
            line = next_line
            stripped = line.lstrip(' ')
            diff = len(line) - len(stripped)
            if diff > prepend:
                stripped = ' ' * (diff - prepend) + stripped
            line_buffer.append(stripped)
            newline = newline + 1 if next_line.strip() == '' else 0
            next_line = lines.peek()

        # block-level tokens are parsed here, so that footnotes can be
        # recognized before span-level parsing.
        parse_buffer = tokenizer.tokenize_block(line_buffer, _token_types)
        return (parse_buffer, prepend, leader), next_marker

Table

class Table(BlockToken):
    """
    Table token.

    Attributes:
        has_header (bool): whether table has header row.
        column_align (list): align options for each column (default to [None]).
        children (list): inner tokens (TableRows).
    """
    def __init__(self, lines):
        if '---' in lines[1]:
            self.column_align = [self.parse_align(column)
                    for column in self.split_delimiter(lines[1])]
            self.header = TableRow(lines[0], self.column_align)
            self.children = [TableRow(line, self.column_align) for line in lines[2:]]
        else:
            self.column_align = [None]
            self.children = [TableRow(line) for line in lines]

    @staticmethod
    def split_delimiter(delimiter):
        """
        Helper function; returns a list of align options.

        Args:
            delimiter (str): e.g.: "| :--- | :---: | ---: |\n"

        Returns:
            a list of align options (None, 0 or 1).
        """
        return re.findall(r':?---+:?', delimiter)

    @staticmethod
    def parse_align(column):
        """
        Helper function; returns align option from cell content.

        Returns:
            None if align = left;
            0    if align = center;
            1    if align = right.
        """
        return (0 if column[0] == ':' else 1) if column[-1] == ':' else None

    @staticmethod
    def start(line):
        return '|' in line

    @staticmethod
    def read(lines):
        lines.anchor()
        line_buffer = [next(lines)]
        while lines.peek() is not None and '|' in lines.peek():
            line_buffer.append(next(lines))
        if len(line_buffer) < 2 or '---' not in line_buffer[1]:
            lines.reset()
            return None
        return line_buffer
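
To see how a delimiter row turns into the column_align list, the two static helpers can be run on their own. The sketch below copies their bodies from the Table class above; column_alignments is a hypothetical wrapper that chains them the way Table.__init__ does.

```python
import re


def split_delimiter(delimiter):
    """Return the raw column specs (such as ':---:') found in a delimiter row."""
    return re.findall(r':?---+:?', delimiter)


def parse_align(column):
    """Map one column spec to None (left), 0 (center), or 1 (right)."""
    return (0 if column[0] == ':' else 1) if column[-1] == ':' else None


def column_alignments(delimiter):
    """Hypothetical wrapper: one align option per column of the delimiter row."""
    return [parse_align(column) for column in split_delimiter(delimiter)]
```

Note that a leading colon alone (":---") still yields None, because only a trailing colon switches the column away from the default left alignment.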

TableRow

class TableRow(BlockToken):
    """
    Table row token.

    Should only be called by Table.__init__().
    """
    def __init__(self, line, row_align=None):
        self.row_align = row_align or [None]
        cells = filter(None, line.strip().split('|'))
        self.children = [TableCell(cell.strip() if cell else '', align)
                         for cell, align in zip_longest(cells, self.row_align)]


class TableCell(BlockToken):
    """
    Table cell token.
    Boundary between span-level and block-level tokens.

    Should only be called by TableRow.__init__().

    Attributes:
        align (bool): align option for current cell (default to None).
        children (list): inner (span-)tokens.
    """
    def __init__(self, content, align=None):
        self.align = align
        super().__init__(content, span_token.tokenize_inner)

Footnote

class Footnote(BlockToken):
    """
    Footnote token.

    The constructor returns None, because the footnote information
    is stored in Footnote.read.
    """
    label_pattern = re.compile(r'[ \n]{0,3}\[(.+?)\]', re.DOTALL)

    def __new__(cls, _):
        return None

    @classmethod
    def start(cls, line):
        return line.lstrip().startswith('[')

    @classmethod
    def read(cls, lines):
        line_buffer = []
        next_line = lines.peek()
        while next_line is not None and next_line.strip() != '':
            line_buffer.append(next(lines))
            next_line = lines.peek()
        string = ''.join(line_buffer)
        offset = 0
        matches = []
        while offset < len(string) - 1:
            match_info = cls.match_reference(lines, string, offset)
            if match_info is None:
                break
            offset, match = match_info
            matches.append(match)
        cls.append_footnotes(matches, _root_node)
        return matches or None

    @classmethod
    def match_reference(cls, lines, string, offset):
        match_info = cls.match_link_label(string, offset)
        if not match_info:
            cls.backtrack(lines, string, offset)
            return None
        _, label_end, label = match_info

        if not follows(string, label_end-1, ':'):
            cls.backtrack(lines, string, offset)
            return None

        match_info = cls.match_link_dest(string, label_end)
        if not match_info:
            cls.backtrack(lines, string, offset)
            return None
        _, dest_end, dest = match_info

        match_info = cls.match_link_title(string, dest_end)
        if not match_info:
            cls.backtrack(lines, string, dest_end)
            return None
        _, title_end, title = match_info

        return title_end, (label, dest, title)

    @classmethod
    def match_link_label(cls, string, offset):
        start = -1
        end = -1
        escaped = False
        for i, c in enumerate(string[offset:], start=offset):
            if c == '\\' and not escaped:
                escaped = True
            elif c == '[' and not escaped:
                if start == -1:
                    start = i
                else:
                    return None
            elif c == ']' and not escaped:
                end = i
                label = string[start+1:end]
                if label.strip() != '':
                    return start, end+1, label
                return None
            elif escaped:
                escaped = False
        return None

    @classmethod
    def match_link_dest(cls, string, offset):
        offset = shift_whitespace(string, offset+1)
        if offset == len(string):
            return None
        if string[offset] == '<':
            escaped = False
            for i, c in enumerate(string[offset+1:], start=offset+1):
                if c == '\\' and not escaped:
                    escaped = True
                elif c == ' ' or c == '\n' or (c == '<' and not escaped):
                    return None
                elif c == '>' and not escaped:
                    return offset, i+1, string[offset+1:i]
                elif escaped:
                    escaped = False
            return None
        else:
            escaped = False
            count = 0
            for i, c in enumerate(string[offset:], start=offset):
                if c == '\\' and not escaped:
                    escaped = True
                elif c in whitespace:
                    break
                elif not escaped:
                    if c == '(':
                        count += 1
                    elif c == ')':
                        count -= 1
                elif is_control_char(c):
                    return None
                elif escaped:
                    escaped = False
            if count != 0:
                return None
            return offset, i, string[offset:i]

    @classmethod
    def match_link_title(cls, string, offset):
        new_offset = shift_whitespace(string, offset)
        if (new_offset == len(string)
                or '\n' in string[offset:new_offset]
                and string[new_offset] == '['):
            return offset, new_offset, ''
        if string[new_offset] == '"':
            closing = '"'
        elif string[new_offset] == "'":
            closing = "'"
        elif string[new_offset] == '(':
            closing = ')'
        elif '\n' in string[offset:new_offset]:
            return offset, offset, ''
        else:
            return None
        offset = new_offset
        escaped = False
        for i, c in enumerate(string[offset+1:], start=offset+1):
            if c == '\\' and not escaped:
                escaped = True
            elif c == closing and not escaped:
                new_offset = shift_whitespace(string, i+1)
                if '\n' not in string[i+1:new_offset]:
                    return None
                return offset, new_offset, string[offset+1:i]
            elif escaped:
                escaped = False
        return None

    @staticmethod
    def append_footnotes(matches, root):
        for key, dest, title in matches:
            key = normalize_label(key)
            dest = span_token.EscapeSequence.strip(dest.strip())
            title = span_token.EscapeSequence.strip(title)
            if key not in root.footnotes:
                root.footnotes[key] = dest, title

    @staticmethod
    def backtrack(lines, string, offset):
        lines._index -= string[offset+1:].count('\n')

ThematicBreak

class ThematicBreak(BlockToken):
    """
    Thematic break token (a.k.a. horizontal rule.)
    """
    pattern = re.compile(r' {0,3}(?:([-_*])\s*?)(?:\1\s*?){2,}$')

    def __init__(self, _):
        pass

    @classmethod
    def start(cls, line):
        return cls.pattern.match(line)

    @staticmethod
    def read(lines):
        return [next(lines)]
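
The ThematicBreak pattern relies on a backreference so that all three or more marker characters must be the same. A small sketch, using the regular expression from the class above, makes the accepted and rejected forms easy to check; is_thematic_break is a hypothetical helper name.

```python
import re

# Pattern copied from the ThematicBreak class shown above.
THEMATIC_BREAK = re.compile(r' {0,3}(?:([-_*])\s*?)(?:\1\s*?){2,}$')


def is_thematic_break(line):
    """True if the line is a horizontal rule such as '---' or '* * *'."""
    return THEMATIC_BREAK.match(line) is not None
```

The first marker is captured as group 1, and \1 then forces at least two more copies of that same character, optionally separated by whitespace, up to the end of the line.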

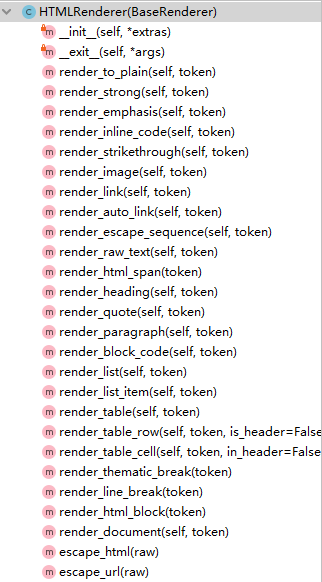

HTMLRenderer

HTMLRenderer 类的方法如下。每个方法对应与 document 中的每一种类型。比如 document 中的 Heading 对应 HTMLRender 中的 方法 render_heading()

render

render looks up the class name of the input token and calls the matching method to process that token.

def render(self, token):
    """
    Grabs the class name from input token and finds its corresponding
    render function.

    Basically a janky way to do polymorphism.

    Arguments:
        token: whose __class__.__name__ is in self.render_map.
    """
    return self.render_map[token.__class__.__name__](token)

The contents of render_map are listed below; the expression render_map[token.__class__.__name__](token) calls the method registered for the token's class.

self.render_map = {
    'Strong':         self.render_strong,
    'Emphasis':       self.render_emphasis,
    'InlineCode':     self.render_inline_code,
    'RawText':        self.render_raw_text,
    'Strikethrough':  self.render_strikethrough,
    'Image':          self.render_image,
    'Link':           self.render_link,
    'AutoLink':       self.render_auto_link,
    'EscapeSequence': self.render_escape_sequence,
    'Heading':        self.render_heading,
    'SetextHeading':  self.render_heading,
    'Quote':          self.render_quote,
    'Paragraph':      self.render_paragraph,
    'CodeFence':      self.render_block_code,
    'BlockCode':      self.render_block_code,
    'List':           self.render_list,
    'ListItem':       self.render_list_item,
    'Table':          self.render_table,
    'TableRow':       self.render_table_row,
    'TableCell':      self.render_table_cell,
    'ThematicBreak':  self.render_thematic_break,
    'LineBreak':      self.render_line_break,
    'Document':       self.render_document,
}
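
The dispatch mechanism is small enough to reproduce with toy classes. The following sketch is not mistletoe code: RawText, LineBreak, and ToyRenderer are stand-ins defined here only to show how a dictionary keyed by class name selects the render method.

```python
class RawText:
    """Toy stand-in for a span token that only carries text."""
    def __init__(self, content):
        self.content = content


class LineBreak:
    """Toy stand-in for a token with no payload."""


class ToyRenderer:
    """Hypothetical renderer that dispatches on the token's class name."""
    def __init__(self):
        # Keys are class names, values are bound methods.
        self.render_map = {
            'RawText': self.render_raw_text,
            'LineBreak': self.render_line_break,
        }

    def render(self, token):
        # Same lookup expression as in the render method shown earlier.
        return self.render_map[token.__class__.__name__](token)

    def render_raw_text(self, token):
        return token.content

    def render_line_break(self, token):
        return '<br />\n'
```

One consequence of looking up by name rather than by isinstance is that a token class missing from the map, including a subclass with a new name, raises KeyError until it is registered.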

render_document

render_document 处理 document 传入的抽象语法树。其功能如下:

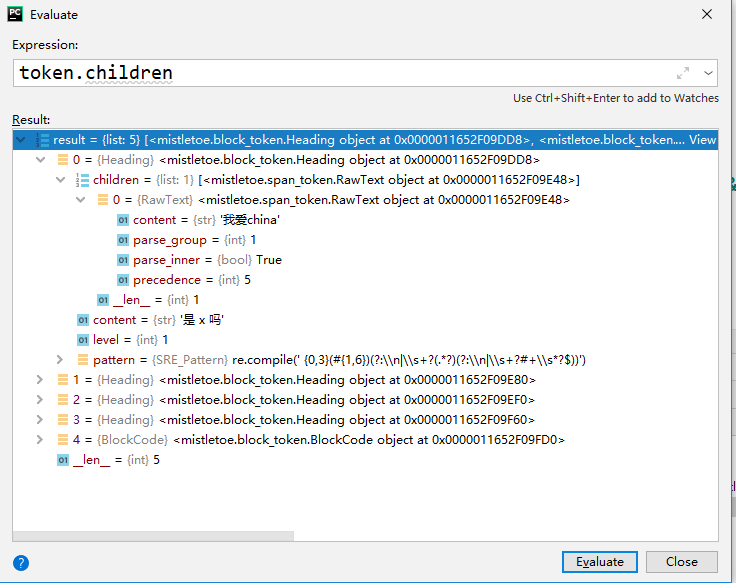

- 遍历 document 中的 children (list 结构),并交由 render()处理。children 的结构如下:

- 用

\n连接选然后的文本。

def render_document(self, token):
    self.footnotes.update(token.footnotes)
    inner = '\n'.join([self.render(child) for child in token.children])
    return '{}\n'.format(inner) if inner else ''

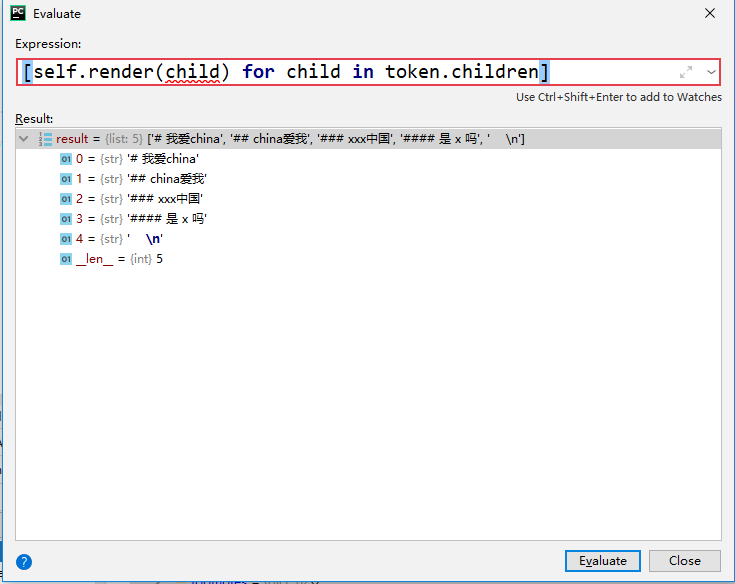

其中,[self.render(child) for child in token.children] 结果如下:

render_heading

render_heading() takes the level field and the rendered content of the heading token in the AST and renders them as <h{level}>{inner}</h{level}>.

def render_heading(self, token):
    template = '<h{level}>{inner}</h{level}>'
    inner = self.render_inner(token)
    return template.format(level=token.level, inner=inner)

render_inner

Recursively renders the child tokens and concatenates the results directly, with no separator.

def render_inner(self, token):
    """
    Recursively renders child tokens. Joins the rendered
    strings with no space in between.

    If newlines / spaces are needed between tokens, add them
    in their respective templates, or override this function
    in the renderer subclass, so that whitespace won't seem to
    appear magically for anyone reading your program.

    Arguments:
        token: a branch node who has children attribute.
    """
    return ''.join(map(self.render, token.children))
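
Putting render_document, render_heading, and render_inner together, the sketch below walks a tiny hand-built tree. All classes here (RawText, Emphasis, Heading, Document, TreeRenderer) are toy stand-ins written for this example, not mistletoe's own; the method bodies follow the excerpts above, minus footnote handling and HTML escaping.

```python
class RawText:
    def __init__(self, content):
        self.content = content


class Emphasis:
    def __init__(self, children):
        self.children = children


class Heading:
    def __init__(self, level, children):
        self.level = level
        self.children = children


class Document:
    def __init__(self, children):
        self.children = children


class TreeRenderer:
    """Hypothetical renderer showing the recursion through children."""
    def __init__(self):
        self.render_map = {
            'RawText': self.render_raw_text,
            'Emphasis': self.render_emphasis,
            'Heading': self.render_heading,
            'Document': self.render_document,
        }

    def render(self, token):
        return self.render_map[token.__class__.__name__](token)

    def render_inner(self, token):
        # Children are rendered recursively and joined with no separator.
        return ''.join(map(self.render, token.children))

    def render_raw_text(self, token):
        return token.content

    def render_emphasis(self, token):
        return '<em>{}</em>'.format(self.render_inner(token))

    def render_heading(self, token):
        template = '<h{level}>{inner}</h{level}>'
        return template.format(level=token.level, inner=self.render_inner(token))

    def render_document(self, token):
        # Top-level blocks are joined with newlines, plus a trailing newline.
        inner = '\n'.join([self.render(child) for child in token.children])
        return '{}\n'.format(inner) if inner else ''


tree = Document([
    Heading(1, [RawText('Hi '), Emphasis([RawText('there')])]),
    Heading(2, [RawText('Sub')]),
])
output = TreeRenderer().render(tree)
```

Block-level children are separated by newlines in render_document, while span-level children inside a heading are glued together by render_inner, so any whitespace between spans has to come from the tokens themselves.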

render_raw_text

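Converts a raw text string from the Markdown source into HTML-safe content by escaping characters that are special in HTML, such as &, < and >.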

def render_raw_text(self, token):
    return self.escape_html(token.content)

escape_html

@staticmethod
def escape_html(raw):
    return html.escape(html.unescape(raw)).replace('&#x27;', "'")

escape

# html.escape from the Python standard library
def escape(s, quote=True):
    """
    Replace special characters "&", "<" and ">" to HTML-safe sequences.
    If the optional flag quote is true (the default), the quotation mark
    characters, both double quote (") and single quote (') characters are also
    translated.
    """
    s = s.replace("&", "&amp;")  # Must be done first!
    s = s.replace("<", "&lt;")
    s = s.replace(">", "&gt;")
    if quote:
        s = s.replace('"', "&quot;")
        s = s.replace('\'', "&#x27;")
    return s
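
The combination used by escape_html can be tried with nothing but the standard library. The sketch below is a standalone copy of that one-liner, so the effect of the unescape, escape, and replace steps can be checked on a few inputs.

```python
import html


def escape_html(raw):
    """Standalone copy of the escape_html static method shown above.

    html.unescape first decodes any entities already present, so text
    such as '&amp;' is not escaped twice; html.escape then encodes
    &, <, >, and both quote characters; finally the single quote is
    restored from its '&#x27;' form.
    """
    return html.escape(html.unescape(raw)).replace('&#x27;', "'")


already_escaped = escape_html('a < b &amp; c')
with_apostrophe = escape_html("it's")
```

Whitespace such as spaces and tabs passes through unchanged; only the characters handled by html.escape are rewritten.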


If you repost this article, please keep the source: https://bianchenghao.cn/63276.html