Installation

1, Install Java 8

mkdir /usr/java && tar -zxvf jdk-8u121-linux-x64.tar.gz && mv jdk1.8.0_121 /usr/java/java8

export JAVA_HOME=/usr/java/java8

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar

export PATH=$PATH:$JAVA_HOME/bin

update-alternatives --install /usr/bin/java java /usr/java/java8/bin/java 1100

update-alternatives --install /usr/bin/javac javac /usr/java/java8/bin/javac 1100

update-alternatives --config java

update-alternatives --config javac

java -version
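When several JDKs are installed, the wrong one can easily end up on the PATH. Below is a minimal Python sketch that runs `java -version` and extracts the major version. It handles both the old `1.8.0_121` numbering and the newer `11.0.x` numbering. The helper names are hypothetical and not part of any tool.

```python
import re
import subprocess


def java_major_version(version_output: str) -> int:
    """Extract the Java major version from `java -version` output."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found")
    parts = match.group(1).split(".")
    if parts[0] == "1":  # legacy scheme: 1.<major>.<minor>_<update>, e.g. 1.8.0_121
        return int(parts[1])
    # modern scheme: <major>.<minor>.<patch>, possibly with a suffix like "17-ea"
    return int(re.match(r"\d+", parts[0]).group())


def installed_java_major() -> int:
    # `java -version` writes its banner to stderr, not stdout
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr)
```

After the `update-alternatives --config` steps above, `installed_java_major()` should return 8.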

2, Install Elasticsearch

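ES 7.x is built on Lucene 8.x. ES 5 requires JDK 8 or later. ES 6 also runs on JDK 8, with JDK 11 supported from 6.5 on. ES 7 ships with a bundled JDK (JDK 12 in 7.0).

ES 5.6.x corresponds to elasticsearch-php 5.6.x, which in turn corresponds to PHP 5.6.x.

Starting with ES 6, an index can hold only one type and _all is disabled. Starting with ES 7, mapping types are removed.

Starting with ES 6, _parent is removed (it is replaced by the join field type).

Before ES 6, _source_include and _source_exclude control which _source fields a search returns.

From ES 6 on, they are named _source_includes and _source_excludes (note the extra s).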
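The following Python helper picks the parameter name from the ES major version, following the rule above. It is illustrative only; the name `source_filter_query` is hypothetical, and the field names in the usage line are examples.

```python
from urllib.parse import urlencode


def source_filter_query(es_major: int, includes=(), excludes=()) -> str:
    """Build the _source filtering part of a search query string.

    Before ES 6: _source_include / _source_exclude.
    ES 6 and later: _source_includes / _source_excludes.
    """
    suffix = "s" if es_major >= 6 else ""
    params = {}
    if includes:
        params["_source_include" + suffix] = ",".join(includes)
    if excludes:
        params["_source_exclude" + suffix] = ",".join(excludes)
    return urlencode(params)  # commas are percent-encoded as %2C
```

For example, `"hotel/_search?" + source_filter_query(7, ["name_cn", "location"])` builds a 7.x-style URL.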

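You can also install the latest release from Elastic's APT repository:

java -version # check the JDK; if it is missing, run apt install default-jdk (skip this if Java was installed above)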

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo sh -c 'echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" > /etc/apt/sources.list.d/elastic-7.x.list'

apt update

apt install elasticsearch

systemctl enable elasticsearch.service --now

journalctl -u elasticsearch

Alternatively, download the .deb package and install it directly:

dpkg -i elasticsearch-5.6.16.deb # installs version 5.6.16

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.9.0-amd64.deb

dpkg -i elasticsearch-7.9.0-amd64.deb # installs version 7.9.0

Testing

curl -X GET "localhost:9200"

curl -X GET "localhost:9200/_cat/nodes?v" # _cat shows various ES status information; v means verbose (prints the column headers)

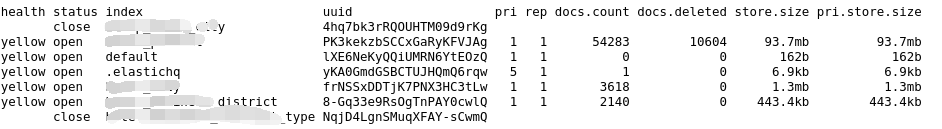
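The same check can be done from Python. In this minimal sketch, `es_version` pulls `version.number` out of the JSON that `GET /` returns. `fetch_es_version` is a hypothetical helper that assumes a node listening on localhost:9200.

```python
import json
from urllib.request import urlopen


def es_version(root_body: str) -> str:
    """Return version.number from the JSON body of GET / on a node."""
    return json.loads(root_body)["version"]["number"]


def fetch_es_version(base_url: str = "http://localhost:9200") -> str:
    # Equivalent of: curl -X GET "localhost:9200" (needs a running node)
    with urlopen(base_url, timeout=5) as resp:
        return es_version(resp.read().decode("utf-8"))
```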

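http://localhost:9200/_cat/indices?v lists all indices. The columns are:

Column 1 (health): health status, one of green, yellow, red

Column 2 (status): open or close

Column 3 (index): the index name

Column 4 (uuid): the index UUID

Column 5 (pri): number of primary shards

Column 6 (rep): number of replica shards

Column 7 (docs.count): number of documents, similar to the row count of a MySQL table

Column 8 (docs.deleted): number of deleted documents

Column 9 (store.size): storage size of all shards (primaries plus replicas)

Column 10 (pri.store.size): storage size of the primary shards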
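This rough Python sketch turns the `_cat/indices?v` table into dictionaries keyed by the header row. The helper is hypothetical. It splits on whitespace, so rows with empty cells (closed indices, for instance) will not line up. Requesting `_cat/indices?format=json` avoids that problem.

```python
from typing import Dict, List


def parse_cat_indices(text: str) -> List[Dict[str, str]]:
    """Parse the output of GET _cat/indices?v into one dict per index.

    The first non-empty line is the header row (health, status, index, ...);
    columns are separated by runs of spaces.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    headers = lines[0].split()
    return [dict(zip(headers, line.split())) for line in lines[1:]]
```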

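Configuration

Data is stored in /var/lib/elasticsearch, the configuration files are in /etc/elasticsearch, and the ES binaries are in /usr/share/elasticsearch.

1, Allow ES to accept external connections. Binding to a non-loopback address puts ES into production mode, which enforces the bootstrap checks covered under troubleshooting below.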

vim /etc/elasticsearch/elasticsearch.yml

network.host: 0.0.0.0

http.port: 9200

systemctl enable elasticsearch.service

systemctl start elasticsearch

systemctl restart elasticsearch

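2, Configure the JVM heap in /etc/elasticsearch/jvm.options. Keep the heap of a single node at or below about 31 GB, because above roughly 32 GB the JVM can no longer use compressed object pointers. If the machine has more than 32 GB of RAM, consider running several ES node instances on it. Set -Xms and -Xmx to the same value, with no space between the flag and the size:

-Xms8g

-Xmx8g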
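Here is the sizing rule above as a Python sketch. The 31 GB cap comes from the text. Capping the heap at half of physical RAM, so the rest stays available to the OS file cache, is Elastic's general guidance and an added assumption here. The function names are hypothetical.

```python
def recommended_heap_gb(ram_gb: int, cap_gb: int = 31) -> int:
    """Half of RAM (assumed guideline), never above the ~31 GB
    compressed-oops cap, and at least 1 GB."""
    return max(1, min(ram_gb // 2, cap_gb))


def jvm_heap_options(ram_gb: int) -> list:
    heap = recommended_heap_gb(ram_gb)
    # Equal -Xms and -Xmx, so the heap is never resized at runtime
    return [f"-Xms{heap}g", f"-Xmx{heap}g"]
```

On a 16 GB machine this yields the `-Xms8g` / `-Xmx8g` pair used above.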

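3, Troubleshooting

i, Check the log first (journalctl -u elasticsearch, or the files under /var/log/elasticsearch/). A common cause is incompatible data left behind by an older ES install. Deleting /var/lib/elasticsearch/nodes/0/ fixes it, but note that this wipes all data on the node.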

ii, max number of threads [1024] for user [elasticsearch] is too low, increase to at least

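vim /etc/security/limits.conf

elasticsearch soft nproc 4096

elasticsearch hard nproc 4096

The first column is the user the limit applies to; * means all users. When ES runs as a systemd service, limits.conf is not applied, and the service's LimitNPROC setting is used instead.

On CentOS, also edit /etc/security/limits.d/90-nproc.conf and change the line to: * soft nproc 4096

Check the result with ulimit -Hu (hard limit) and ulimit -Su (soft limit).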
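To check the limit from a script, here is a Python sketch using the standard `resource` module (Unix only). It reports the limits of the process that runs it, so run it as the elasticsearch user. `nproc_ok` and `current_nproc_ok` are hypothetical names.

```python
import resource


def nproc_ok(soft: int, hard: int, minimum: int = 4096) -> bool:
    """True if both limits allow at least `minimum` processes/threads.
    RLIM_INFINITY (unlimited) always passes."""
    def enough(limit: int) -> bool:
        return limit == resource.RLIM_INFINITY or limit >= minimum
    return enough(soft) and enough(hard)


def current_nproc_ok(minimum: int = 4096) -> bool:
    # Same values that `ulimit -Su` / `ulimit -Hu` show for this process
    soft, hard = resource.getrlimit(resource.RLIMIT_NPROC)
    return nproc_ok(soft, hard, minimum)
```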

iii, seccomp unavailable: CONFIG_SECCOMP not compiled into kernel, CONFIG_SECCOMP and CONFIG_SECCOMP_FILTER are needed

vim /etc/elasticsearch/elasticsearch.yml

and append:

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

iv, max virtual memory areas vm.max_map_count [65530] likely too low, increase to at least [262144]

vim /etc/sysctl.conf

Add

vm.max_map_count=655360

Then run sysctl -p to apply it.
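Here is a minimal Python check, with hypothetical helper names. It reads the live value from /proc/sys/vm/max_map_count, which is the value `sysctl vm.max_map_count` shows, and compares it with the 262144 minimum named in the error message.

```python
from pathlib import Path

REQUIRED_MAX_MAP_COUNT = 262144  # minimum from the bootstrap-check error


def max_map_count_ok(value: str, required: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    return int(value.strip()) >= required


def check_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> bool:
    # Reads the current kernel setting (Linux only)
    return max_map_count_ok(Path(path).read_text())
```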

v, org.elasticsearch.transport.RemoteTransportException: Failed to deserialize exception response from stream

Make sure all ES nodes run the same JDK version.

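Analysis (tokenizers)

You can set the analyzer when creating an index. Alternatively, first create a dynamic template that sets the analyzer.

IK analyzer

Installation (the plugin version must match the ES version exactly; restart ES after installing it)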

7.9 ES

i, /usr/share/elasticsearch/bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.9.0/elasticsearch-analysis-ik-7.9.0.zip

5.6 ES

i, /usr/share/elasticsearch/bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v5.6.16/elasticsearch-analysis-ik-5.6.16.zip

Or download the zip and install it by hand:

i, https://github.com/medcl/elasticsearch-analysis-ik/releases

ii, cd /usr/share/elasticsearch/plugins/ && mkdir ik

iii, unzip plugin to /usr/share/elasticsearch/plugins/ik
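Because a plugin only loads on the exact ES version it was built for, it can help to check the zip before unpacking it. This Python sketch assumes the release zip carries plugin-descriptor.properties at its top level, which is why it can be unzipped straight into plugins/ik. The helper names are hypothetical.

```python
import zipfile


def descriptor_es_version(descriptor_text: str) -> str:
    """Return elasticsearch.version from plugin-descriptor.properties text."""
    for line in descriptor_text.splitlines():
        line = line.strip()
        if line.startswith("#") or "=" not in line:
            continue  # skip comments and blank lines
        key, value = line.split("=", 1)
        if key.strip() == "elasticsearch.version":
            return value.strip()
    raise ValueError("elasticsearch.version not found")


def plugin_matches(zip_path: str, es_version: str) -> bool:
    # Assumption: the descriptor is at the zip's top level (check with `unzip -l`)
    with zipfile.ZipFile(zip_path) as zf:
        text = zf.read("plugin-descriptor.properties").decode("utf-8")
    return descriptor_es_version(text) == es_version
```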

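ik_max_word: splits text at the finest granularity. For example, "中华人民共和国国歌" becomes "中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民, 人, 民, 共和国, 共和, 和, 国国, 国歌". It exhausts the possible combinations and suits term queries.

ik_smart: splits text at the coarsest granularity. For example, "中华人民共和国国歌" becomes "中华人民共和国, 国歌". It suits phrase queries.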

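Testing

In ES 5.x, the analyzer and text can be passed as URL parameters (for example in a browser):

localhost:9200/_analyze?analyzer=ik_smart&text=王者农药

localhost:9200/_analyze?analyzer=ik_max_word&text=中华人民共和国

From ES 6 on, _analyze no longer accepts these URL parameters. Send them in a JSON request body instead:

    GET /_analyze
    {"analyzer": "ik_max_word", "text": "中华人民共和国"}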
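From Python, the body form of `_analyze` can be sent using only the standard library. This sketch assumes a local node with the IK plugin installed. `analyze_request` and `analyze` are hypothetical names.

```python
import json
from urllib.request import Request, urlopen


def analyze_request(text: str, analyzer: str = "ik_smart",
                    base_url: str = "http://localhost:9200") -> Request:
    """Build a POST /_analyze request with a JSON body (ES 6+ style)."""
    body = json.dumps({"analyzer": analyzer, "text": text}, ensure_ascii=False)
    return Request(f"{base_url}/_analyze", data=body.encode("utf-8"),
                   headers={"Content-Type": "application/json"}, method="POST")


def analyze(text: str, analyzer: str = "ik_smart") -> list:
    # Returns just the token strings from the response's "tokens" list
    with urlopen(analyze_request(text, analyzer), timeout=5) as resp:
        return [t["token"] for t in json.load(resp)["tokens"]]
```

Running `analyze("中华人民共和国国歌", "ik_max_word")` against a live node lets you compare the two IK analyzers side by side.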

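Custom IK dictionary

vim /etc/elasticsearch/analysis-ik/IKAnalyzer.cfg.xml

Set the ext_dict entry. Fill in the existing empty entry rather than adding a second one:

<entry key="ext_dict">custom/new.txt</entry>

In the same directory (/etc/elasticsearch/analysis-ik/), create the folder custom and, inside it, the file new.txt. Put one keyword per line and save the file as UTF-8.

Restart Elasticsearch: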

systemctl restart elasticsearch.service
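This Python sketch (hypothetical helper) writes such a dictionary file: UTF-8, one term per line, with blank lines and duplicates dropped. Point it at /etc/elasticsearch/analysis-ik/custom/new.txt. ES still needs a restart afterwards for IK to pick up the new terms.

```python
from pathlib import Path


def write_ik_dict(path: str, words) -> int:
    """Write an IK extension dictionary and return the number of terms.

    Terms are stripped; empty ones and duplicates are dropped while the
    order of first occurrence is kept.
    """
    terms = list(dict.fromkeys(w.strip() for w in words if w.strip()))
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)  # creates custom/ if missing
    target.write_text("\n".join(terms) + "\n", encoding="utf-8")
    return len(terms)
```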

Pinyin analyzer

Installation

i, https://github.com/medcl/elasticsearch-analysis-pinyin/releases

ii, cd /usr/share/elasticsearch/plugins/ && mkdir pinyin

iii, unzip plugin to /usr/share/elasticsearch/plugins/pinyin

Or install it directly:

7.9 ES

i, /usr/share/elasticsearch/bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-pinyin/releases/download/v7.9.0/elasticsearch-analysis-pinyin-7.9.0.zip

5.6 ES

i, /usr/share/elasticsearch/bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-pinyin/releases/download/v5.6.16/elasticsearch-analysis-pinyin-5.6.16.zip

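Configuration (>= v5.0.0)

Optional parameters

keep_first_letter

When enabled, keeps the joined first letters, e.g. 刘德华 > ldh. Default: true

keep_separate_first_letter

When enabled, keeps the first letters as separate tokens, e.g. 刘德华 > l,d,h. Default: false. Note: queries may become too fuzzy because such short terms are very frequent.

limit_first_letter_length

Sets the maximum length of the first_letter result. Default: 16

keep_full_pinyin

When enabled, e.g. 刘德华 > [liu,de,hua]. Default: true

keep_joined_full_pinyin

When enabled, e.g. 刘德华 > [liudehua]. Default: false

keep_none_chinese

Keeps non-Chinese letters and digits in the result. Default: true

keep_none_chinese_together

Keeps non-Chinese letters together. Default: true. For example, DJ音乐家 > DJ,yin,yue,jia; when set to false, DJ音乐家 > D,J,yin,yue,jia. Note: keep_none_chinese must be enabled first.

keep_none_chinese_in_first_letter

Keeps non-Chinese letters in the first-letter result, e.g. 刘德华AT2016 > ldhat2016. Default: true

keep_none_chinese_in_joined_full_pinyin

Keeps non-Chinese letters in the joined full pinyin, e.g. 刘德华2016 > liudehua2016. Default: false

none_chinese_pinyin_tokenize

Splits non-Chinese letters into separate pinyin terms if they are valid pinyin. Default: true. For example, liudehuaalibaba13zhuanghan > liu,de,hua,a,li,ba,ba,13,zhuang,han. Note: keep_none_chinese and keep_none_chinese_together must be enabled first.

keep_original

When enabled, also keeps the original input. Default: false

lowercase

Lowercases non-Chinese letters. Default: true

trim_whitespace

Default: true

remove_duplicated_term

When enabled, removes duplicated terms to save index space, e.g. de的 > de. Default: false. Note: position-related queries may be affected.

ignore_pinyin_offset (ES >= 6.0)

Since ES 6.0, offsets are strictly checked and overlapping tokens are not allowed. With this option enabled, overlapping tokens are allowed by ignoring offsets. Default: true. If you need offsets, set it to false.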
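This Python sketch catches misspelled option names before an index is created. `PINYIN_DEFAULTS` and `pinyin_filter` are hypothetical names. The sketch encodes the defaults listed above, rejects unknown options, enforces the keep_none_chinese prerequisite, and emits only the non-default settings. Options not listed above (such as padding_char) are unknown to it.

```python
# Defaults of the pinyin token filter, as listed above (>= v5.0.0)
PINYIN_DEFAULTS = {
    "keep_first_letter": True,
    "keep_separate_first_letter": False,
    "limit_first_letter_length": 16,
    "keep_full_pinyin": True,
    "keep_joined_full_pinyin": False,
    "keep_none_chinese": True,
    "keep_none_chinese_together": True,
    "keep_none_chinese_in_first_letter": True,
    "keep_none_chinese_in_joined_full_pinyin": False,
    "none_chinese_pinyin_tokenize": True,
    "keep_original": False,
    "lowercase": True,
    "trim_whitespace": True,
    "remove_duplicated_term": False,
    "ignore_pinyin_offset": True,
}


def pinyin_filter(**overrides) -> dict:
    """Return a pinyin filter definition containing only non-default options."""
    unknown = sorted(set(overrides) - set(PINYIN_DEFAULTS))
    if unknown:
        raise ValueError(f"unknown pinyin options: {unknown}")
    merged = {**PINYIN_DEFAULTS, **overrides}
    # Both options below require keep_none_chinese to be enabled
    dependents = ("keep_none_chinese_together", "none_chinese_pinyin_tokenize")
    if not merged["keep_none_chinese"] and any(overrides.get(k) for k in dependents):
        raise ValueError("keep_none_chinese must be enabled first")
    settings = {"type": "pinyin"}
    settings.update({k: v for k, v in overrides.items() if v != PINYIN_DEFAULTS[k]})
    return settings
```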

A complete index template with dynamic mappings (geo, pinyin, IK). The body below targets ES 7.x, so it has no mapping-type level (hotel_type) and no _all setting. ES 6 no longer allows enabling _all, and ES 7 removes types. The index patterns are given as a list of separate patterns (ES 5.x used a single "template" key instead of index_patterns). JSON does not allow comments, so the notes are listed here:

index_patterns: new indices whose names match hotel* or vhotel* pick up this template automatically

pinyin_full_filter: full pinyin, e.g. 拼音 > pinyin

pinyin_simple_filter: abbreviated (first-letter) pinyin, e.g. 拼音 > py

id_fields: fields matching *_id, *_num, *_type, *_star, *_facility that arrive as long are mapped as integer

float_fields: fields matching *_score_* that arrive as double are mapped as float

es_fields: *_es strings, Spanish analyzer

en_fields: *_en strings (English)

cn_fields: *_cn strings (Chinese), IK analyzer

date_fields: strings whose names end in date or time are mapped as date (the formats are joined with || and no surrounding spaces)

geo_fields: a string field named location is mapped as geo_point, used for distance calculations

properties: location is mapped explicitly as geo_point; name_cn, name_en and address_cn get an fpy (full pinyin) and an spy (abbreviated pinyin) sub-field

    PUT /_template/{template_name}
    {
      "order": 1,
      "index_patterns": ["hotel*", "vhotel*"],
      "settings": {
        "number_of_shards": 3,
        "number_of_replicas": 1,
        "refresh_interval": "5s",
        "analysis": {
          "filter": {
            "pinyin_full_filter": {
              "keep_joined_full_pinyin": "true",
              "lowercase": "true",
              "keep_original": "false",
              "keep_first_letter": "false",
              "keep_separate_first_letter": "false",
              "type": "pinyin",
              "keep_none_chinese": "false",
              "limit_first_letter_length": "50",
              "keep_full_pinyin": "true"
            },
            "pinyin_simple_filter": {
              "keep_joined_full_pinyin": "true",
              "lowercase": "true",
              "none_chinese_pinyin_tokenize": "false",
              "padding_char": " ",
              "keep_original": "true",
              "keep_first_letter": "true",
              "keep_separate_first_letter": "false",
              "type": "pinyin",
              "keep_full_pinyin": "false"
            }
          },
          "analyzer": {
            "pinyinFullIndexAnalyzer": {
              "filter": ["asciifolding", "lowercase", "pinyin_full_filter"],
              "type": "custom",
              "tokenizer": "ik_max_word"
            },
            "ik_pinyin_analyzer": {
              "filter": ["asciifolding", "lowercase", "pinyin_full_filter", "word_delimiter"],
              "type": "custom",
              "tokenizer": "ik_smart"
            },
            "ikIndexAnalyzer": {
              "filter": ["asciifolding", "lowercase"],
              "type": "custom",
              "tokenizer": "ik_max_word"
            },
            "pinyinSimpleIndexAnalyzer": {
              "type": "custom",
              "tokenizer": "ik_max_word",
              "filter": ["pinyin_simple_filter", "lowercase"]
            }
          }
        }
      },
      "mappings": {
        "_source": { "enabled": true },
        "dynamic": "true",
        "dynamic_templates": [
          {
            "id_fields": {
              "match_pattern": "regex",
              "match": "[a-z_]*(id){1}|[a-z]*(_num){1}|[a-z]*(_type){1}|[a-z]*(_star){1}|[a-z_]*(facility){1}",
              "match_mapping_type": "long",
              "mapping": {
                "type": "integer",
                "fields": { "raw": { "type": "integer" } }
              }
            }
          },
          {
            "float_fields": {
              "match_pattern": "regex",
              "match": "([a-z]*(_score_){1}[a-z]*)",
              "match_mapping_type": "double",
              "mapping": {
                "type": "float",
                "fields": { "keyword": { "type": "keyword" } }
              }
            }
          },
          {
            "es_fields": {
              "match": "*_es",
              "match_mapping_type": "string",
              "mapping": { "type": "text", "analyzer": "spanish" }
            }
          },
          {
            "en_fields": {
              "match": "*_en",
              "match_mapping_type": "string",
              "mapping": {
                "type": "text",
                "analyzer": "ik_max_word",
                "search_analyzer": "ik_max_word",
                "fields": { "raw": { "type": "keyword", "ignore_above": 256 } }
              }
            }
          },
          {
            "cn_fields": {
              "match": "*_cn",
              "match_mapping_type": "string",
              "mapping": {
                "type": "text",
                "analyzer": "ik_max_word",
                "search_analyzer": "ik_max_word",
                "fields": { "raw": { "type": "keyword", "ignore_above": 256 } }
              }
            }
          },
          {
            "date_fields": {
              "match_pattern": "regex",
              "match": "[a-z_]*(date){1}|[a-z_]*(time){1}",
              "match_mapping_type": "string",
              "mapping": {
                "type": "date",
                "format": "yyyy-MM-dd HH:mm:ss||yyyy-MM-dd HH:mm:ss.SSS||yyyy-MM-dd||epoch_millis||strict_date_optional_time"
              }
            }
          },
          {
            "geo_fields": {
              "match": "location",
              "match_mapping_type": "string",
              "mapping": { "type": "geo_point" }
            }
          }
        ],
        "properties": {
          "location": { "type": "geo_point" },
          "name_cn": {
            "type": "text",
            "fields": {
              "fpy": { "type": "text", "index": true, "analyzer": "pinyinFullIndexAnalyzer" },
              "spy": { "type": "text", "index": true, "analyzer": "pinyinSimpleIndexAnalyzer" }
            },
            "analyzer": "ikIndexAnalyzer"
          },
          "name_en": {
            "type": "text",
            "fields": {
              "fpy": { "type": "text", "index": true, "analyzer": "pinyinFullIndexAnalyzer" },
              "spy": { "type": "text", "index": true, "analyzer": "pinyinSimpleIndexAnalyzer" }
            },
            "analyzer": "ikIndexAnalyzer"
          },
          "address_cn": {
            "type": "text",
            "fields": {
              "fpy": { "type": "text", "index": true, "analyzer": "pinyinFullIndexAnalyzer" },
              "spy": { "type": "text", "index": true, "analyzer": "pinyinSimpleIndexAnalyzer" }
            },
            "analyzer": "ikIndexAnalyzer"
          }
        }
      }
    }
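ES evaluates dynamic_templates in order and applies the first one whose name pattern and detected JSON type both match. This only happens for fields that are not already mapped, so the fields under properties (location, name_cn, name_en, address_cn) keep their explicit mappings. The following Python approximation predicts which template a new field will hit. `re.fullmatch` stands in for the Java whole-name regex match, and simple patterns support only `*`. The names are hypothetical.

```python
import re

# (template name, pattern kind, pattern, match_mapping_type), in template order
DYNAMIC_TEMPLATES = [
    ("id_fields", "regex",
     r"[a-z_]*(id){1}|[a-z]*(_num){1}|[a-z]*(_type){1}|[a-z]*(_star){1}|[a-z_]*(facility){1}",
     "long"),
    ("float_fields", "regex", r"([a-z]*(_score_){1}[a-z]*)", "double"),
    ("es_fields", "simple", "*_es", "string"),
    ("en_fields", "simple", "*_en", "string"),
    ("cn_fields", "simple", "*_cn", "string"),
    ("date_fields", "regex", r"[a-z_]*(date){1}|[a-z_]*(time){1}", "string"),
    ("geo_fields", "simple", "location", "string"),
]


def _matches(kind: str, pattern: str, field: str) -> bool:
    if kind == "regex":
        return re.fullmatch(pattern, field) is not None
    # simple pattern: "*" matches any run of characters, everything else is literal
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.fullmatch(regex, field) is not None


def which_template(field: str, json_type: str):
    """Name of the first dynamic template that would apply, or None."""
    for name, kind, pattern, mapping_type in DYNAMIC_TEMPLATES:
        if mapping_type == json_type and _matches(kind, pattern, field):
            return name
    return None
```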

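After inserting data, run the query below. The query text can be 成都, 成, chengdu, or the abbreviation cd, because fpy is the full-pinyin sub-field and spy the abbreviated one. On ES 5.x/6.x the type may also appear in the path, as in /hotel/hotel_type/_search.

    GET http://localhost:9200/hotel/_search
    {
      "query": {
        "bool": {
          "must": [
            {
              "multi_match": {
                "query": "成",
                "fields": ["name_cn.fpy", "name_cn.spy"]
              }
            }
          ]
        }
      },
      "from": 0,
      "size": 20
    }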
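Here is the same query built and sent from Python. This is a sketch with hypothetical helper names, and it assumes the hotel index lives on a local node.

```python
import json
from urllib.request import Request, urlopen


def pinyin_search_body(text: str, field: str = "name_cn", size: int = 20, offset: int = 0) -> dict:
    """multi_match over the full-pinyin (fpy) and abbreviated (spy) sub-fields of `field`."""
    return {
        "query": {
            "bool": {
                "must": [
                    {"multi_match": {"query": text,
                                     "fields": [f"{field}.fpy", f"{field}.spy"]}}
                ]
            }
        },
        "from": offset,
        "size": size,
    }


def search(body: dict, index: str = "hotel", base_url: str = "http://localhost:9200") -> list:
    # _search accepts POST as well as GET; POST is the simplest way to send a body with urllib
    req = Request(f"{base_url}/{index}/_search",
                  data=json.dumps(body, ensure_ascii=False).encode("utf-8"),
                  headers={"Content-Type": "application/json"}, method="POST")
    with urlopen(req, timeout=5) as resp:
        return json.load(resp)["hits"]["hits"]
```

For example, `search(pinyin_search_body("cd"))` should return the same hits as searching for 成都.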


If you repost this article, please credit the source: https://bianchenghao.cn/65300.html