Simple usage of Hadoop's FileSplit

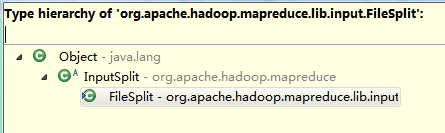

FileSplit class hierarchy:

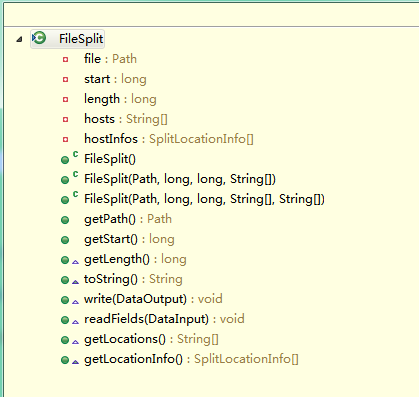

Fields and methods of the FileSplit class:

Job input:

```
hadoop@hadoop:/home/hadoop/blb$ hdfs dfs -text /user/hadoop/libin/input/inputpath1.txt
hadoop a
spark a
hive a
hbase a
tachyon a
storm a
redis a
hadoop@hadoop:/home/hadoop/blb$ hdfs dfs -text /user/hadoop/libin/input/inputpath2.txt
hadoop b
spark b
kafka b
tachyon b
oozie b
flume b
sqoop b
solr b
hadoop@hadoop:/home/hadoop/blb$
```
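Each input file is only a few dozen bytes. That is far below the HDFS block size, so `FileInputFormat` should turn each file into a single split that starts at byte 0.

The short JDK-only sketch below recomputes each file's byte count and line count from the listing. It assumes that every line, including the last one, ends with a single `\n`. The class and method names are hypothetical.

If that assumption holds:

- The byte counts are what the job later reports as the split `length`.
- The line counts are how many times `map()` runs per split, since `TextInputFormat` delivers one line per record.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: the two input files from the listing, assuming
// every line (including the last) is terminated by a single '\n'.
final class InputStats {
    static final String INPUT1 =
        "hadoop a\nspark a\nhive a\nhbase a\ntachyon a\nstorm a\nredis a\n";
    static final String INPUT2 =
        "hadoop b\nspark b\nkafka b\ntachyon b\noozie b\nflume b\nsqoop b\nsolr b\n";

    // Size in bytes as stored in the file (UTF-8), newlines included.
    static int byteLength(String content) {
        return content.getBytes(StandardCharsets.UTF_8).length;
    }

    // Number of '\n'-terminated lines, i.e. records for a line-oriented reader.
    static int lineCount(String content) {
        int n = 0;
        for (int i = 0; i < content.length(); i++) {
            if (content.charAt(i) == '\n') {
                n++;
            }
        }
        return n;
    }

    public static void main(String[] args) {
        System.out.println("inputpath1.txt: " + byteLength(INPUT1) + " bytes, " + lineCount(INPUT1) + " lines");
        System.out.println("inputpath2.txt: " + byteLength(INPUT2) + " bytes, " + lineCount(INPUT2) + " lines");
    }
}
```

Under the newline assumption this gives 58 bytes and 7 lines for `inputpath1.txt`, and 66 bytes and 8 lines for `inputpath2.txt`.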

Code:

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.SplitLocationInfo;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class GetSplitMapReduce {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: GetSplitMapReduce <inputpath> <outputpath>");
            System.exit(2);
        }

        Job job = Job.getInstance(conf, GetSplitMapReduce.class.getSimpleName() + "1");
        job.setJarByClass(GetSplitMapReduce.class);

        // Map-only job: the mapper emits (Text, NullWritable) and there are no reducers.
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(NullWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);
        job.setMapperClass(MyMapper1.class);
        job.setNumReduceTasks(0);

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    public static class MyMapper1 extends Mapper<LongWritable, Text, Text, NullWritable> {
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // With a file-based InputFormat such as TextInputFormat, the split is a FileSplit.
            FileSplit fileSplit = (FileSplit) context.getInputSplit();

            String pathname = fileSplit.getPath().getName();          // file name (last path component)
            int depth = fileSplit.getPath().depth();                  // number of elements in the path
            Class<? extends FileSplit> class1 = fileSplit.getClass(); // runtime class of the split
            long length = fileSplit.getLength();                      // number of bytes in this split
            SplitLocationInfo[] locationInfo = fileSplit.getLocationInfo(); // per-host location info; may be null
            String[] locations = fileSplit.getLocations();            // hosts holding the split's data
            long start = fileSplit.getStart();                        // position of the first byte in the file to process
            String string = fileSplit.toString();                     // "<path>:<start>+<length>"

            // map() is called once per input line, so this block is written once per record.
            // Arrays are concatenated via Object.toString() ("[Ljava.lang.String;@<hash>");
            // use java.util.Arrays.toString(...) to print their elements instead.
            context.write(new Text("===================================================================================="), NullWritable.get());
            context.write(new Text("pathname--" + pathname), NullWritable.get());
            context.write(new Text("depth--" + depth), NullWritable.get());
            context.write(new Text("class1--" + class1), NullWritable.get());
            context.write(new Text("length--" + length), NullWritable.get());
            context.write(new Text("locationInfo--" + locationInfo), NullWritable.get());
            context.write(new Text("locations--" + locations), NullWritable.get());
            context.write(new Text("start--" + start), NullWritable.get());
            context.write(new Text("string--" + string), NullWritable.get());
        }
    }
}
```
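Three of the printed values come straight from Hadoop methods:

- `pathname` comes from `Path.getName()`.
- `depth` comes from `Path.depth()`.
- `string` comes from `FileSplit.toString()`.

As a hedged illustration, the JDK-only sketch below re-derives these values from a `java.net.URI`. `SplitFields` and its methods are hypothetical stand-ins, not Hadoop API, and they assume an absolute path. The path `/user/hadoop/libin/input/inputpath2.txt` has five elements (`user`, `hadoop`, `libin`, `input`, `inputpath2.txt`), which is why the job prints `depth--5`.

```java
import java.net.URI;

// Hypothetical JDK-only stand-ins for Path.getName(), Path.depth()
// and FileSplit.toString(); they assume an absolute path like /a/b/c.
final class SplitFields {
    // Last path component, e.g. "inputpath2.txt".
    static String name(URI uri) {
        String path = uri.getPath();
        return path.substring(path.lastIndexOf('/') + 1);
    }

    // Number of path elements: one per '/' separator; the root "/" has depth 0.
    static int depth(URI uri) {
        String path = uri.getPath();
        if (path.equals("/")) {
            return 0;
        }
        int depth = 0;
        int slash = 0;
        while (slash != -1) {
            depth++;
            slash = path.indexOf('/', slash + 1);
        }
        return depth;
    }

    // Same "<path>:<start>+<length>" layout as the string-- line of the output.
    static String describe(URI uri, long start, long length) {
        return uri + ":" + start + "+" + length;
    }

    public static void main(String[] args) {
        URI file = URI.create("hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt");
        System.out.println(name(file));
        System.out.println(depth(file));
        System.out.println(describe(file, 0, 66));
    }
}
```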

Output for inputpath2.txt (one block per input line, 8 blocks in total):

```
hadoop@hadoop:/home/hadoop/blb$ hdfs dfs -text /user/hadoop/libin/out2/part-m-00000
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@4ff41ba0
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@2341ce62
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@35549603
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@4444ba4f
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@7c23bb8c
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@dee2400
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@d7d8325
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
====================================================================================
pathname--inputpath2.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--66
locationInfo--null
locations--[Ljava.lang.String;@2b2cf90e
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath2.txt:0+66
```
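The `locations` line prints `[Ljava.lang.String;@...` because concatenating an array with a string calls `Object.toString()`. That yields the array's type descriptor and a hash code, not its elements. The sketch below contrasts this with `java.util.Arrays.toString`; the host name `hadoop` in it is a hypothetical value.

As far as I can tell from the Hadoop 2.x source, the split a map task receives carries no host information:

- `FileSplit.readFields()` restores only the path, start and length, and sets the host list to null.
- `getLocations()` then returns a new empty array on every call.
- `getLocationInfo()` returns `null`.

That would explain both `locationInfo--null` and the different hash in every block. Treat this as a reading of the source; the output above is consistent with it but does not prove it.

```java
import java.util.Arrays;

// Contrasts the default array toString() with Arrays.toString().
final class ArrayPrinting {
    // String concatenation uses Object.toString(): "[Ljava.lang.String;@<hash>".
    static String naive(String[] hosts) {
        return "locations--" + hosts;
    }

    // Arrays.toString() prints the elements, e.g. "[hadoop]" or "[]".
    static String readable(String[] hosts) {
        return "locations--" + Arrays.toString(hosts);
    }

    public static void main(String[] args) {
        String[] empty = new String[0];   // what the task side appears to receive
        String[] hosts = {"hadoop"};      // hypothetical host list
        System.out.println(naive(empty));
        System.out.println(readable(empty));
        System.out.println(readable(hosts));
    }
}
```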

Output for inputpath1.txt (one block per input line, 7 blocks in total):

```
hadoop@hadoop:/home/hadoop/blb$ hdfs dfs -text /user/hadoop/libin/out2/part-m-00001
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@4ff41ba0
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@2341ce62
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@35549603
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@4444ba4f
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@7c23bb8c
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@dee2400
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
====================================================================================
pathname--inputpath1.txt
depth--5
class1--class org.apache.hadoop.mapreduce.lib.input.FileSplit
length--58
locationInfo--null
locations--[Ljava.lang.String;@d7d8325
start--0
string--hdfs://hadoop:9000/user/hadoop/libin/input/inputpath1.txt:0+58
hadoop@hadoop:/home/hadoop/blb$
```
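Why does the 66-byte `inputpath2.txt` end up in `part-m-00000` while the 58-byte `inputpath1.txt` lands in `part-m-00001`? Two facts from Hadoop 2.x appear to account for it:

- `JobSubmitter` sorts new-API input splits by length, largest first, before writing them out.
- In a map-only job, each map task writes its own `part-m-NNNNN` file, numbered by task index.

The JDK-only sketch below models just that ordering with a hypothetical `Split` class. It is an illustration, not the Hadoop implementation.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class SplitOrder {
    // Hypothetical, minimal stand-in for an input split: file name and length in bytes.
    static final class Split {
        final String file;
        final long length;

        Split(String file, long length) {
            this.file = file;
            this.length = length;
        }
    }

    // Sorts splits largest-first, then names each output file after the split's position.
    static List<String> assignOutputs(List<Split> splits) {
        List<Split> sorted = new ArrayList<>(splits);
        sorted.sort(Comparator.comparingLong((Split s) -> s.length).reversed());
        List<String> result = new ArrayList<>();
        for (int i = 0; i < sorted.size(); i++) {
            Split s = sorted.get(i);
            result.add(String.format("part-m-%05d <- %s (%d bytes)", i, s.file, s.length));
        }
        return result;
    }

    public static void main(String[] args) {
        List<Split> splits = List.of(new Split("inputpath1.txt", 58), new Split("inputpath2.txt", 66));
        assignOutputs(splits).forEach(System.out::println);
    }
}
```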

When reposting, please credit the source: https://bianchenghao.cn/79187.html