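In the previous article we used Wireshark to capture the RTSP exchange and see the overall flow. In this article we go through the live555 code to see how each step works.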

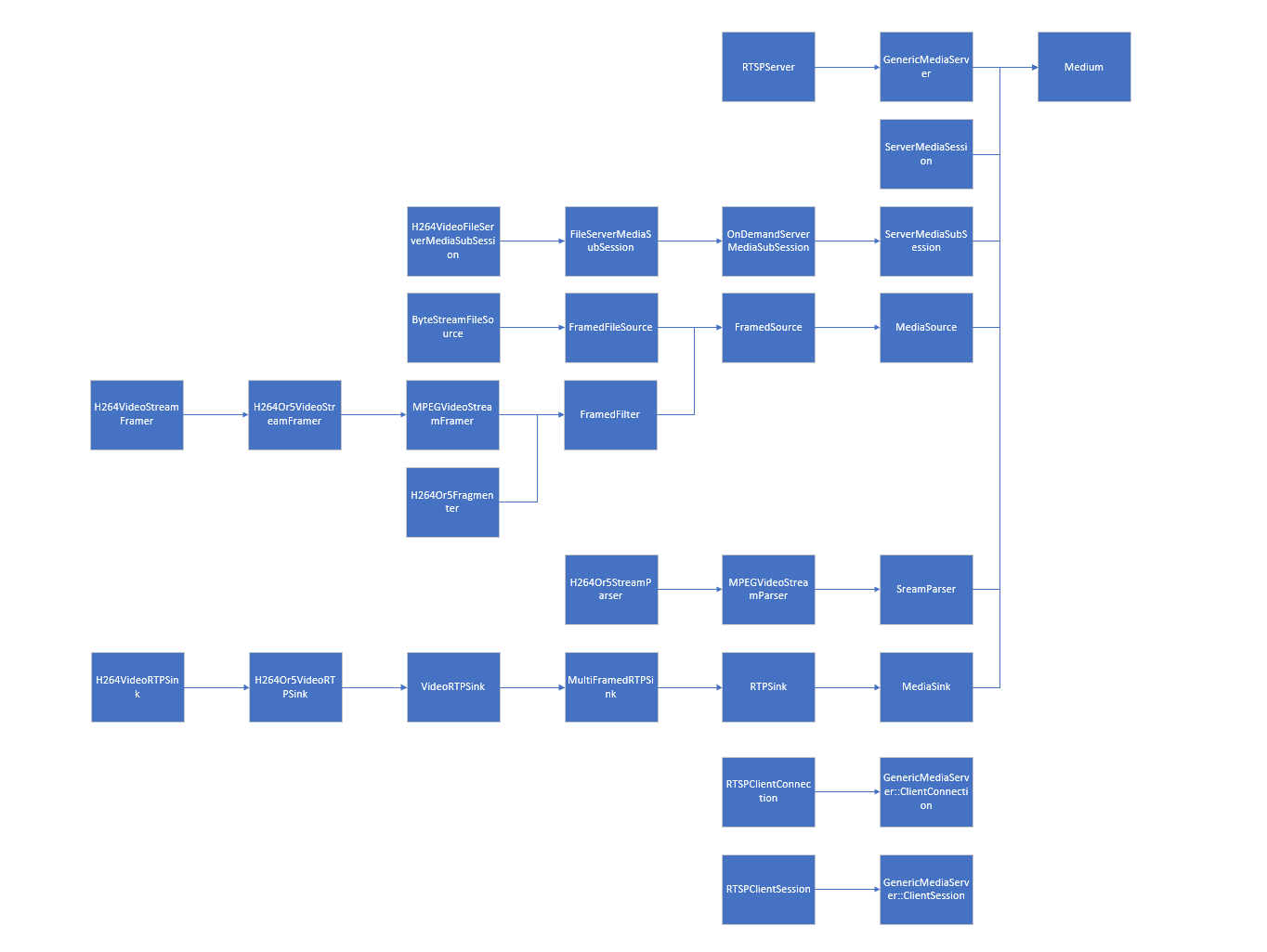

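live555's class hierarchy is quite complex, so the diagram above gives a simple record of the inheritance relationships among the classes involved in streaming an H.264 file.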

I. OPTIONS

OPTIONS is the simplest step: the client asks the server which methods it supports. When the server receives an OPTIONS request, it handles it in handleCmd_OPTIONS:

void RTSPServer::RTSPClientConnection::handleCmd_OPTIONS() {

snprintf((char*)fResponseBuffer, sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\nCSeq: %s\r\n%sPublic: %s\r\n\r\n",

fCurrentCSeq, dateHeader(), fOurRTSPServer.allowedCommandNames());

}

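The server simply formats the list of commands it supports into the response and sends it back to the client. As you can see, live555 supports the following RTSP methods:

OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE, GET_PARAMETER, SET_PARAMETER.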

char const* RTSPServer::allowedCommandNames() {

return "OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE, GET_PARAMETER, SET_PARAMETER";

}
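To make the response format concrete, here is a minimal standalone sketch of the text handleCmd_OPTIONS assembles. buildOptionsResponse is a hypothetical helper, not a live555 function: it uses std::string concatenation instead of snprintf into fResponseBuffer, and the date header is passed in as a ready-made "Date: ...\r\n" string (or empty).

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: produces the same text as the format string in
// handleCmd_OPTIONS(), "RTSP/1.0 200 OK\r\nCSeq: %s\r\n%sPublic: %s\r\n\r\n".
std::string buildOptionsResponse(const std::string& cseq,
                                 const std::string& dateHeader,  // "Date: ...\r\n" or ""
                                 const std::string& allowedCommands) {
  return "RTSP/1.0 200 OK\r\n"
         "CSeq: " + cseq + "\r\n" +   // echo the client's CSeq
         dateHeader +                  // already ends in \r\n when present
         "Public: " + allowedCommands + "\r\n"
         "\r\n";                       // blank line ends the header section
}
```

Passing the string returned by allowedCommandNames() as the third argument gives the same Public: line that live555 sends.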

II. DESCRIBE

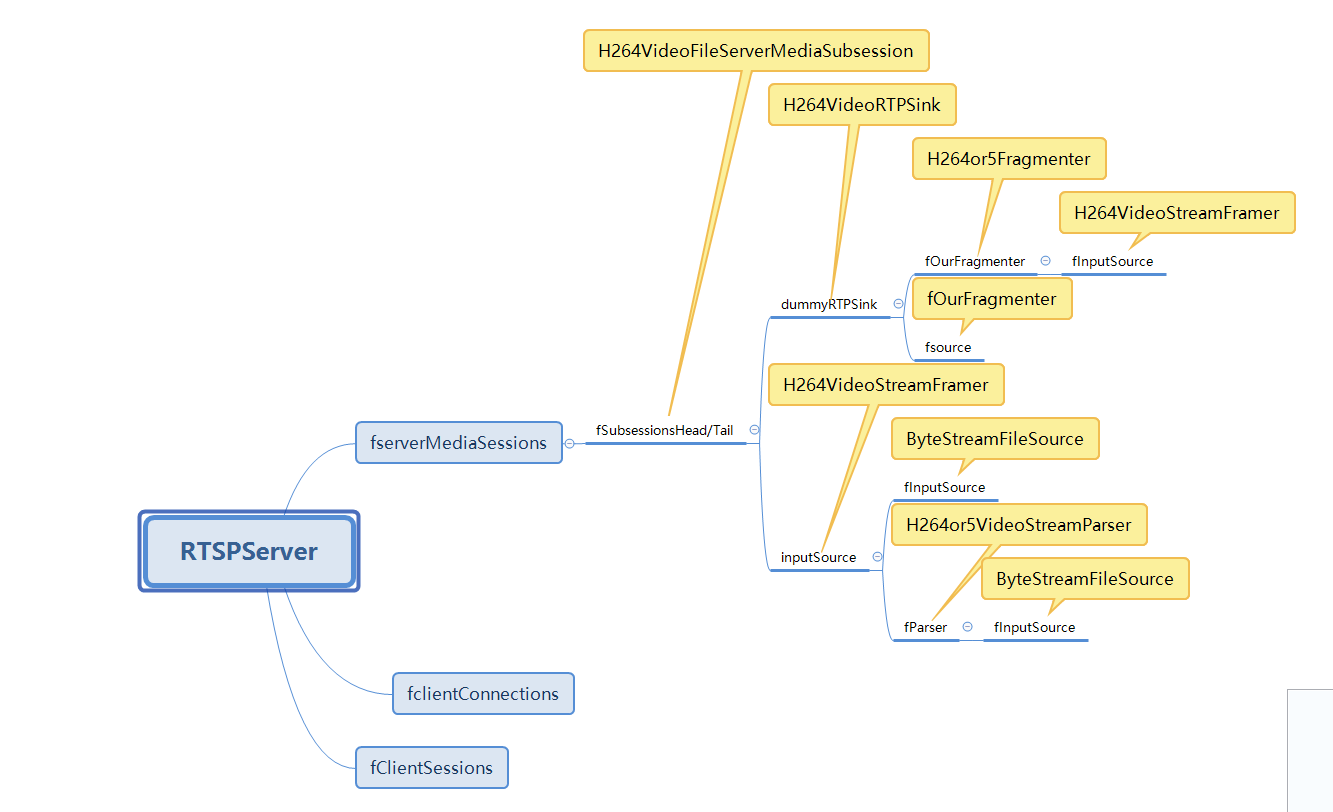

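Many live555 classes reuse the same names for their key member variables, so the diagram above records the actual runtime type of each key variable during the dummy H.264 RTP run. If the code analysis below gets confusing, refer back to this figure.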

In DESCRIBE, the client asks the server for a description of the media, and the server replies with SDP information.

1. Handling the DESCRIBE command

void RTSPServer::RTSPClientConnection

::handleCmd_DESCRIBE(char const* urlPreSuffix, char const* urlSuffix, char const* fullRequestStr) {

char urlTotalSuffix[2*RTSP_PARAM_STRING_MAX];

// enough space for urlPreSuffix/urlSuffix'\0'

urlTotalSuffix[0] = '\0';

if (urlPreSuffix[0] != '\0') {

strcat(urlTotalSuffix, urlPreSuffix);

strcat(urlTotalSuffix, "/");

}

strcat(urlTotalSuffix, urlSuffix);

if (!authenticationOK("DESCRIBE", urlTotalSuffix, fullRequestStr)) return;

// We should really check that the request contains an "Accept:" #####

// for "application/sdp", because that's what we're sending back #####

// Begin by looking up the "ServerMediaSession" object for the specified "urlTotalSuffix":

fOurServer.lookupServerMediaSession(urlTotalSuffix, DESCRIBELookupCompletionFunction, this);

}

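handleCmd_DESCRIBE first joins urlPreSuffix and urlSuffix into urlTotalSuffix and checks authentication. It then uses urlTotalSuffix to look up the corresponding ServerMediaSession; once the lookup completes, DESCRIBELookupCompletionFunction is called, which forwards the result to handleCmd_DESCRIBE_afterLookup.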
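The plumbing in this step, joining the URL parts into urlTotalSuffix and a static completion callback that casts the opaque clientData back to the connection object, can be sketched in standalone C++. MediaSession, lookupSession, and Connection are hypothetical stand-ins for ServerMediaSession, lookupServerMediaSession, and RTSPClientConnection; this lookup completes synchronously, and authentication is omitted.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for ServerMediaSession; only the name is kept.
struct MediaSession { std::string name; };

// C-style completion callback: opaque clientData plus the session found (or nullptr).
using LookupCompletionFunc = void(void* clientData, MediaSession* found);

// Same joining rule as handleCmd_DESCRIBE: "pre/suffix" if there is a pre-suffix.
std::string makeUrlTotalSuffix(const std::string& preSuffix, const std::string& suffix) {
  return preSuffix.empty() ? suffix : preSuffix + "/" + suffix;
}

// Hypothetical lookup that completes immediately by invoking the callback.
void lookupSession(std::map<std::string, MediaSession>& table, const std::string& key,
                   LookupCompletionFunc* completion, void* clientData) {
  auto it = table.find(key);
  completion(clientData, it == table.end() ? nullptr : &it->second);
}

class Connection {
 public:
  void handleDescribe(std::map<std::string, MediaSession>& table,
                      const std::string& preSuffix, const std::string& suffix) {
    lookupSession(table, makeUrlTotalSuffix(preSuffix, suffix), &lookupDone, this);
  }
  std::string result;  // records what afterLookup() decided

 private:
  // Static trampoline, like DESCRIBELookupCompletionFunction: cast clientData
  // back to the connection object and continue in a member function.
  static void lookupDone(void* clientData, MediaSession* found) {
    static_cast<Connection*>(clientData)->afterLookup(found);
  }
  void afterLookup(MediaSession* session) {
    result = (session == nullptr) ? "not found" : "found: " + session->name;
  }
};
```

The static-function-plus-void* shape is what lets a C-style callback interface reach back into a C++ object.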

void RTSPServer::RTSPClientConnection

::DESCRIBELookupCompletionFunction(void* clientData, ServerMediaSession* sessionLookedUp) {

RTSPServer::RTSPClientConnection* connection = (RTSPServer::RTSPClientConnection*)clientData;

connection->handleCmd_DESCRIBE_afterLookup(sessionLookedUp);

}

void RTSPServer::RTSPClientConnection

::handleCmd_DESCRIBE_afterLookup(ServerMediaSession* session) {

char* sdpDescription = NULL;

char* rtspURL = NULL;

do {

if (session == NULL) {

handleCmd_notFound();

break;

}

// Increment the "ServerMediaSession" object's reference count, in case someone removes it

// while we're using it:

session->incrementReferenceCount();

// Then, assemble a SDP description for this session:

sdpDescription = session->generateSDPDescription(fAddressFamily);

if (sdpDescription == NULL) {

// This usually means that a file name that was specified for a

// "ServerMediaSubsession" does not exist.

setRTSPResponse("404 File Not Found, Or In Incorrect Format");

break;

}

unsigned sdpDescriptionSize = strlen(sdpDescription);

// Also, generate our RTSP URL, for the "Content-Base:" header

// (which is necessary to ensure that the correct URL gets used in subsequent "SETUP" requests).

rtspURL = fOurRTSPServer.rtspURL(session, fClientInputSocket);

snprintf((char*)fResponseBuffer, sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\nCSeq: %s\r\n"

"%s"

"Content-Base: %s/\r\n"

"Content-Type: application/sdp\r\n"

"Content-Length: %d\r\n\r\n"

"%s",

fCurrentCSeq,

dateHeader(),

rtspURL,

sdpDescriptionSize,

sdpDescription);

} while (0);

if (session != NULL) {

// Decrement its reference count, now that we're done using it:

session->decrementReferenceCount();

if (session->referenceCount() == 0 && session->deleteWhenUnreferenced()) {

fOurServer.removeServerMediaSession(session);

}

}

delete[] sdpDescription;

delete[] rtspURL;

}

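2. Generating the SDP information

Once the session has been found, it is asked to generate its SDP description by calling generateSDPDescription. The relevant loop is: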

for (subsession = fSubsessionsHead; subsession != NULL;

subsession = subsession->fNext) {

char const* sdpLines = subsession->sdpLines(addressFamily);

if (sdpLines == NULL) continue; // the media's not available

sdpLength += strlen(sdpLines);

}

Inside generateSDPDescription, the session walks through its subsessions and adds the SDP lines of each one.

In our example there is only one subsession, an H264VideoFileServerMediaSubsession.

H264VideoFileServerMediaSubsession derives from OnDemandServerMediaSubsession, so the next call is OnDemandServerMediaSubsession::sdpLines, which generates the subsession's SDP lines.
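A minimal sketch of the loop's idea, assuming a hypothetical Subsession struct in a singly linked list: unavailable subsessions (nullptr SDP lines) are skipped, the total length is summed first, and the available media sections are then appended after the session-level lines. The real generateSDPDescription also builds the session-level lines itself; here they are simply passed in.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical stand-in for a ServerMediaSubsession in a singly linked list.
struct Subsession {
  const char* sdpLines;  // nullptr models "the media's not available"
  Subsession* next;
};

// Two passes over the list: sum the lengths of the available media sections,
// then append them after the session-level lines.
std::string buildSessionSdp(const std::string& sessionLines, const Subsession* head) {
  std::size_t total = sessionLines.size();
  for (const Subsession* s = head; s != nullptr; s = s->next) {
    if (s->sdpLines == nullptr) continue;  // skip unavailable media
    total += std::strlen(s->sdpLines);
  }
  std::string sdp;
  sdp.reserve(total);  // single allocation, similar to a pre-sized buffer
  sdp += sessionLines;
  for (const Subsession* s = head; s != nullptr; s = s->next) {
    if (s->sdpLines != nullptr) sdp += s->sdpLines;
  }
  return sdp;
}
```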

3. Getting the subsession's SDP lines

char const*

OnDemandServerMediaSubsession::sdpLines(int addressFamily) {

if (fSDPLines == NULL) {

// We need to construct a set of SDP lines that describe this

// subsession (as a unicast stream). To do so, we first create

// dummy (unused) source and "RTPSink" objects,

// whose parameters we use for the SDP lines:

unsigned estBitrate;

FramedSource* inputSource = createNewStreamSource(0, estBitrate);

if (inputSource == NULL) return NULL; // file not found

Groupsock* dummyGroupsock = createGroupsock(nullAddress(addressFamily), 0);

unsigned char rtpPayloadType = 96 + trackNumber()-1; // if dynamic

RTPSink* dummyRTPSink = createNewRTPSink(dummyGroupsock, rtpPayloadType, inputSource);

if (dummyRTPSink != NULL && dummyRTPSink->estimatedBitrate() > 0) estBitrate = dummyRTPSink->estimatedBitrate();

setSDPLinesFromRTPSink(dummyRTPSink, inputSource, estBitrate);

Medium::close(dummyRTPSink);

delete dummyGroupsock;

closeStreamSource(inputSource);

}

return fSDPLines;

}

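When the subsession is created, live555 does not yet know the SPS, PPS, and related parameters of the H.264 file, so it simulates sending an RTP stream: it reads and parses the H.264 file first to obtain the SPS and PPS.

First, an input source is created.

Next, a dummy Groupsock is created with a null address and port 0.

Then an RTP sink (the consumer) is created from this Groupsock and the input source. Because the sink's IP address and port are dummies, no RTP stream is actually sent.

Finally, setSDPLinesFromRTPSink obtains the SDP lines for this subsession.

Since this whole process exists only to obtain the SDP lines, all of the dummy media objects are released once the lines have been generated. The result is kept in fSDPLines, so later calls return it directly.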
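The caching behaviour described above can be sketched as follows. SubsessionSdpCache and its probe callback are hypothetical: the callback stands in for the whole "dummy source + dummy sink + setSDPLinesFromRTPSink" sequence and returns std::nullopt to model the "file not found" case, in which nothing is cached and the next call tries again. The payload type uses the same 96 + trackNumber - 1 rule as sdpLines().

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <string>
#include <utility>

// Hypothetical model of the caching in OnDemandServerMediaSubsession::sdpLines().
class SubsessionSdpCache {
 public:
  // The probe stands in for "create dummy source + dummy RTPSink, read SDP lines";
  // it returns std::nullopt when the media is unavailable (e.g. file not found).
  using Probe = std::function<std::optional<std::string>(unsigned char payloadType)>;

  SubsessionSdpCache(unsigned trackNumber, Probe probe)
      : trackNumber_(trackNumber), probe_(std::move(probe)) {}

  // Dynamic payload type, same rule as sdpLines(): 96 + trackNumber - 1.
  unsigned char payloadType() const {
    return static_cast<unsigned char>(96 + trackNumber_ - 1);
  }

  // Runs the probe only while nothing is cached; returns nullptr on failure.
  const std::string* sdpLines() {
    if (!cached_) cached_ = probe_(payloadType());
    return cached_ ? &*cached_ : nullptr;
  }

 private:
  unsigned trackNumber_;
  Probe probe_;
  std::optional<std::string> cached_;
};
```

Caching matters here because the probe reads and parses the file; repeating it on every DESCRIBE would be wasteful.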

4. Creating the media input source

The subsession is an H264VideoFileServerMediaSubsession, so H264VideoFileServerMediaSubsession::createNewStreamSource is called:

FramedSource* H264VideoFileServerMediaSubsession::createNewStreamSource(unsigned /*clientSessionId*/, unsigned& estBitrate) {

estBitrate = 500; // kbps, estimate

// Create the video source:

ByteStreamFileSource* fileSource = ByteStreamFileSource::createNew(envir(), fFileName);

if (fileSource == NULL) return NULL;

fFileSize = fileSource->fileSize();

// Create a framer for the Video Elementary Stream:

return H264VideoStreamFramer::createNew(envir(), fileSource);

}

This function first creates a file source of type ByteStreamFileSource, which reads the file as a byte stream.

It then wraps this file source in an H264VideoStreamFramer:

H264VideoStreamFramer

::H264VideoStreamFramer(UsageEnvironment& env, FramedSource* inputSource, Boolean createParser,

Boolean includeStartCodeInOutput, Boolean insertAccessUnitDelimiters)

: H264or5VideoStreamFramer(264, env, inputSource, createParser,

includeStartCodeInOutput, insertAccessUnitDelimiters) {

}

H264VideoStreamFramer derives from H264or5VideoStreamFramer:

H264or5VideoStreamFramer

::H264or5VideoStreamFramer(int hNumber, UsageEnvironment& env, FramedSource* inputSource,

Boolean createParser,

Boolean includeStartCodeInOutput, Boolean insertAccessUnitDelimiters)

: MPEGVideoStreamFramer(env, inputSource),

fHNumber(hNumber), fIncludeStartCodeInOutput(includeStartCodeInOutput),

fInsertAccessUnitDelimiters(insertAccessUnitDelimiters),

fLastSeenVPS(NULL), fLastSeenVPSSize(0),

fLastSeenSPS(NULL), fLastSeenSPSSize(0),

fLastSeenPPS(NULL), fLastSeenPPSSize(0) {

fParser = createParser

? new H264or5VideoStreamParser(hNumber, this, inputSource, includeStartCodeInOutput)

: NULL;

fFrameRate = 30.0; // We assume a frame rate of 30 fps, unless we learn otherwise (from parsing a VPS or SPS NAL unit)

}

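When createParser is true, this constructor creates a parser (H264or5VideoStreamParser) that parses the H.264 file.

5. Generating the SDP lines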
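The first thing such a parser has to do is split the Annex B byte stream into NAL units at the 0x000001 / 0x00000001 start codes. The sketch below only illustrates that idea on an in-memory buffer; splitAnnexB is a hypothetical name, and H264or5VideoStreamParser itself works incrementally on buffered file input and also tracks access-unit boundaries, which is not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Splits an in-memory Annex B stream into NAL units (start codes removed).
std::vector<Bytes> splitAnnexB(const Bytes& stream) {
  std::vector<Bytes> nalUnits;
  const std::size_t n = stream.size();
  const std::size_t none = SIZE_MAX;
  std::size_t start = none;  // first byte of the NAL unit being collected
  std::size_t i = 0;
  while (i + 2 < n) {
    if (stream[i] == 0 && stream[i + 1] == 0 && stream[i + 2] == 1) {
      if (start != none) {
        // Close the previous NAL unit; trailing zeros belong to the next
        // (4-byte) start code or are padding, not NAL data.
        std::size_t end = i;
        while (end > start && stream[end - 1] == 0) --end;
        nalUnits.emplace_back(stream.begin() + start, stream.begin() + end);
      }
      i += 3;
      start = i;
    } else {
      ++i;
    }
  }
  if (start != none && start < n) nalUnits.emplace_back(stream.begin() + start, stream.end());
  return nalUnits;
}
```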

void OnDemandServerMediaSubsession

::setSDPLinesFromRTPSink(RTPSink* rtpSink, FramedSource* inputSource, unsigned estBitrate) {

if (rtpSink == NULL) return;

char const* mediaType = rtpSink->sdpMediaType();

unsigned char rtpPayloadType = rtpSink->rtpPayloadType();

struct sockaddr_storage const& addressForSDP = rtpSink->groupsockBeingUsed().groupAddress();

portNumBits portNumForSDP = ntohs(rtpSink->groupsockBeingUsed().port().num());

AddressString ipAddressStr(addressForSDP);

char* rtpmapLine = rtpSink->rtpmapLine();

char const* rtcpmuxLine = fMultiplexRTCPWithRTP ? "a=rtcp-mux\r\n" : "";

char const* rangeLine = rangeSDPLine();

char const* auxSDPLine = getAuxSDPLine(rtpSink, inputSource);

if (auxSDPLine == NULL) auxSDPLine = "";

char const* const sdpFmt =

"m=%s %u RTP/AVP %d\r\n"

"c=IN %s %s\r\n"

"b=AS:%u\r\n"

"%s"

"%s"

"%s"

"%s"

"a=control:%s\r\n";

unsigned sdpFmtSize = strlen(sdpFmt)

+ strlen(mediaType) + 5 /* max short len */ + 3 /* max char len */

+ 3/*IP4 or IP6*/ + strlen(ipAddressStr.val())

+ 20 /* max int len */

+ strlen(rtpmapLine)

+ strlen(rtcpmuxLine)

+ strlen(rangeLine)

+ strlen(auxSDPLine)

+ strlen(trackId());

char* sdpLines = new char[sdpFmtSize];

sprintf(sdpLines, sdpFmt,

mediaType, // m= <media>

portNumForSDP, // m= <port>

rtpPayloadType, // m= <fmt list>

addressForSDP.ss_family == AF_INET ? "IP4" : "IP6", ipAddressStr.val(), // c= address

estBitrate, // b=AS:<bandwidth>

rtpmapLine, // a=rtpmap:... (if present)

rtcpmuxLine, // a=rtcp-mux:... (if present)

rangeLine, // a=range:... (if present)

auxSDPLine, // optional extra SDP line

trackId()); // a=control:<track-id>

delete[] (char*)rangeLine; delete[] rtpmapLine;

delete[] fSDPLines; fSDPLines = strDup(sdpLines);

delete[] sdpLines;

}

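These lines fill in, in order, the SDP media type, port, payload type, connection address, bandwidth, and the optional attribute lines.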
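The same sequence of lines can be sketched with std::string, which grows as needed and so avoids the manual sdpFmtSize estimate. MediaSectionParams and buildMediaSection are hypothetical names whose fields mirror the local variables of setSDPLinesFromRTPSink; the test values are made up for illustration.

```cpp
#include <cassert>
#include <string>

// Hypothetical inputs for one SDP media section (names mirror setSDPLinesFromRTPSink).
struct MediaSectionParams {
  std::string mediaType;    // e.g. "video"
  unsigned port;            // 0 for the dummy sink
  unsigned payloadType;     // e.g. 96
  bool ipv4;                // selects "IP4" or "IP6"
  std::string address;      // e.g. "0.0.0.0"
  unsigned estBitrateKbps;  // b=AS:<bandwidth>
  std::string rtpmapLine;   // may be empty
  std::string rtcpmuxLine;  // "a=rtcp-mux\r\n" or empty
  std::string rangeLine;    // may be empty
  std::string auxSDPLine;   // e.g. the H.264 a=fmtp line, may be empty
  std::string trackId;      // e.g. "track1"
};

// Emits the lines in the same order as the sdpFmt string.
std::string buildMediaSection(const MediaSectionParams& p) {
  std::string s;
  s += "m=" + p.mediaType + " " + std::to_string(p.port) + " RTP/AVP " +
       std::to_string(p.payloadType) + "\r\n";
  s += "c=IN " + std::string(p.ipv4 ? "IP4" : "IP6") + " " + p.address + "\r\n";
  s += "b=AS:" + std::to_string(p.estBitrateKbps) + "\r\n";
  s += p.rtpmapLine + p.rtcpmuxLine + p.rangeLine + p.auxSDPLine;  // optional lines
  s += "a=control:" + p.trackId + "\r\n";
  return s;
}
```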

The key part is getAuxSDPLine, which generates the H.264-specific SDP line.

Since our subsession is an H264VideoFileServerMediaSubsession, H264VideoFileServerMediaSubsession::getAuxSDPLine is called:

char const* H264VideoFileServerMediaSubsession::getAuxSDPLine(RTPSink* rtpSink, FramedSource* inputSource) {

if (fAuxSDPLine != NULL) return fAuxSDPLine; // it's already been set up (for a previous client)

if (fDummyRTPSink == NULL) {

// we're not already setting it up for another, concurrent stream

// Note: For H264 video files, the 'config' information ("profile-level-id" and "sprop-parameter-sets") isn't known

// until we start reading the file. This means that "rtpSink"s "auxSDPLine()" will be NULL initially,

// and we need to start reading data from our file until this changes.

fDummyRTPSink = rtpSink;

// Start reading the file:

fDummyRTPSink->startPlaying(*inputSource, afterPlayingDummy, this);

// Check whether the sink's 'auxSDPLine()' is ready:

checkForAuxSDPLine(this);

}

envir().taskScheduler().doEventLoop(&fDoneFlag);

return fAuxSDPLine;

}

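This function first checks whether the aux SDP line has already been generated. If not, it starts the dummy RTPSink so that the file is actually read, calls checkForAuxSDPLine to check whether the line is ready, and then runs the event loop until fDoneFlag is set.

Let's look at checkForAuxSDPLine first. It is a static wrapper that calls checkForAuxSDPLine1 on the subsession: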

void H264VideoFileServerMediaSubsession::checkForAuxSDPLine1() {

nextTask() = NULL;

char const* dasl;

if (fAuxSDPLine != NULL) {

// Signal the event loop that we're done:

setDoneFlag();

} else if (fDummyRTPSink != NULL && (dasl = fDummyRTPSink->auxSDPLine()) != NULL) {

fAuxSDPLine = strDup(dasl);

fDummyRTPSink = NULL;

// Signal the event loop that we're done:

setDoneFlag();

} else if (!fDoneFlag) {

// try again after a brief delay:

int uSecsToDelay = 100000; // 100 ms

nextTask() = envir().taskScheduler().scheduleDelayedTask(uSecsToDelay,

(TaskFunc*)checkForAuxSDPLine, this);

}

}

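This function keeps checking whether the aux SDP line (either our own copy or the dummy RTPSink's) is available. If it is not yet available, it schedules another check 100 ms later; once it is available, it sets fDoneFlag, and the doEventLoop(&fDoneFlag) call in getAuxSDPLine returns.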
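The wait-until-ready pattern (start reading, poll every 100 ms, set a flag, and let doEventLoop(&fDoneFlag) return) can be sketched with a toy single-threaded scheduler. ToyScheduler and AuxSdpWaiter are hypothetical; time is simulated rather than slept, and a separate task stands in for the parser eventually finding the SPS and PPS. This is not live555's TaskScheduler, only the same control flow.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>

// Toy scheduler with a simulated clock (microseconds); nothing actually sleeps.
class ToyScheduler {
 public:
  using Task = std::function<void()>;
  void scheduleDelayedTask(long delayUs, Task t) {
    tasks_.emplace(nowUs_ + delayUs, std::move(t));  // equal times keep insertion order
  }
  // Runs tasks in time order until *watch becomes nonzero or no tasks remain.
  void doEventLoop(const char* watch) {
    while (*watch == 0 && !tasks_.empty()) {
      auto it = tasks_.begin();
      nowUs_ = it->first;
      Task t = std::move(it->second);
      tasks_.erase(it);
      t();
    }
  }
  long nowUs() const { return nowUs_; }

 private:
  long nowUs_ = 0;
  std::multimap<long, Task> tasks_;
};

// Hypothetical model of checkForAuxSDPLine1(): poll until ready, then set the flag.
struct AuxSdpWaiter {
  ToyScheduler& sched;
  const bool& spsPpsReady;  // set by the simulated file-reading task
  char doneFlag = 0;
  int checks = 0;
  void check() {
    ++checks;
    if (spsPpsReady) {
      doneFlag = 1;  // makes doEventLoop() return
    } else if (!doneFlag) {
      sched.scheduleDelayedTask(100000, [this] { check(); });  // retry in 100 ms
    }
  }
};
```

With SPS/PPS becoming available at 250 ms, the checks run at 0, 100, 200, and 300 ms, and the loop exits after the fourth.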

char const* H264VideoRTPSink::auxSDPLine() {

// Generate a new "a=fmtp:" line each time, using our SPS and PPS (if we have them),

// otherwise parameters from our framer source (in case they've changed since the last time that

// we were called):

H264or5VideoStreamFramer* framerSource = NULL;

u_int8_t* vpsDummy = NULL; unsigned vpsDummySize = 0;

u_int8_t* sps = fSPS; unsigned spsSize = fSPSSize;

u_int8_t* pps = fPPS; unsigned ppsSize = fPPSSize;

if (sps == NULL || pps == NULL) {

// We need to get SPS and PPS from our framer source:

if (fOurFragmenter == NULL) return NULL; // we don't yet have a fragmenter (and therefore not a source)

framerSource = (H264or5VideoStreamFramer*)(fOurFragmenter->inputSource());

if (framerSource == NULL) return NULL; // we don't yet have a source

framerSource->getVPSandSPSandPPS(vpsDummy, vpsDummySize, sps, spsSize, pps, ppsSize);

if (sps == NULL || pps == NULL) return NULL; // our source isn't ready

}

// Set up the "a=fmtp:" SDP line for this stream:

u_int8_t* spsWEB = new u_int8_t[spsSize]; // "WEB" means "Without Emulation Bytes"

unsigned spsWEBSize = removeH264or5EmulationBytes(spsWEB, spsSize, sps, spsSize);

if (spsWEBSize < 4) {

// Bad SPS size => assume our source isn't ready

delete[] spsWEB;

return NULL;

}

u_int32_t profileLevelId = (spsWEB[1]<<16) | (spsWEB[2]<<8) | spsWEB[3];

delete[] spsWEB;

char* sps_base64 = base64Encode((char*)sps, spsSize);

char* pps_base64 = base64Encode((char*)pps, ppsSize);

char const* fmtpFmt =

"a=fmtp:%d packetization-mode=1"

";profile-level-id=%06X"

";sprop-parameter-sets=%s,%s\r\n";

unsigned fmtpFmtSize = strlen(fmtpFmt)

+ 3 /* max char len */

+ 6 /* 3 bytes in hex */

+ strlen(sps_base64) + strlen(pps_base64);

char* fmtp = new char[fmtpFmtSize];

sprintf(fmtp, fmtpFmt,

rtpPayloadType(),

profileLevelId,

sps_base64, pps_base64);

delete[] sps_base64;

delete[] pps_base64;

delete[] fFmtpSDPLine; fFmtpSDPLine = fmtp;

return fFmtpSDPLine;

}

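So this function checks whether SPS and PPS are available. If they are, it builds the corresponding a=fmtp line from them: profile-level-id is taken from bytes 1 to 3 of the SPS (after removing emulation-prevention bytes), and sprop-parameter-sets holds the Base64-encoded SPS and PPS.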
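The steps in auxSDPLine can be reproduced in standalone C++: strip emulation-prevention bytes, read profile-level-id from SPS bytes 1 to 3, and Base64-encode the raw SPS and PPS into sprop-parameter-sets. removeEmulationBytes, toBase64, and buildFmtpLine are hypothetical helpers written for this sketch, not live555's removeH264or5EmulationBytes or base64Encode, and the SPS/PPS bytes in the test are just a small example.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Every 0x00 0x00 0x03 sequence becomes 0x00 0x00 (the 0x03 is dropped).
Bytes removeEmulationBytes(const Bytes& in) {
  Bytes out;
  int zeros = 0;
  for (std::uint8_t b : in) {
    if (zeros >= 2 && b == 0x03) { zeros = 0; continue; }
    out.push_back(b);
    zeros = (b == 0) ? zeros + 1 : 0;
  }
  return out;
}

// Standard Base64 (RFC 4648) with '=' padding.
std::string toBase64(const Bytes& data) {
  static const char* tbl = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
  std::string out;
  std::size_t i = 0;
  for (; i + 2 < data.size(); i += 3) {
    std::uint32_t v = (data[i] << 16) | (data[i + 1] << 8) | data[i + 2];
    out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63];
    out += tbl[(v >> 6) & 63];  out += tbl[v & 63];
  }
  if (data.size() - i == 1) {
    std::uint32_t v = data[i] << 16;
    out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63]; out += "==";
  } else if (data.size() - i == 2) {
    std::uint32_t v = (data[i] << 16) | (data[i + 1] << 8);
    out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63];
    out += tbl[(v >> 6) & 63];  out += '=';
  }
  return out;
}

// Returns the a=fmtp line, or "" if the SPS is too short (like "spsWEBSize < 4").
std::string buildFmtpLine(unsigned payloadType, const Bytes& sps, const Bytes& pps) {
  Bytes spsWEB = removeEmulationBytes(sps);
  if (spsWEB.size() < 4) return "";
  unsigned profileLevelId = (spsWEB[1] << 16) | (spsWEB[2] << 8) | spsWEB[3];
  char head[96];
  std::snprintf(head, sizeof head, "a=fmtp:%u packetization-mode=1;profile-level-id=%06X",
                payloadType, profileLevelId);
  return std::string(head) + ";sprop-parameter-sets=" + toBase64(sps) + "," +
         toBase64(pps) + "\r\n";
}
```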

Now let's go back and see how starting the RTPSink produces the SPS and PPS.

6. Starting the RTPSink

Boolean MediaSink::startPlaying(MediaSource& source,

afterPlayingFunc* afterFunc,

void* afterClientData) {

// Make sure we're not already being played:

if (fSource != NULL) {

envir().setResultMsg("This sink is already being played");

return False;

}

// Make sure our source is compatible:

if (!sourceIsCompatibleWithUs(source)) {

envir().setResultMsg("MediaSink::startPlaying(): source is not compatible!");

return False;

}

fSource = (FramedSource*)&source;

fAfterFunc = afterFunc;

fAfterClientData = afterClientData;

return continuePlaying();

}

After these checks, startPlaying calls the virtual function continuePlaying. Our RTPSink is an H264VideoRTPSink, a subclass of H264or5VideoRTPSink, so H264or5VideoRTPSink::continuePlaying is called:

Boolean H264or5VideoRTPSink::continuePlaying() {

// First, check whether we have a 'fragmenter' class set up yet.

// If not, create it now:

if (fOurFragmenter == NULL) {

fOurFragmenter = new H264or5Fragmenter(fHNumber, envir(), fSource, OutPacketBuffer::maxSize,

ourMaxPacketSize() - 12/*RTP hdr size*/);

} else {

fOurFragmenter->reassignInputSource(fSource);

}

fSource = fOurFragmenter;

// Then call the parent class's implementation:

return MultiFramedRTPSink::continuePlaying();

}

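This creates an H.264/H.265 fragmenter (H264or5Fragmenter) and replaces fSource with it, so the sink now reads from the fragmenter. It then calls the parent class's MultiFramedRTPSink::continuePlaying: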
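For NAL units larger than one RTP payload, H.264 over RTP (RFC 6184, packetization-mode=1) uses FU-A fragmentation, which is the fragmenter's job. The sketch shows the FU-A byte layout under the assumption that maxPayload already excludes the 12-byte RTP header and is larger than 2; fragmentNal is a hypothetical name, and it models neither H.265 nor how H264or5Fragmenter buffers its input.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Splits one H.264 NAL unit (no start code) into RTP payloads.
// Assumes maxPayload > 2 (room for the 2-byte FU-A header plus data).
std::vector<Bytes> fragmentNal(const Bytes& nal, std::size_t maxPayload) {
  std::vector<Bytes> payloads;
  if (nal.empty()) return payloads;
  if (nal.size() <= maxPayload) {  // fits: send as a single NAL unit packet
    payloads.push_back(nal);
    return payloads;
  }
  const std::uint8_t fuIndicator = (nal[0] & 0xE0) | 28;  // keep F+NRI, type 28 = FU-A
  const std::uint8_t nalType = nal[0] & 0x1F;             // original type goes in FU header
  const std::size_t chunk = maxPayload - 2;
  std::size_t pos = 1;  // the original NAL header byte is not copied
  while (pos < nal.size()) {
    const std::size_t len = std::min(chunk, nal.size() - pos);
    std::uint8_t fuHeader = nalType;
    if (pos == 1) fuHeader |= 0x80;                 // S bit: first fragment
    if (pos + len == nal.size()) fuHeader |= 0x40;  // E bit: last fragment
    Bytes payload{fuIndicator, fuHeader};
    payload.insert(payload.end(), nal.data() + pos, nal.data() + pos + len);
    payloads.push_back(std::move(payload));
    pos += len;
  }
  return payloads;
}
```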

Boolean MultiFramedRTPSink::continuePlaying() {

// Send the first packet.

// (This will also schedule any future sends.)

buildAndSendPacket(True);

return True;

}

...

void MultiFramedRTPSink::buildAndSendPacket(Boolean isFirstPacket) {

nextTask() = NULL;

fIsFirstPacket = isFirstPacket;

// Set up the RTP header:

unsigned rtpHdr = 0x80000000; // RTP version 2; marker ('M') bit not set (by default; it can be set later)

rtpHdr |= (fRTPPayloadType<<16);

rtpHdr |= fSeqNo; // sequence number

fOutBuf->enqueueWord(rtpHdr);

// Note where the RTP timestamp will go.

// (We can't fill this in until we start packing payload frames.)

fTimestampPosition = fOutBuf->curPacketSize();

fOutBuf->skipBytes(4); // leave a hole for the timestamp

fOutBuf->enqueueWord(SSRC());

// Allow for a special, payload-format-specific header following the

// RTP header:

fSpecialHeaderPosition = fOutBuf->curPacketSize();

fSpecialHeaderSize = specialHeaderSize();

fOutBuf->skipBytes(fSpecialHeaderSize);

// Begin packing as many (complete) frames into the packet as we can:

fTotalFrameSpecificHeaderSizes = 0;

fNoFramesLeft = False;

fNumFramesUsedSoFar = 0;

packFrame();

}
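buildAndSendPacket starts every packet with the 12-byte RTP fixed header from RFC 3550: the first word is 0x80000000 | (payloadType << 16) | seqNo, then the timestamp, then the SSRC. This sketch fills in all three words at once and serializes them in network byte order; makeRtpHeader is a hypothetical helper, whereas live555 leaves a 4-byte hole for the timestamp and fills it in later.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

std::array<std::uint8_t, 12> makeRtpHeader(std::uint8_t payloadType, std::uint16_t seqNo,
                                           std::uint32_t timestamp, std::uint32_t ssrc,
                                           bool marker) {
  // V=2, P=0, X=0, CC=0, then M, PT, sequence number (same layout as 0x80000000 | PT<<16 | seq).
  std::uint32_t first = 0x80000000u | (std::uint32_t(payloadType & 0x7F) << 16) | seqNo;
  if (marker) first |= 0x00800000u;  // marker bit, e.g. last packet of an H.264 access unit
  std::array<std::uint8_t, 12> h{};
  const std::uint32_t words[3] = {first, timestamp, ssrc};
  for (int w = 0; w < 3; ++w)  // big-endian (network byte order)
    for (int b = 0; b < 4; ++b)
      h[w * 4 + b] = static_cast<std::uint8_t>(words[w] >> (24 - 8 * b));
  return h;
}
```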

void MultiFramedRTPSink::packFrame() {

// Get the next frame.

// First, skip over the space we'll use for any frame-specific header:

fCurFrameSpecificHeaderPosition = fOutBuf->curPacketSize();

fCurFrameSpecificHeaderSize = frameSpecificHeaderSize();

fOutBuf->skipBytes(fCurFrameSpecificHeaderSize);

fTotalFrameSpecificHeaderSizes += fCurFrameSpecificHeaderSize;

// See if we have an overflow frame that was too big for the last pkt

if (fOutBuf->haveOverflowData()) {

// Use this frame before reading a new one from the source

unsigned frameSize = fOutBuf->overflowDataSize();

struct timeval presentationTime = fOutBuf->overflowPresentationTime();

unsigned durationInMicroseconds = fOutBuf->overflowDurationInMicroseconds();

fOutBuf->useOverflowData();

afterGettingFrame1(frameSize, 0, presentationTime, durationInMicroseconds);

} else {

// Normal case: we need to read a new frame from the source

if (fSource == NULL) return;

fSource->getNextFrame(fOutBuf->curPtr(), fOutBuf->totalBytesAvailable(),

afterGettingFrame, this, ourHandleClosure, this);

}

}

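continuePlaying thus leads to packFrame. When there is no overflow data left over from the previous packet, packFrame calls fSource->getNextFrame. Remember that fSource is the fragmenter created earlier, of type H264or5Fragmenter.

getNextFrame always calls the virtual function doGetNextFrame, which each subclass implements itself.

So this ends up in H264or5Fragmenter::doGetNextFrame: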

if (fNumValidDataBytes == 1) {

// We have no NAL unit data currently in the buffer. Read a new one:

fInputSource->getNextFrame(&fInputBuffer[1], fInputBufferSize - 1,

afterGettingFrame, this,

FramedSource::handleClosure, this);

}

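When its buffer holds no NAL unit data, H264or5Fragmenter::doGetNextFrame in turn calls fInputSource->getNextFrame.

This input source is an H264VideoStreamFramer (see the key-variable type diagram).

So the call goes to H264VideoStreamFramer's doGetNextFrame. H264VideoStreamFramer derives from H264or5VideoStreamFramer and does not override this virtual function, so H264or5VideoStreamFramer::doGetNextFrame runs: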

else {

// Do the normal delivery of a NAL unit from the parser:

MPEGVideoStreamFramer::doGetNextFrame();

}

It then calls MPEGVideoStreamFramer::doGetNextFrame():

void MPEGVideoStreamFramer::doGetNextFrame() {

fParser->registerReadInterest(fTo, fMaxSize);

continueReadProcessing();

}

continueReadProcessing then calls the parser's parse function:

unsigned acquiredFrameSize = fParser->parse();

fParser is an H264or5VideoStreamParser, so this calls H264or5VideoStreamParser::parse.

parse reads data from the file and parses it. Whenever it finds a NAL unit of type SPS or PPS, it saves a copy of it, so at this point the SPS and PPS are available:
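The pull chain above (the sink pulls from the fragmenter, the fragmenter from the framer, and the framer's parser from the file source) follows one pattern: the non-virtual getNextFrame records the destination buffer and the completion callback, then calls the subclass's virtual doGetNextFrame, and a filter's doGetNextFrame simply asks its own upstream source for data. A toy version of that pattern, with hypothetical class names and synchronous delivery instead of event-loop callbacks:

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstring>
#include <string>
#include <utility>

class ToySource {
 public:
  using AfterGetting = void(void* clientData, std::size_t frameSize);
  virtual ~ToySource() = default;

  // Non-virtual entry point: remember the request, then let the subclass work.
  void getNextFrame(char* to, std::size_t maxSize, AfterGetting* after, void* clientData) {
    to_ = to;
    maxSize_ = maxSize;
    after_ = after;
    clientData_ = clientData;
    doGetNextFrame();
  }

 protected:
  virtual void doGetNextFrame() = 0;                             // each subclass implements this
  void deliver(std::size_t size) { after_(clientData_, size); }  // report completion
  char* to_ = nullptr;
  std::size_t maxSize_ = 0;

 private:
  AfterGetting* after_ = nullptr;
  void* clientData_ = nullptr;
};

// Innermost source: copies a fixed string (stands in for ByteStreamFileSource).
class StringSource : public ToySource {
 public:
  explicit StringSource(std::string data) : data_(std::move(data)) {}

 protected:
  void doGetNextFrame() override {
    const std::size_t n = std::min(data_.size(), maxSize_);
    std::memcpy(to_, data_.data(), n);
    deliver(n);
  }

 private:
  std::string data_;
};

// Filter: asks its upstream source for data, post-processes it (uppercase here),
// then delivers it downstream, like the fragmenter and framer each pulling upstream.
class UpperFilter : public ToySource {
 public:
  explicit UpperFilter(ToySource& in) : in_(in) {}

 protected:
  void doGetNextFrame() override { in_.getNextFrame(to_, maxSize_, &afterUpstream, this); }

 private:
  static void afterUpstream(void* clientData, std::size_t size) {
    auto* self = static_cast<UpperFilter*>(clientData);
    for (std::size_t i = 0; i < size; ++i)
      self->to_[i] = static_cast<char>(std::toupper(static_cast<unsigned char>(self->to_[i])));
    self->deliver(size);
  }
  ToySource& in_;
};
```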

usingSource()->saveCopyOfSPS(fStartOfFrame + fOutputStartCodeSize, curFrameSize() - fOutputStartCodeSize);

...

usingSource()->saveCopyOfPPS(fStartOfFrame + fOutputStartCodeSize, curFrameSize() - fOutputStartCodeSize);
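The bookkeeping behind saveCopyOfSPS/saveCopyOfPPS comes down to checking nal_unit_type, the low 5 bits of the first NAL byte (7 = SPS, 8 = PPS), and keeping the most recently seen copy of each. A hypothetical sketch, assuming start codes have already been stripped from the NAL units:

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical holder for the last-seen parameter sets.
struct ParameterSets {
  std::optional<Bytes> sps, pps;
  bool ready() const { return sps.has_value() && pps.has_value(); }
};

void scanForParameterSets(const std::vector<Bytes>& nalUnits, ParameterSets& out) {
  for (const Bytes& nal : nalUnits) {
    if (nal.empty()) continue;
    switch (nal[0] & 0x1F) {          // nal_unit_type
      case 7: out.sps = nal; break;   // sequence parameter set (latest wins)
      case 8: out.pps = nal; break;   // picture parameter set (latest wins)
      default: break;                 // slices, SEI, etc. are ignored here
    }
  }
}
```

Once ready() is true, the dummy sink's auxSDPLine() has everything it needs.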

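Once the SPS and PPS are available, H264VideoRTPSink::auxSDPLine() can build the corresponding SDP line. That completes the SDP description, which is sent to the client, and the DESCRIBE flow is finished.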


Source (please keep this link when reposting): https://bianchenghao.cn/87257.html