This article presents Cui Qingcai's tutorial on Python's urllib library.

The article is about 1,200 characters long, takes roughly 8 minutes to read, and combines theory with hands-on practice.


Contents:

I. What is the urllib library?

II. Using urllib

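I. What is the urllib library?

urllib is Python's built-in HTTP request library. It is made up of four modules:

urllib.request: the request module

urllib.error: the exception-handling module

urllib.parse: the URL-parsing module (splitting, joining, and so on)

urllib.robotparser: the robots.txt parsing module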
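The following minimal sketch touches each of the four modules without any network access. The URLs are only parsed or wrapped, never fetched, and the robots.txt rule is a made-up example.

```python
from urllib import error, parse, request, robotparser

# urllib.parse: split a URL into named components
parts = parse.urlparse('https://www.baidu.com/s?wd=urllib')

# urllib.request: build a Request object; nothing is sent until it is opened
req = request.Request('http://httpbin.org/get')

# urllib.error: HTTPError is a more specific kind of URLError
http_error_is_url_error = issubclass(error.HTTPError, error.URLError)

# urllib.robotparser: rules can be fed in as lines instead of being downloaded
rp = robotparser.RobotFileParser()
rp.parse(['User-agent: *', 'Disallow: /private/'])  # hypothetical rule

print(parts.netloc)                                       # www.baidu.com
print(req.get_method())                                   # GET
print(http_error_is_url_error)                            # True
print(rp.can_fetch('*', 'http://example.com/private/a'))  # False
```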

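II. Using urllib

1. urlopen

Signature

urllib.request.urlopen(url, data=None, [timeout, ]*, cafile=None, capath=None, cadefault=False, context=None)

The first three parameters are the URL to open, the data to send in the request body, and the timeout in seconds. (cafile, capath and cadefault have been deprecated since Python 3.6 in favor of context, and have since been removed.)

Step one of a crawler, calling urlopen: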
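The request that follows needs network access. For an offline warm-up, note that urlopen also accepts data: URLs, which carry their payload inline and are handled by the built-in DataHandler (Python 3.4+). This sketch uses one to show the same read-then-decode pattern. The payload text is arbitrary.

```python
from urllib import request

# a data: URL embeds its content, so no network connection is made
url = 'data:text/plain;charset=utf-8,hello%20urllib'

with request.urlopen(url) as response:
    raw = response.read()                            # bytes, like an HTTP body
    content_type = response.headers['Content-Type']  # header lookup is case-insensitive

text = raw.decode('utf-8')                           # turn bytes into str

print(raw)           # b'hello urllib'
print(text)          # hello urllib
print(content_type)  # text/plain;charset=utf-8
```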

from urllib import request

response = request.urlopen('http://www.baidu.com')

print(response.read().decode('utf-8'))  # read the response body (bytes) and decode it to text

POST requests (building the body with parse):
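The live POST request depends on httpbin.org. The encoding step itself can be checked offline. This sketch builds the same form body and wraps it in a Request without sending anything, which shows that passing data switches the method to POST.

```python
from urllib import parse, request

# urlencode turns a dict into an application/x-www-form-urlencoded string
form = parse.urlencode({'word': 'hello'})

# the data argument must be bytes, so encode the string first
data = bytes(form, encoding='utf8')   # equivalent to form.encode('utf-8')

# constructing a Request sends nothing; it only records what would be sent
req = request.Request('http://httpbin.org/post', data=data)
plain = request.Request('http://httpbin.org/post')  # same URL, no body

print(form)                # word=hello
print(data)                # b'word=hello'
print(req.get_method())    # POST, because data is not None
print(plain.get_method())  # GET
```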

from urllib import parse

data = bytes(parse.urlencode({'word': 'hello'}), encoding='utf8')  # the POST body must be bytes

response1 = request.urlopen('http://httpbin.org/post', data=data)  # http://httpbin.org/ is a site for testing HTTP requests

print(response1.read())

Timeout setting
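In CPython's implementation, the two kinds of timeout reach you differently. A timeout while connecting arrives wrapped in URLError. A timeout while waiting for the response is raised as a bare socket.timeout, which the isinstance check on e.reason does not see.

This sketch catches both. A local socket that accepts connections but never replies stands in for a slow server. The opener is built with ProxyHandler({}) so that proxy environment variables cannot redirect the request. fetch is a hypothetical helper name.

```python
import socket
from urllib import error, request


def fetch(opener, url, timeout):
    """Return the response body, or 'TIME OUT' if the request times out."""
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.read()
    except error.URLError as e:
        # a timeout while connecting is wrapped in URLError
        if isinstance(e.reason, socket.timeout):
            return 'TIME OUT'
        raise
    except socket.timeout:
        # a timeout while waiting for the response is raised unwrapped
        return 'TIME OUT'


# local socket that completes the TCP handshake (via the OS backlog) but never answers
silent = socket.socket()
silent.bind(('127.0.0.1', 0))
silent.listen(1)
port = silent.getsockname()[1]

# ProxyHandler({}) ignores proxy environment variables so the request stays local
opener = request.build_opener(request.ProxyHandler({}))
try:
    result = fetch(opener, f'http://127.0.0.1:{port}/', timeout=0.2)
finally:
    silent.close()

print(result)  # TIME OUT
```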

response2 = request.urlopen('http://httpbin.org/get', timeout=1)  # set the timeout to 1 second

print(response2.read())

import socket

from urllib import error

try:

    response3 = request.urlopen('http://httpbin.org/get', timeout=0.1)  # set the timeout to 0.1 seconds

except error.URLError as e:

    if isinstance(e.reason, socket.timeout):  # use isinstance to check whether the error was caused by a timeout

        print('TIME OUT')

2. Responses
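The response examples in this section need a reachable site. This sketch shows the same response API offline. It starts a throwaway local server from Python's http.server module; the Hello handler, its body and its headers are made up for the demo. ProxyHandler({}) keeps proxy environment variables from intercepting the request.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib import request


class Hello(BaseHTTPRequestHandler):
    """Hypothetical local endpoint that returns a short text body."""

    def do_GET(self):
        body = b'hello'
        self.send_response(200)  # also adds Server and Date headers
        self.send_header('Content-Type', 'text/plain; charset=utf-8')
        self.send_header('Content-Length', str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request log line


server = HTTPServer(('127.0.0.1', 0), Hello)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()

opener = request.build_opener(request.ProxyHandler({}))
try:
    with opener.open(f'http://127.0.0.1:{server.server_port}/') as response:
        response_type = type(response).__name__
        status = response.status
        content_type = response.getheader('Content-Type')
        header_names = [name for name, _ in response.getheaders()]
        body = response.read().decode('utf-8')
finally:
    server.shutdown()
    server.server_close()

print(response_type)  # HTTPResponse
print(status)         # 200
print(content_type)   # text/plain; charset=utf-8
print(body)           # hello
print(header_names)   # list of header names sent by the server
```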

Response type

print(type(response))  # reuses the original response; you can also make a new request and inspect that response instead

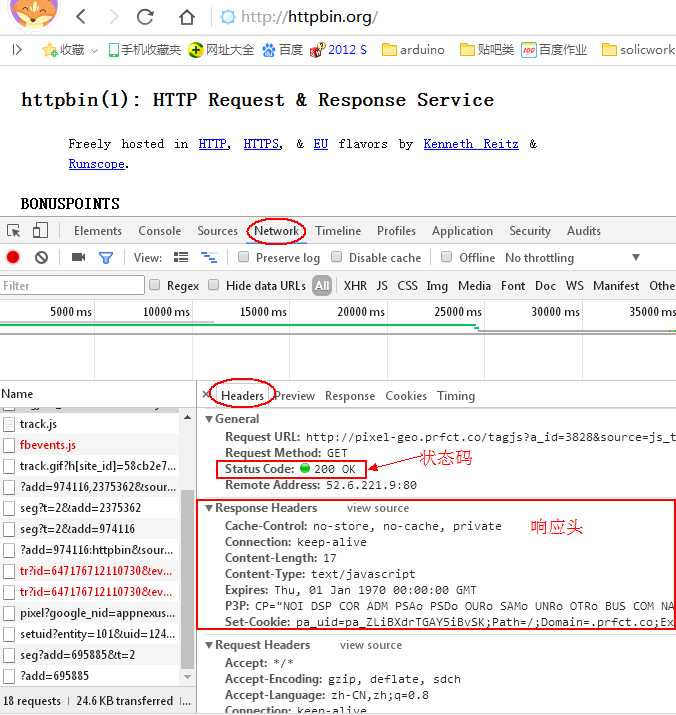

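Output: <class 'http.client.HTTPResponse'>

Status code and response headers

print(response.status)  # status code

print(response.getheaders())  # all response headers, as a list of (name, value) tuples

print(response.getheader('Set-Cookie'))  # a single header's value, looked up by name

3. Request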
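A Request object can be inspected before anything is sent, which makes it easier to see what urlopen will transmit. This sketch uses the httpbin.org URL from this section without contacting it. The X-Demo header and the PUT method are illustrative choices, not part of the original example.

```python
from urllib import request

req = request.Request(
    'http://httpbin.org/post',
    data=b'name=Germey',
    headers={'User-Agent': 'Mozilla/5.0'},
    method='PUT',  # an explicit method overrides the POST default for requests with data
)
req.add_header('X-Demo', '1')  # hypothetical header, for illustration only

print(req.full_url)      # http://httpbin.org/post
print(req.host)          # httpbin.org
print(req.get_method())  # PUT
# urllib stores header names normalized with str.capitalize()
print(req.get_header('User-agent'))  # Mozilla/5.0
print(req.has_header('X-demo'))      # True
```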

from urllib import request

from urllib import parse, error

request1 = request.Request('http://python.org/')  # wrap the URL in a Request object; for a plain GET like this you could skip it and pass the URL to urlopen directly

response = request.urlopen(request1)

print(response.read().decode('utf-8'))

from urllib import parse, request, error

import socket

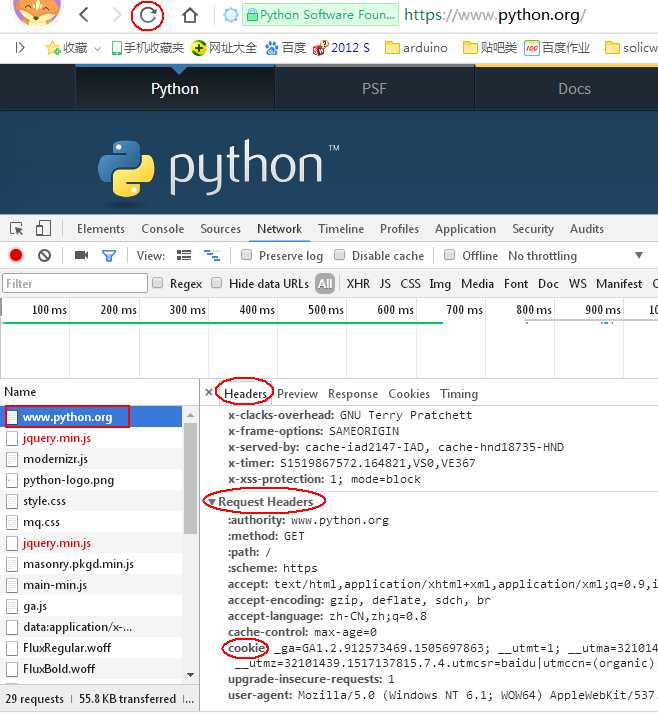

url = 'http://httpbin.org/post'  # build a POST request

headers = {

'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64)',

'Host':'httpbin.org'

}

dict1 = {

'name':'Germey'

}

data = bytes(parse.urlencode(dict1), encoding='utf8')  # form data

req = request.Request(url=url, data=data, headers=headers, method='POST')  # assemble a complete Request object

response = request.urlopen(req)

print(response.read().decode('utf-8'))  # the output echoes back the headers and dict1 we built

Another way to build a POST request:

req1 = request.Request(url = url,data = data,method = 'POST')

req1.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; WOW64)')  # add the header with add_header

response = request.urlopen(req1)

print(response.read().decode('utf-8'))

4. Handler:
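A handler is an object that an OpenerDirector consults for a given URL scheme. build_opener combines the handlers you pass with the default ones. The mechanism can be seen in this minimal sketch: a handler that defines a method named data_request is called as a pre-processor for every data: URL the opener opens. The RecordingHandler class is hypothetical, and a data: URL stands in for a real site so nothing goes over the network.

```python
from urllib import request


class RecordingHandler(request.BaseHandler):
    """Hypothetical handler that records each data: URL before it is opened.

    OpenerDirector treats methods named <scheme>_request as pre-processors,
    so data_request runs for every data: URL passed to opener.open().
    """

    def __init__(self):
        self.log = []

    def data_request(self, req):
        self.log.append(req.full_url)
        return req  # a pre-processor must return the (possibly modified) request


recorder = RecordingHandler()
# build_opener adds the default handlers (HTTP, HTTPS, data:, redirects, ...) plus ours
opener = request.build_opener(recorder)

with opener.open('data:text/plain,hello%20handler') as resp:
    body = resp.read()

print(body)          # b'hello handler'
print(recorder.log)  # ['data:text/plain,hello%20handler']
```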

Proxies (official documentation: https://docs.python.org/3/library/urllib.request.html#module-urllib.request)

from urllib import request

proxy_handler = request.ProxyHandler({

    'http': 'http://127.0.0.1:9743',

    'https': 'https://127.0.0.1:9743'

})  # this proxy address has expired and my proxy source was recently blocked, so I can't demonstrate it live. Sorry!

opener = request.build_opener(proxy_handler)

response = opener.open('http://www.baidu.com')

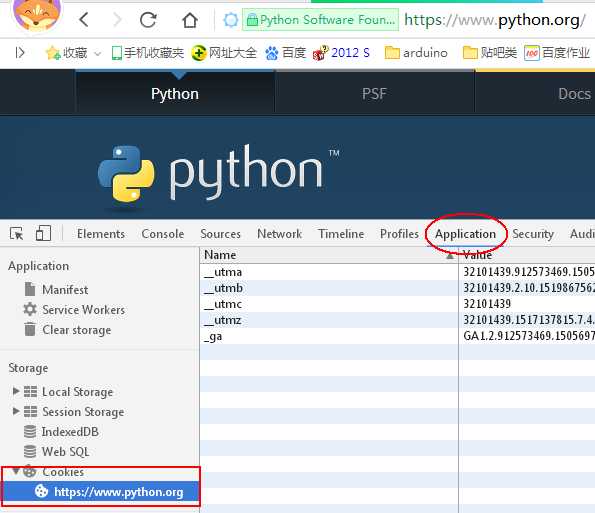

print(response.read())

5. Cookies (small text files stored on the client that identify the user and keep a login session alive)
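The cookie example in this section depends on baidu.com being reachable. This sketch runs the same CookieJar and HTTPCookieProcessor setup against a throwaway local server, so the result is reproducible. The SetCookie handler and the session=abc123 cookie are made up for the demo. ProxyHandler({}) keeps proxy environment variables from redirecting the request.

```python
import threading
from http import cookiejar
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib import request


class SetCookie(BaseHTTPRequestHandler):
    """Hypothetical local endpoint that hands out a session cookie."""

    def do_GET(self):
        self.send_response(200)
        self.send_header('Set-Cookie', 'session=abc123; Path=/')
        self.send_header('Content-Length', '2')
        self.end_headers()
        self.wfile.write(b'ok')

    def log_message(self, format, *args):
        pass  # keep the demo quiet


server = HTTPServer(('127.0.0.1', 0), SetCookie)
threading.Thread(target=server.serve_forever, daemon=True).start()

cookie = cookiejar.CookieJar()
opener = request.build_opener(
    request.ProxyHandler({}),             # ignore proxy env vars for this local demo
    request.HTTPCookieProcessor(cookie),  # store Set-Cookie headers in the jar
)
try:
    opener.open(f'http://127.0.0.1:{server.server_port}/').read()
finally:
    server.shutdown()
    server.server_close()

cookies = {item.name: item.value for item in cookie}
print(cookies)  # {'session': 'abc123'}
```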

from urllib import request

from http import cookiejar

cookie = cookiejar.CookieJar()  # create a CookieJar to hold the cookies

handler = request.HTTPCookieProcessor(cookie)

opener = request.build_opener(handler)

response =opener.open('http://www.baidu.com')

for item in cookie:

    print(item.name + '=' + item.value)

6. Exception handling

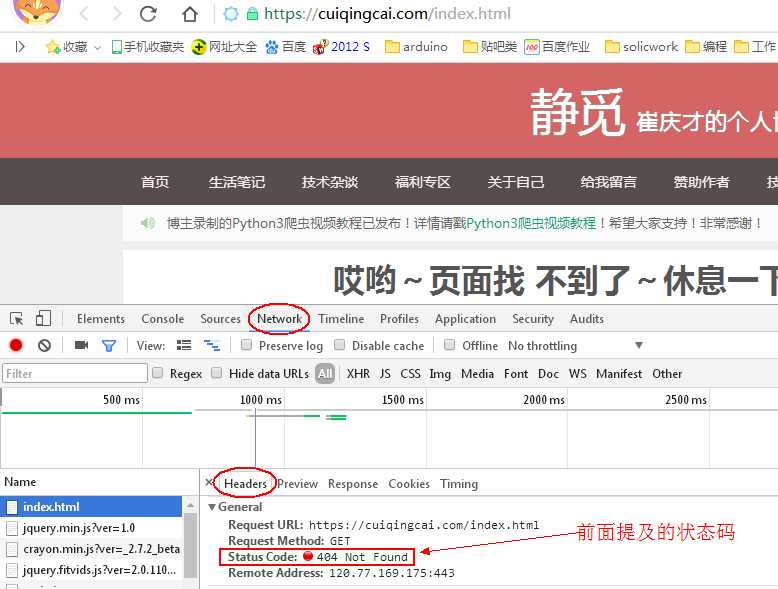

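from urllib import request, error

# try to access a page that does not exist

try:

    response = request.urlopen('http://www.cuiqingcai.com/index.html')  # http://www.cuiqingcai.com/ is Cui Qingcai's personal blog

except error.URLError as e:

    print(e.reason)  # print the reason to check that the caught exception is the one we expected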
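Whether the cuiqingcai.com request fails with an HTTP status or a network error depends on the live site. This sketch reproduces both cases deterministically against local endpoints:

- A throwaway server that answers every request with 404 produces HTTPError.
- A port with nothing listening produces URLError, whose reason is the underlying socket error.

NotFound and classify are hypothetical names for this demo.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib import error, request


class NotFound(BaseHTTPRequestHandler):
    """Hypothetical local endpoint that answers every GET with 404."""

    def do_GET(self):
        self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def classify(opener, url):
    """Describe how a request to url failed, or return 'OK'."""
    try:
        opener.open(url, timeout=2).close()
    except error.HTTPError as e:  # must come first: HTTPError is a subclass of URLError
        e.close()                 # release the error response body
        return f'HTTPError {e.code} {e.reason}'
    except error.URLError as e:
        return f'URLError {type(e.reason).__name__}'
    return 'OK'


opener = request.build_opener(request.ProxyHandler({}))  # ignore proxy env vars

server = HTTPServer(('127.0.0.1', 0), NotFound)
threading.Thread(target=server.serve_forever, daemon=True).start()
try:
    not_found = classify(opener, f'http://127.0.0.1:{server.server_port}/missing')
finally:
    server.shutdown()
    server.server_close()

# grab a free port, then close it so nothing is listening there
probe = socket.socket()
probe.bind(('127.0.0.1', 0))
closed_port = probe.getsockname()[1]
probe.close()
refused = classify(opener, f'http://127.0.0.1:{closed_port}/')

print(not_found)  # HTTPError 404 Not Found
print(refused)    # typically: URLError ConnectionRefusedError
```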

Exceptions that can be caught (official documentation: https://docs.python.org/3/library/urllib.error.html#module-urllib.error):

try:

    response = request.urlopen('http://www.cuiqingcai.com/index.html')

except error.HTTPError as e:  # catch HTTPError before URLError: HTTPError is a subclass of URLError, so the more specific handler must come first

    print(e.reason, e.code, e.headers, sep='\n')

except error.URLError as e:

    print(e.reason)

else:

    print('Request Successfully')

try:

    response = request.urlopen('http://www.baidu.com', timeout=0.01)  # trigger a timeout error

except error.URLError as e:

    print(type(e.reason))

    if isinstance(e.reason, socket.timeout):  # check the error type

        print('TIME OUT')

7. URL parsing (official documentation: https://docs.python.org/3/library/urllib.parse.html#module-urllib.parse):

urlparse (splits a URL into its components and assigns each one to a field)
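urlparse returns a ParseResult, a named tuple whose six fields can be read by name or by index. This sketch reads those fields and decodes the query string with parse_qs. It also shows a common gotcha: without a leading '//', the host is not recognized as the netloc. The '#top' fragment is added only for illustration.

```python
from urllib.parse import parse_qs, urlparse

result = urlparse('https://www.baidu.com/s?wd=urllib&ie=UTF-8#top')

# fields by name or by index: scheme, netloc, path, params, query, fragment
print(result.scheme, result.netloc, result.path)  # https www.baidu.com /s
print(result[4])                                  # wd=urllib&ie=UTF-8
print(result.fragment)                            # top

# parse_qs turns the query string into a dict of lists
query = parse_qs(result.query)
print(query)  # {'wd': ['urllib'], 'ie': ['UTF-8']}

# without '//', urlparse cannot tell the host apart from the path
no_slashes = urlparse('www.baidu.com/s', scheme='https')
print(repr(no_slashes.netloc), no_slashes.path)   # '' www.baidu.com/s
```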

urllib.parse.urlparse(urlstring, scheme='', allow_fragments=True)  # (URL, default scheme, whether to split off the fragment after '#')

from urllib.parse import urlparse

result = urlparse('https://www.baidu.com/s?wd=urllib&ie=UTF-8')

print(type(result),result) #<class 'urllib.parse.ParseResult'>

# no scheme in the URL: the scheme argument supplies one

result = urlparse('www.baidu.com/s?wd=urllib&ie=UTF-8',scheme = 'https')

print(result)

# the URL already has a scheme: the scheme argument is ignored

result1 = urlparse('http://www.baidu.com/s?wd=urllib&ie=UTF-8',scheme = 'https')

print(result1)

# using the allow_fragments parameter

result1 = urlparse('http://www.baidu.com/s?#comment',allow_fragments = False)

result2 = urlparse('http://www.baidu.com/s?wd=urllib&ie=UTF-8#comment',allow_fragments = False)

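print(result1, result2)  # with allow_fragments=False the '#...' part is not split off; it stays attached to the component before it (the query here, or the path if there is no query). Compare result1 and result2

urlunparse (the inverse of urlparse)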
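One way to see that urlunparse inverts urlparse is a round trip. Because ParseResult is a named tuple, a single component can be swapped with _replace before the URL is rebuilt. The replacement query 'wd=python' is only an example.

```python
from urllib.parse import urlparse, urlunparse

url = 'https://www.baidu.com/s?wd=urllib&ie=UTF-8'
parts = urlparse(url)

# round trip: feeding the six components back reproduces the URL
print(urlunparse(parts) == url)  # True

# swap one component, then rebuild
new_parts = parts._replace(query='wd=python')
rebuilt = urlunparse(new_parts)
print(rebuilt)                   # https://www.baidu.com/s?wd=python
print(new_parts.geturl())        # same result via the ParseResult helper
```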

For example:

from urllib.parse import urlunparse

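# data can be filled with the components returned by urlparse; all six (scheme, netloc, path, params, query, fragment) must be present, even the empty ones, or urlunparse raises an error

data = ['https', 'www.baidu.com', '/s', '', 'wd=urllib&ie=UTF-8', '']

print(urlunparse(data))  # https://www.baidu.com/s?wd=urllib&ie=UTF-8

urljoin (joining URLs):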
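When the second argument is relative, urljoin resolves it against the base following the usual relative-reference rules. This sketch covers the common cases, including the trailing-slash pitfall. The paths a/b.html, c.html and page.html are made up for illustration.

```python
from urllib.parse import urljoin

base = 'http://www.baidu.com/a/b.html'

print(urljoin(base, 'c.html'))     # http://www.baidu.com/a/c.html (replaces the last segment)
print(urljoin(base, '../c.html'))  # http://www.baidu.com/c.html (climbs one directory)
print(urljoin(base, '/c.html'))    # http://www.baidu.com/c.html (replaces the whole path)
print(urljoin(base, '?page=2'))    # http://www.baidu.com/a/b.html?page=2 (keeps the path)
print(urljoin(base, '//www.cuiqingcai.com/x'))  # http://www.cuiqingcai.com/x (keeps the scheme)

# the trailing slash on the base decides whether its last segment is a "directory"
print(urljoin('http://www.baidu.com/docs', 'page.html'))   # http://www.baidu.com/page.html
print(urljoin('http://www.baidu.com/docs/', 'page.html'))  # http://www.baidu.com/docs/page.html
```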

from urllib.parse import urljoin

# In short: almost anything can be joined onto the base URL, whether it is a real link or an arbitrary string. If the second argument is already a complete URL with its own 'http' or 'https' scheme, no joining happens and that URL is returned unchanged

print(urljoin('http://www.baidu.com', 'FAQ.html'))

# http://www.baidu.com/FAQ.html

print(urljoin('http://www.baidu.com', 'http://www.cuiqingcai.com/FAQ.html'))

# http://www.cuiqingcai.com/FAQ.html

print(urljoin('https://www.baidu.com/about.html', 'http://www.cuiqingcai.com/FAQ.html'))

# http://www.cuiqingcai.com/FAQ.html

print(urljoin('http://www.baidu.com/about.html', 'https://www.cuiqingcai.com/FAQ.html'))

# https://www.cuiqingcai.com/FAQ.html

urlencode (converts a dict into GET request parameters):
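Beyond plain ASCII values, urlencode also handles spaces, lists and non-ASCII text. This sketch shows those cases and uses parse_qs to decode the result back. quote is included only to contrast how it escapes a space.

```python
from urllib.parse import parse_qs, quote, urlencode

# spaces become '+' because urlencode uses quote_plus by default
spaces = urlencode({'q': 'hello world'})
print(spaces)  # q=hello+world

# doseq=True expands a list into repeated keys
repeated = urlencode({'tag': ['a', 'b']}, doseq=True)
print(repeated)  # tag=a&tag=b

# non-ASCII text is UTF-8 encoded and percent-escaped; parse_qs reverses it
encoded = urlencode({'name': '崔庆才'})
decoded = parse_qs(encoded)
print(encoded)
print(decoded)  # {'name': ['崔庆才']}

# quote() is for a single path segment: spaces become %20, not '+'
print(quote('urllib tutorial'))  # urllib%20tutorial
```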

from urllib.parse import urlencode

params = {

'name':'Arise',

'age':'21'

}

base_url = 'http://www.baidu.com?'

url = base_url+urlencode(params)

print(url)

# http://www.baidu.com?name=Arise&age=21

robotparser (parses robots.txt):

Official documentation: https://docs.python.org/3/library/urllib.robotparser.html#module-urllib.robotparser (for reference only)
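The musi-cal.com example depends on a live robots.txt. RobotFileParser.parse() accepts the file's lines directly, so the same queries can be tried offline. This sketch uses a hypothetical robots.txt whose values mirror those in the documented example; example.com stands in for a real site.

```python
from urllib import robotparser

# hypothetical robots.txt content
lines = [
    'User-agent: *',
    'Crawl-delay: 6',
    'Request-rate: 3/20',
    'Disallow: /cgi-bin/',
]

rp = robotparser.RobotFileParser()
rp.parse(lines)  # no download: the rules come straight from the list

rate = rp.request_rate('*')
print(rate.requests, rate.seconds)  # 3 20
print(rp.crawl_delay('*'))          # 6
print(rp.can_fetch('*', 'http://example.com/cgi-bin/search?city=x'))  # False
print(rp.can_fetch('*', 'http://example.com/'))                       # True
```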

import urllib.robotparser

rp = urllib.robotparser.RobotFileParser()

rp.set_url("http://www.musi-cal.com/robots.txt")

rp.read()

rrate = rp.request_rate("*")

print(rrate.requests)  # 3

print(rrate.seconds)  # 20

print(rp.crawl_delay("*"))  # 6

print(rp.can_fetch("*", "http://www.musi-cal.com/cgi-bin/search?city=San+Francisco"))  # False

print(rp.can_fetch("*", "http://www.musi-cal.com/"))  # True

That concludes today's detailed walkthrough of the urllib library. Thanks for reading.


If you repost this article, please credit the source: http://bianchenghao.cn/11285.html