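First, let's look at what a web crawler is and how it works.

1. As front-end developers, we all know that pressing F12 opens the developer tools, where you can inspect a page. When you request a URL, the server returns an HTML page, which the browser loads, parses, and renders into the web page we see.

2. A web page usually carries some of the site's data. Anjuke, for example, exposes public data such as its property listings.

3. When you need that data, you can use a crawler to collect the public parts of it. A crawler is simply code that imitates what a person would normally do in a browser.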

let request = require('request')

let cheerio = require('cheerio')

Install two libraries, request and cheerio. The first sends HTTP requests; the second parses HTML documents using jQuery-style syntax.

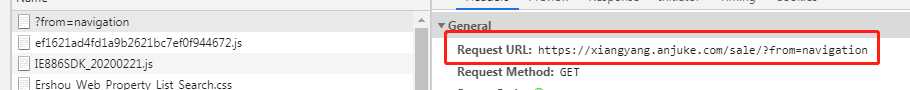

- Open Anjuke, press F12, and look at the address being requested, for example xiangyang.anjuke.com. Find the request URL:

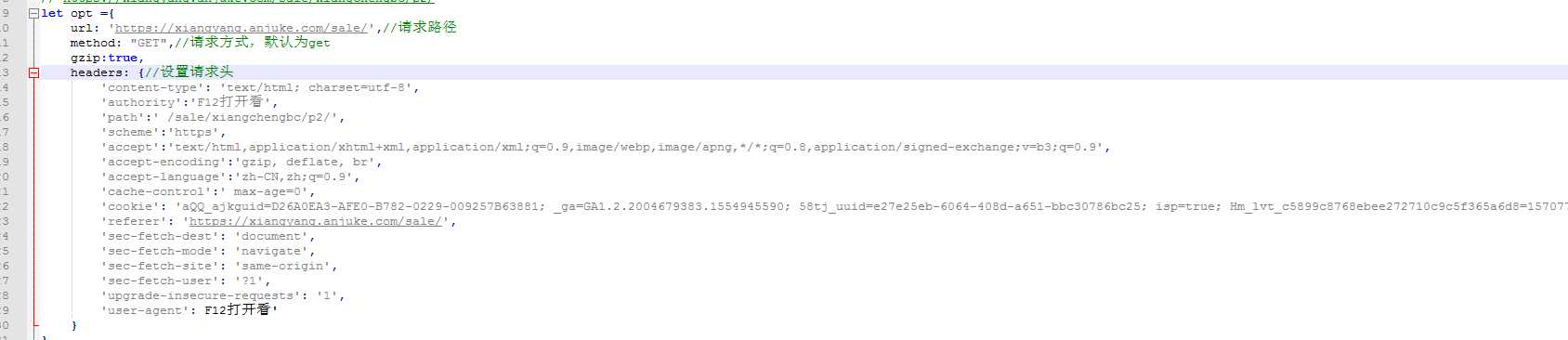

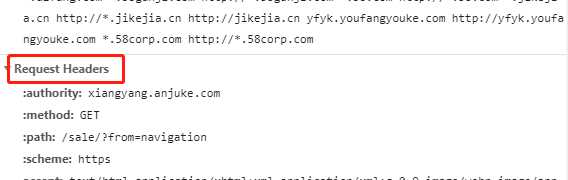

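It returns an HTML document; scroll down and you can see the listing data. You can read it on screen, but you can't very well write each entry down by hand, so this is where a crawler does the work for you.

- First, assemble the HTTP headers to disguise the request as a browser. A browser automatically attaches a user-agent header to every request, which tells the server which browser and operating system you are using:

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36'

- The user-agent is not the only thing checked; cookies are checked as well. If the request does not carry the site's cookie, the site can tell that it did not come from a browser and will ban your IP. So copy the cookie from the browser and add it to the headers:

'cookie': 'aQQ_ajkguid=D26A0EA3-AFE0-B782-0229-009257B63881; _ga=GA1.2.2004679383.1554945590.....

- The referer header tells the server which page you came from. I am not sure whether it is checked, but it is safer to include it, for example:

'referer': 'https://xiangyang.anjuke.com/sale/'

- Everything in my headers can be copied straight from the request headers shown in F12.

- With these headers in place, the server takes the request for a browser rather than a crawler. Once the disguise works, we can move on to parsing the returned HTML document.

- Anjuke has anti-scraping measures, so you cannot simply request the URL directly; the request has to look like it comes from a browser. The site can inspect the HTTP headers, and a request whose headers lack the usual browser information will be detected and its IP banned.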
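The header assembly described above can be sketched as a small helper. This is only an illustration: `buildHeaders` is a hypothetical name, the cookie value is a placeholder, and which headers the site actually checks is an assumption, not something the article confirms.

```javascript
// Hypothetical helper: collects the browser-like headers discussed above.
function buildHeaders({ cookie, referer }) {
  const headers = {
    // Tells the server which browser and operating system is making the request
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36',
    'accept-language': 'zh-CN,zh;q=0.9',
  }
  // Only add the optional headers when a value was supplied
  if (cookie) headers.cookie = cookie
  if (referer) headers.referer = referer
  return headers
}

const headers = buildHeaders({
  cookie: 'name=value', // placeholder: paste the cookie string copied from F12
  referer: 'https://xiangyang.anjuke.com/sale/',
})
console.log(Object.keys(headers))
```

The returned object can be passed as the `headers` field of the request options, so the cookie and referer can be swapped without editing the rest of the options.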

const request = require('request') // sends the HTTP requests
const cheerio = require('cheerio') // parses the returned HTML with jQuery-style selectors
const fs = require('fs') // writes the result to disk

// Example of a paginated listing URL: https://xiangyang.anjuke.com/sale/xiangchengbc/p2/

let opt = {
  url: 'https://xiangyang.anjuke.com/sale/', // request URL
  method: 'GET', // request method (GET is the default)
  gzip: true, // let request decompress gzip/deflate responses
  headers: {
    // Request headers copied from the F12 Network panel.
    // authority, path and scheme are HTTP/2 pseudo-headers shown by the browser,
    // not headers to send by hand, so they are left out.
    'content-type': 'text/html; charset=utf-8',
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    // br is omitted because request's gzip option only decodes gzip and deflate
    'accept-encoding': 'gzip, deflate',
    'accept-language': 'zh-CN,zh;q=0.9',
    'cache-control': 'max-age=0',

    // Cookie copied from the browser (shortened here; paste your own full value on a single line,
    // since a quoted string cannot span several lines)
    'cookie': 'aQQ_ajkguid=D26A0EA3-AFE0-B782-0229-009257B63881; _ga=GA1.2.2004679383.1554945590.....',

    'referer': 'https://xiangyang.anjuke.com/sale/',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-fetch-site': 'same-origin',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36'
  }
}

let json = [] // accumulates the listings from every page
let initUrl = 'https://xiangyang.anjuke.com/sale'
let count = 1 // current page number

let RequestAnjuke = (opt) => {
  return new Promise((resolve, reject) => {
    request(opt, function (error, response, body) {
      if (error) {
        return reject(error)
      }
      resolve(body)
    })
  }).then(body => {
    let $ = cheerio.load(body)
    // Each <li> under #houselist-mod-new is one listing
    $('#houselist-mod-new').find('li').each(function (i, el) {
      let details = $(el).children('.house-details')
      let data = {}
      data.url = $(el).children('.item-img').children('img').attr('src')
      data.data = {
        'house-title': details.children('.house-title').text().replace(/\s+/g, ''),
        'house-title-href': details.children('.house-title').children('a').attr('href'),
        'details-item': details.children('.details-item').text().replace(/\s+/g, ''),
        'house-details': details.children('.details-item1').text().replace(/\s+/g, ''),
        'tags-span': details.children('.tags-bottom').text().replace(/\s+/g, ''),
      }
      data.price = $(el).children('.pro-price').children('.price-det').text().replace(/\s+/g, '万')
      data.average_price = $(el).children('.pro-price').children('.unit-price').text()
      json.push(data)
    })
    if (count === 50) {
      // Last page reached: write everything collected so far to disk
      let house_data = {
        data: json,
        len: json.length
      }
      fs.writeFile('house_details.txt', JSON.stringify(house_data), error => {
        if (error) return console.log('Failed to write file: ' + error.message)
        console.log('File written successfully')
      })
      return
    }
    count++
    // The page just fetched becomes the referer of the next request
    opt.headers.referer = opt.url
    opt.url = initUrl + '/p' + count + '/'
    console.log(opt.url + '----------------------' + opt.headers.referer)
    return RequestAnjuke(opt)
  }).catch(err => {
    console.log(err)
  })
}

RequestAnjuke(opt)

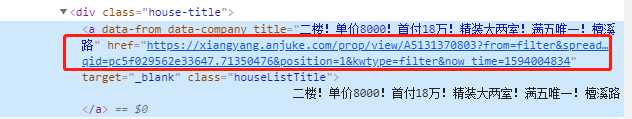

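Opening F12 and inspecting the elements shows that the href is the link to the listing's detail page. Use cheerio to pull that URL out of the content. You can then either request it right away and combine the detailed information with the listing, or store it and process it later. In the one test I ran, the link appeared to expire after a while, so act on it soon after extracting it.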
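Before requesting or storing the extracted links, it can help to clean them up. The following is a minimal sketch, assuming the href values have already been collected with cheerio; `normalizeLinks` is a hypothetical helper and the `/prop/view/...` paths are made-up placeholders, not real Anjuke URLs.

```javascript
// Hypothetical helper: turns raw href values into absolute, de-duplicated URLs.
function normalizeLinks(hrefs, baseUrl) {
  const seen = new Set()
  for (const href of hrefs) {
    if (!href) continue // attr('href') yields undefined when the attribute is missing
    try {
      // Resolves relative links against the page they were found on
      seen.add(new URL(href, baseUrl).href)
    } catch (err) {
      // Skip values that cannot be parsed as a URL
    }
  }
  return [...seen]
}

const links = normalizeLinks(
  ['/prop/view/1', 'https://xiangyang.anjuke.com/prop/view/1', undefined],
  'https://xiangyang.anjuke.com/sale/'
)
console.log(links)
```

Since the links seem to be short-lived, a list like this is best consumed in the same run rather than saved for later.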

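I crawl page by page using recursion. Paging through by hand, I counted 50 pages in total, so I use a count value to drive the pagination. I will upload the code to GitHub when I have some free time.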
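The same page-by-page crawl can also be written as a plain loop instead of recursion. This is a sketch under stated assumptions rather than the code used in this post: `pageUrl` and `crawlPages` are hypothetical names, and `fetchPage` stands in for whatever function requests one page and returns its listings.

```javascript
// Base listing URL from the article; page 1 is the plain URL, later pages append /pN/.
const BASE_URL = 'https://xiangyang.anjuke.com/sale'

function pageUrl(page) {
  return page === 1 ? `${BASE_URL}/` : `${BASE_URL}/p${page}/`
}

// fetchPage is a hypothetical async function: (url, referer) => array of listings.
async function crawlPages(fetchPage, lastPage) {
  const listings = []
  let referer = pageUrl(1)
  for (let page = 1; page <= lastPage; page++) {
    const url = pageUrl(page)
    const items = await fetchPage(url, referer)
    listings.push(...items)
    referer = url // the page just fetched becomes the next referer
  }
  return listings
}

// Usage with a stand-in fetcher that makes no network request
crawlPages(async (url, referer) => [{ url, referer }], 3)
  .then(items => console.log(items.length + ' listings collected'))
```

Passing the fetcher in as a parameter keeps the paging logic separate from the HTTP code, so the loop can be tried out without touching the site.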


If you repost this article, please keep the source: https://bianchenghao.cn/37998.html