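Music Recommendation System

Data Acquisition

Solving a problem with any machine learning algorithm starts with the data: where does it come from?

For companies such as Kugou Music or NetEase Cloud Music, users' favorites and play data are directly available in the form of playlists.

Data Description

Playlist format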

{
  "result": {
    "id": 111450065,
    "status": 0,
    "commentThreadId": "A_PL_0_111450065",
    "trackCount": 120,
    "updateTime": 1460164523907,
    "commentCount": 227,
    "ordered": true,
    "anonimous": false,
    "highQuality": false,
    "subscribers": [],
    "playCount": 687070,
    "trackNumberUpdateTime": 1460164523907,
    "createTime": 1443528317662,
    "name": "带本书去旅行吧,人生最美好的时光在路上。",
    "cloudTrackCount": 0,
    "shareCount": 149,
    "adType": 0,
    "trackUpdateTime": 1494134249465,
    "userId": 39256799,
    "coverImgId": 3359008023885470,
    "coverImgUrl": "http://p1.music.126.net/2ZFcuSJ6STR8WgzkIi2U-Q==/3359008023885470.jpg",
    "artists": null,
    "newImported": false,
    "subscribed": false,
    "privacy": 0,
    "specialType": 0,
    "description": "现在是一年中最美好的时节,世界上很多地方都不冷不热,有湛蓝的天空和清冽的空气,正是出游的好时光。长假将至,你是不是已经收拾行装准备出发了?行前焦虑症中把衣服、洗漱用品、充电器之类东西忙忙碌碌地丢进箱子,打进背包的时候,我打赌你肯定会留个位置给一位好朋友:书。不是吗?不管是打发时间,小读怡情,还是为了做好攻略备不时之需,亦或是为了小小地装上一把,你都得有一本书傍身呀。读大仲马,我是复仇的伯爵;读柯南道尔,我穿梭在雾都的暗夜;读村上春树,我是寻羊的冒险者;读马尔克斯,目睹百年家族兴衰;读三毛,让灵魂在撒哈拉流浪;读老舍,嗅着老北京的气息;读海茵莱茵,于科幻狂流遨游;读卡夫卡,在城堡中审判……读书的孩子不会孤单,读书的孩子永远幸福。",
    "subscribedCount": 10882,
    "totalDuration": 0,
    "tags": ["旅行", "钢琴", "安静"],
    "creator": {
      "followed": false,
      "remarkName": null,
      "expertTags": ["古典", "民谣", "华语"],
      "userId": 39256799,
      "authority": 0,
      "userType": 0,
      "gender": 1,
      "backgroundImgId": 3427177752524551,
      "city": 360600,
      "mutual": false,
      "avatarUrl": "http://p1.music.126.net/TLRTrJpOM5lr68qJv1IyGQ==/1400777825738419.jpg",
      "avatarImgIdStr": "1400777825738419",
      "detailDescription": "",
      "province": 360000,
      "description": "",
      "birthday": 637516800000,
      "nickname": "有梦人生不觉寒",
      "vipType": 0,
      "avatarImgId": 1400777825738419,
      "defaultAvatar": false,
      "djStatus": 0,
      "accountStatus": 0,
      "backgroundImgIdStr": "3427177752524551",
      "backgroundUrl": "http://p1.music.126.net/LS96S_6VP9Hm7-T447-X0g==/3427177752524551.jpg",
      "signature": "漫无目的的乱听,听着,听着,竟然灵魂出窍了。更多精品音乐美图分享请加我微信hu272367751。微信是我的精神家园,有我最真诚的分享。",
      "authStatus": 0
    },
    "tracks": [{song 1}, {song 2}, ...]
  }
}

Song format

{
  "id": 29738501,
  "name": "跟着你到天边 钢琴版",
  "duration": 174001,
  "hearTime": 0,
  "commentThreadId": "R_SO_4_29738501",
  "score": 40,
  "mvid": 0,
  "hMusic": null,
  "disc": "",
  "fee": 0,
  "no": 1,
  "rtUrl": null,
  "ringtone": null,
  "rtUrls": [],
  "rurl": null,
  "status": 0,
  "ftype": 0,
  "mp3Url": "http://m2.music.126.net/vrVa20wHs8iIe0G8Oe7I9Q==/3222668581877701.mp3",
  "audition": null,
  "playedNum": 0,
  "copyrightId": 0,
  "rtype": 0,
  "crbt": null,
  "popularity": 40,
  "dayPlays": 0,
  "alias": [],
  "copyFrom": "",
  "position": 1,
  "starred": false,
  "starredNum": 0,
  "bMusic": {
    "name": "跟着你到天边 钢琴版",
    "extension": "mp3",
    "volumeDelta": 0.0553125,
    "sr": 44100,
    "dfsId": 3222668581877701,
    "playTime": 174001,
    "bitrate": 96000,
    "id": 52423394,
    "size": 2089713
  },
  "lMusic": {
    "name": "跟着你到天边 钢琴版",
    "extension": "mp3",
    "volumeDelta": 0.0553125,
    "sr": 44100,
    "dfsId": 3222668581877701,
    "playTime": 174001,
    "bitrate": 96000,
    "id": 52423394,
    "size": 2089713
  },
  "mMusic": {
    "name": "跟着你到天边 钢琴版",
    "extension": "mp3",
    "volumeDelta": -0.000265076,
    "sr": 44100,
    "dfsId": 3222668581877702,
    "playTime": 174001,
    "bitrate": 128000,
    "id": 52423395,
    "size": 2785510
  },
  "artists": [
    {
      "img1v1Url": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
      "name": "群星",
      "briefDesc": "",
      "albumSize": 0,
      "img1v1Id": 0,
      "musicSize": 0,
      "alias": [],
      "picId": 0,
      "picUrl": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
      "trans": "",
      "id": 122455
    }
  ],
  "album": {
    "id": 3054006,
    "status": 2,
    "type": null,
    "tags": "",
    "size": 69,
    "blurPicUrl": "http://p1.music.126.net/2XLMVZhzVZCOunaRCOQ7Bg==/3274345629219531.jpg",
    "copyrightId": 0,
    "name": "热门华语248",
    "companyId": 0,
    "songs": [],
    "description": "",
    "pic": 3274345629219531,
    "commentThreadId": "R_AL_3_3054006",
    "publishTime": 1388505600004,
    "briefDesc": "",
    "company": "",
    "picId": 3274345629219531,
    "alias": [],
    "picUrl": "http://p1.music.126.net/2XLMVZhzVZCOunaRCOQ7Bg==/3274345629219531.jpg",
    "artists": [
      {
        "img1v1Url": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
        "name": "群星",
        "briefDesc": "",
        "albumSize": 0,
        "img1v1Id": 0,
        "musicSize": 0,
        "alias": [],
        "picId": 0,
        "picUrl": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
        "trans": "",
        "id": 122455
      }
    ],
    "artist": {
      "img1v1Url": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
      "name": "",
      "briefDesc": "",
      "albumSize": 0,
      "img1v1Id": 0,
      "musicSize": 0,
      "alias": [],
      "picId": 0,
      "picUrl": "http://p1.music.126.net/6y-UleORITEDbvrOLV0Q8A==/5639395138885805.jpg",
      "trans": "",
      "id": 0
    }
  }
}

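Data Parsing

The raw data and its description are shown here because they contain a great deal of information (genre, artist, play count, song duration, release date, ...). With a little thought, you will likely see how to use this information to further improve the recommender system.

Here we again use only the most basic music information. We assume that songs in the same playlist are fairly similar to one another, and that they are all liked by the user who created the playlist.

Raw data => playlist data

Extract 4 playlist-level fields: playlist name, playlist id, subscription count, and category (tags)

Extract 4 song-level fields: song id, song name, artist, and song popularity

Organize them into the following format, one playlist per line (the header is name##tags##playlist id##subscription count, followed by the playlist's songs):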

漫步西欧小镇上##小语种,旅行##69413685##474 18682332::Wäg vo dir::Joy Amelie::70.0 4335372::Only When I Sleep::The Corrs::60.0 2925502::Si Seulement::Lynnsha::100.0 21014930::Tu N’As Pas Cherché…::La Grande Sophie::100.0 20932638::Du behöver aldrig mer vara rädd::Lasse Lindh::25.0 17100518::Silent Machine::Cat Power::60.0 3308096::Kor pai kon diew : ชอไปคนเดียว::Palmy::5.0 1648250::les choristes::Petits Chanteurs De Saint Marc::100.0 4376212::Paddy’s Green Shamrock Shore::The High Kings::25.0 2925400::A Todo Color::Las Escarlatinas::95.0 19711402::Comme Toi::Vox Angeli::75.0 3977526::Stay::Blue Cafe::100.0 2538518::Shake::Elize::85.0 2866799::Mon Ange::Jena Lee::85.0 5191949::Je M’appelle Helene::Hélène Rolles::85.0 20036323::Ich Lieb’ Dich Immer Noch So Sehr::Kate & Ben::100.0

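Playlist data => recommender-system format data

Mainstream Python recommender-system frameworks support the MovieLens dataset format as their most basic input. In that format, each rating record has the form user item rating timestamp.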
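The later code calls to_inner_uid(playlist_id), so it treats each playlist as a user. Each song in the playlist is then an item that playlist "rated". Playlists carry no explicit ratings, so the minimal sketch below makes some assumptions:

- Every pair gets a constant implicit rating and a dummy timestamp. IMPLICIT_RATING and DUMMY_TIMESTAMP are hypothetical names and values.
- The separators match the parsing code in this article: ## inside the playlist header, tabs between the header and the songs, and ::: between a song's fields.

```python
# Hypothetical constants: playlists have no explicit ratings, so every
# (playlist, song) pair gets the same implicit rating and a dummy timestamp.
IMPLICIT_RATING = 1.0
DUMMY_TIMESTAMP = 1300000


def playlist_line_to_rows(line, sep=","):
    """Turn one playlist line into 'playlist_id,song_id,rating,timestamp' rows.

    Assumed layout: name##tags##playlist_id##subscribed_count, then
    tab-separated songs of the form song_id:::name:::artist:::popularity.
    """
    if not line.strip():
        return []  # ignore blank lines
    fields = line.strip().split("\t")
    _name, _tags, playlist_id, _subscribed = fields[0].split("##")
    rows = []
    for song in fields[1:]:
        parts = song.split(":::")
        if len(parts) != 4:
            continue  # skip malformed song records
        rows.append(sep.join([playlist_id, parts[0],
                              str(IMPLICIT_RATING), str(DUMMY_TIMESTAMP)]))
    return rows


def convert_file(in_path, out_path):
    """Write one user-item-rating-timestamp row per (playlist, song) pair."""
    with open(in_path, encoding="utf-8") as fin, \
            open(out_path, "w", encoding="utf-8") as fout:
        for line in fin:
            for row in playlist_line_to_rows(line):
                fout.write(row + "\n")
```

Writing the output to popular_music_suprise_format.txt matches the file that the later examples read with sep=','. The input file name you pass to convert_file depends on your own data.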

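A recommendation project = offline modelling + online prediction

1) Offline: Python scripts

2) Online: efficiency first, C++/Java

Principle: precompute offline everything that can be precomputed. Ideally, the online side is nothing more than a key-value dictionary lookup.

1. Recommendations for a user: e.g. NetEase Cloud Music's daily recommendations (30 songs / 7 songs)

2. Recommendations for a song: while you are listening to a song, find "similar songs"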
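Here is a toy illustration of the offline/online split for the song-to-song case. The offline step turns playlists into a plain dict that maps each song to its most similar songs. The online step is a single lookup.

As a stand-in for a real model, this sketch scores similarity by how many playlists two songs share. That follows the assumption above that songs in the same playlist are similar. It is not the Surprise-based method used later, and build_similar_songs and similar_songs are hypothetical names.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_similar_songs(playlists, k=10):
    """Offline step: map each song to the k songs it shares the most
    playlists with. playlists: iterable of lists of song ids."""
    co_counts = defaultdict(Counter)
    for songs in playlists:
        for a, b in combinations(sorted(set(songs)), 2):
            co_counts[a][b] += 1
            co_counts[b][a] += 1
    # Rank by co-occurrence count, breaking ties by song id for determinism
    return {
        song: [other for other, _ in
               sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))[:k]]
        for song, counter in co_counts.items()
    }


def similar_songs(table, song_id):
    """Online step: a single dictionary lookup (empty list if unknown)."""
    return table.get(song_id, [])


table = build_similar_songs([["s1", "s2", "s3"], ["s1", "s2"], ["s2", "s4"]], k=2)
print(similar_songs(table, "s1"))  # ['s2', 's3']
```

In a real system, the resulting dict would be exported to whatever key-value store the online C++/Java service reads. That export step is not shown.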

Saving playlist and song information for later use

We need to save the playlist id => playlist name and song id => song name mappings for later use.

# coding: utf-8
import pickle


def parse_playlist_get_info(in_line, playlist_dic, song_dic):
    """Parse one playlist line and fill the playlist and song mapping dicts."""
    contents = in_line.strip().split("\t")
    name, tags, playlist_id, subscribed_count = contents[0].split("##")
    playlist_dic[playlist_id] = name
    for song in contents[1:]:
        try:
            song_id, song_name, artist, popularity = song.split(":::")
            song_dic[song_id] = song_name + "\t" + artist
        except ValueError:
            # The song record does not have exactly 4 fields
            print("song format error")
            print(song + "\n")


def parse_file(in_file, out_playlist, out_song):
    # Mapping from playlist id to playlist name
    playlist_dic = {}
    # Mapping from song id to song name (plus artist)
    song_dic = {}
    with open(in_file, encoding="utf-8") as f:
        for line in f:
            parse_playlist_get_info(line, playlist_dic, song_dic)
    # Save the mapping dicts to binary files
    with open(out_playlist, "wb") as f:
        pickle.dump(playlist_dic, f)
    # Reload later with: playlist_dic = pickle.load(open("playlist.pkl", "rb"))
    with open(out_song, "wb") as f:
        pickle.dump(song_dic, f)
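Below is a small round-trip check of the save/load step, written for Python 3 with only the standard library. save_mapping, load_mapping and invert_mapping are hypothetical helpers.

The code also builds the reverse name => id mapping that later sections reconstruct. If two playlists share a name, only one of their ids survives in that reverse mapping.

```python
import os
import pickle
import tempfile


def save_mapping(mapping, path):
    """Pickle a dict to path (binary mode, as in parse_file)."""
    with open(path, "wb") as f:
        pickle.dump(mapping, f)


def load_mapping(path):
    """Load a pickled dict back from path."""
    with open(path, "rb") as f:
        return pickle.load(f)


def invert_mapping(id_to_name):
    """Build name -> id; if two ids share a name, the last one wins."""
    return {name: id_ for id_, name in id_to_name.items()}


# Round trip with a tiny example taken from the sample playlist line
playlist_dic = {"69413685": "漫步西欧小镇上"}
path = os.path.join(tempfile.mkdtemp(), "playlist.pkl")
save_mapping(playlist_dic, path)
restored = load_mapping(path)
name_to_id = invert_mapping(restored)
print(name_to_id["漫步西欧小镇上"])  # 69413685
```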

Surprise, a Python recommender-system library

1. Easy to use, and supports a variety of recommendation algorithms:

Baseline algorithms

Neighborhood methods (collaborative filtering)

Matrix factorization-based methods (SVD, PMF, SVD++, NMF)

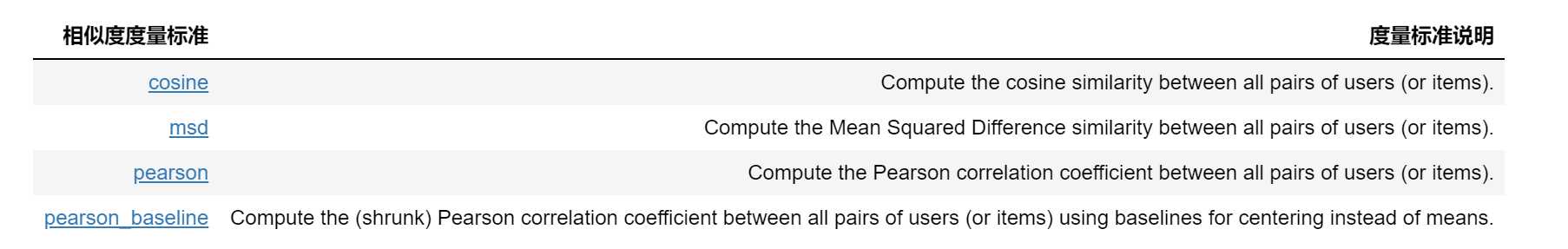

2. The neighborhood (collaborative filtering) methods can use different similarity measures

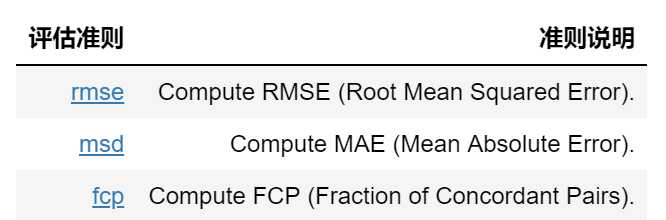

3. Supports different evaluation metrics, such as RMSE, MAE and FCP
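To make the similarity measures concrete, here are textbook versions of three of them, computed over the items both users rated. In Surprise, a measure is selected by name through sim_options, for example 'cosine', 'msd', 'pearson' or 'pearson_baseline'.

The functions below are an illustrative sketch only. Surprise's own implementations may differ in details such as shrinkage and minimum support.

```python
import math


def _common(ru, rv):
    """Items rated by both users; ru, rv map item -> rating."""
    return [i for i in ru if i in rv]


def cosine_sim(ru, rv):
    common = _common(ru, rv)
    if not common:
        return 0.0
    num = sum(ru[i] * rv[i] for i in common)
    den = (math.sqrt(sum(ru[i] ** 2 for i in common)) *
           math.sqrt(sum(rv[i] ** 2 for i in common)))
    return num / den if den else 0.0


def msd_sim(ru, rv):
    """1 / (mean squared difference + 1): identical ratings give 1.0."""
    common = _common(ru, rv)
    if not common:
        return 0.0
    msd = sum((ru[i] - rv[i]) ** 2 for i in common) / len(common)
    return 1.0 / (msd + 1.0)


def pearson_sim(ru, rv):
    """Correlation of mean-centered ratings (means over common items)."""
    common = _common(ru, rv)
    if not common:
        return 0.0
    mu_u = sum(ru[i] for i in common) / len(common)
    mu_v = sum(rv[i] for i in common) / len(common)
    num = sum((ru[i] - mu_u) * (rv[i] - mu_v) for i in common)
    den = (math.sqrt(sum((ru[i] - mu_u) ** 2 for i in common)) *
           math.sqrt(sum((rv[i] - mu_v) ** 2 for i in common)))
    return num / den if den else 0.0
```

This matters for the playlist data used later, where every rating is 1.0. In this sketch, msd_sim returns 1.0 for any pair with overlapping items and pearson_sim returns 0.0, so the choice of measure has a large effect there.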

Usage examples

Basic usage

# Any of the algorithms listed above can be used here
from surprise import SVD
from surprise import Dataset
from surprise.model_selection import cross_validate

# Load the built-in MovieLens 100k dataset (downloaded on first use)
data = Dataset.load_builtin('ml-100k')
# Try SVD matrix factorization
algo = SVD()
# Evaluate with 3-fold cross-validation and print RMSE/MAE for each fold
perf = cross_validate(algo, data, measures=['RMSE', 'MAE'], cv=3, verbose=True)
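For each fold, RMSE and MAE are computed over that fold's test ratings. RMSE is the square root of the mean squared prediction error, and MAE is the mean absolute prediction error. Lower is better for both.

Below is a minimal sketch of the two formulas. Surprise ships its own versions in surprise.accuracy.

```python
import math


def rmse(pairs):
    """Root mean squared error; pairs: iterable of (true, predicted)."""
    pairs = list(pairs)
    return math.sqrt(sum((t - p) ** 2 for t, p in pairs) / len(pairs))


def mae(pairs):
    """Mean absolute error; pairs: iterable of (true, predicted)."""
    pairs = list(pairs)
    return sum(abs(t - p) for t, p in pairs) / len(pairs)


pairs = [(4, 3.5), (2, 3.0), (5, 5.0)]
print(mae(pairs))  # 0.5
```

RMSE squares the errors before averaging, so it penalizes a few large mistakes more heavily than MAE does.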

Loading your own dataset

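import os
from surprise import SVD, Dataset, Reader, accuracy
from surprise.model_selection import KFold

# Path to the data file
file_path = os.path.expanduser('~/.surprise_data/ml-100k/ml-100k/u.data')
# Tell the reader what each line of the file looks like
reader = Reader(line_format='user item rating timestamp', sep='\t')
# Load the data
data = Dataset.load_from_file(file_path, reader=reader)
# Split into 5 folds for cross-validation, then fit and score each fold
kf = KFold(n_splits=5)
algo = SVD()
for trainset, testset in kf.split(data):
    algo.fit(trainset)
    accuracy.rmse(algo.test(testset))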
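Conceptually, k-fold splitting works in three steps:

1. Shuffle the ratings.
2. Cut them into k folds.
3. Use each fold once as the test set while training on the other folds.

Below is a plain-Python sketch of that idea. It is not Surprise's implementation, and k_fold_splits is a hypothetical name.

```python
import random


def k_fold_splits(ratings, n_folds=5, seed=0):
    """Yield (train, test) lists; every rating lands in exactly one test fold."""
    shuffled = list(ratings)
    random.Random(seed).shuffle(shuffled)  # fixed seed for reproducibility
    folds = [shuffled[i::n_folds] for i in range(n_folds)]
    for k in range(n_folds):
        test = folds[k]
        train = [r for j, fold in enumerate(folds) if j != k for r in fold]
        yield train, test


ratings = [("u%d" % i, "i%d" % i, 1.0) for i in range(12)]
splits = list(k_fold_splits(ratings, n_folds=5))
```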

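Tuning algorithm parameters (for better recommendations)

Many of these algorithms (SVD, for example) are trained with SGD or similar optimizers, so they have hyperparameters that affect the final result. Just as with sklearn, we can use grid-search cross-validation (GridSearchCV) to choose the best parameters. A simple example: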

from surprise import SVD, Dataset
from surprise.model_selection import GridSearchCV

# Parameter grid to search over
param_grid = {'n_epochs': [5, 10], 'lr_all': [0.002, 0.005],
              'reg_all': [0.4, 0.6]}
# Grid-search cross-validation, scored by RMSE and FCP
grid_search = GridSearchCV(SVD, param_grid, measures=['rmse', 'fcp'], cv=3)
# Find the best parameters on the dataset
data = Dataset.load_builtin('ml-100k')
grid_search.fit(data)

# (The outputs below are from the original run; exact values vary with the random folds.)
# Best RMSE score
print(grid_search.best_score['rmse'])
# >>> 0.96117566386
# Parameters that gave the best RMSE
print(grid_search.best_params['rmse'])
# >>> {'reg_all': 0.4, 'lr_all': 0.005, 'n_epochs': 10}
# Best FCP score
print(grid_search.best_score['fcp'])
# >>> 0.702279736531
# Parameters that gave the best FCP
print(grid_search.best_params['fcp'])
# >>> {'reg_all': 0.6, 'lr_all': 0.005, 'n_epochs': 10}
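Grid search fits and scores every combination in param_grid on every fold. The grid above has 2 x 2 x 2 = 8 combinations, so with 3 folds that is 24 fits.

The sketch below shows only the enumeration and selection logic. A stand-in score_fn takes the place of cross-validation, and expand_grid and best_params are hypothetical helpers. Note that RMSE is minimized while FCP is maximized.

```python
from itertools import product


def expand_grid(param_grid):
    """Every combination of a dict of candidate lists, as a list of dicts."""
    keys = sorted(param_grid)
    return [dict(zip(keys, values))
            for values in product(*(param_grid[k] for k in keys))]


def best_params(param_grid, score_fn, lower_is_better=True):
    """Score every combination with score_fn(params); return (score, params)."""
    scored = [(score_fn(p), p) for p in expand_grid(param_grid)]
    pick = min if lower_is_better else max
    return pick(scored, key=lambda sp: sp[0])


param_grid = {'n_epochs': [5, 10], 'lr_all': [0.002, 0.005],
              'reg_all': [0.4, 0.6]}
print(len(expand_grid(param_grid)))  # 8
```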

Training a model on the dataset

Building a model with collaborative filtering and making predictions
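KNNWithMeans is used in the MovieLens example below. It predicts a rating by starting from the user's mean rating. It then adds the similarity-weighted, mean-centered ratings that the k most similar users gave the item:

r_hat(u, i) = mu_u + sum_v sim(u, v) * (r_vi - mu_v) / sum_v sim(u, v)

Below is a dict-based sketch of that formula; knn_with_means_predict is a hypothetical name. Like Surprise, it skips neighbors with non-positive similarity, but it is not Surprise's code. It falls back to the user's mean when no neighbor qualifies.

```python
def knn_with_means_predict(u, i, ratings, sim, k=40):
    """Estimate user u's rating of item i with the KNNWithMeans formula.

    ratings: dict user -> dict item -> rating
    sim: dict (u, v) -> similarity between users u and v
    """
    mean = {x: sum(r.values()) / len(r) for x, r in ratings.items()}
    # Users other than u who rated i, ranked by similarity to u
    candidates = sorted(((sim.get((u, v), 0.0), v) for v in ratings
                         if v != u and i in ratings[v]), reverse=True)
    num = den = 0.0
    for s, v in candidates[:k]:
        if s > 0:  # only positively similar neighbors contribute
            num += s * (ratings[v][i] - mean[v])
            den += s
    if den == 0:
        return mean[u]  # no usable neighbor: fall back to u's mean
    return mean[u] + num / den


ratings = {"u1": {"a": 4, "b": 2},
           "u2": {"a": 5, "b": 3, "c": 4},
           "u3": {"a": 1, "c": 2}}
sim = {("u1", "u2"): 0.9, ("u1", "u3"): 0.1}
print(knn_with_means_predict("u1", "c", ratings, sim))  # about 3.05
```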

MovieLens example

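# Any of the algorithms listed above can be used here
from surprise import KNNWithMeans
from surprise import Dataset
from surprise.model_selection import cross_validate

# Load the built-in MovieLens 100k dataset
data = Dataset.load_builtin('ml-100k')
# Try the KNNWithMeans collaborative filtering algorithm
algo = KNNWithMeans()
# Evaluate with 3-fold cross-validation and print the results
perf = cross_validate(algo, data, measures=['RMSE', 'MAE'], cv=3, verbose=True)

The following snippet shows how, once a collaborative filtering model has been built, to retrieve the items most similar to a given item. It relies mainly on the algo.get_neighbors() function.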

import os
import io

from surprise import KNNBaseline
from surprise import Dataset


def read_item_names():
    """Return the movie id -> movie name and movie name -> movie id mappings."""
    file_name = (os.path.expanduser('~') +
                 '/.surprise_data/ml-100k/ml-100k/u.item')
    rid_to_name = {}
    name_to_rid = {}
    with io.open(file_name, 'r', encoding='ISO-8859-1') as f:
        for line in f:
            line = line.split('|')
            rid_to_name[line[0]] = line[1]  # id -> name
            name_to_rid[line[1]] = line[0]  # name -> id
    return rid_to_name, name_to_rid


# First, let the algorithm compute the item-item similarities
data = Dataset.load_builtin('ml-100k')
trainset = data.build_full_trainset()  # build the (sparse) training set from all ratings
sim_options = {'name': 'pearson_baseline', 'user_based': False}
algo = KNNBaseline(sim_options=sim_options)
algo.fit(trainset)

# Get the movie name -> id and id -> name mappings
rid_to_name, name_to_rid = read_item_names()

# Raw item id of Toy Story
toy_story_raw_id = name_to_rid['Toy Story (1995)']
print(toy_story_raw_id)
# rid: raw id from the data file; iid: inner id used by Surprise
toy_story_inner_id = algo.trainset.to_inner_iid(toy_story_raw_id)
print(toy_story_inner_id)
# Find the 10 nearest neighbors
toy_story_neighbors = algo.get_neighbors(toy_story_inner_id, k=10)
print(toy_story_neighbors)
# Map the neighbors' inner ids back to raw ids, then to movie names
toy_story_neighbors = (algo.trainset.to_raw_iid(inner_id)
                       for inner_id in toy_story_neighbors)
toy_story_neighbors = (rid_to_name[rid]
                       for rid in toy_story_neighbors)
print()
print('The 10 nearest neighbors of Toy Story are:')
for movie in toy_story_neighbors:
    print(movie)

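get_neighbors works on the similarity matrix computed during training. Roughly, it ranks one row of that matrix by similarity and returns the inner ids of the top k, excluding the item itself.

Below is a minimal sketch of that step over a plain list-of-lists matrix. get_top_k_neighbors is hypothetical and may break ties differently from Surprise.

```python
import heapq


def get_top_k_neighbors(sim_matrix, inner_id, k):
    """Inner ids of the k rows most similar to inner_id (itself excluded).

    sim_matrix: square list of lists, sim_matrix[a][b] = similarity of a and b.
    """
    row = sim_matrix[inner_id]
    others = ((s, j) for j, s in enumerate(row) if j != inner_id)
    return [j for _, j in heapq.nlargest(k, others)]


sim = [[1.0, 0.2, 0.9, 0.5],
       [0.2, 1.0, 0.1, 0.3],
       [0.9, 0.1, 1.0, 0.4],
       [0.5, 0.3, 0.4, 1.0]]
print(get_top_k_neighbors(sim, 0, 2))  # [2, 3]
```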

Music prediction example

import os
import pickle

from surprise import KNNBaseline, Reader
from surprise import Dataset

# Rebuild the playlist id -> playlist name mapping
with open("popular_playlist.pkl", "rb") as f:
    id_name_dic = pickle.load(f)
print("Loaded the playlist id -> playlist name mapping...")
# Rebuild the playlist name -> playlist id mapping
name_id_dic = {}
for playlist_id in id_name_dic:
    name_id_dic[id_name_dic[playlist_id]] = playlist_id
print("Loaded the playlist name -> playlist id mapping...")

file_path = os.path.expanduser('./popular_music_suprise_format.txt')
# Specify the file format
reader = Reader(line_format='user item rating timestamp', sep=',')
# Read the data from the file
music_data = Dataset.load_from_file(file_path, reader=reader)
# Build the training set (the default KNNBaseline below is user-based,
# so it computes playlist-to-playlist similarities)
print("Building the dataset...")
trainset = music_data.build_full_trainset()
#sim_options = {'name': 'pearson_baseline', 'user_based': False}

# Find the nearest users (here, the users are playlists)
print("Training the model...")
#sim_options = {'user_based': False}
#algo = KNNBaseline(sim_options=sim_options)
algo = KNNBaseline()
algo.fit(trainset)

current_playlist = list(name_id_dic.keys())[39]
print("Playlist name", current_playlist)

# Get the neighbors
# Map the playlist name to its id
playlist_id = name_id_dic[current_playlist]
print("Playlist id", playlist_id)
# Get the corresponding inner user id => to_inner_uid
playlist_inner_id = algo.trainset.to_inner_uid(playlist_id)
print("Inner id", playlist_inner_id)

playlist_neighbors = algo.get_neighbors(playlist_inner_id, k=10)

# Convert the neighbors' inner ids back to playlist names
# Map back with to_raw_uid
playlist_neighbors = (algo.trainset.to_raw_uid(inner_id)
                      for inner_id in playlist_neighbors)
playlist_neighbors = (id_name_dic[playlist_id]
                      for playlist_id in playlist_neighbors)
print()
print("The 10 playlists closest to 《", current_playlist, "》 are:\n")
for playlist in playlist_neighbors:
    print(playlist, algo.trainset.to_inner_uid(name_id_dic[playlist]))
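The to_inner_uid / to_raw_uid calls above exist because Surprise re-indexes raw ids (the strings read from the data file) into consecutive integers. The hypothetical IdMap class below sketches that bookkeeping, assigning inner ids in order of first appearance.

It also shows why playlist_id must be looked up as exactly the same string that appears in the data file.

```python
class IdMap:
    """Hypothetical helper: raw id <-> consecutive inner id, in order of
    first appearance (a sketch of the idea, not Surprise's Trainset)."""

    def __init__(self):
        self.raw_to_inner = {}
        self.inner_to_raw = []

    def add(self, raw_id):
        if raw_id not in self.raw_to_inner:
            self.raw_to_inner[raw_id] = len(self.inner_to_raw)
            self.inner_to_raw.append(raw_id)
        return self.raw_to_inner[raw_id]

    def to_inner(self, raw_id):
        return self.raw_to_inner[raw_id]  # KeyError for unknown raw ids

    def to_raw(self, inner_id):
        return self.inner_to_raw[inner_id]


# Rows of (playlist_id, song_id) as read from the data file (strings)
rows = [("p1", "s1"), ("p1", "s2"), ("p2", "s1")]
users = IdMap()
for playlist_id, _ in rows:
    users.add(playlist_id)
print(users.to_inner("p2"), users.to_raw(0))  # 1 p1
```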

Predicting for a user

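import pickle

# Rebuild the song id -> song name mapping
with open("popular_song.pkl", "rb") as f:
    song_id_name_dic = pickle.load(f)
print("Loaded the song id -> song name mapping...")
# Rebuild the song name -> song id mapping
song_name_id_dic = {}
for song_id in song_id_name_dic:
    song_name_id_dic[song_id_name_dic[song_id]] = song_id
print("Loaded the song name -> song id mapping...")

# The user (playlist) with inner id 4
user_inner_id = 4
user_rating = trainset.ur[user_inner_id]
items = [item_inner_id for item_inner_id, _ in user_rating]
# predict() expects raw ids, so convert the inner ids first
user_raw_id = algo.trainset.to_raw_uid(user_inner_id)
for song in items:
    song_raw_id = algo.trainset.to_raw_iid(song)
    print(algo.predict(user_raw_id, song_raw_id, r_ui=1), song_id_name_dic[song_raw_id])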
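The loop above only re-scores songs that are already in the playlist, which is a useful sanity check. To actually recommend, one would score the songs the playlist does not yet contain and keep the best ones.

Below is a sketch with a pluggable predict_fn; recommend_top_n is a hypothetical helper. With Surprise, predict_fn could be lambda u, i: algo.predict(u, i).est, called with raw ids.

```python
import heapq


def recommend_top_n(user, all_items, seen_items, predict_fn, n=10):
    """Score every item the user has not interacted with; keep the n best.

    predict_fn(user, item) -> estimated rating (higher is better).
    """
    candidates = (i for i in all_items if i not in seen_items)
    scored = ((predict_fn(user, i), i) for i in candidates)
    return [i for _, i in heapq.nlargest(n, scored)]


scores = {"a": 0.2, "b": 0.9, "c": 0.5, "d": 0.7}
print(recommend_top_n("u", scores, {"b"}, lambda u, i: scores[i], n=2))  # ['d', 'c']
```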

Predicting with matrix factorization

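# Using NMF (non-negative matrix factorization)
import os
import pickle

from surprise import NMF, Reader
from surprise import Dataset

file_path = os.path.expanduser('./popular_music_suprise_format.txt')
# Specify the file format
reader = Reader(line_format='user item rating timestamp', sep=',')
# Read the data from the file
music_data = Dataset.load_from_file(file_path, reader=reader)
# Build the training set and fit the model
algo = NMF()
trainset = music_data.build_full_trainset()
algo.fit(trainset)

# Song id -> song name mapping saved earlier
with open("popular_song.pkl", "rb") as f:
    song_id_name_dic = pickle.load(f)

user_inner_id = 4
user_rating = trainset.ur[user_inner_id]
items = [item_inner_id for item_inner_id, _ in user_rating]
for song in items:
    # predict() takes raw ids, so convert the inner ids back first
    print(algo.predict(algo.trainset.to_raw_uid(user_inner_id), algo.trainset.to_raw_iid(song), r_ui=1),
          song_id_name_dic[algo.trainset.to_raw_iid(song)])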
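For intuition about what NMF learns, below is the textbook Lee-Seung multiplicative-update algorithm on a small dense matrix, using NumPy. It keeps both factor matrices non-negative and is designed never to increase the squared reconstruction error.

This is a sketch, not Surprise's implementation. According to its documentation, Surprise's NMF fits only the observed ratings, with regularization, using an SGD-style procedure whose step size keeps the factors non-negative.

```python
import numpy as np


def nmf_multiplicative(R, n_factors=2, n_iter=200, seed=0, eps=1e-9):
    """Approximate a dense non-negative R (users x items) as P @ Q.T with
    P, Q >= 0, using Lee-Seung multiplicative updates on ||R - P Q^T||^2."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    P = rng.random((n_users, n_factors))
    Q = rng.random((n_items, n_factors))
    for _ in range(n_iter):
        # eps avoids division by zero; updates multiply by non-negative ratios
        P *= (R @ Q) / (P @ Q.T @ Q + eps)
        Q *= (R.T @ P) / (Q @ P.T @ P + eps)
    return P, Q


# Implicit playlist-song matrix: 1.0 if the song is in the playlist, else 0.0
R = np.array([[1.0, 1.0, 0.0],
              [1.0, 1.0, 0.0],
              [0.0, 0.0, 1.0]])
P, Q = nmf_multiplicative(R)
print(np.round(P @ Q.T, 2))
```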


If you repost this article, please keep the source: https://bianchenghao.cn/38693.html