
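01 Introduction

Note: this article consists of reading notes on the book 《Kubernetes权威指南:从Docker到Kubernetes实践全接触(第5版)》.

The earlier posts in this series covered the basic concepts of Volume storage in k8s. Interested readers can refer to them:

- "k8s Tutorial (Volume series): Overview of the k8s storage mechanism" (《k8s教程(Volume篇)-k8s存储机制概述》)

- "k8s Tutorial (Volume series): PV in detail" (《k8s教程(Volume篇)-PV详解》)

- "k8s Tutorial (Volume series): PVC in detail" (《k8s教程(Volume篇)-PVC详解》)

- "k8s Tutorial (Volume series): StorageClass in detail" (《k8s教程(Volume篇)-StorageClass详解》)

Building on that material, this article walks through StorageClass and dynamic provisioning end to end: defining a StorageClass, creating the GlusterFS and Heketi services, having a user request a PVC, and creating a Pod that uses the storage.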

02 Preparation

First, install the GlusterFS client on every Node:

yum install glusterfs glusterfs-fuse

The GlusterFS management service containers must run in privileged mode, so add the following to the kube-apiserver startup parameters:

--allow-privileged=true

Label each node that will run the GlusterFS management service with storagenode=glusterfs, so that the GlusterFS containers are scheduled only onto Nodes where GlusterFS is installed:

$ kubectl label node k8s-node-1 storagenode=glusterfs

$ kubectl label node k8s-node-2 storagenode=glusterfs

$ kubectl label node k8s-node-3 storagenode=glusterfs
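When there are more than a handful of storage nodes, the label commands can be generated rather than typed. The following is a minimal sketch, assuming the node names are already known; the helper name `label_commands` is hypothetical and only builds the command strings shown above without running them.

```python
import shlex


def label_commands(nodes, key="storagenode", value="glusterfs"):
    """Return one `kubectl label node` command line per node name."""
    return [
        "kubectl label node {} {}={}".format(shlex.quote(node), key, value)
        for node in nodes
    ]


if __name__ == "__main__":
    # Node names taken from the example cluster in this article.
    for cmd in label_commands(["k8s-node-1", "k8s-node-2", "k8s-node-3"]):
        print(cmd)
```

The printed lines can be reviewed and then pasted into a shell.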

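03 Creating the GlusterFS management service container cluster

The GlusterFS management service containers are deployed as a DaemonSet, which ensures that one GlusterFS management service runs on each labeled Node.

The content of glusterfs-daemonset.yaml is as follows: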

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: glusterfs
  labels:
    glusterfs: daemonset
  annotations:
    description: GlusterFS DaemonSet
    tags: glusterfs
spec:
  # apps/v1 requires a selector that matches the Pod template labels
  selector:
    matchLabels:
      glusterfs-node: pod
  template:
    metadata:
      name: glusterfs
      labels:
        glusterfs-node: pod
    spec:
      # schedule only onto the Nodes labeled in the preparation step
      nodeSelector:
        storagenode: glusterfs
      hostNetwork: true
      containers:
      - image: gluster/gluster-centos:latest
        name: glusterfs
        volumeMounts:
        - name: glusterfs-heketi
          mountPath: "/var/lib/heketi"
        - name: glusterfs-run
          mountPath: "/run"
        - name: glusterfs-lvm
          mountPath: "/run/lvm"
        - name: glusterfs-etc
          mountPath: "/etc/glusterfs"
        - name: glusterfs-logs
          mountPath: "/var/log/glusterfs"
        - name: glusterfs-config
          mountPath: "/var/lib/glusterd"
        - name: glusterfs-dev
          mountPath: "/dev"
        - name: glusterfs-misc
          mountPath: "/var/lib/misc/glusterfsd"
        - name: glusterfs-cgroup
          mountPath: "/sys/fs/cgroup"
          readOnly: true
        - name: glusterfs-ssl
          mountPath: "/etc/ssl"
          readOnly: true
        securityContext:
          capabilities: {}
          privileged: true
        readinessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 60
          exec:
            command:
            - "/bin/bash"
            - "-c"
            - systemctl status glusterd.service
        livenessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 60
          exec:
            command:
            - "/bin/bash"
            - "-c"
            - systemctl status glusterd.service
      volumes:
      - name: glusterfs-heketi
        hostPath:
          path: "/var/lib/heketi"
      # the original gives no source for this volume; emptyDir is made explicit here
      - name: glusterfs-run
        emptyDir: {}
      - name: glusterfs-lvm
        hostPath:
          path: "/run/lvm"
      - name: glusterfs-etc
        hostPath:
          path: "/etc/glusterfs"
      - name: glusterfs-logs
        hostPath:
          path: "/var/log/glusterfs"
      - name: glusterfs-config
        hostPath:
          path: "/var/lib/glusterd"
      - name: glusterfs-dev
        hostPath:
          path: "/dev"
      - name: glusterfs-misc
        hostPath:
          path: "/var/lib/misc/glusterfsd"
      - name: glusterfs-cgroup
        hostPath:
          path: "/sys/fs/cgroup"
      - name: glusterfs-ssl
        hostPath:
          path: "/etc/ssl"

Create it:

$ kubectl create -f glusterfs-daemonset.yaml

daemonset.apps/glusterfs created

Check the Pods:

$ kubectl get po

NAME              READY   STATUS    RESTARTS   AGE
glusterfs-k2src   1/1     Running   0          1m
glusterfs-q32z2   1/1     Running   0          1m

04 创建Heketi服务

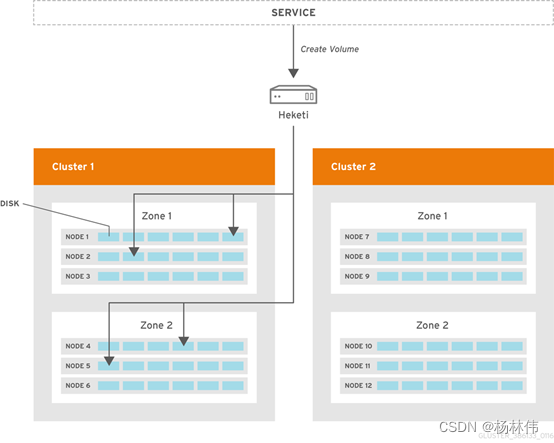

Heketi是一个 提供RESTful API管理GlusterFS卷的框架,能够在OpenStack、 Kubernetes、 OpenShift等云平台上实现动态存储资源供应,支持GlusterFS多集群管理,便于管理员对GlusterFS进行操作。

下图展示了Heketi的功能:

4.1 创建ServiceAccount及RBAC授权

在部署Heketi服务之前,先创建ServiceAccount并完成RBAC授权:

# heketi-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heketi-service-account
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: heketi
rules:
# read access to the objects Heketi looks up
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  verbs:
  - get
  - list
  - watch
# permission to exec into Pods
- apiGroups:
  - ""
  resources:
  - pods/exec
  verbs:
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: heketi
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: heketi
subjects:
- kind: ServiceAccount
  name: heketi-service-account
  namespace: default

Create them:

$ kubectl create -f heketi-rbac.yaml

serviceaccount/heketi-service-account created
role.rbac.authorization.k8s.io/heketi created
rolebinding.rbac.authorization.k8s.io/heketi created

4.2 Deploying the Heketi service

# heketi-deployment-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heketi
  labels:
    glusterfs: heketi-deployment
    deploy-heketi: heketi-deployment
  annotations:
    description: Defines how to deploy Heketi
spec:
  replicas: 1
  selector:
    matchLabels:
      name: deploy-heketi
      glusterfs: heketi-pod
  template:
    metadata:
      name: deploy-heketi
      labels:
        name: deploy-heketi
        glusterfs: heketi-pod
    spec:
      serviceAccountName: heketi-service-account
      containers:
      - image: heketi/heketi
        name: deploy-heketi
        env:
        - name: HEKETI_EXECUTOR
          value: kubernetes
        - name: HEKETI_FSTAB
          value: "/var/lib/heketi/fstab"
        - name: HEKETI_SNAPSHOT_LIMIT
          value: "14"
        - name: HEKETI_KUBE_GLUSTER_DAEMONSET
          value: "y"
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: db
          mountPath: "/var/lib/heketi"
        readinessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 3
          httpGet:
            path: "/hello"
            port: 8080
        livenessProbe:
          timeoutSeconds: 3
          initialDelaySeconds: 30
          httpGet:
            path: "/hello"
            port: 8080
      volumes:
      # Heketi's database lives here, so it is kept on the host
      - name: db
        hostPath:
          path: "/heketi-data"
---
kind: Service
apiVersion: v1
metadata:
  name: heketi
  labels:
    glusterfs: heketi-service
    deploy-heketi: support
  annotations:
    description: Exposes Heketi Service
spec:
  selector:
    name: deploy-heketi
  ports:
  - name: deploy-heketi
    port: 8080
    targetPort: 8080

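Note that Heketi's database must be persisted; storing it on a hostPath volume or on other shared storage is recommended. Create the Deployment and the Service:

$ kubectl create -f heketi-deployment-svc.yaml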

deployment.apps/heketi created

service/heketi created

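05 Managing the GlusterFS cluster through the Heketi service

Before Heketi can manage a GlusterFS cluster, it must be given the cluster's details. A topology.json configuration file defines each GlusterFS node and its devices. Heketi requires at least 3 nodes in a GlusterFS cluster.

- In topology.json, put the host name under manage in the hostnames field;

- put the IP address under storage;

- devices must be raw devices with no file system on them (several disks are allowed), so that Heketi can create the PV (Physical Volume), VG (Volume Group), and LV (Logical Volume) automatically.

The content of topology.json is as follows: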

{
  "clusters": [
    {
      "nodes": [
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-node-1"
              ],
              "storage": [
                "192.168.18.3"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-node-2"
              ],
              "storage": [
                "192.168.18.4"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": [
                "k8s-node-3"
              ],
              "storage": [
                "192.168.18.5"
              ]
            },
            "zone": 1
          },
          "devices": [
            "/dev/sdb"
          ]
        }
      ]
    }
  ]
}
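Because the file repeats the same structure for every node, it can also be generated. The sketch below is one way to do that in Python, assuming every node sits in zone 1 as in the example above; `build_topology` is a hypothetical helper, and the three-node minimum simply encodes the requirement stated in this section.

```python
import json


def build_topology(nodes, zone=1, min_nodes=3):
    """Build a Heketi topology dict from (hostname, storage_ip, devices) tuples."""
    if len(nodes) < min_nodes:
        raise ValueError(
            "need at least %d nodes, got %d" % (min_nodes, len(nodes))
        )
    entries = []
    for hostname, storage_ip, devices in nodes:
        if not devices:
            raise ValueError("node %s has no raw devices" % hostname)
        entries.append({
            "node": {
                "hostnames": {"manage": [hostname], "storage": [storage_ip]},
                "zone": zone,
            },
            "devices": list(devices),
        })
    return {"clusters": [{"nodes": entries}]}


if __name__ == "__main__":
    topology = build_topology([
        ("k8s-node-1", "192.168.18.3", ["/dev/sdb"]),
        ("k8s-node-2", "192.168.18.4", ["/dev/sdb"]),
        ("k8s-node-3", "192.168.18.5", ["/dev/sdb"]),
    ])
    print(json.dumps(topology, indent=2))
```

Redirecting the output to topology.json gives a file with the same shape as the hand-written one.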

Enter the Heketi container and use the heketi-cli command-line tool to create the GlusterFS cluster:

$ export HEKETI_CLI_SERVER=http://localhost:8080

$ heketi-cli topology load --json=topology.json

Creating cluster ... ID: f643da1cd64691c5705932a46a95d1d5
    Creating node k8s-node-1 ID: 883506b091a22bd13f10bc3d0fb51223
        Adding device /dev/sdb OK
    Creating node k8s-node-2 ID: e646879689106f82a9c4ac910a865cc8
        Adding device /dev/sdb OK
    Creating node k8s-node-3 ID: b7783484180f6a592a30baebfb97d9be
        Adding device /dev/sdb OK

With that, Heketi has created the GlusterFS cluster: a PV and a VG now exist on the /dev/sdb disk of every node in the cluster.

Heketi's topology information shows the details of each Node and Device, including total and free disk space. At this point no GlusterFS Volume or Brick has been created yet:

$ heketi-cli topology info

Cluster Id: f643da1cd64691c5705932a46a95d1d5

    Volumes:

    Nodes:

        Node Id: 883506b091a22bd13f10bc3d0fb51223
        State: online
        Cluster Id: f643da1cd64691c5705932a46a95d1d5
        Zone: 1
        Management Hostname: k8s-node-1
        Storage Hostname: 192.168.18.3
        Devices:
            Id: b474f14b0903ed03ec80d4a989f943f2
            Name: /dev/sdb
            State: online
            Size (GiB): 9
            Used (GiB): 0
            Free (GiB): 9
                Bricks:

        Node Id: b7783484180f6a592a30baebfb97d9be
        State: online
        Cluster Id: f643da1cd64691c5705932a46a95d1d5
        Zone: 1
        Management Hostname: k8s-node-3
        Storage Hostname: 192.168.18.5
        Devices:
            Id: fac3fa5ac1de3d5bde3aa68f6aa61285
            Name: /dev/sdb
            State: online
            Size (GiB): 9
            Used (GiB): 0
            Free (GiB): 9
                Bricks:

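06 Defining the StorageClass

With the preparation done, the cluster administrator can now define a StorageClass in the Kubernetes cluster.

The content of the storageclass-gluster-heketi.yaml configuration file is as follows: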

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gluster-heketi
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://172.17.2.2:8080"
  restauthenabled: "false"

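The provisioner parameter must be set to "kubernetes.io/glusterfs".

resturl must be a Heketi service address that the host running the API Server can reach. You can use the Service ClusterIP plus port, the Pod IP plus port, or map the service onto the physical host.

Create the StorageClass resource object: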

$ kubectl create -f storageclass-gluster-heketi.yaml

storageclass/gluster-heketi created
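A malformed resturl (a stray space, a missing scheme) is an easy mistake to make in this manifest. The sketch below builds the same StorageClass as a Python dict and rejects such URLs before anything is sent to the cluster. It assumes a cluster version that still ships the in-tree kubernetes.io/glusterfs provisioner; the helper name `storage_class` and the validation rule are illustrative choices, not part of Kubernetes or Heketi.

```python
import json
from urllib.parse import urlsplit


def storage_class(name, resturl):
    """Return a StorageClass manifest (as a dict) for the glusterfs provisioner."""
    parts = urlsplit(resturl)
    if (
        " " in resturl
        or parts.scheme not in ("http", "https")
        or not parts.netloc
    ):
        raise ValueError(
            "resturl must be an http(s) URL without spaces: %r" % resturl
        )
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "kubernetes.io/glusterfs",
        "parameters": {"resturl": resturl, "restauthenabled": "false"},
    }


if __name__ == "__main__":
    # Address taken from the example above; replace it with your Heketi address.
    print(json.dumps(storage_class("gluster-heketi", "http://172.17.2.2:8080"), indent=2))
```

kubectl accepts JSON manifests as well as YAML, so the printed document can be piped to `kubectl create -f -`.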

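07 Defining the PVC

Users can now define a PVC to request GlusterFS storage.

Below is the PVC's YAML definition. It requests 1 GiB of storage, sets the StorageClass to "gluster-heketi", and sets no Selector, which means dynamic provisioning is used: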

# pvc-gluster-heketi.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-gluster-heketi
spec:
  storageClassName: gluster-heketi
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

Create it:

$ kubectl create -f pvc-gluster-heketi.yaml

persistentvolumeclaim/pvc-gluster-heketi created

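As soon as the PVC is created, the system triggers Heketi, which creates the bricks in the GlusterFS cluster and then creates and starts a Volume.

The whole process can be followed in Heketi's log: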

......
[kubeexec] DEBUG 2020/04/26 00:51:30 /src/github.com/heketi/heketi/executors/kubeexec/kubeexec.go:250: Host: k8s-node-1 Pod: glusterfs-ld7nh Command: gluster --mode=script volume create vol_87b9314cb76bafacfb7e9cdc04fcaf05 replica 3 192.168.18.3:/var/lib/heketi/mounts/vg_b474f14b0903ed03ec80d4a989f943f2/brick_d08520c9ff769a0a9165f9815671f2cd/brick 192.168.18.5:/var/lib/heketi/mounts/vg_fac3fa5ac1de3d5bde3aa68f6aa61285/brick_6818dce118b8a54e9590199d44a3817b/brick 192.168.18.4:/var/lib/heketi/mounts/vg_03532e7ab723933e8643o64b3Gxeelaz/brick_9ecb8f7fde1ae937011f04401e7c6c56/brick
Result: volume create: vol_87b9314cb76bafacfb7e9cdc04fcaf05: success: please start the volume to access data
[kubeexec] DEBUG 2020/04/26 00:51:33 /src/github.com/heketi/heketi/executors/kubeexec/kubeexec.go:250: Host: k8s-node-1 Pod: glusterfs-ld7nh Command: gluster --mode=script volume start vol_87b9314cb76bafacfb7e9cdc04fcaf05
Result: volume start: vol_87b9314cb76bafacfb7e9cdc04fcaf05: success
......
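The log shows two gluster invocations: a `volume create` with `replica 3` and one brick per node, followed by a `volume start`. The sketch below reproduces only the shape of those two command lines, to make the sequence easier to read. The function name and the shortened brick paths are hypothetical, and the code mirrors what the log prints rather than Heketi's actual implementation.

```python
def gluster_commands(volume, bricks, replica=3):
    """Return the (create, start) argument lists seen in the Heketi log."""
    if not bricks or len(bricks) % replica != 0:
        raise ValueError(
            "brick count %d is not a positive multiple of replica %d"
            % (len(bricks), replica)
        )
    create = [
        "gluster", "--mode=script", "volume", "create",
        volume, "replica", str(replica),
    ] + list(bricks)
    start = ["gluster", "--mode=script", "volume", "start", volume]
    return create, start


if __name__ == "__main__":
    # Shortened, made-up brick paths; real ones contain the VG and brick IDs.
    bricks = [
        "192.168.18.3:/var/lib/heketi/mounts/vg_a/brick_1/brick",
        "192.168.18.5:/var/lib/heketi/mounts/vg_b/brick_2/brick",
        "192.168.18.4:/var/lib/heketi/mounts/vg_c/brick_3/brick",
    ]
    for argv in gluster_commands("vol_example", bricks):
        print(" ".join(argv))
```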

Check the PVC and confirm that its status is Bound:

$ kubectl get pvc

NAME                 STATUS   VOLUME                                     CAPACITY   ACCESSMODES   STORAGECLASS     AGE
pvc-gluster-heketi   Bound    pvc-783cf949-2a1a-11e7-8717-000c29eaed40   1Gi        RWX           gluster-heketi   6m
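In a script, the same check is more robust against the JSON form of the listing (`kubectl get pvc -o json`) than against the table. A minimal sketch, assuming the JSON has the usual list shape with `items[].metadata.name` and `items[].status.phase`; the function name is hypothetical.

```python
import json


def unbound_claims(pvc_list_json):
    """Return the names of PVCs whose status.phase is not "Bound"."""
    doc = json.loads(pvc_list_json)
    return [
        item["metadata"]["name"]
        for item in doc.get("items", [])
        if item.get("status", {}).get("phase") != "Bound"
    ]


if __name__ == "__main__":
    # Hand-written sample standing in for `kubectl get pvc -o json` output.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "pvc-gluster-heketi"},
             "status": {"phase": "Bound"}},
            {"metadata": {"name": "pvc-still-waiting"},
             "status": {"phase": "Pending"}},
        ]
    })
    print(unbound_claims(sample))
```

An empty result means every claim in the namespace has been bound.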

List the PVs to see the PV that the dynamic provisioning mechanism created automatically:

$ kubectl get pv

NAME                                       CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS   CLAIM                        STORAGECLASS     REASON   AGE
pvc-783cf949-2a1a-11e7-8717-000c29eaed40   1Gi        RWX           Delete          Bound    default/pvc-gluster-heketi   gluster-heketi            6m

The PV's details show that its capacity, referenced StorageClass, and other fields are set correctly, that its status is Bound, and that its reclaim policy is the default Delete. Heketi has also filled in the Gluster Endpoints and Path automatically:

$ kubectl describe pv pvc-783cf949-2a1a-11e7-8717-000c29eaed40

Name:            pvc-783cf949-2a1a-11e7-8717-000c29eaed40
Labels:          <none>
Annotations:     pv.beta.kubernetes.io/gid=2000
                 pv.kubernetes.io/bound-by-controller=yes
                 pv.kubernetes.io/provisioned-by=kubernetes.io/glusterfs
StorageClass:    gluster-heketi
Status:          Bound
Claim:           default/pvc-gluster-heketi
Reclaim Policy:  Delete
Access Modes:    RWX
Capacity:        1Gi
Message:
Source:
    Type:           Glusterfs (a Glusterfs mount on the host that shares a pod's lifetime)
    EndpointsName:  glusterfs-dynamic-pvc-gluster-heketi
    Path:           vol_87b9314cb76bafacfb7e9cdc04fcaf05
    ReadOnly:       false
Events:          <none>

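At this point a PVC that Pods can use has been created. A Pod can now mount it into a container through its Volume settings.

08 Using the PVC's storage from a Pod

Below is a Pod configuration that uses the storage defined by the PVC. It first declares a Volume of type persistentVolumeClaim, then mounts it at a directory inside the container through volumeMounts. Note that the Pod must be in the same namespace as the PVC: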

# pod-use-pvc.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-use-pvc
spec:
  containers:
  - name: pod-use-pvc
    image: busybox
    command:
    - sleep
    - "3600"
    volumeMounts:
    # must match the volume name declared under spec.volumes
    - name: gluster-volume
      mountPath: "/pv-data"
      readOnly: false
  volumes:
  - name: gluster-volume
    persistentVolumeClaim:
      claimName: pvc-gluster-heketi
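The same-namespace rule can be checked before the Pod is submitted. The sketch below takes a Pod manifest and a list of PVC manifests as Python dicts and reports the claims the Pod references that do not exist in its namespace. It assumes that a manifest without `metadata.namespace` lands in `default`, which depends on the kubectl context; `missing_claims` is a hypothetical helper.

```python
def missing_claims(pod, pvcs):
    """Return claimNames used by the Pod that have no PVC in the Pod's namespace."""
    namespace = pod["metadata"].get("namespace", "default")
    available = {
        pvc["metadata"]["name"]
        for pvc in pvcs
        if pvc["metadata"].get("namespace", "default") == namespace
    }
    wanted = [
        volume["persistentVolumeClaim"]["claimName"]
        for volume in pod["spec"].get("volumes", [])
        if "persistentVolumeClaim" in volume
    ]
    return [claim for claim in wanted if claim not in available]


if __name__ == "__main__":
    pod = {
        "metadata": {"name": "pod-use-pvc"},
        "spec": {"volumes": [{
            "name": "gluster-volume",
            "persistentVolumeClaim": {"claimName": "pvc-gluster-heketi"},
        }]},
    }
    same_ns = [{"metadata": {"name": "pvc-gluster-heketi"}}]
    other_ns = [{"metadata": {"name": "pvc-gluster-heketi", "namespace": "dev"}}]
    print(missing_claims(pod, same_ns))   # []
    print(missing_claims(pod, other_ns))  # ['pvc-gluster-heketi']
```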

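After the Pod is created, enter the pod-use-pvc container and create a few files in the /pv-data directory:

$ kubectl exec -ti pod-use-pvc -- /bin/sh

/$ cd /pv-data

/$ touch a

/$ echo "hello" > b

You can then verify that files a and b have been created in the GlusterFS cluster.

This completes a GlusterFS-based shared storage setup that uses Kubernetes' dynamic storage provisioning mode together with StorageClass and Heketi.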

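Dynamic storage provisioning solves the following problems of the static mode:

- the administrator has to prepare a large number of static PVs in advance;

- when the system picks a PV for a PVC, the PV may be larger than the requested size, so wasted capacity cannot be ruled out.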
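The second point can be made concrete with a little arithmetic. The sketch below is a deliberately simplified model: it looks only at capacity and ignores access modes, StorageClass, and selectors, and it assumes the smallest fitting PV is chosen in static mode. Both function names are hypothetical.

```python
def static_waste(pv_sizes_gib, request_gib):
    """Pick the smallest pre-created PV that fits; return (chosen, wasted) or None."""
    fitting = [size for size in pv_sizes_gib if size >= request_gib]
    if not fitting:
        return None  # no pre-created PV is large enough
    chosen = min(fitting)
    return chosen, chosen - request_gib


def dynamic_waste(request_gib):
    """A dynamically provisioned PV is created at the requested size."""
    return request_gib, 0


if __name__ == "__main__":
    print(static_waste([5, 10, 20], 1))  # (5, 4): 4 GiB sit unused
    print(dynamic_waste(1))              # (1, 0)
```

With pre-created PVs of 5, 10, and 20 GiB, a 1 GiB claim ties up a 5 GiB volume, while dynamic provisioning creates a volume of exactly the requested size.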

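For these reasons, users of Kubernetes are advised to prefer StorageClass-based dynamic storage provisioning for requesting, using, and reclaiming storage resources.

09 Closing

This article built on the earlier posts about k8s volumes and walked through the whole workflow using GlusterFS as the example. I hope it helps. Thanks for reading; that's all for this article.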


If you repost this article, please retain the source: https://bianchenghao.cn/85517.html