The original author deleted this post, so I reposted it here right away.

Topics covered in this article:

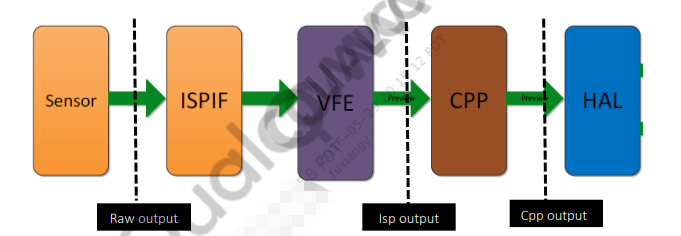

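- 1. Overall data-flow block diagram

- 2. Transfer at the hardware level

- 3. Transfer at the software level

- 4. Applications and debugging tips

A few concepts first:

- ISP (Image Signal Processing): on Qualcomm platforms this corresponds to the VFE in the Camera2 architecture. It processes the raw data stream from the sensor module (RAW frames) and produces the ISP output (YUV frames).

- Channel: a loose concept that bundles several image streams. Think of the data stream as water: to deliver water to every household you need pipes, and a Channel plays the role of the pipe.

- Stream: like a flow of water in the real world. Each stream has exactly one format; it is the interface through which captured image buffers are exchanged between the camera hardware and the application.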

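1. Overall data-flow block diagram

- Sensor module: captures the image and outputs RAW frames.

- ISPIF (ISP interface): the interface layer between the sensor module and the VFE.

- VFE (Video Front End): a hardware ISP block that processes camera frame data. Qualcomm's ISP algorithms run in this module, for example white balance, LSC (lens shading correction), gamma correction, AF, AWB, and AE.

- CPP (Camera Postprocessor): post-processes the YUV images output by the VFE; it supports flip/rotate, denoise, smooth/sharpen, and crop.

- HAL layer: sends the YUV images to the LCD for display or hands them to the app, which later stores them as JPEG.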

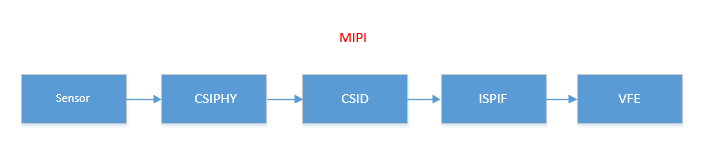

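2. Transfer at the hardware level

- The sensor captures a RAW frame and sends it over MIPI to the ISPIF (ISP interface).

- The RAW frame is then passed to the VFE, which processes the image data (white balance, color correction, gamma correction, and so on) and outputs a YUV frame.

Once the data reaches the VFE (that is, the ISP), how does it get to the software side?

- The VFE (ISP) writes the YUV image data into a buffer and raises an interrupt once the buffer has been filled.

- The interrupt handler msm_isp_process_axi_irq marks the current buffer as filled and eventually adds it to the done-buffers queue.

The call flow is: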

msm_isp_process_axi_irq ->

msm_isp_process_axi_irq_stream ->

msm_isp_process_done_buf ->

msm_isp_buf_done ->

msm_vb2_buf_done ->

vb2_buffer_done

void msm_isp_process_axi_irq_stream(···)

{

struct msm_isp_buffer *done_buf = NULL;

···

// the buffer that has just been filled

done_buf = stream_info->buf[pingpong_bit];

···

if (stream_info->pending_buf_info.is_buf_done_pending != 1) {

// process the filled buffer

msm_isp_process_done_buf(vfe_dev, stream_info,

done_buf, time_stamp, frame_id);

}

}

The chain ends in vb2_buffer_done:

void vb2_buffer_done(struct vb2_buffer *vb, enum vb2_buffer_state state)

{

// the buffer queue

struct vb2_queue *q = vb->vb2_queue;

···

/* sync buffers */

for (plane = 0; plane < vb->num_planes; ++plane)

call_void_memop(vb, finish, vb->planes[plane].mem_priv);

spin_lock_irqsave(&q->done_lock, flags);

if (state == VB2_BUF_STATE_QUEUED ||

state == VB2_BUF_STATE_REQUEUEING) {

vb->state = VB2_BUF_STATE_QUEUED;

} else {

/* Add the buffer to the done buffers list */

list_add_tail(&vb->done_entry, &q->done_list);

vb->state = state; // set the buffer state

}

atomic_dec(&q->owned_by_drv_count);

spin_unlock_irqrestore(&q->done_lock, flags);

···

}

- Add the buffer to the done-buffers list:

list_add_tail(&vb->done_entry, &q->done_list);

- Set the buffer state to VB2_BUF_STATE_DONE:

vb->state = state;

At this point the data has been written into a kernel buffer and is waiting for user space to fetch it.
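To make the producer/consumer hand-off concrete, here is a minimal sketch in plain C of a done list: the interrupt path appends a filled buffer to the tail, and a later dequeue takes the oldest one from the head. The names buf, buf_queue, buf_done and buf_dequeue are made up for this illustration and are not the videobuf2 API; the spinlock that the real code takes around the list is left out.

```c
#include <stddef.h>

/* Hypothetical buffer states, loosely mirroring the vb2 states named in the text. */
enum buf_state { BUF_QUEUED, BUF_ACTIVE, BUF_DONE };

struct buf {
    int index;
    enum buf_state state;
    struct buf *next;      /* link in the done list */
};

struct buf_queue {
    struct buf *done_head; /* oldest completed buffer */
    struct buf *done_tail; /* newest completed buffer */
};

/* Producer side: called once the hardware has filled the buffer. */
void buf_done(struct buf_queue *q, struct buf *b)
{
    b->state = BUF_DONE;
    b->next = NULL;
    if (q->done_tail)
        q->done_tail->next = b;
    else
        q->done_head = b;
    q->done_tail = b;
}

/* Consumer side: returns the oldest completed buffer, or NULL if none is ready. */
struct buf *buf_dequeue(struct buf_queue *q)
{
    struct buf *b = q->done_head;
    if (!b)
        return NULL;
    q->done_head = b->next;
    if (!q->done_head)
        q->done_tail = NULL;
    b->next = NULL;
    return b;
}
```

Buffers come back in the order they were completed, which is the property the dequeue path relies on.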

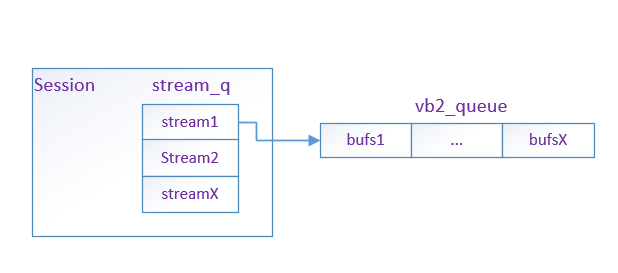

All buffers are managed by a vb2_queue.

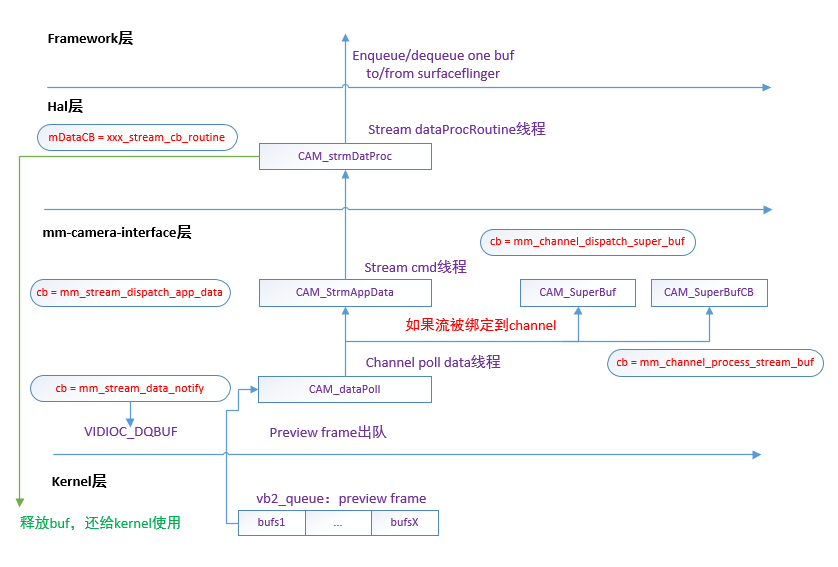

3. Transfer at the software level

The data-flow block diagram is as follows:

3.1 Kernel layer

- Setting up the vb2 queue

kernel/msm-4.9/drivers/media/platform/msm/camera_v2/camera/camera.c

static int camera_v4l2_open(struct file *filep)

{

...

/* every stream has a vb2 queue */

// initialize the vb2 queue

rc = camera_v4l2_vb2_q_init(filep);

···

}

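camera_v4l2_vb2_q_init sets up a vb2 queue for every stream.

Note that what is set up here is the queue that holds buffers, not the buffers themselves.

- Allocating the buffers

The buffers themselves are allocated in user space; Qualcomm uses user-space buffers (V4L2_MEMORY_USERPTR).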

hardware/qcom/camera/QCamera2/HAL/QCameraMem.cpp

int QCameraMemory::allocOneBuffer(QCameraMemInfo &memInfo,

unsigned int heap_id, size_t size, bool cached, bool secure_mode)

{

main_ion_fd = open("/dev/ion", O_RDONLY);

rc = ioctl(main_ion_fd, ION_IOC_ALLOC, &alloc);

}

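Why use ION buffers?

- ION is the memory allocation and management mechanism currently popular on Android. It is used most heavily in multimedia: from Camera to Display and from Mediaserver to SurfaceFlinger, memory is allocated and managed through ION.

- ION's most notable feature is that memory can be shared between user-space processes or between kernel modules, and the sharing can be zero-copy.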

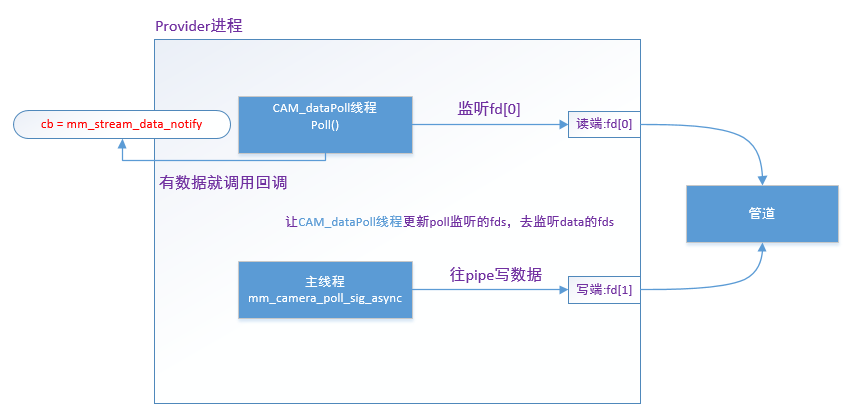

3.2 mm-camera-interface layer

The code analysis in this part is a bit convoluted; refer to the diagram while reading:

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_channel.c

int32_t mm_channel_init(mm_channel_t *my_obj,

mm_camera_channel_attr_t *attr,

mm_camera_buf_notify_t channel_cb,

void *userdata)

{

···

LOGD("Launch data poll thread in channel open");

snprintf(my_obj->poll_thread[0].threadName, THREAD_NAME_SIZE, "CAM_dataPoll");

mm_camera_poll_thread_launch(&my_obj->poll_thread[0],

MM_CAMERA_POLL_TYPE_DATA);

/* change state to stopped state */

my_obj->state = MM_CHANNEL_STATE_STOPPED;

return rc;

}

mm_camera_poll_thread_launch creates a pipe that the threads use to communicate:

- mm_camera_poll_thread_launch itself runs in the main thread

- mm_camera_poll_thread runs in the CAM_dataPoll thread

int32_t mm_camera_poll_thread_launch(mm_camera_poll_thread_t * poll_cb,

mm_camera_poll_thread_type_t poll_type)

{

//Initialize pipe fds

poll_cb->pfds[0] = -1;

poll_cb->pfds[1] = -1;

rc = pipe(poll_cb->pfds);

/* create the data_poll thread */

pthread_create(&poll_cb->pid, NULL, mm_camera_poll_thread, (void *)poll_cb);

<=================================>

static void *mm_camera_poll_thread(void *data)

{

mm_camera_poll_thread_t *poll_cb = (mm_camera_poll_thread_t *)data;

mm_camera_cmd_thread_name(poll_cb->threadName);

/* add pipe read fd into poll first */

poll_cb->poll_fds[poll_cb->num_fds++].fd = poll_cb->pfds[0];

mm_camera_poll_sig_done(poll_cb);

mm_camera_poll_set_state(poll_cb, MM_CAMERA_POLL_TASK_STATE_POLL);

return mm_camera_poll_fn(poll_cb);

}

<=================================>

return rc;

}

pthread_create starts the data_poll thread, which ends up calling mm_camera_poll_fn.

static void *mm_camera_poll_fn(mm_camera_poll_thread_t *poll_cb)

{

int rc = 0, i;

···

do {

for(i = 0; i < poll_cb->num_fds; i++) {

poll_cb->poll_fds[i].events = POLLIN|POLLRDNORM|POLLPRI;

}

// poll the watched fds

rc = poll(poll_cb->poll_fds, poll_cb->num_fds, poll_cb->timeoutms);

if(rc > 0) {

if ((poll_cb->poll_fds[0].revents & POLLIN) &&

(poll_cb->poll_fds[0].revents & POLLRDNORM)) {

// a message arrived on the pipe

/* if we have data on pipe, we only process pipe in this iteration */

LOGD("cmd received on pipe\n");

mm_camera_poll_proc_pipe(poll_cb);

} else { // event and data callbacks

for(i=1; i<poll_cb->num_fds; i++) {

/* Checking for ctrl events: event callback */

if ((poll_cb->poll_type == MM_CAMERA_POLL_TYPE_EVT) &&

(poll_cb->poll_fds[i].revents & POLLPRI)) {

LOGD("mm_camera_evt_notify\n");

if (NULL != poll_cb->poll_entries[i-1].notify_cb) {

poll_cb->poll_entries[i-1].notify_cb(poll_cb->poll_entries[i-1].user_data);

}

}

/* data callback */

if ((MM_CAMERA_POLL_TYPE_DATA == poll_cb->poll_type) &&

(poll_cb->poll_fds[i].revents & POLLIN) &&

(poll_cb->poll_fds[i].revents & POLLRDNORM)) {

LOGD("mm_stream_data_notify\n");

if (NULL != poll_cb->poll_entries[i-1].notify_cb) {

poll_cb->poll_entries[i-1].notify_cb(poll_cb->poll_entries[i-1].user_data);

}

}

}

}

} else {

/* in error case sleep 10 us and then continue. hard coded here */

usleep(10);

continue;

}

} while ((poll_cb != NULL) && (poll_cb->state == MM_CAMERA_POLL_TASK_STATE_POLL));

return NULL;

}

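- The function loops until the poll state changes, so in practice it is an endless loop.

- It watches the fds with poll. At first only the pipe's read end poll_cb->pfds[0] is watched; data and event fds are added later.

- If there is a message on the pipe, mm_camera_poll_proc_pipe is called.

- If there is an event, the registered callback mm_camera_evt_notify is called.

- If there is data, the registered callback mm_stream_data_notify is called.

The callback mm_stream_data_notify is invoked through poll_cb->poll_entries[i-1].notify_cb(poll_cb->poll_entries[i-1].user_data).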
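The index arithmetic is easy to get wrong, so here is a small sketch in plain C of the dispatch step alone. Slot 0 of the pollfd array belongs to the command pipe, which is why fds[i] pairs with entries[i - 1]. The struct poll_entry, dispatch_data_events and count_frame names are invented for this illustration, and the check is reduced to POLLIN for brevity (the real loop also tests POLLRDNORM).

```c
#include <poll.h>
#include <stddef.h>

typedef void (*notify_cb_t)(void *user_data);

/* Hypothetical, simplified version of one poll entry. */
struct poll_entry {
    int fd;
    notify_cb_t notify_cb;
    void *user_data;
};

/*
 * fds[0] is reserved for the command pipe, so fds[i] pairs with entries[i - 1].
 * Returns the number of callbacks that were invoked.
 */
int dispatch_data_events(const struct pollfd *fds, int num_fds,
                         const struct poll_entry *entries)
{
    int called = 0;
    for (int i = 1; i < num_fds; i++) {
        if ((fds[i].revents & POLLIN) && entries[i - 1].notify_cb) {
            entries[i - 1].notify_cb(entries[i - 1].user_data);
            called++;
        }
    }
    return called;
}

/* Example callback: counts notifications through user_data. */
void count_frame(void *user_data)
{
    (*(int *)user_data)++;
}
```

Only the entries whose fd reported readable data get their callback; the others are skipped until a later poll round.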

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_stream.c

int32_t mm_stream_qbuf(mm_stream_t *my_obj, mm_camera_buf_def_t *buf)

{

if (1 == my_obj->queued_buffer_count) {

uint8_t idx = mm_camera_util_get_index_by_num(

my_obj->ch_obj->cam_obj->my_num, my_obj->my_hdl);

/* Add fd to data poll thread */

LOGH("Add Poll FD %p type: %d idx = %d num = %d fd = %d",···);

rc = mm_camera_poll_thread_add_poll_fd(&my_obj->ch_obj->poll_thread[0],

idx, my_obj->my_hdl, my_obj->fd, mm_stream_data_notify,

(void*)my_obj, mm_camera_async_call);

}

}

mm_stream_qbuf calls mm_camera_poll_thread_add_poll_fd to register the fd and its callback.

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_thread.c

int32_t mm_camera_poll_thread_add_poll_fd(···)

{

int32_t rc = -1;

if (MAX_STREAM_NUM_IN_BUNDLE > idx) {

poll_cb->poll_entries[idx].fd = fd; // add the poll fd

poll_cb->poll_entries[idx].handler = handler;

poll_cb->poll_entries[idx].notify_cb = notify_cb; // register the callback

poll_cb->poll_entries[idx].user_data = userdata; // user data passed to the callback

/* send poll entries updated signal to poll thread */

if (call_type == mm_camera_sync_call ) {

rc = mm_camera_poll_sig(poll_cb, MM_CAMERA_PIPE_CMD_POLL_ENTRIES_UPDATED);

} else {

rc = mm_camera_poll_sig_async(poll_cb,MM_CAMERA_PIPE_CMD_POLL_ENTRIES_UPDATED_ASYNC );

}

} else {

LOGE("invalid handler %d (%d)", handler, idx);

}

return rc;

}

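This function does the following:

- adds the poll fd

- registers the callback

- calls mm_camera_poll_sig_async to write a cmd into the pipe, which in effect triggers an update of poll_fds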

static int32_t mm_camera_poll_sig_async(mm_camera_poll_thread_t *poll_cb,

uint32_t cmd)

{

/* send through pipe */

cmd_evt.cmd = cmd;

/* reset the statue to false */

poll_cb->status = FALSE;

/* send cmd to worker */

ssize_t len = write(poll_cb->pfds[1], &cmd_evt, sizeof(cmd_evt));

}

As soon as data is written to poll_cb->pfds[1],

poll(poll_cb->poll_fds, poll_cb->num_fds, poll_cb->timeoutms) notices the change on the fd.

When the pipe has data, mm_camera_poll_proc_pipe(poll_cb) is called:

static void mm_camera_poll_proc_pipe(mm_camera_poll_thread_t *poll_cb)

{

···

if (MM_CAMERA_POLL_TYPE_DATA == poll_cb->poll_type &&

poll_cb->num_fds <= MAX_STREAM_NUM_IN_BUNDLE) {

for(i = 0; i < MAX_STREAM_NUM_IN_BUNDLE; i++) {

if(poll_cb->poll_entries[i].fd >= 0) {

/* fd is valid, we update poll_fds to this fd */

poll_cb->poll_fds[poll_cb->num_fds].fd = poll_cb->poll_entries[i].fd;

poll_cb->poll_fds[poll_cb->num_fds].events = POLLIN|POLLRDNORM|POLLPRI;

poll_cb->num_fds++;

}

}

}

···

}

This simply updates poll_cb->poll_fds so that the data fds are polled as well; when data arrives, the registered callback is invoked.

poll(poll_cb->poll_fds, poll_cb->num_fds, poll_cb->timeoutms)
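The pipe wake-up used throughout this section can be tried in isolation. The sketch below uses only POSIX pipe, write, poll and read; struct cmd_evt, send_cmd and wait_cmd are hypothetical names for this illustration, and everything runs in one thread so that no threading library is needed.

```c
#include <poll.h>
#include <stdint.h>
#include <unistd.h>

/* Hypothetical command record; the real one carries more fields. */
struct cmd_evt {
    uint32_t cmd;
};

/* Sender side: queue a command by writing it into the pipe's write end. */
int send_cmd(int write_fd, uint32_t cmd)
{
    struct cmd_evt evt = { cmd };
    ssize_t len = write(write_fd, &evt, sizeof(evt));
    return len == (ssize_t)sizeof(evt) ? 0 : -1;
}

/*
 * Poller side: wait up to timeout_ms for a command on the pipe's read end.
 * Returns 1 and fills *out when a command arrived, 0 on timeout, -1 on error.
 */
int wait_cmd(int read_fd, int timeout_ms, struct cmd_evt *out)
{
    struct pollfd pfd = { .fd = read_fd, .events = POLLIN };
    int rc = poll(&pfd, 1, timeout_ms);
    if (rc <= 0)
        return rc;               /* 0: timeout, -1: poll failed */
    if (!(pfd.revents & POLLIN))
        return -1;
    ssize_t len = read(read_fd, out, sizeof(*out));
    return len == (ssize_t)sizeof(*out) ? 1 : -1;
}
```

Create the pair with pipe(pfds), pass pfds[1] to send_cmd and pfds[0] to wait_cmd. With an empty pipe wait_cmd times out; after one send_cmd it returns the command that was written.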

static void mm_stream_data_notify(void* user_data)

{

rc = mm_stream_read_msm_frame(my_obj, &buf_info,(uint8_t)length);

}

int32_t mm_stream_read_msm_frame(mm_stream_t * my_obj,

mm_camera_buf_info_t* buf_info,

uint8_t num_planes)

{

// call VIDIOC_DQBUF to take the buffer out of the kernel

rc = ioctl(my_obj->fd, VIDIOC_DQBUF, &vb);

if(buf_info->buf->buf_type == CAM_STREAM_BUF_TYPE_USERPTR) {

// copy the dequeued buffer data into stream_buf

mm_stream_read_user_buf(my_obj, buf_info);

}

mm_stream_handle_rcvd_buf(my_obj, &buf_info, has_cb);

}

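- VIDIOC_DQBUF is called to take the buffer out of the kernel.

- mm_stream_read_user_buf fills the dequeued buffer data into stream_buf.

- mm_stream_handle_rcvd_buf(my_obj, &buf_info, has_cb) wakes up the cmd thread.

If the stream is bundled into a channel, two callbacks are invoked; for reasons of space they are not analyzed here.

- mm_channel_dispatch_super_buf: passes the super buffer to the registered user.

- mm_channel_process_stream_buf: processes the super buffer.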

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_channel.c

int32_t mm_channel_start(mm_channel_t *my_obj)

{

/* start stream */

rc = mm_stream_fsm_fn(s_objs[i], MM_STREAM_EVT_START,NULL, NULL);

}

In mm_channel_start, the stream is started with the MM_STREAM_EVT_START event.

hardware/qcom/camera/QCamera2/stack/mm-camera-interface/src/mm_camera_stream.c

int32_t mm_stream_fsm_reg(mm_stream_t * my_obj,

mm_stream_evt_type_t evt,

void * in_val,

void * out_val)

{

switch(evt) {

case MM_STREAM_EVT_START:

{

uint8_t has_cb = 0;

uint8_t i;

/* launch cmd thread if CB is not null */

pthread_mutex_lock(&my_obj->cb_lock);

for (i = 0; i < MM_CAMERA_STREAM_BUF_CB_MAX; i++) {

if((NULL != my_obj->buf_cb[i].cb) &&

(my_obj->buf_cb[i].cb_type != MM_CAMERA_STREAM_CB_TYPE_SYNC)) {

has_cb = 1;

break;

}

}

pthread_mutex_unlock(&my_obj->cb_lock);

pthread_mutex_lock(&my_obj->cmd_lock);

if (has_cb) {

snprintf(my_obj->cmd_thread.threadName, THREAD_NAME_SIZE, "CAM_StrmAppData");

// create the CAM_StrmAppData thread

mm_camera_cmd_thread_launch(&my_obj->cmd_thread,

mm_stream_dispatch_app_data,

(void *)my_obj);

}

pthread_mutex_unlock(&my_obj->cmd_lock);

// set the stream state

my_obj->state = MM_STREAM_STATE_ACTIVE;

// start the stream

rc = mm_stream_streamon(my_obj);

}

break;

}

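- Check whether the stream has a callback.

- If it does, create the CAM_StrmAppData thread, whose handler is mm_stream_dispatch_app_data.

What does mm_stream_dispatch_app_data do?

- It calls the callback that the HAL layer registered for the stream: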

/* callback */

my_obj->buf_cb[i].cb(&super_buf,my_obj->buf_cb[i].user_data);

- It releases the buffer: mm_stream_buf_done(my_obj, buf_info->buf);

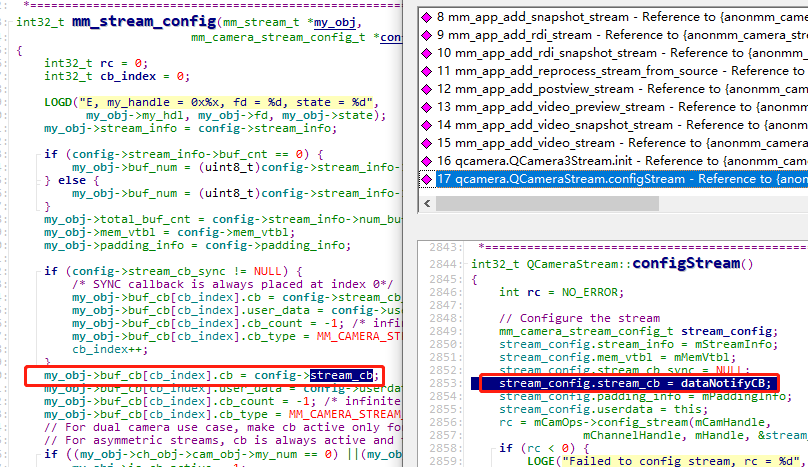

At this point you may wonder what my_obj->buf_cb[i].cb actually refers to.

void QCameraStream::dataNotifyCB(mm_camera_super_buf_t *recvd_frame,

void *userdata)

{

LOGD("\n");

QCameraStream* stream = (QCameraStream *)userdata;

···

*frame = *recvd_frame;

stream->processDataNotify(frame);

return;

}

int32_t QCameraStream::processDataNotify(mm_camera_super_buf_t *frame)

{

// enqueue the frame

if (mDataQ.enqueue((void *)frame)) {

// send cmd = CAMERA_CMD_TYPE_DO_NEXT_JOB

return mProcTh.sendCmd(CAMERA_CMD_TYPE_DO_NEXT_JOB, FALSE, FALSE);

}

}

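- The frame is enqueued.

- A cmd = CAMERA_CMD_TYPE_DO_NEXT_JOB is sent, which wakes up the cmd thread.

This raises another question: where is the cmd thread created, and how does it handle the command?

Let's look at the HAL layer next.

3.3 HAL layer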

hardware/qcom/camera/QCamera2/HAL/QCameraStream.cpp

int32_t QCameraStream::start()

{

int32_t rc = 0;

mDataQ.init();

// create the CAM_strmDatProc thread, whose entry function is dataProcRoutine

rc = mProcTh.launch(dataProcRoutine, this);

<=====================>

int32_t QCameraCmdThread::launch(···)

{

/* launch the thread */

pthread_create(&cmd_pid,

NULL,

start_routine,

user_data);

return NO_ERROR;

}

<=====================>

···

return rc;

}

- Creates the CAM_strmDatProc thread, whose entry function is dataProcRoutine.

- The thread's job is to invoke the callbacks registered by the HAL layer.

void *QCameraStream::dataProcRoutine(void *data)

{

int running = 1;

int ret;

QCameraStream *pme = (QCameraStream *)data;

QCameraCmdThread *cmdThread = &pme->mProcTh;

cmdThread->setName("CAM_strmDatProc");

do {

do {

// block until woken up

ret = cam_sem_wait(&cmdThread->cmd_sem);

if (ret != 0 && errno != EINVAL) {

LOGE("cam_sem_wait error (%s)",

strerror(errno));

return NULL;

}

} while (ret != 0);

// we got notified about new cmd avail in cmd queue

camera_cmd_type_t cmd = cmdThread->getCmd();

switch (cmd) {

case CAMERA_CMD_TYPE_DO_NEXT_JOB:

{

LOGD("Do next job");

mm_camera_super_buf_t *frame =

(mm_camera_super_buf_t *)pme->mDataQ.dequeue();

if (NULL != frame) {

if (pme->mDataCB != NULL) {

pme->mDataCB(frame, pme, pme->mUserData);

} else {

// no data cb routine, return buf here

pme->bufDone(frame);

free(frame);

}

}

}

break;

···

}

} while (running);

LOGH("X");

return NULL;

}

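- The thread blocks in cam_sem_wait(&cmdThread->cmd_sem) and only continues once it is woken up.

- It fetches the cmd: cmdThread->getCmd()

- For cmd = CAMERA_CMD_TYPE_DO_NEXT_JOB it dequeues a frame: mm_camera_super_buf_t *frame = (mm_camera_super_buf_t *)pme->mDataQ.dequeue()

- If a data callback is registered it calls pme->mDataCB; otherwise it returns the buffer with pme->bufDone(frame).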
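Stripped of the thread and the semaphore, the "do next job" step is a queue pop followed by a callback-or-return decision. The sketch below shows only that logic in plain C; struct stream, stream_enqueue, stream_do_next_job and count_delivered are hypothetical names for this illustration, and the fixed-size ring buffer stands in for the real frame queue.

```c
#include <stddef.h>

#define MAX_FRAMES 8

typedef void (*data_cb_t)(void *frame, void *user_data);

/* Hypothetical stand-in for a stream with a frame queue and an optional callback. */
struct stream {
    void *queue[MAX_FRAMES];   /* ring buffer of pending frames */
    size_t head, count;
    data_cb_t data_cb;         /* NULL when nobody registered a callback */
    void *user_data;
    int returned;              /* frames handed straight back to the driver */
};

/* Producer: what the data-notify path does. Returns 0 on success, -1 if full. */
int stream_enqueue(struct stream *s, void *frame)
{
    if (s->count == MAX_FRAMES)
        return -1;
    s->queue[(s->head + s->count) % MAX_FRAMES] = frame;
    s->count++;
    return 0;
}

/* Consumer: one "do next job" step. Returns 1 if a frame was handled, 0 if empty. */
int stream_do_next_job(struct stream *s)
{
    if (s->count == 0)
        return 0;
    void *frame = s->queue[s->head];
    s->head = (s->head + 1) % MAX_FRAMES;
    s->count--;
    if (s->data_cb)
        s->data_cb(frame, s->user_data);
    else
        s->returned++;         /* no callback: return the buffer right away */
    return 1;
}

/* Example callback: counts delivered frames through user_data. */
void count_delivered(void *frame, void *user_data)
{
    (void)frame;
    (*(int *)user_data)++;
}
```

In the real thread each successful sendCmd corresponds to one semaphore post, so one wake-up handles exactly one queued frame; here the caller simply invokes stream_do_next_job once per enqueued frame.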

int32_t QCameraStream::processDataNotify(mm_camera_super_buf_t *frame)

{

// enqueue the frame

if (mDataQ.enqueue((void *)frame)) {

// send cmd = CAMERA_CMD_TYPE_DO_NEXT_JOB

return mProcTh.sendCmd(CAMERA_CMD_TYPE_DO_NEXT_JOB, FALSE, FALSE);

}

}

hardware/qcom/camera/QCamera2/HAL/QCamera2HWICallbacks.cpp

Taking the metadata stream as an example, the call flow is:

addStreamToChannel(pChannel, CAM_STREAM_TYPE_METADATA,metadata_stream_cb_routine, this);

pChannel->addStream

pStream->init

In the init function:

mDataCB = stream_cb;

mUserData = userdata;

- metadata stream cb = metadata_stream_cb_routine

- preview stream cb = preview_stream_cb_routine and synchronous_stream_cb_routine

- ZSL stream cb = zsl_channel_cb

- snapshot stream cb = capture_channel_cb_routine

- video stream cb = video_stream_cb_routine

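4. Applications and debugging tips

See the earlier post in this series:

[Camera Series] HAL1: Implementing a Third-Party Algorithm and Integrating It into the Android System (【Camera专题】HAL1- 实现第三方算法并集成到Android系统)

Here is a problem I ran into at work:

Symptom: during a video call, a single frame would occasionally flash green.

Analysis: logs alone cannot solve this one, because no error is reported.

Approach: reproduce the problem, dump the images at the ISP or at the HAL layer to find out which layer introduces it, then analyze that layer specifically.

In our case the images dumped at the HAL layer were fine, so the problem was not in the lower layers but in the application layer. After talking to the app team, it turned out that they were processing the images with OpenGL, and that processing caused the problem.

- Image types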

#define QCAMERA_DUMP_FRM_PREVIEW 1

#define QCAMERA_DUMP_FRM_VIDEO (1<<1)

#define QCAMERA_DUMP_FRM_INPUT_JPEG (1<<2)

#define QCAMERA_DUMP_FRM_THUMBNAIL (1<<3)

#define QCAMERA_DUMP_FRM_RAW (1<<4)

#define QCAMERA_DUMP_FRM_OUTPUT_JPEG (1<<5)

#define QCAMERA_DUMP_FRM_INPUT_REPROCESS (1<<6)
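These flags are bit masks, so several frame types can be requested at once by OR-ing them together. The short C sketch below copies three of the defines and adds a hypothetical helper, dump_enabled, to check which bits a given property value turns on; it is an illustration of the arithmetic, not code from the HAL.

```c
#include <stdint.h>

/* Flag values copied from the defines above. */
#define QCAMERA_DUMP_FRM_PREVIEW 1
#define QCAMERA_DUMP_FRM_VIDEO   (1 << 1)
#define QCAMERA_DUMP_FRM_RAW     (1 << 4)

/* Returns non-zero when `flag` is set in the property value. */
int dump_enabled(uint32_t prop_value, uint32_t flag)
{
    return (prop_value & flag) != 0;
}
```

For example, QCAMERA_DUMP_FRM_PREVIEW | QCAMERA_DUMP_FRM_VIDEO is 3, QCAMERA_DUMP_FRM_RAW alone is 16, and the value 524304 (0x80010) also has the RAW bit set.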

- 1. Snapshot RAW

CAM_FORMAT_BAYER_MIPI_RAW_10BPP_GBRG,

CAM_FORMAT_BAYER_MIPI_RAW_10BPP_GRBG, //29

CAM_FORMAT_BAYER_MIPI_RAW_10BPP_RGGB, //30

CAM_FORMAT_BAYER_MIPI_RAW_10BPP_BGGR,

adb root

adb shell setprop persist.vendor.camera.raw_yuv 1

adb shell setprop persist.vendor.camera.snapshot_raw 1

adb shell setprop persist.vendor.camera.dumpimg 16

adb shell setprop persist.vendor.camera.raw.format 29

adb reboot

- 2. Preview RAW

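524304 = 0x80010: the low byte 0x10 is the QCAMERA_DUMP_FRM_RAW bit (RAW frames), and the upper bits ask for a burst of consecutive RAW frames. The original note gives the count as 10; how the count is encoded depends on the HAL version, so verify it against the dump code in your tree.

QCAMERA_DUMP_FRM_RAW: 0x10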

adb root

adb shell setprop persist.vendor.camera.raw_yuv 1

adb shell setprop persist.vendor.camera.preview_raw 1

adb shell setprop persist.vendor.camera.dumpimg 524304

- 3. YUV output of the ISP/VFE

Preview: adb shell setprop persist.vendor.camera.isp.dump 2

Analysis: adb shell setprop persist.vendor.camera.isp.dump 2048

Snapshot: adb shell setprop persist.vendor.camera.isp.dump 8

Video: adb shell setprop persist.vendor.camera.isp.dump 16

adb shell setprop persist.vendor.camera.isp.dump_cnt 100 # number of YUV frames to dump

- 4. YUV output of the HAL

- persist.vendor.camera.dumpimg = 1 means preview

- persist.vendor.camera.dumpimg = 2 means video

- persist.vendor.camera.dumpimg = 3 means preview and video

adb root

adb shell chmod 777 /data/vendor/camera

adb shell setprop persist.vendor.camera.dumpimg 1

adb shell setprop persist.vendor.camera.dumpimg_num 100 # number of frames to dump

- 5. Dump the video before and after H.264 encoding

adb root

adb remount

adb shell chmod 777 /data/misc/media

adb shell setenforce 0

adb shell setprop vidc.enc.log.in 1

adb shell setprop vidc.enc.log.out 1

- Stay hungry,Stay Foolish!

If you repost this article, please keep the source: https://bianchenghao.cn/83226.html